Depending on your project, you should consider different C# web scraping libraries. You'll find options best suited for price tracking, lead generation, sentiment monitoring, financial data aggregation, etc.

You should also weigh several metrics when making your pick. To make the choice easier, we reviewed and compared the seven best C# web scraping libraries.

We'll also go through code examples to help you understand how each of them works.

Let's dive in!

What Are the Best C# Web Scraping Libraries?

We tested different libraries for web scraping, and the best ones to use in 2024 are as follows:

- ZenRows.

- Puppeteer-Sharp.

- Selenium.

- HTML Agility Pack.

- ScrapySharp.

- IronWebScraper.

- HttpClient.

We compared the C# libraries based on the core features that make web scraping smooth: proxy configuration, dynamic content support, documentation, anti-bot bypass, automatic HTML parsing, and infrastructure scalability.

Here is a quick overview.

| Feature | ZenRows | Puppeteer-Sharp | Selenium | HTML Agility Pack | ScrapySharp | IronWebScraper | HttpClient |
|---|---|---|---|---|---|---|---|
| Proxy Configuration | AUTO | MANUAL | MANUAL | MANUAL | MANUAL | MANUAL | MANUAL |
| Dynamic Content | ✅ | ✅ | ✅ | - | - | - | - |
| Documentation | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |
| Anti-Bot Bypass | ✅ | - | - | - | - | - | - |
| Auto-Parse HTML | ✅ | - | - | - | - | - | - |
| Infrastructure Scalability | AUTO | MANUAL | MANUAL | MANUAL | MANUAL | MANUAL | MANUAL |

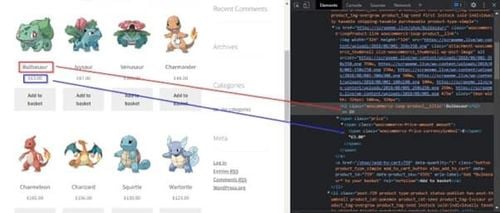

Let's go through these C# scraping libraries one by one, looking at the features they come with and how they extract data from a web page. We'll use the ScrapeMe demo store as the target site.

1. ZenRows Web Scraper API

ZenRows API is the best C# web scraping library on this list. It's an API that handles anti-bot bypass, from rotating proxies and headless browsers to CAPTCHAs. Additionally, it supports auto-parsing (i.e., HTML-to-JSON conversion) for many popular sites and can extract dynamic content.

The only downside is that ZenRows has no official C# NuGet package for its Web Scraping API. Therefore, you need an additional HTTP client or package to send requests to it.

How to Scrape a Web Page in C# with ZenRows?

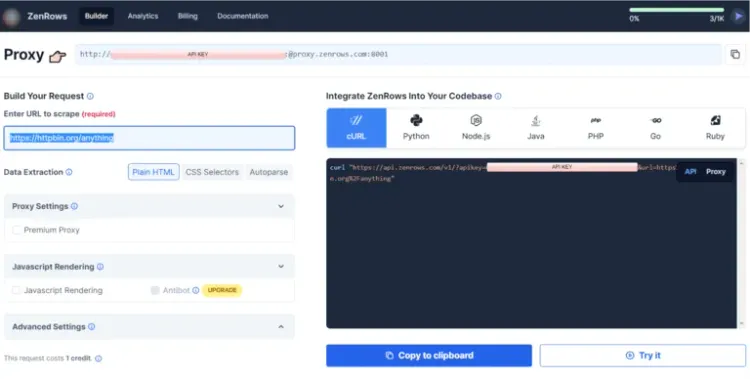

Create a free account on ZenRows to get your API key. You'll get to the following Request Builder screen.

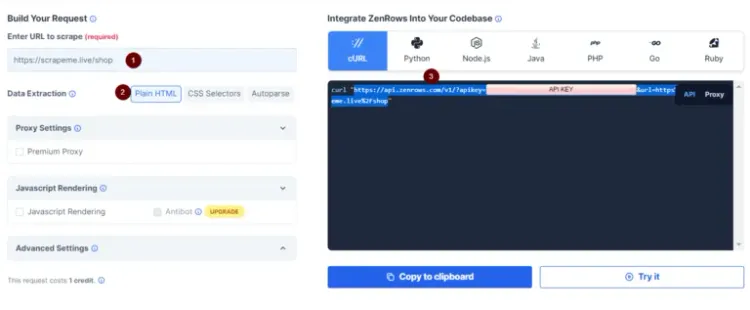

After you add the URL you want to scrape, the Request Builder generates an API URL. Sending an HTTP GET request to that API URL returns the target page's plain HTML, which you can then parse with any HTML parser.

If you used https://scrapeme.live/shop as the URL to scrape, the ZenRows API URL should look like this.

https://api.zenrows.com/v1/?apikey=<YOUR_ZENROWS_API_KEY>&url=https%3A%2F%2Fscrapeme.live%2Fshop

Remark: the API Key is a personal ID assigned to you by ZenRows and shouldn't be shared with anyone.
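To keep the key out of your source code, you can build the API URL at runtime instead of pasting it in. The sketch below is a minimal example that assumes the key is stored in an environment variable named ZENROWS_API_KEY (a name chosen here for illustration, not something ZenRows requires). It uses Uri.EscapeDataString() to percent-encode the target URL, which yields the same encoded form shown above.

```csharp
using System;

public static class ZenRowsUrl
{
    // Base endpoint taken from the Request Builder URL above.
    private const string Endpoint = "https://api.zenrows.com/v1/";

    // Builds the API URL for a target page. The target URL is percent-encoded
    // because it is passed as the value of the "url" query parameter.
    public static string Build(string apiKey, string targetUrl)
    {
        return $"{Endpoint}?apikey={Uri.EscapeDataString(apiKey)}&url={Uri.EscapeDataString(targetUrl)}";
    }

    // Hypothetical helper: reads the key from ZENROWS_API_KEY (an assumed
    // variable name) so the key never appears in the code itself.
    public static string BuildFromEnvironment(string targetUrl)
    {
        var apiKey = Environment.GetEnvironmentVariable("ZENROWS_API_KEY")
            ?? throw new InvalidOperationException("Set the ZENROWS_API_KEY environment variable.");
        return Build(apiKey, targetUrl);
    }
}
```

With the variable set, ZenRowsUrl.BuildFromEnvironment("https://scrapeme.live/shop") returns the same kind of API URL as the Request Builder, with your key filled in.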

Since ZenRows doesn't provide a NuGet package for C#, you'll send an HTTP GET request to the API URL yourself. ZenRows scrapes the target URL on your behalf and returns the plain HTML in the response.

To do this, create a C# Console Application in Visual Studio and add the following code to the program's Main() function.

```csharp
var url = "https://api.zenrows.com/v1/?apikey=<YOUR_ZENROWS_API_KEY>&url=https%3A%2F%2Fscrapeme.live%2Fshop";

// create a GET request that targets the ZenRows API URL
var request = WebRequest.Create(url);
request.Method = "GET";
```

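This code creates a WebRequest object that targets the ZenRows API URL. You can then send the request and read the reply, which GetResponse() returns as a WebResponse object. Note that WebRequest is marked obsolete in .NET 6 and later; the HttpClient class covered in section 7 is the recommended replacement.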

```csharp
// send the request and expose the response body (plain HTML) as a stream
using var webResponse = request.GetResponse();
using var webStream = webResponse.GetResponseStream();
```

GetResponse() sends the request and returns the response, and GetResponseStream() exposes the response body, the page's plain HTML, as a stream. Now that you have the target page's HTML in a stream, you can use any HTML parser to extract the desired elements.

Let's use HTML Agility Pack to extract the products' names and prices.

Install the HtmlAgilityPack NuGet package and add a ParseHtml() method to the Program class to parse and print the product names and prices.

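```csharp
private static void ParseHtml(Stream html)
{
    var doc = new HtmlDocument();
    doc.Load(html);

    // product names: <h2> elements inside product links
    HtmlNodeCollection names = doc.DocumentNode.SelectNodes("//a/h2");
    // product prices: <span> elements inside the same links
    HtmlNodeCollection prices = doc.DocumentNode.SelectNodes("//div/main/ul/li/a/span");

    // stop at the shorter collection so a missing price can't throw
    for (int i = 0; i < names.Count && i < prices.Count; i++)
    {
        Console.WriteLine("Name: {0}, Price: {1}", names[i].InnerText, prices[i].InnerText);
    }
}
```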

The ParseHtml() function creates an HtmlDocument instance, doc, and loads the stream into it. It then calls SelectNodes() to collect all the elements that hold product names and prices.

SelectNodes() takes an XPath expression and returns the matching elements of the HTML document. The XPath "//a/h2" selects every <h2> element that is a direct child of an anchor (<a>) tag; on ScrapeMe, these hold the product names.

Similarly, the XPath "//div/main/ul/li/a/span" selects the <span> elements containing the product prices. The for loop prints the InnerText of each pair of parsed elements. Finally, call ParseHtml() from the Main() function, passing the webStream stream as the argument.

```csharp
ParseHtml(webStream);
```

Congratulations! 👏

You have just scraped a web page with the ZenRows API from C# without being blocked by anti-bot measures.

Here's what the complete code looks like.

```csharp
using HtmlAgilityPack;
using System;
using System.IO;
using System.Net;

namespace ZenRowsDemo
{
    class Program
    {
        static void Main(string[] args)
        {
            var url = "https://api.zenrows.com/v1/?apikey=<YOUR_ZENROWS_API_KEY>&url=https%3A%2F%2Fscrapeme.live%2Fshop";

            // request the target page through the ZenRows API
            var request = WebRequest.Create(url);
            request.Method = "GET";

            using var webResponse = request.GetResponse();
            using var webStream = webResponse.GetResponseStream();

            ParseHtml(webStream);
        }

        private static void ParseHtml(Stream html)
        {
            var doc = new HtmlDocument();
            doc.Load(html);

            HtmlNodeCollection names = doc.DocumentNode.SelectNodes("//a/h2");
            HtmlNodeCollection prices = doc.DocumentNode.SelectNodes("//div/main/ul/li/a/span");

            for (int i = 0; i < names.Count && i < prices.Count; i++)
            {
                Console.WriteLine("Name: {0}, Price: {1}", names[i].InnerText, prices[i].InnerText);
            }
        }
    }
}
```
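To see exactly what the "//a/h2" and "//div/main/ul/li/a/span" expressions match, you can run them against a small hand-written snippet. The markup below is a simplified, assumed version of a product list, not the live ScrapeMe page. The sketch uses .NET's built-in System.Xml XPath support (which requires well-formed XML) rather than HTML Agility Pack; HTML Agility Pack's SelectNodes() accepts the same XPath syntax.

```csharp
using System.Collections.Generic;
using System.Xml;

public static class XPathDemo
{
    // Simplified, hand-written markup modeled on a product grid (an assumption,
    // not the real ScrapeMe HTML). It must be well-formed XML for XmlDocument.
    public const string SampleMarkup =
        "<html><body><div><main><ul>" +
        "<li><a href='/p/1'><h2>Bulbasaur</h2><span>£63.00</span></a></li>" +
        "<li><a href='/p/2'><h2>Ivysaur</h2><span>£87.00</span></a></li>" +
        "</ul></main></div>" +
        "<h2>Not a product</h2>" + // not a child of <a>, so //a/h2 skips it
        "</body></html>";

    // Returns the text of every node that matches the XPath expression.
    public static List<string> Select(string markup, string xpath)
    {
        var doc = new XmlDocument();
        doc.LoadXml(markup);

        var results = new List<string>();
        foreach (XmlNode node in doc.SelectNodes(xpath))
        {
            results.Add(node.InnerText);
        }
        return results;
    }
}
```

Running XPathDemo.Select(XPathDemo.SampleMarkup, "//a/h2") returns only the two names nested in links, while the stray <h2> outside any <a> is ignored.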

2. Puppeteer-Sharp

Puppeteer-Sharp is a C# web scraping library that crawls web pages using a headless browser. Its benefits include scraping dynamic web pages, headless browser support, and generating PDFs and screenshots of web pages.

There are some downsides: it requires manual proxy integration, doesn't bypass anti-bot protections, and leaves you to monitor your infrastructure's scalability needs yourself.

Let's take a look at how to crawl a web page using this library.

How to Scrape a Web Page in C# with Puppeteer-Sharp?

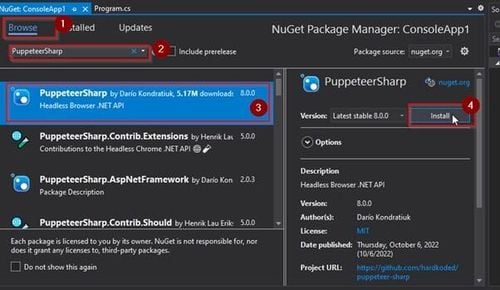

Create a C# Console Application in Visual Studio (or your preferred IDE), then install the PuppeteerSharp package through the NuGet Package Manager.

Repeat the same procedure to install the AngleSharp library, which is handy for parsing the HTML retrieved with the PuppeteerSharp package.

Once that's done, let's go ahead and do some C# web scraping.

The first step is to include the required library files in your Program.cs file.

```csharp
using PuppeteerSharp;
using AngleSharp;
using AngleSharp.Dom;
```

Once that's done, launch a headless Chrome instance using Puppeteer and fetch content from the same page we've just used.

```csharp
// download the browser executable
await new BrowserFetcher().DownloadAsync();

var browser = await Puppeteer.LaunchAsync(new LaunchOptions
{
    Headless = true
});

var page = await browser.NewPageAsync();
await page.GoToAsync("https://scrapeme.live/shop/");
var content = await page.GetContentAsync();
```

The code launches a headless Chrome browser instance.

The GoToAsync() function navigates the browser to the given URL. The GetContentAsync() method retrieves the current page's raw HTML content.

To extract the product names and prices, the following code runs JavaScript inside the page and returns the results to C# as string arrays.

```csharp
var jsSelectAllNames = @"Array.from(document.querySelectorAll('h2')).map(a => a.innerText);";
var jsSelectAllPrices = @"Array.from(document.querySelectorAll('span[class=""price""]')).map(a => a.innerText);";

var names = await page.EvaluateExpressionAsync<string[]>(jsSelectAllNames);
var prices = await page.EvaluateExpressionAsync<string[]>(jsSelectAllPrices);

// stop at the shorter array so a missing price can't throw
for (int i = 0; i < names.Length && i < prices.Length; i++)
{
    Console.WriteLine("Name: {0}, Price: {1}", names[i], prices[i]);
}
```

querySelectorAll() selects every element that matches the given CSS selector, Array.from() turns the resulting NodeList into an array, and map() transforms each element into its innerText.

EvaluateExpressionAsync<string[]>() runs each expression in the browser page and returns the result as a C# string array, giving you one array of product names and one of prices.

And there you have it: a web page successfully scraped with the Puppeteer-Sharp C# library.

Here's what the full code looks like.

```csharp
using PuppeteerSharp;
using System;
using System.Threading.Tasks;
using AngleSharp;
using AngleSharp.Dom;
using System.Linq;
using System.Collections.Generic;

namespace PuppeteerDemo
{
    class Program
    {
        static async Task Main(string[] args)
        {
            // download the browser executable
            await new BrowserFetcher().DownloadAsync();

            var browser = await Puppeteer.LaunchAsync(new LaunchOptions
            {
                Headless = true
            });

            var page = await browser.NewPageAsync();
            await page.GoToAsync("https://scrapeme.live/shop/");
            var content = await page.GetContentAsync();

            List<Products> products = new List<Products>();

            var jsSelectAllNames = @"Array.from(document.querySelectorAll('h2')).map(a => a.innerText);";
            var jsSelectAllPrices = @"Array.from(document.querySelectorAll('span[class=""price""]')).map(a => a.innerText);";

            var names = await page.EvaluateExpressionAsync<string[]>(jsSelectAllNames);
            var prices = await page.EvaluateExpressionAsync<string[]>(jsSelectAllPrices);

            for (int i = 0; i < names.Length && i < prices.Length; i++)
            {
                products.Add(new Products { Name = names[i], Price = prices[i] });
            }

            // close the browser process once scraping is done
            await browser.CloseAsync();

            foreach (var p in products)
            {
                Console.WriteLine("Name: {0}, Price: {1}", p.Name, p.Price);
            }
        }
    }

    class Products
    {
        public string Name { get; set; }
        public string Price { get; set; }
    }
}
```
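The example above imports AngleSharp but extracts the data with JavaScript running in the browser. If you'd rather parse the content string returned by GetContentAsync() in C#, a minimal AngleSharp sketch could look like this. It assumes the same h2 and span.price selectors used above and relies on AngleSharp's HtmlParser; the ParseProducts() helper name is hypothetical.

```csharp
using System.Collections.Generic;
using System.Linq;
using AngleSharp.Dom;
using AngleSharp.Html.Parser;

public static class AngleSharpDemo
{
    // Hypothetical helper: parses raw HTML (e.g., the string from
    // page.GetContentAsync()) and pairs each product name with its price.
    public static List<(string Name, string Price)> ParseProducts(string html)
    {
        var parser = new HtmlParser();
        var document = parser.ParseDocument(html);

        // CSS selectors assumed from the JavaScript version above
        var names = document.QuerySelectorAll("h2").Select(e => e.TextContent.Trim());
        var prices = document.QuerySelectorAll("span.price").Select(e => e.TextContent.Trim());

        // Zip pairs items by position and stops at the shorter sequence
        return names.Zip(prices, (n, p) => (n, p)).ToList();
    }
}
```

In the full example, you could call AngleSharpDemo.ParseProducts(content) after GetContentAsync() instead of the two EvaluateExpressionAsync() calls. Parsing in C# means only one round trip to the browser, at the cost of depending on the HTML as it exists when GetContentAsync() runs.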

3. Selenium WebDriver

Selenium is one of the most widely used tools for crawling large amounts of data, like photos, links, and text. It's ideal for dynamic web pages because it runs a real browser that renders content produced by JavaScript.

The downside of the Selenium C# scraping library is that you have to integrate proxies and handle anti-bot mechanisms manually.

How to Scrape a Web Page in C# with Selenium?

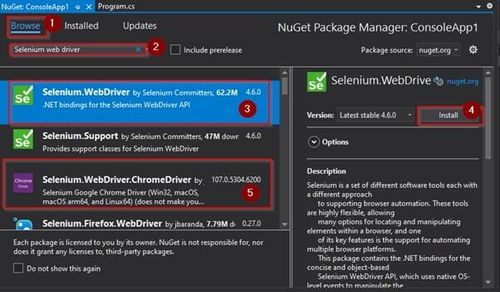

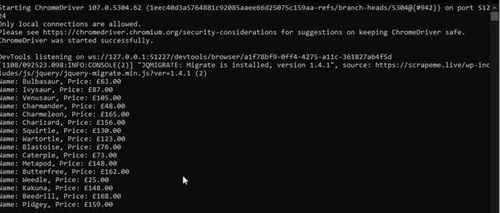

Building a web crawler with Selenium requires two external packages: Selenium.WebDriver and Selenium.WebDriver.ChromeDriver. You can install both using the NuGet Package Manager.

Once you have those installed, create a ChromeOptions object with the arguments you need (here, headless mode and disabled GPU acceleration) and launch ChromeDriver with it.

```csharp
string fullUrl = "https://scrapeme.live/shop/";

// run Chrome without a visible window and without GPU acceleration
var options = new ChromeOptions();
options.AddArguments(new List<string>() { "headless", "disable-gpu" });

var browser = new ChromeDriver(options);
browser.Navigate().GoToUrl(fullUrl);
```

After the browser navigates to the desired URL, locate the elements with the FindElements() method. Each result is an IWebElement, so read its Text property to get the visible text.

```csharp
var names = browser.FindElements(By.TagName("h2"));
var prices = browser.FindElements(By.CssSelector("span.price"));

for (int i = 0; i < names.Count && i < prices.Count; i++)
{
    // IWebElement.Text returns the element's visible text
    Console.WriteLine("Name: {0}, Price: {1}", names[i].Text, prices[i].Text);
}
```

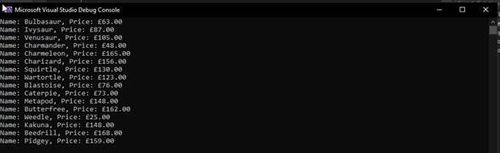

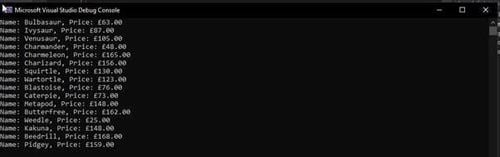

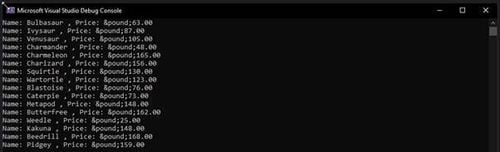

And that's it! Here's what your output will look like.

If you got lost along the way, here's the complete code.

```csharp
using OpenQA.Selenium.Chrome;
using System;
using OpenQA.Selenium;
using System.Collections.Generic;

namespace SeleniumDemo
{
    class Program
    {
        static void Main(string[] args)
        {
            string fullUrl = "https://scrapeme.live/shop/";

            var options = new ChromeOptions();
            options.AddArguments(new List<string>() { "headless", "disable-gpu" });

            var browser = new ChromeDriver(options);
            List<Products> products = new List<Products>();

            try
            {
                browser.Navigate().GoToUrl(fullUrl);

                var names = browser.FindElements(By.TagName("h2"));
                var prices = browser.FindElements(By.CssSelector("span.price"));

                for (int i = 0; i < names.Count && i < prices.Count; i++)
                {
                    products.Add(new Products { Name = names[i].Text, Price = prices[i].Text });
                }
            }
            finally
            {
                // close Chrome and end the WebDriver session, even after an error
                browser.Quit();
            }

            foreach (var p in products)
            {
                Console.WriteLine("Name: {0}, Price: {1}", p.Name, p.Price);
            }
        }
    }

    class Products
    {
        public string Name { get; set; }
        public string Price { get; set; }
    }
}
```
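Because Selenium drives a real browser, FindElements() may run before JavaScript-rendered elements appear on slower pages. A common remedy is an explicit wait. The sketch below assumes Selenium 4, where WebDriverWait is available in the OpenQA.Selenium.Support.UI namespace (older versions shipped it in the separate Selenium.Support package); the helper names and the timeout you pass are illustrative choices.

```csharp
using System;
using System.Collections.ObjectModel;
using OpenQA.Selenium;
using OpenQA.Selenium.Chrome;
using OpenQA.Selenium.Support.UI;

public static class SeleniumWaitDemo
{
    // Same headless options as the example above.
    public static ChromeOptions CreateHeadlessOptions()
    {
        var options = new ChromeOptions();
        options.AddArguments("headless", "disable-gpu");
        return options;
    }

    // Polls until at least one price element exists, then returns all of them.
    // Until() keeps retrying while the lambda returns null and throws
    // WebDriverTimeoutException if the timeout expires first.
    public static ReadOnlyCollection<IWebElement> WaitForPrices(IWebDriver driver, TimeSpan timeout)
    {
        var wait = new WebDriverWait(driver, timeout);
        return wait.Until(d =>
        {
            var prices = d.FindElements(By.CssSelector("span.price"));
            return prices.Count > 0 ? prices : null;
        });
    }
}
```

After GoToUrl(), you could call SeleniumWaitDemo.WaitForPrices(browser, TimeSpan.FromSeconds(10)) in place of the direct FindElements() call for prices, so the loop only runs once the product grid has rendered.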

4. HTML Agility Pack

HTML Agility Pack is the most downloaded C# HTML parsing library. It can download web pages directly or through a browser, handle broken HTML, run XPath queries, and parse local HTML files.

That said, this C# web crawling library has no headless browser support, so it can't render JavaScript, and it relies on external proxy services to get past anti-bot measures.

How to Scrape a Web Page in C# with HTML Agility Pack?

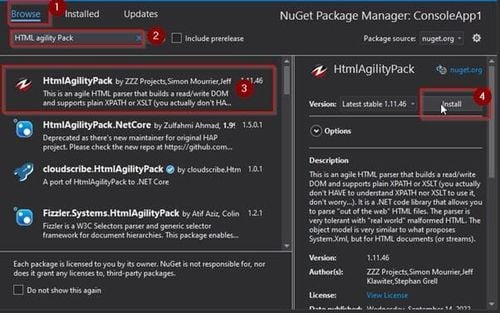

Create a C# console application and open the NuGet Package Manager from the Tools menu. Type HTML Agility Pack in the search bar under the browse tab, select the appropriate "HtmlAgilityPack" version, and click "Install".

Once the package is installed, let's extract some data. The first step is to include the required library in your Program.cs file.

```csharp
using HtmlAgilityPack;
```

Then create a GetDocument() helper method that loads the URL and returns its parsed HTML document.

```csharp
static HtmlDocument GetDocument(string url)
{
    // HtmlWeb downloads the page and parses it into an HtmlDocument
    HtmlWeb web = new HtmlWeb();
    HtmlDocument doc = web.Load(url);
    return doc;
}
```

You can use GetDocument() to retrieve the HTML contents of any target page. The last step is to write the Main() driver code like this.

```csharp
var doc = GetDocument("https://scrapeme.live/shop/");

HtmlNodeCollection names = doc.DocumentNode.SelectNodes("//a/h2");
HtmlNodeCollection prices = doc.DocumentNode.SelectNodes("//div/main/ul/li/a/span");

for (int i = 0; i < names.Count && i < prices.Count; i++)
{
    Console.WriteLine("Name: {0}, Price: {1}", names[i].InnerText, prices[i].InnerText);
}
```

The GetDocument() function retrieves the HTML contents of the given target. SelectNodes() uses the relevant XPath selectors to find the names and prices of the products on the target page, and the for loop prints the InnerText of each parsed element.
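One detail the loop glosses over: by default, HTML Agility Pack's SelectNodes() returns null, not an empty collection, when nothing matches, for example if the site changes its layout or serves a block page. The sketch below wraps it in a hypothetical SelectTexts() helper that returns an empty list instead, so a missing element can't cause a NullReferenceException.

```csharp
using System.Collections.Generic;
using System.Linq;
using HtmlAgilityPack;

public static class HapHelpers
{
    // Hypothetical helper: returns the trimmed InnerText of every node that
    // matches the XPath, or an empty list when SelectNodes() returns null.
    public static List<string> SelectTexts(HtmlDocument doc, string xpath)
    {
        HtmlNodeCollection nodes = doc.DocumentNode.SelectNodes(xpath);
        if (nodes == null)
        {
            return new List<string>();
        }
        return nodes.Select(n => n.InnerText.Trim()).ToList();
    }
}
```

With it, the driver code can fetch var names = HapHelpers.SelectTexts(doc, "//a/h2"); and loop over the results without a separate null check.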

That's it! ScrapeMe has just been crawled using the HTML Agility Pack C# web crawling library.

Here's what the full code looks like.

```csharp
using HtmlAgilityPack;
using System;
using System.Collections.Generic;
using System.Linq;

namespace HAPDemo
{
    class Program
    {
        static void Main(string[] args)
        {
            var doc = GetDocument("https://scrapeme.live/shop/");

            HtmlNodeCollection names = doc.DocumentNode.SelectNodes("//a/h2");
            HtmlNodeCollection prices = doc.DocumentNode.SelectNodes("//div/main/ul/li/a/span");

            List<Products> products = new List<Products>();

            for (int i = 0; i < names.Count && i < prices.Count; i++)
            {
                products.Add(new Products { Name = names[i].InnerText, Price = prices[i].InnerText });
            }

            foreach (var p in products)
            {
                Console.WriteLine("Name: {0} , Price: {1}", p.Name, p.Price);
            }
        }

        static HtmlDocument GetDocument(string url)
        {
            HtmlWeb web = new HtmlWeb();
            HtmlDocument doc = web.Load(url);
            return doc;
        }
    }

    class Products
    {
        public string Name { get; set; }
        public string Price { get; set; }
    }
}
```

5. ScrapySharp

ScrapySharp is an open-source C# web crawling library that extends HTML Agility Pack with jQuery-like CSS selectors and adds a web client that can emulate a web browser.

It significantly reduces the setup work often associated with scraping a web page, and its combination with HTML Agility Pack lets you easily access the retrieved HTML content.

ScrapySharp can simulate a web browser and handle cookie tracking, redirects, and other high-level operations.

The downsides of the ScrapySharp C# scraping library are that you must integrate proxies and anti-bot handling yourself, and it doesn't automatically parse the crawled content.

How to Scrape a Web Page in C# with ScrapySharp?

Create a C# console application project and install the latest ScrapySharp package through NuGet Package Manager. Open the Program.cs file from your console application and include the following required libraries.

```csharp
using HtmlAgilityPack;
using ScrapySharp.Network;
using System;
using System.Linq;
```

Next, create a static ScrapingBrowser object in the Program class. This object, mybrowser, navigates to and downloads the target URLs.

```csharp
static ScrapingBrowser mybrowser = new ScrapingBrowser();
```

Create a helper method to retrieve and return the HTML content of the given target URL.

```csharp
static HtmlNode GetHtml(string url)
{
    // download the page and return its root HTML node
    WebPage webpage = mybrowser.NavigateToPage(new Uri(url));
    return webpage.Html;
}
```

The GetHtml helper method takes the target URL as a parameter and sends it to the NavigateToPage() method of the mybrowser object, returning the webpage's HTML.

Let's create one more helper method to extract and print the product names and prices.

```csharp
static void ScrapeNamesAndPrices(string url)
{
    var html = GetHtml(url);

    var nameNodes = html.OwnerDocument.DocumentNode.SelectNodes("//a/h2");
    var priceNodes = html.OwnerDocument.DocumentNode.SelectNodes("//div/main/ul/li/a/span");

    // Zip pairs each name with the price at the same position
    foreach (var (n, p) in nameNodes.Zip(priceNodes))
    {
        Console.WriteLine("Name: {0} , Price: {1}", n.InnerText, p.InnerText);
    }
}
```

This snippet uses the GetHtml() method to retrieve the URL's HTML and parses it with the SelectNodes() function, which takes the XPath of the product names or prices and returns all the elements that match it.

Zip() pairs each element of the nameNodes collection with the element at the same position in priceNodes, stopping at the shorter one, and the foreach loop prints their InnerText to the console. As a final touch, add the driver code to put everything in order.

```csharp
static void Main(string[] args)
{
    ScrapeNamesAndPrices("https://scrapeme.live/shop/");
}
```

And there you have it.

Here's what the final code looks like.

```csharp
using HtmlAgilityPack;
using ScrapySharp.Network;
using System;
using System.Linq;

namespace ScrapyDemo
{
    class Program
    {
        static ScrapingBrowser mybrowser = new ScrapingBrowser();

        static void Main(string[] args)
        {
            ScrapeNamesAndPrices("https://scrapeme.live/shop/");
        }

        static HtmlNode GetHtml(string url)
        {
            WebPage webpage = mybrowser.NavigateToPage(new Uri(url));
            return webpage.Html;
        }

        static void ScrapeNamesAndPrices(string url)
        {
            var html = GetHtml(url);

            var nameNodes = html.OwnerDocument.DocumentNode.SelectNodes("//a/h2");
            var priceNodes = html.OwnerDocument.DocumentNode.SelectNodes("//div/main/ul/li/a/span");

            foreach (var (n, p) in nameNodes.Zip(priceNodes))
            {
                Console.WriteLine("Name: {0} , Price: {1}", n.InnerText, p.InnerText);
            }
        }
    }
}
```
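ScrapySharp's jQuery-like selectors come from the CssSelect() extension method in the ScrapySharp.Extensions namespace. As an alternative to the XPath expressions above, here's a sketch of the same extraction with CSS selectors; the h2 and span.price selectors are assumed from the other examples in this article, and ExtractProducts() is a hypothetical helper name.

```csharp
using System.Collections.Generic;
using System.Linq;
using HtmlAgilityPack;
using ScrapySharp.Extensions;

public static class ScrapySharpCssDemo
{
    // Hypothetical helper: extracts (name, price) pairs from any HtmlNode,
    // such as webpage.Html returned by ScrapingBrowser.NavigateToPage().
    public static List<(string Name, string Price)> ExtractProducts(HtmlNode root)
    {
        // CssSelect() searches the node's descendants with a CSS selector
        var names = root.CssSelect("h2").Select(n => n.InnerText.Trim());
        var prices = root.CssSelect("span.price").Select(n => n.InnerText.Trim());

        // Zip pairs items by position and stops at the shorter sequence
        return names.Zip(prices, (n, p) => (n, p)).ToList();
    }
}
```

In ScrapeNamesAndPrices(), you could pass the result of GetHtml(url) straight to ScrapySharpCssDemo.ExtractProducts() instead of calling SelectNodes() twice. CSS selectors tend to be shorter than full XPath paths and break less often when wrapper elements change.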

6. IronWebScraper

IronWebScraper is a .NET C# web scraping library used to extract and parse data from internet sources. It lets you control which objects, sites, media, and other elements are permitted or disallowed.

Other features include the ability to manage multiple identities and a web cache.

The main drawbacks of using this C# web scraper library are as follows:

- It doesn't support crawling dynamic content.

- It requires manual proxy integration.

How to Scrape a Web Page in C# with IronWebScraper?

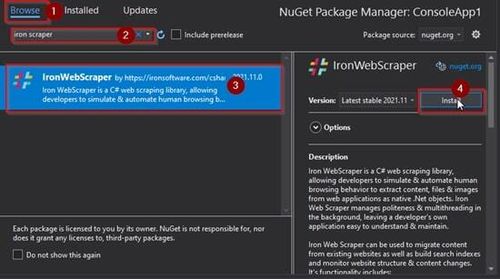

Setting up the development environment for this C# crawler library is pretty simple. Install the IronWebScraper package to your C# Console Project, and you're good to go!

The WebScraper class in IronWebScraper has two abstract methods: Init and Parse. Init sets up the scraper and queues the request for the start URL; when the response arrives, it's passed to Parse, which extracts the required elements from the HTML content.

To create your IronWebScraper, inherit from the WebScraper class and override these two abstract methods. If IronScraperDemo is the main scraper class in your Program.cs file, implement it like this.

```csharp
class IronScraperDemo : WebScraper
{
    public override void Init()
    {
        License.LicenseKey = "ENTER YOUR LICENSE KEY HERE";
        this.LoggingLevel = WebScraper.LogLevel.All;
        // queue the start URL and register Parse as its callback
        this.Request("https://scrapeme.live/shop/", Parse);
    }

    public override void Parse(Response response)
    {
        // select elements with CSS selectors
        var names = response.Css("h2");
        var prices = response.Css("span.price");

        // stop at the shorter array so a missing price can't throw
        for (int i = 0; i < names.Length && i < prices.Length; i++)
        {
            Console.WriteLine("Name: {0}, Price: {1}", names[i].InnerText, prices[i].InnerText);
        }
    }
}
```

The Init method requires you to add a license key, which you can get by creating an account on the IronWebScraper website. Init then requests the target URL and registers Parse as the method that handles the response.

In our case, the Parse method uses CSS selectors to extract the product names and prices from the response and prints them to the console.

Congratulations! Your IronWebScraper is ready to work. Just add the following driver code to create an object of your crawler and call its Start() method.

```csharp
static void Main(string[] args)
{
    IronScraperDemo ironScraper = new IronScraperDemo();
    ironScraper.Start();
}
```

Using the IronWebScraper to crawl the target webpage, your output should look like this.

Here's the complete Program.cs code for this example.

```csharp
using IronWebScraper;
using System;
using System.Collections.Generic;

namespace IronScrapDemo
{
    class IronScraperDemo : WebScraper
    {
        List<Products> products = new List<Products>();

        static void Main(string[] args)
        {
            IronScraperDemo ironScraper = new IronScraperDemo();
            ironScraper.Start();
        }

        public override void Init()
        {
            License.LicenseKey = "ENTER YOUR LICENSE KEY HERE";
            this.LoggingLevel = WebScraper.LogLevel.All; // All Events Are Logged
            this.Request("https://scrapeme.live/shop/", Parse);
        }

        public override void Parse(Response response)
        {
            var names = response.Css("h2");
            var prices = response.Css("span.price");

            for (int i = 0; i < names.Length && i < prices.Length; i++)
            {
                products.Add(new Products { Name = names[i].InnerText, Price = prices[i].InnerText });
            }

            foreach (var p in products)
            {
                Console.WriteLine("Name: {0}, Price: {1}", p.Name, p.Price);
            }
        }
    }

    class Products
    {
        public string Name { get; set; }
        public string Price { get; set; }
    }
}
```

Remark: you still need to install the IronWebScraper package and add your license key as described in this section.

7. HttpClient

HttpClient is .NET's built-in class for sending HTTP requests. Its async methods retrieve the raw HTML of a target URL, but you still need an HTML parsing tool to extract the desired data.

How to Scrape a Web Page in C# with HttpClient?

HttpClient is built into .NET and doesn't require any external package, but you have to install HTML Agility Pack through the NuGet Package Manager to parse the HTML.

To get started, include the following namespaces in the Program.cs file of your C# Console Application.

```csharp
using System;
using System.Threading.Tasks;
using System.Net.Http;
using HtmlAgilityPack;
```

The System.Threading.Tasks namespace provides the Task type used by the asynchronous methods, System.Net.Http contains HttpClient for sending the HTTP requests, and HtmlAgilityPack parses the retrieved HTML content.

Add a GetHtmlContent() method in the Program class, like this.

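```csharp
// reuse a single HttpClient instance instead of creating one per request
private static readonly HttpClient hclient = new HttpClient();

private static Task<string> GetHtmlContent()
{
    return hclient.GetStringAsync("https://scrapeme.live/shop");
}
```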

You can pass the returned HTML string to a ParseHtml() method that extracts and prints the desired data.

```csharp
private static void ParseHtml(string html)
{
    var doc = new HtmlDocument();
    doc.LoadHtml(html);

    HtmlNodeCollection names = doc.DocumentNode.SelectNodes("//a/h2");
    HtmlNodeCollection prices = doc.DocumentNode.SelectNodes("//div/main/ul/li/a/span");

    for (int i = 0; i < names.Count && i < prices.Count; i++)
    {
        Console.WriteLine("Name: {0}, Price: {1}", names[i].InnerText, prices[i].InnerText);
    }
}
```

ParseHtml() takes an HTML string as input, parses it with the SelectNodes() method, and prints the names and prices to the console.

Here the SelectNodes() function plays the key parsing role. It extracts only the relevant elements from the HTML string according to the given XPath selectors.

To wrap it up, here's the driver code that executes everything in order.

```csharp
static async Task Main(string[] args)
{
    var html = await GetHtmlContent();
    ParseHtml(html);
}
```

Notice that Main() is now declared as async Task. That's because the await keyword can only be used inside an async method; async Main methods are supported from C# 7.1 onward.

As with the other C# web scraping libraries discussed in this article, the output lists each product's name and price. Check our guide on using a proxy with HttpClient in C# for more.
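Since proxy configuration is manual for HttpClient, here's a minimal sketch of what that involves: routing requests through a proxy with HttpClientHandler and WebProxy, and sending a browser-like User-Agent header. The proxy address and User-Agent string are placeholders you'd replace with your own values, and ScrapingClientFactory is a hypothetical class name.

```csharp
using System;
using System.Net;
using System.Net.Http;

public static class ScrapingClientFactory
{
    // Builds a handler that sends every request through the given proxy.
    // proxyAddress is a placeholder, e.g. "http://proxy.example.com:8080".
    public static HttpClientHandler CreateProxyHandler(string proxyAddress)
    {
        return new HttpClientHandler
        {
            Proxy = new WebProxy(proxyAddress),
            UseProxy = true
        };
    }

    // Wraps the handler in an HttpClient that sends the given User-Agent
    // (a placeholder string supplied by the caller) with every request.
    public static HttpClient CreateClient(HttpMessageHandler handler, string userAgent)
    {
        var client = new HttpClient(handler);
        client.DefaultRequestHeaders.UserAgent.ParseAdd(userAgent);
        return client;
    }
}
```

As with the shared hclient field above, create the client once and reuse it; a single proxy only changes your IP, so rotating addresses is still up to you.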

Here's what all the code chunks joined together look like.

```csharp
using System;
using System.Collections.Generic;
using System.Net.Http;
using System.Threading.Tasks;
using HtmlAgilityPack;

namespace HTTPDemo
{
    class Program
    {
        // one shared HttpClient for the whole application
        private static readonly HttpClient hclient = new HttpClient();

        static async Task Main(string[] args)
        {
            var html = await GetHtmlContent();
            List<Products> products = ParseHtml(html);

            foreach (var p in products)
            {
                Console.WriteLine("Name: {0} , Price: {1}", p.Name, p.Price);
            }
        }

        private static Task<string> GetHtmlContent()
        {
            return hclient.GetStringAsync("https://scrapeme.live/shop");
        }

        private static List<Products> ParseHtml(string html)
        {
            var doc = new HtmlDocument();
            doc.LoadHtml(html);

            HtmlNodeCollection names = doc.DocumentNode.SelectNodes("//a/h2");
            HtmlNodeCollection prices = doc.DocumentNode.SelectNodes("//div/main/ul/li/a/span");

            List<Products> products = new List<Products>();

            for (int i = 0; i < names.Count && i < prices.Count; i++)
            {
                products.Add(new Products { Name = names[i].InnerText, Price = prices[i].InnerText });
            }

            return products;
        }
    }

    class Products
    {
        public string Name { get; set; }
        public string Price { get; set; }
    }
}
```

Conclusion

We tested and compared the seven best C# web scraping libraries in 2024. As a recap, they are:

- ZenRows.

- Puppeteer-Sharp.

- Selenium.

- HTML Agility Pack.

- ScrapySharp.

- IronWebScraper.

- HttpClient.

A common challenge among scrapers is their inability to crawl a web page without triggering anti-bots. ZenRows solves this scraping problem by handling all anti-bot bypass for you, removing the headaches involved. You can test ZenRows for free to see what it's capable of.

Frequently Asked Questions

What Is the Best C# Web Scraping Library?

ZenRows is the best option for web scraping in C#. Unlike other C# web scraping libraries, it doesn't require manual integration of proxies or setting up anti-bot logic.

It also supports scraping dynamic content and can auto-parse HTML into JSON for many popular sites.

What Is the Most Popular C# Library for Web Scraping?

HTML Agility Pack is the most popular library, with around 83 million downloads. Its popularity is mainly due to its HTML parser, which can download web pages directly or through a browser.

What Is a Good C# Web Scraping Library?

A good scraping library provides efficient mechanisms to scrape and parse content from its targets without getting blocked (including by anti-bot systems and CAPTCHAs) or revealing your IP.

Additionally, it should support scraping dynamic content and should have comprehensive documentation and active community support. ZenRows, Puppeteer-Sharp, and Selenium are good options for web scraping using C#.

Which Libraries Are Used for Web Scraping in C#?

There are different libraries used for web scraping using C#. The best ones are:

- ZenRows Web Scraper API.

- Puppeteer-Sharp.

- Selenium WebDriver.

- HTML Agility Pack.

- ScrapySharp.

- IronWebScraper.

- HttpClient.
