Selenium is a popular browser automation library for web scraping and testing. Since C# is one of the languages the project supports, there are official Selenium C# bindings.

In this guide, you'll explore the basics of Selenium WebDriver with C# and then look at more complex interactions.

Let's dive in!

Why Use Selenium in C#

Selenium is the most widely used headless browser library. It has a consistent API that makes it ideal for multi-platform and multi-language browser automation for testing and web scraping tasks.

C# is one of the languages officially endorsed by the project, so the C# Selenium library is always up-to-date and gives you access to the latest features. For this reason, it's one of the best resources for web automation in C#.

Before diving into this Selenium C# tutorial, you may prefer to read our guide on headless browser scraping and web scraping with C#.

How to Use Selenium in C#

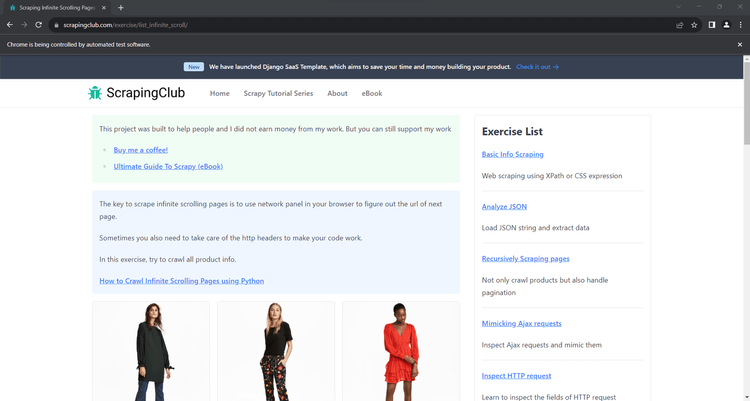

To get started with the C# Selenium library, we'll target this infinite scrolling demo page: https://scrapingclub.com/exercise/list_infinite_scroll/

It's a great example of a dynamic content page because it loads new products as the user scrolls down. To interact with it, you need a browser automation tool that can run JavaScript, such as Selenium.

Follow the steps below and learn how to extract data from it!

Step 1: Install Selenium in C#

To follow this tutorial, make sure you have the .NET SDK installed on your computer. Download the .NET 7+ installer, double-click it, and follow the instructions.

You now have everything you need to set up a Selenium C# project. Use PowerShell to create a SeleniumCSharpProject folder and enter it:

mkdir SeleniumCSharpProject

cd SeleniumCSharpProject

Next, run the dotnet new console command below to initialize a C# application:

dotnet new console --framework net7.0

The project folder will now contain Program.cs and other files. Program.cs is the main C# file, and it's where the Selenium web automation logic will go.

Then, add the Selenium.WebDriver package to your project's dependencies:

dotnet add package Selenium.WebDriver

Keep in mind that you can run your script with the command you see next:

dotnet run

Well done! You're ready to scrape some data via Selenium in C#.

Step 2: Scrape with Selenium in C#

Paste the following lines into Program.cs to import Selenium and create a ChromeDriver instance to control a Chrome instance:

using OpenQA.Selenium.Chrome;

using OpenQA.Selenium;

class Program

{

static void Main(string[] args)

{

// instantiate a driver instance to control

// Chrome in headless mode

var chromeOptions = new ChromeOptions();

chromeOptions.AddArguments("--headless=new"); // comment out for testing

var driver = new ChromeDriver(chromeOptions);

// scraping logic...

// close the browser and release its resources

driver.Quit();

}

}

The --headless=new argument makes Chrome start in headless mode. Comment that option out if you want to see the actions made by your scraping script in the browser window.

Next, use driver to open the target page in the main tab of the controlled Chrome instance:

driver.Navigate().GoToUrl("https://scrapingclub.com/exercise/list_infinite_scroll/");

Then, access the PageSource property to get the source HTML of the page as a string. Print it in the terminal with this instruction:

var html = driver.PageSource;

Console.WriteLine(html);

This is what your Program.cs file should contain so far:

using OpenQA.Selenium.Chrome;

using OpenQA.Selenium;

class Program

{

static void Main(string[] args)

{

// instantiate a driver instance to control

// Chrome in headless mode

var chromeOptions = new ChromeOptions();

chromeOptions.AddArguments("--headless=new"); // comment out for testing

var driver = new ChromeDriver(chromeOptions);

// open the target page in Chrome

driver.Navigate().GoToUrl("https://scrapingclub.com/exercise/list_infinite_scroll/");

// extract the source HTML of the page

// and print it

var html = driver.PageSource;

Console.WriteLine(html);

// close the browser and release its resources

driver.Quit();

}

}

Launch the script in headed mode by commenting out the --headless=new option. You'll see Selenium open a Chrome window and automatically visit the Infinite Scrolling demo page.

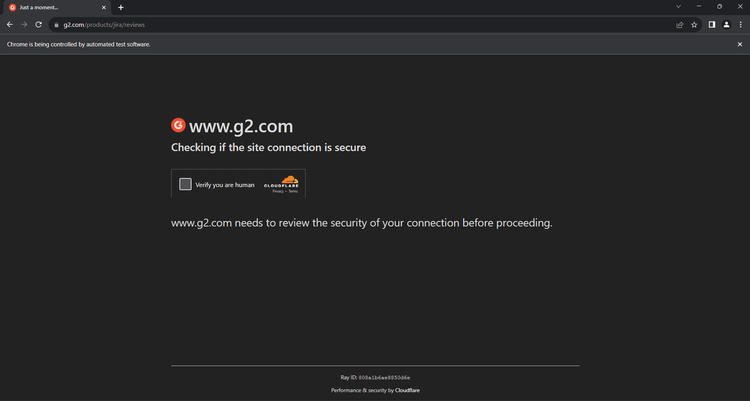

The "Chrome is being controlled by automated test software" message means that Selenium is operating on Chrome as expected.

Before terminating, the script will log the content below in PowerShell:

<html class="h-full"><head>

<meta charset="utf-8">

<meta name="viewport" content="width=device-width, initial-scale=1">

<meta name="description" content="Learn to scrape infinite scrolling pages"><title>Scraping Infinite Scrolling Pages (Ajax) | ScrapingClub</title>

<link rel="icon" href="/static/img/icon.611132651e39.png" type="image/png">

<!-- Omitted for brevity... -->

Perfect, that's the HTML code of the target page!

You'll extract specific data from it, like the product names, as a next step.

Step 3: Parse the Data You Want

One of the most useful features of Selenium in C# is the ability to select elements on a page and extract data from them. That's what web scraping is all about!

For example, suppose the scraping goal of your script is to get the name and price of each product on the page. Achieving that involves a 3-step process:

- Select the product HTML elements with an effective DOM selection strategy.

- Retrieve the information of interest from each of them.

- Collect the scraped data in a C# data structure.

A DOM selection strategy usually relies on a CSS Selector or an XPath expression, two viable ways to select HTML elements. While CSS selectors are intuitive, XPath expressions may be more complex to define. Find out more in our article about CSS Selector vs XPath.

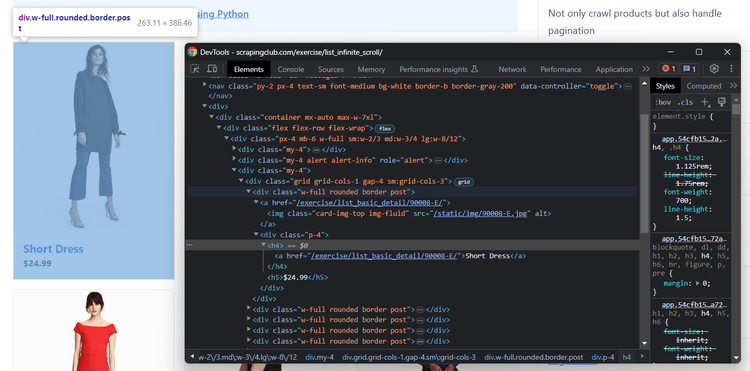

We'll go for CSS selectors to keep things simple. Keep in mind that devising an effective CSS selector strategy requires a preliminary step. So, visit the target page in your browser and inspect a product HTML element with the DevTools:

Explore the HTML code and notice that each product is an HTML <div> element with a "post" class. The product name is then in an inner <h4> element, while the price is in an <h5> node.

That means the CSS selector to find the product HTML elements is .post. Given a product card, you can get its name and price elements with the CSS selectors h4 and h5, respectively.

Now, learn how to get the name and price of each product on the target page by following the instructions below.

First, you need a data structure to keep track of the info scraped from the page. Define a Product class nested in the Program class to represent the data you want to extract from the HTML elements:

public class Product

{

public string? Name { get; set; }

public string? Price { get; set; }

}

Next, initialize a list of Product objects in Main(). This list will store the scraped data at the end of the script.

var products = new List<Product>();

Use FindElements() exposed by the driver to select the product HTML elements on the page. Thanks to By.CssSelector, you can instruct Selenium to look for DOM elements using a CSS selector.

var productElements = driver.FindElements(By.CssSelector(".post"));

Once you get the product nodes, iterate over them and apply the data extraction logic.

// iterate over each product and extract data from them

foreach (var productElement in productElements)

{

// select the name and price elements

var nameElement = productElement.FindElement(By.CssSelector("h4"));

var priceElement = productElement.FindElement(By.CssSelector("h5"));

// extract their data

var name = nameElement.Text;

var price = priceElement.Text;

// create a new product object and add it to the list

var product = new Product { Name = name, Price = price };

products.Add(product);

}

FindElement() is like FindElements() but returns only the first node that matches the selection strategy, throwing a NoSuchElementException if nothing matches. The Text property, instead, returns the visible text of the current element. That's all you need to achieve the data extraction goal.

This is the current Program.cs:

using OpenQA.Selenium.Chrome;

using OpenQA.Selenium;

class Program

{

// define a custom type to store

// the product objects to scrape

public class Product

{

public string? Name { get; set; }

public string? Price { get; set; }

}

static void Main(string[] args)

{

// to store the scraped data

var products = new List<Product>();

// instantiate a driver instance to control

// Chrome in headless mode

var chromeOptions = new ChromeOptions();

chromeOptions.AddArguments("--headless=new"); // comment out for testing

var driver = new ChromeDriver(chromeOptions);

// open the target page in Chrome

driver.Navigate().GoToUrl("https://scrapingclub.com/exercise/list_infinite_scroll/");

// select the product HTML elements on the page

// with a CSS Selector

var productElements = driver.FindElements(By.CssSelector(".post"));

// iterate over each product and extract data from them

foreach (var productElement in productElements)

{

// select the name and price elements

var nameElement = productElement.FindElement(By.CssSelector("h4"));

var priceElement = productElement.FindElement(By.CssSelector("h5"));

// extract their data

var name = nameElement.Text;

var price = priceElement.Text;

// create a new product object and add it to the list

var product = new Product { Name = name, Price = price };

products.Add(product);

}

// print the scraped products

foreach (var product in products)

{

Console.WriteLine($"Name: {product.Name}, Price: {product.Price}");

};

// close the browser and release its resources

driver.Quit();

}

}

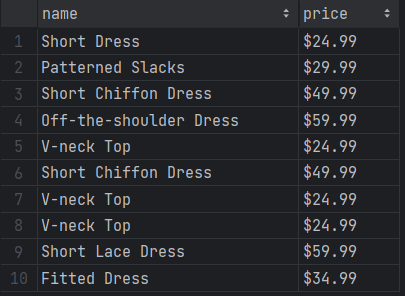

Run it, and it'll print the output below:

Name: Short Dress, Price: $24.99

Name: Patterned Slacks, Price: $29.99

// omitted for brevity...

Name: Short Lace Dress, Price: $59.99

Name: Fitted Dress, Price: $34.99

Awesome! The Selenium WebDriver C# parsing logic works as desired!

Step 4: Export Data as CSV

Vanilla C# allows export to CSV, but the easiest way to convert the collected data to CSV is through a library. CsvHelper is one of the most popular .NET packages for reading and writing CSV files, so install it:

dotnet add package CsvHelper

Import it into your script by adding this line to the top of your Program.cs file:

using CsvHelper;

Create a products.csv file and take advantage of CsvHelper to populate it. WriteRecords() converts the product objects to CSV format and fills out the output file.

// create the CSV output file

using (var writer = new StreamWriter("products.csv"))

// instantiate the CSV writer

using (var csv = new CsvWriter(writer, CultureInfo.InvariantCulture))

{

// populate the CSV file

csv.WriteRecords(products);

}

CultureInfo.InvariantCulture makes the output independent of the regional settings of the machine running the script. For example, numbers always use a dot as the decimal separator, so the CSV reads the same everywhere.

Add the import required by CultureInfo to the C# script:

using System.Globalization;
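For comparison, here is roughly the work that CsvHelper and InvariantCulture take off your hands. This is a minimal vanilla C# sketch, meant to run as a separate console program (it uses top-level statements), and it is not a description of how CsvHelper works internally. It quotes fields following the RFC 4180 rules and formats a price stored as a decimal, which is an assumption since the tutorial's scraper keeps prices as strings. EscapeCsvField, ToCsvRow, and the products-vanilla.csv file name are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.IO;
using System.Linq;

// Quote a field the RFC 4180 way: wrap it in double quotes when it contains
// a comma, a double quote, or a line break, and double any inner quotes.
static string EscapeCsvField(string field)
{
    bool needsQuotes = field.IndexOfAny(new[] { ',', '"', '\r', '\n' }) >= 0;
    string escaped = field.Replace("\"", "\"\"");
    return needsQuotes ? $"\"{escaped}\"" : escaped;
}

// Format one (name, price) row; the format provider decides the decimal separator.
static string ToCsvRow(string name, decimal price, IFormatProvider format) =>
    EscapeCsvField(name) + "," + EscapeCsvField(price.ToString(format));

// Hypothetical sample rows with numeric prices.
var rows = new List<(string Name, decimal Price)>
{
    ("Short Dress", 24.99m),
    ("Dress, Lace \"Limited\"", 59.99m),
};

// Invariant culture: '.' is the decimal separator on every machine.
var invariantLines = rows.Select(r => ToCsvRow(r.Name, r.Price, CultureInfo.InvariantCulture)).ToList();

// Simulated regional settings that use ',' as the decimal separator.
var commaDecimal = new NumberFormatInfo { NumberDecimalSeparator = "," };
var regionalLines = rows.Select(r => ToCsvRow(r.Name, r.Price, commaDecimal)).ToList();

// Write the culture-independent version with a header row.
File.WriteAllLines("products-vanilla.csv", new[] { "Name,Price" }.Concat(invariantLines));

Console.WriteLine(invariantLines[0]); // Short Dress,24.99
Console.WriteLine(regionalLines[0]);  // Short Dress,"24,99"
```

With the comma-decimal settings, 24.99 becomes "24,99" and has to be quoted to avoid clashing with the delimiter, which is exactly the kind of regional difference InvariantCulture rules out.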

Here's what your entire Selenium C# scraper looks like:

using OpenQA.Selenium.Chrome;

using OpenQA.Selenium;

using CsvHelper;

using System.Globalization;

class Program

{

// define a custom type to store

// the product objects to scrape

public class Product

{

public string? Name { get; set; }

public string? Price { get; set; }

}

static void Main(string[] args)

{

// to store the scraped data

var products = new List<Product>();

// instantiate a driver instance to control

// Chrome in headless mode

var chromeOptions = new ChromeOptions();

chromeOptions.AddArguments("--headless=new"); // comment out for testing

var driver = new ChromeDriver(chromeOptions);

// open the target page in Chrome

driver.Navigate().GoToUrl("https://scrapingclub.com/exercise/list_infinite_scroll/");

// select the product HTML elements on the page

// with a CSS Selector

var productElements = driver.FindElements(By.CssSelector(".post"));

// iterate over each product and extract data from them

foreach (var productElement in productElements)

{

// select the name and price elements

var nameElement = productElement.FindElement(By.CssSelector("h4"));

var priceElement = productElement.FindElement(By.CssSelector("h5"));

// extract their data

var name = nameElement.Text;

var price = priceElement.Text;

// create a new product object and add it to the list

var product = new Product { Name = name, Price = price };

products.Add(product);

}

// create the CSV output file

using (var writer = new StreamWriter("products.csv"))

// instantiate the CSV writer

using (var csv = new CsvWriter(writer, CultureInfo.InvariantCulture))

{

// populate the CSV file

csv.WriteRecords(products);

}

// close the browser and release its resources

driver.Quit();

}

}

Run the scraping script:

dotnet run

Launching a real browser takes time, so the execution may take a few seconds. When the script terminates, a products.csv file will appear. Open it, and you'll see this data:

Fantastic! You now know the basics of Selenium with C#!

However, if you look at the output, you'll notice that it contains only ten rows. The target page initially shows only a few products, depending on infinite scrolling to load more. No worries, the next section will guide you through the process of getting all the products!

Interacting with Web Pages in a Browser

The Selenium C# library can automate many user interactions, including waits, mouse movements, and more. That helps to simulate human behavior, enabling your script to:

- Access dynamic content that requires special interaction.

- Fool anti-bot measures into thinking that your scraping bot is a human user.

In particular, the most relevant interactions supported by C# Selenium are:

- Wait for elements to appear on the DOM.

- Mouse movements and click actions.

- Fill out input fields and submit forms.

- Take screenshots of the current page.

The above operations are all available via built-in methods. For other interactions, such as scrolling, you can fall back on ExecuteScript(). This method takes JavaScript code as a string and runs it in the page, so you can reproduce almost any action you want.

Time to scrape all product data from the infinite scroll demo page. We'll also see other popular interactions in more specific Selenium C# example snippets!

Scrolling

The target page initially shows only ten products and loads new ones dynamically as you scroll down. A simple way to handle this infinite scrolling with C# Selenium is to run custom JavaScript logic.

This JavaScript snippet tells the browser to scroll down the page 10 times at an interval of 500 ms:

// scroll down the page 10 times

const scrolls = 10

let scrollCount = 0

// scroll down and then wait for 0.5s

const scrollInterval = setInterval(() => {

window.scrollTo(0, document.body.scrollHeight)

scrollCount++

if (scrollCount === scrolls) {

clearInterval(scrollInterval)

}

}, 500)

Store these lines in a string variable and pass it to ExecuteScript() to perform infinite scrolling:

var jsScrollScript = @"

const scrolls = 10

let scrollCount = 0

// scroll down and then wait for 0.5s

const scrollInterval = setInterval(() => {

window.scrollTo(0, document.body.scrollHeight)

scrollCount++

if (scrollCount === scrolls) {

clearInterval(scrollInterval)

}

}, 500)

";

driver.ExecuteScript(jsScrollScript);

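Call ExecuteScript() before selecting the product HTML elements, so that the new products get loaded first.

ExecuteScript() returns as soon as the script has scheduled the interval, but the scrolling goes on in the browser for about 5 seconds (10 scrolls × 500 ms), plus the time the AJAX requests take. So, you need to wait for the operation to end. Use Thread.Sleep() to stop the execution for 10 seconds. This will allow the Selenium C# script to wait for new products to load: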

Thread.Sleep(TimeSpan.FromSeconds(10));

Put it all together, and you'll get this new script:

using OpenQA.Selenium.Chrome;

using OpenQA.Selenium;

using CsvHelper;

using System.Globalization;

class Program

{

// define a custom type to store

// the product objects to scrape

public class Product

{

public string? Name { get; set; }

public string? Price { get; set; }

}

static void Main(string[] args)

{

// to store the scraped data

var products = new List<Product>();

// instantiate a driver instance to control

// Chrome in headless mode

var chromeOptions = new ChromeOptions();

chromeOptions.AddArguments("--headless=new"); // comment out for testing

var driver = new ChromeDriver(chromeOptions);

// open the target page in Chrome

driver.Navigate().GoToUrl("https://scrapingclub.com/exercise/list_infinite_scroll/");

// deal with infinite scrolling

var jsScrollScript = @"

const scrolls = 10

let scrollCount = 0

// scroll down and then wait for 0.5s

const scrollInterval = setInterval(() => {

window.scrollTo(0, document.body.scrollHeight)

scrollCount++

if (scrollCount === scrolls) {

clearInterval(scrollInterval)

}

}, 500)

";

driver.ExecuteScript(jsScrollScript);

// wait 10 seconds for the DOM to be updated

Thread.Sleep(TimeSpan.FromSeconds(10));

// select the product HTML elements on the page

// with a CSS Selector

var productElements = driver.FindElements(By.CssSelector(".post"));

// iterate over each product and extract data from them

foreach (var productElement in productElements)

{

// select the name and price elements

var nameElement = productElement.FindElement(By.CssSelector("h4"));

var priceElement = productElement.FindElement(By.CssSelector("h5"));

// extract their data

var name = nameElement.Text;

var price = priceElement.Text;

// create a new product object and add it to the list

var product = new Product { Name = name, Price = price };

products.Add(product);

}

// create the CSV output file

using (var writer = new StreamWriter("products.csv"))

// instantiate the CSV writer

using (var csv = new CsvWriter(writer, CultureInfo.InvariantCulture))

{

// populate the CSV file

csv.WriteRecords(products);

}

// close the browser and release its resources

driver.Quit();

}

}

This time, the products list should store all 60 products. Run the script to check if that is true:

dotnet run

Be patient because the script execution will take more than 10 seconds.

The products.csv file produced by the scraper now contains more than just the first ten items:

Congratulations! You just extracted data from all products on the page!

Wait for Element

The solution seen earlier works, sure. At the same time, the current C# Selenium example script uses an unreliable hard wait. What happens in case of a browser or network slowdown? The products may not have loaded after 10 seconds, and your script will scrape an incomplete page! Hard waits make your automation logic flaky, and you should avoid them.

Never wait a fixed number of seconds in your scraping scripts. A fixed wait is either too short, which breaks the script on a slow connection, or too long, which makes your scraper unnecessarily slow. To get consistent results, prefer smart waits. For example, wait for a DOM element to be on the page before interacting with it.
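To see why a smart wait is both safer and faster than a hard wait, here's a minimal standalone sketch of the polling loop behind it, written as a separate console program. It illustrates the idea only and is not Selenium's implementation: WaitUntil and FindSixtiethProduct are hypothetical names, and a timer simulates the page loading the 60th product after about 300 ms.

```csharp
#nullable enable
using System;
using System.Diagnostics;
using System.Threading;

// Smart-wait sketch: re-check a condition until it returns a non-null value
// or the timeout expires. It illustrates the idea behind WebDriverWait.Until(),
// not Selenium's actual implementation.
static T WaitUntil<T>(Func<T?> condition, TimeSpan timeout, TimeSpan pollInterval) where T : class
{
    var stopwatch = Stopwatch.StartNew();
    while (true)
    {
        T? result = condition();
        if (result != null)
            return result; // condition met: continue right away
        if (stopwatch.Elapsed >= timeout)
            throw new TimeoutException($"Condition not met within {timeout.TotalSeconds} s");
        Thread.Sleep(pollInterval); // short pause before checking again
    }
}

// Simulated page: the "60th product" becomes available after about 300 ms.
var pageTimer = Stopwatch.StartNew();
string? FindSixtiethProduct() =>
    pageTimer.ElapsedMilliseconds >= 300 ? "<div class=\"post\">...</div>" : null;

var waitTimer = Stopwatch.StartNew();
string product = WaitUntil<string>(FindSixtiethProduct, TimeSpan.FromSeconds(10), TimeSpan.FromMilliseconds(50));
waitTimer.Stop();
Console.WriteLine($"Found after {waitTimer.ElapsedMilliseconds} ms instead of a fixed 10 s");
```

The wait returns as soon as the condition holds and only fails once the timeout is reached. Selenium's WebDriverWait applies the same idea and, by default, ignores NotFoundException while polling, which is why a condition that calls FindElement() works even before the element exists.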

WebDriverWait from Selenium offers the Until() method to wait for a specific condition to occur. Employ it to wait up to ten seconds for the 60th .post element to be on the DOM:

WebDriverWait wait = new WebDriverWait(driver, TimeSpan.FromSeconds(10));

wait.Until(c => c.FindElement(By.CssSelector(".post:nth-child(60)")));

Replace the Thread.Sleep() hard wait with the two lines above. The script will now wait for the page to render the products retrieved via AJAX after the scrolls.

The ExpectedConditions class is deprecated in the Selenium C# bindings, which is why the snippet above passes a lambda to Until() instead.

To make WebDriverWait work, add the following import to Program.cs:

using OpenQA.Selenium.Support.UI;

The definitive code of your Selenium C# scraper should be this one:

using OpenQA.Selenium.Chrome;

using OpenQA.Selenium;

using CsvHelper;

using System.Globalization;

using OpenQA.Selenium.Support.UI;

class Program

{

// define a custom type to store

// the product objects to scrape

public class Product

{

public string? Name { get; set; }

public string? Price { get; set; }

}

static void Main(string[] args)

{

// to store the scraped data

var products = new List<Product>();

// instantiate a driver instance to control

// Chrome in headless mode

var chromeOptions = new ChromeOptions();

chromeOptions.AddArguments("--headless=new"); // comment out for testing

var driver = new ChromeDriver(chromeOptions);

// open the target page in Chrome

driver.Navigate().GoToUrl("https://scrapingclub.com/exercise/list_infinite_scroll/");

// deal with infinite scrolling

var jsScrollScript = @"

const scrolls = 10

let scrollCount = 0

// scroll down and then wait for 0.5s

const scrollInterval = setInterval(() => {

window.scrollTo(0, document.body.scrollHeight)

scrollCount++

if (scrollCount === scrolls) {

clearInterval(scrollInterval)

}

}, 500)

";

driver.ExecuteScript(jsScrollScript);

// wait up to 10 seconds for the DOM to be updated

// by waiting for the 60-th product element to be on the page

WebDriverWait wait = new WebDriverWait(driver, TimeSpan.FromSeconds(10));

wait.Until(c => c.FindElement(By.CssSelector(".post:nth-child(60)")));

// select the product HTML elements on the page

// with a CSS Selector

var productElements = driver.FindElements(By.CssSelector(".post"));

// iterate over each product and extract data from them

foreach (var productElement in productElements)

{

// select the name and price elements

var nameElement = productElement.FindElement(By.CssSelector("h4"));

var priceElement = productElement.FindElement(By.CssSelector("h5"));

// extract their data

var name = nameElement.Text;

var price = priceElement.Text;

// create a new product object and add it to the list

var product = new Product { Name = name, Price = price };

products.Add(product);

}

// create the CSV output file

using (var writer = new StreamWriter("products.csv"))

// instantiate the CSV writer

using (var csv = new CsvWriter(writer, CultureInfo.InvariantCulture))

{

// populate the CSV file

csv.WriteRecords(products);

}

// close the browser and release its resources

driver.Quit();

}

}

Execute it to verify that it produces the same results as before. The main difference? It should be faster, since it moves on as soon as the 60th product appears instead of always waiting 10 seconds.

Et voilà! You now know how to scrape a dynamic page with Selenium in C#. It's time to explore other interactions.

Wait for Page to Load

By default, driver.Navigate().GoToUrl() blocks until the browser considers the page loaded, which with the default page load strategy means that document.readyState is "complete". So, the C# Selenium WebDriver library automatically waits for pages to load.

The maximum time the driver waits for a page to load is controlled by the PageLoad timeout (300 seconds by default, as defined by the W3C WebDriver specification). Update its value as follows:

// wait up to 120 seconds

driver.Manage().Timeouts().PageLoad = TimeSpan.FromSeconds(120);

As web pages are more dynamic and interactive than ever, understanding when they've fully loaded is no longer simple. For more granular control over waiting logic, use the Until() method from WebDriverWait.

Click Elements

DOM elements selected with Selenium expose the Click() method to trigger click interactions. That function tells the browser to click on the specified node, leading to a mouse click event:

element.Click();

When Click() triggers navigation, as in the example below, the browser loads a new page. Thus, you'll have a new DOM structure to deal with:

var productElement = driver.FindElement(By.CssSelector(".post"));

productElement.Click();

// you are now on the detail product page...

// new scraping logic...

// driver.FindElement(...);

Take a Screenshot

Scraping data from the web isn't just about extracting textual information. Images are useful, too! Imagine having screenshots of the pages of your competitors' sites. That'll help you study how they approach UI development.

In C#, Selenium comes with built-in screenshot functionality via the GetScreenshot() method:

driver.GetScreenshot().SaveAsFile("screenshot.png");

A screenshot.png file with the screenshot of the current viewport will appear in the project's root folder.

As of this writing, the C# port of Selenium doesn't provide an easy way to screenshot a specific element.

Avoid Getting Blocked when Web Scraping with Selenium C#

Getting blocked by anti-bot solutions is the biggest challenge when scraping online data. An effective approach to minimizing that issue is to randomize your requests. To do so, set real-world User-Agent header values. Also, use proxies to make your requests appear from different IPs and locations.

To set a custom user agent in Selenium with C#, pass it to Chrome's --user-agent flag option:

var customUserAgent = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/117.0.0.0 Safari/537.36";

chromeOptions.AddArgument($"--user-agent={customUserAgent}");

Why should you do it? Learn more in our guide about User Agents for web scraping.

Configuring a proxy is similar and involves the --proxy-server flag. Get the URL of a free proxy from sites like Free Proxy List and then set it in Chrome via Selenium:

var proxyURL = "213.33.2.28:80";

chromeOptions.AddArgument($"--proxy-server={proxyURL}");

Free proxies are so short-lived and unreliable that the proxy server above will most likely no longer work by the time you read this article.
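Since the goal is to vary these values, you can pick a different User-Agent and proxy each time you launch the browser. The sketch below, written as a separate console program, assumes you maintain your own pools: the second User-Agent string is only an illustrative example, the proxy addresses are placeholders from the 203.0.113.0/24 documentation range, and BuildSessionArguments is a hypothetical helper that returns the Chrome flags as plain strings.

```csharp
using System;
using System.Collections.Generic;

// Example pools: swap in current, real-world User-Agent strings and proxies you trust.
string[] userAgents =
{
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/117.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/117.0.0.0 Safari/537.36",
};
// Placeholder addresses from the 203.0.113.0/24 documentation range, not working proxies.
string[] proxies = { "203.0.113.10:8080", "203.0.113.11:8080" };

// Build the Chrome flags for one browser session, picking a random
// User-Agent and a random proxy each time it's called.
static List<string> BuildSessionArguments(Random rng, string[] agentPool, string[] proxyPool) =>
    new List<string>
    {
        "--headless=new",
        $"--user-agent={agentPool[rng.Next(agentPool.Length)]}",
        $"--proxy-server={proxyPool[rng.Next(proxyPool.Length)]}",
    };

var sessionArgs = BuildSessionArguments(new Random(), userAgents, proxies);
foreach (var arg in sessionArgs)
    Console.WriteLine(arg);
```

In the tutorial's script, you would pass the result to chromeOptions.AddArguments(sessionArgs.ToArray()) before creating the ChromeDriver. Chrome reads these flags only at startup, so rotating them means starting a new browser session.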

Bear in mind that these approaches are just baby steps to elude anti-bot solutions. Sophisticated measures like Cloudflare will still be able to mark your script as a bot:

What to do, then? Use ZenRows! It seamlessly integrates with Selenium, provides automatic User-Agent change and IP rotation through reliable residential proxies. Its powerful anti-bot toolkit will save you from blocks and IP bans.

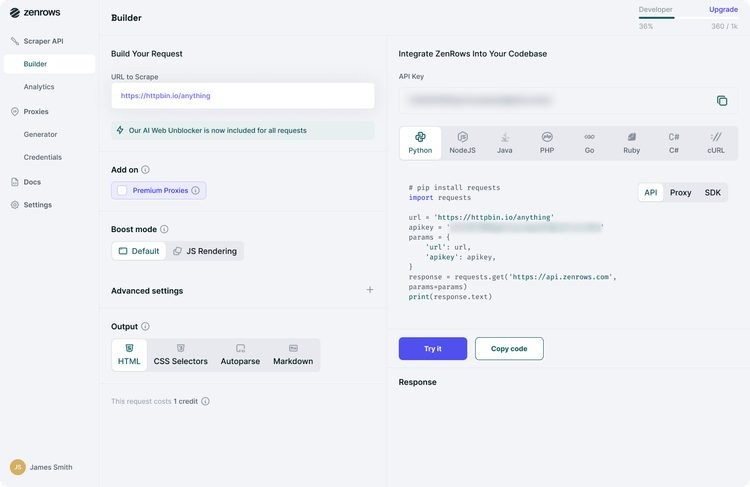

To take your first steps with ZenRows, sign up for free. You'll get redirected to the Request Builder page below:

Assume you want to extract data from an Amazon product page. Paste your target URL (https://www.amazon.com/PlayStation-5-Console-CFI-1215A01X/dp/B0BCNKKZ91/) in "URL to Scrape". Next, get rotating IPs by checking "Premium Proxy". User-Agent rotation is included by default.

On the right side of the screen, press the "cURL" button. Copy the generated API URL and pass it to the Selenium C# GoToUrl() method as the destination URL:

using OpenQA.Selenium.Chrome;

using OpenQA.Selenium;

class Program

{

static void Main(string[] args)

{

// instantiate a driver instance to control

// Chrome in headless mode

var chromeOptions = new ChromeOptions();

chromeOptions.AddArguments("--headless=new"); // comment out for testing

var driver = new ChromeDriver(chromeOptions);

// open the ZenRows URL in Chrome

driver.Navigate().GoToUrl("https://api.zenrows.com/v1/?apikey=<YOUR_ZENROWS_API_KEY>&url=https%3A%2F%2Fwww.amazon.com%2FPlayStation-5-Console-CFI-1215A01X%2Fdp%2FB0BCNKKZ91%2F&premium_proxy=true&autoparse=true");

// extract the source HTML of the page

// and print it

var html = driver.PageSource;

Console.WriteLine(html);

// close the browser and release its resources

driver.Quit();

}

}

Launch it, and it'll print the source HTML of the Amazon page:

<!DOCTYPE html>

<head>

<meta charset="utf-8" /><!doctype html><html lang="en-us" class="a-no-js" data-19ax5a9jf="dingo"><!-- sp:feature:head-start -->

<head><script>var aPageStart = (new Date()).getTime();</script><meta charset="utf-8"/>

<!-- sp:end-feature:head-start -->

<!-- sp:feature:csm:head-open-part1 -->

<script type='text/javascript'>var ue_t0=ue_t0||+new Date();</script>

<!-- omitted for brevity ... -->

Wow! You just integrated ZenRows into Selenium with C#.
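The url parameter of that API URL is the percent-encoded address of the target page. If you'd rather build the URL in code than copy it from the Request Builder, here's a sketch written as a separate console program. BuildZenRowsUrl is a hypothetical helper that only uses the query parameters visible in the generated URL above (apikey, url, premium_proxy, autoparse), and YOUR_ZENROWS_API_KEY is a placeholder.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Build a ZenRows API URL like the one the Request Builder generates.
// The parameter names (apikey, url, premium_proxy, autoparse) come from that URL.
static string BuildZenRowsUrl(string apiKey, string targetUrl, params (string Key, string Value)[] options)
{
    var query = new List<string>
    {
        $"apikey={Uri.EscapeDataString(apiKey)}",
        // percent-encode the target URL so its ':' and '/' don't clash with the query string
        $"url={Uri.EscapeDataString(targetUrl)}",
    };
    query.AddRange(options.Select(o => $"{o.Key}={Uri.EscapeDataString(o.Value)}"));
    return "https://api.zenrows.com/v1/?" + string.Join("&", query);
}

var apiUrl = BuildZenRowsUrl(
    "YOUR_ZENROWS_API_KEY",
    "https://www.amazon.com/PlayStation-5-Console-CFI-1215A01X/dp/B0BCNKKZ91/",
    ("premium_proxy", "true"),
    ("autoparse", "true"));

Console.WriteLine(apiUrl);
```

You could then pass apiUrl to driver.Navigate().GoToUrl() instead of a hard-coded string.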

Now, what about anti-bot measures such as CAPTCHAs that may hinder your Selenium script? Keep in mind that ZenRows not only extends Selenium but can replace it entirely, thanks to its built-in JavaScript rendering capabilities.

The benefit of using only ZenRows is its advanced anti-bot bypass, which helps you access protected pages. To replace Selenium, activate the "AI Anti-bot" feature in the Request Builder and make a call to the target URL using any HTTP client, such as RestSharp. The built-in HttpClient also supports proxy setup and works well with ZenRows.

As a SaaS solution, ZenRows also introduces significant savings in terms of machine costs!

C# Selenium Alternative

The C# version of Selenium is a great tool, but it's not the only .NET package with headless browser capabilities. Here's a list of alternatives to the Selenium C# library:

- ZenRows: The next-generation scraping API with JS rendering, premium proxies, and an advanced anti-bot bypass toolkit.

- Puppeteer: A popular headless browser library written in JavaScript for automating Chromium instances. For C#, there is a port called PuppeteerSharp.

- Playwright: A modern headless browser library developed in JavaScript by Microsoft. Its C# version is playwright-dotnet.

Conclusion

In this Selenium C# tutorial, you explored the fundamentals of controlling headless Chrome. You started with the basics and saw more advanced techniques to become a browser automation expert.

Now you know:

- How to set up a C# Selenium WebDriver project.

- How to use it to scrape data from a dynamic content page.

- What user interactions you can simulate with Selenium.

- The challenges of web scraping and how to tackle them.

No matter how sophisticated your browser automation is, anti-bots can still block it. Avoid them all with ZenRows, a web scraping API with headless browser functionality, IP rotation, and the most advanced anti-scraping bypass available. Retrieving data from dynamic content sites has never been easier. Try ZenRows for free!

Frequent Questions

What Is C# Selenium Used For?

C# Selenium is used for browser automation tasks, such as E2E testing and web scraping. It enables developers to write C# scripts that interact with web pages as a human user would. The library is ideal for navigating through pages, extracting data from them, and performing testing tasks.

Can We Use C# in Selenium?

Yes, C# can be used in Selenium. Not only is this possible, but C# is one of the languages officially supported by the project. The other officially supported languages are Java, Python, Ruby, and JavaScript.

Does Selenium Support C#?

Yes, Selenium supports C# through the official bindings. The browser automation library is available through the Selenium.WebDriver NuGet package. That package is maintained at the same pace as the other official bindings, which means that users always have access to the latest features.
