Scraping Amazon is valuable for e-commerce businesses because information about your competitors and the latest trends is vital.

What Is Amazon Scraping?

Web scraping is the automated collection of data from websites, and Amazon is the biggest online shopping platform. So how can you use web scraping to gain an advantage over your competitors?

This practical tutorial will show how to scrape product information from Amazon using Python!

Does Amazon Allow Web Scraping?

Definitely! But there's a caveat: Amazon uses rate-limiting and can block your IP address if you overburden the website. They also check HTTP headers and will block you if your activity seems suspicious.

If you try to crawl through multiple pages simultaneously, you can get blocked without proxy rotation. Also, Amazon's web pages have different structures. Even different product pages have different HTML structures, which makes it difficult to build a robust web crawling application.

Yet scraping product prices, reviews and listings is legal.

Why Web Scraping from Amazon?

Especially in e-commerce, having as much information as possible is essential. If you can automate the extraction process, you can concentrate more on your competitors and your business.

These are some of the benefits:

Monitoring Products

You can look at the top-selling products in your category. That will allow you to identify current trends in the market, so you can make better-informed decisions about your own products. You'll also be able to spot the products that are losing top sales positions.

Monitoring Competitors

The only way to win the competition is by monitoring your competitors' moves. For example, scraping Amazon product prices will help spot price trends. That will let you identify a better pricing strategy. So you can track the changes and conduct competitor product analysis.

How to Scrape Data From Amazon?

The easiest way to scrape Amazon is:

- Create a free ZenRows account.

- Open the Request Builder.

- Enter the target URL.

- Get the data in HTML or JSON.

ZenRows API handles automatic premium proxy rotation. It also has auto-parse support, automatically extracting everything available on the page! It supports Amazon, eBay, YouTube, Indeed, and more. Learn more about available platforms!

We can use its Amazon scraper to automatically extract the data from the products page. Let's start by installing the ZenRows SDK:

pip install zenrows

It's possible to add extra configuration to customize the API calls. You can learn more from the API documentation. Now, let's automatically scrape data from products!

from zenrows import ZenRowsClient

API_KEY = "YOUR_API_KEY"  # placeholder: replace with your own ZenRows API key
client = ZenRowsClient(API_KEY)
url = "https://www.amazon.com/Crockpot-Electric-Portable-20-Ounce-Licorice/dp/B09BDGFSWS"

# It's possible to specify JavaScript rendering, premium proxies and geolocation.
# You can check the API documentation for further customization.
params = {"premium_proxy": "true", "proxy_country": "us", "autoparse": "true"}
response = client.get(url, params=params)
print(response.json())

Results:

{
  "avg_rating": "4.7 out of 5 stars",
  "category": "Home & Kitche › Kitchen & Dining › Storage & Organizatio › Travel & To-Go Food Containers › Lunch Boxes",
  "description": "Take your favorite meals with you wherever you go! The Crockpot Lunch Crock Food Warmer is a convenient, easy-to-carry, electric lunch box. Plus, with its modern-vintage aesthetic and elegant Black Licorice color, it's stylish, too. It is perfectly sized for one person, and is ideal for carrying and warming meals while you’re on the go. With its 20-ounce capacity, this heated lunch box is perfect whether you're in the office, working from home, or on a road trip. Take your leftovers, soup, oatmeal, and more with you, then enjoy it at the perfect temperature! This portable food warmer features a tight-closing outer lid to help reduce spills, as well as an easy-carry handle, soft-touch coating, and detachable cord. The container is removable for effortless filling, carrying, and storage. Cleanup is easy, too: the inner container and lid are dishwasher-safe.",
  "out_of_stock": false,
  "price": "$29.99",
  "price_without_discount": "$44.99",
  "review_count": "14,963 global ratings",
  "title": "Crockpot Electric Lunch Box, Portable Food Warmer for On-the-Go, 20-Ounce, Black Licorice",
  "features": [
    {"Package Dimensions": "13.11 x 10.71 x 8.58 inches"},
    {"Item Weight": "2.03 pounds"},
    {"Manufacturer": "Crockpot"},
    {"ASIN": "B09BDGFSWS"},
    {"Country of Origin": "China"},
    {"Item model number": "2143869"},
    {"Best Sellers Rank": "#17 in Kitchen & Dining (See Top 100 in Kitchen & Dining) #1 in Lunch Boxes"},
    {"Date First Available": "November 24, 2021"}
  ],
  "ratings": [
    {"5 star": "82%"},
    {"4 star": "10%"},
    {"3 star": "4%"},
    {"2 star": "1%"},
    {"1 star": "2%"}
  ]
}
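
The parsed result keeps everything as strings, and the features and ratings arrive as lists of single-key objects. If you want typed values for analysis, a small post-processing step can help. The sketch below is only one possible approach: the helper names flatten_pairs and normalize_product are hypothetical, and the sample is a shortened copy of the result above.

```python
import re


def flatten_pairs(items):
    """Merge a list of single-key dicts into one dict."""
    merged = {}
    for item in items:
        merged.update(item)
    return merged


def normalize_product(raw):
    """Convert the string fields of a parsed result into typed values."""
    rating = re.search(r"\d+(?:\.\d+)?", raw["avg_rating"])
    reviews = re.sub(r"[^\d]", "", raw["review_count"])
    return {
        "title": raw["title"],
        "avg_rating": float(rating.group()) if rating else None,
        "review_count": int(reviews) if reviews else None,
        "features": flatten_pairs(raw.get("features", [])),
        "ratings": {
            stars: int(share.rstrip("%"))
            for stars, share in flatten_pairs(raw.get("ratings", [])).items()
        },
    }


# Shortened copy of the result shown above
sample = {
    "avg_rating": "4.7 out of 5 stars",
    "review_count": "14,963 global ratings",
    "title": "Crockpot Electric Lunch Box, Portable Food Warmer for On-the-Go, 20-Ounce, Black Licorice",
    "features": [{"Manufacturer": "Crockpot"}, {"ASIN": "B09BDGFSWS"}],
    "ratings": [{"5 star": "82%"}, {"4 star": "10%"}],
}

product = normalize_product(sample)
print(product["avg_rating"], product["review_count"], product["features"]["ASIN"])
# prints: 4.7 14963 B09BDGFSWS
```

With numbers instead of strings, comparing products or tracking changes over time becomes a matter of plain arithmetic.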

As you can see, using the ZenRows API is really easy! But let's also see how to scrape the traditional way!

Web Scraping with BeautifulSoup

This article doesn't require advanced Python skills, but if you have just started learning web scraping, check out our web scraping with Python guide!

A few considerations apply to web scraping in Python:

It's possible to build purely request-based scripts. We can also use browser automation tools such as Selenium for scraping Amazon, but Amazon detects headless browsers rather easily, especially when you don't customize them.

Using frameworks like Selenium is also inefficient, as they're prone to overload the system if you scale up your application.

Thus, we'll use the requests and BeautifulSoup modules in Python to scrape the Amazon products' data.

Let's start by installing the necessary Python packages!

You can use pip to install them:

pip install requests beautifulsoup4

Avoid Getting Blocked While Web Scraping

As we mentioned before, Amazon does allow web scraping. But it blocks the web scraper if many requests are performed. They check HTTP headers and apply rate-limiting to block malicious bots.

It's rather easy to detect if the client is a bot or not from the HTTP headers, so using browser automation tools such as Selenium won't work unless you customize the browser's default settings.

We'll be using a real browser's user-agent, and since we won't send requests too frequently, we won't get rate limited. You should use a proxy server to scrape data at scale.
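
To keep requests infrequent when fetching more than one page, you can pause between them. Here's a minimal sketch of that idea. The function name fetch_all and the delay values are assumptions chosen for illustration, not limits published by Amazon, and the fetch callable stands in for whatever actually downloads a page.

```python
import random
import time


def fetch_all(urls, fetch, sleep=time.sleep, base=2.0, jitter=1.0):
    """Call fetch(url) for each URL, pausing before every request except the first.

    Each pause lasts `base` seconds plus a random extra of up to `jitter` seconds,
    so the requests don't arrive at a perfectly regular rhythm.
    """
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            sleep(base + random.uniform(0, jitter))
        results.append(fetch(url))
    return results


# Demo with stand-ins: str.upper plays the role of the downloader,
# and the pauses are recorded instead of actually slept.
waited = []
pages = fetch_all(["page-1", "page-2", "page-3"], fetch=str.upper, sleep=waited.append)
print(pages, len(waited))
# prints: ['PAGE-1', 'PAGE-2', 'PAGE-3'] 2
```

In a real script you would pass a function that performs the HTTP request and leave sleep at its default.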

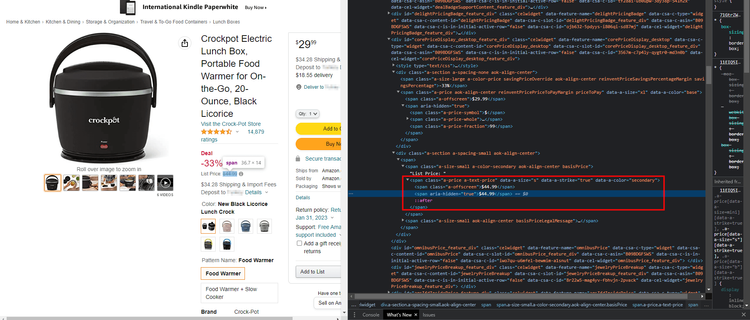

Scraping Product Data From Amazon

In this article, we'll scrape data from this lunch box product:

We'll extract the following:

- Product title.

- Rating.

- Price.

- Discount (if there's any).

- Full price (if there's a discount, price will be the discounted price).

- Stock status (out of stock or not).

- Product description.

- Related Items.

As mentioned, Amazon checks the HTTP headers, so sending requests with the default settings won't work. You can check this out by sending a simple request with Python:

import requests

response = requests.get("https://www.amazon.com/Sabrent-4-Port-Individual-Switches-HB-UM43/dp/B00JX1ZS5O")

print(response.status_code) # prints 503

The response has the status code "503 Service Unavailable". There's also this message in the response's HTML:

To discuss automated access to Amazon data please contact [email protected].

For information about migrating to our APIs refer to our Marketplace APIs at https://developer.amazonservices.com/ref=rm_5_sv, or our Product Advertising API at https://affiliate-program.amazon.com/gp/advertising/api/detail/main.html/ref=rm_5_ac for advertising use cases.
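
A scraper can check for this situation before trying to parse anything. The sketch below assumes that a 503 status code or the message quoted above is enough to recognize a blocked response. The helper name looks_blocked is hypothetical, and Amazon may use other block pages that this check doesn't cover.

```python
# Text taken from the block page quoted above
BLOCK_MARKERS = (
    "To discuss automated access to Amazon data please contact",
)


def looks_blocked(status_code, html):
    """Return True when the response resembles the block page described above."""
    if status_code == 503:
        return True
    return any(marker in html for marker in BLOCK_MARKERS)


print(looks_blocked(503, ""))
# prints: True
print(looks_blocked(200, "<span id='productTitle'>Lunch Box</span>"))
# prints: False
```

Checking the body as well as the status code is a defensive choice, in case the message ever shows up with a different status.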

So, what can we do to bypass Amazon's protection system?

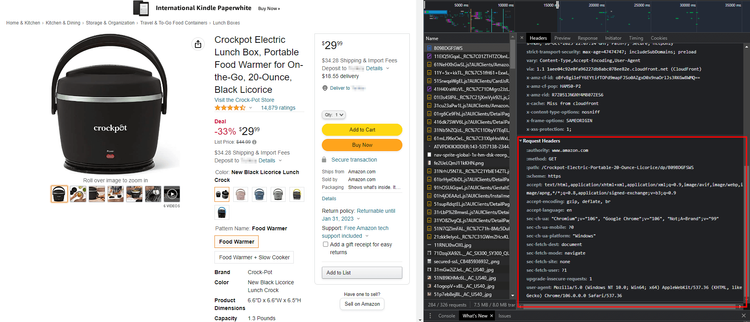

In any web scraping project, imitating a real user is critical. Thus, we can use our standard HTTP headers and remove unnecessary headers:

We need the accept, accept-encoding, accept-language, and user-agent headers. Amazon checks headers such as user-agent to block suspicious clients.

They also use rate limiting, so your IP can get blocked if you send frequent requests. You can learn more about rate limiting from our rate limit bypassing guide!

First, we'll define a class for our scraper:

from bs4 import BeautifulSoup
from requests import Session


class Amazon:
    def __init__(self):
        self.sess = Session()
        self.headers = {
            "accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9",
            # note: requests can only decode "br" responses if the brotli package is installed
            "accept-encoding": "gzip, deflate, br",
            "accept-language": "en",
            "user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/106.0.0.0 Safari/537.36",
        }
        self.sess.headers = self.headers

The Amazon class creates a Session object, which persists cookies and headers across our requests. We can also see that the custom user-agent and other headers are used as the Session's headers. Since we'll need to get the webpage, let's define a get method:

class Amazon:
    # ...

    def get(self, url):
        response = self.sess.get(url)
        assert response.status_code == 200, f"Response status code: {response.status_code}"
        splitted_url = url.split("/")
        self.id = splitted_url[-1].split("?")[0]
        self.product_page = BeautifulSoup(response.text, "html.parser")
        return response

This method connects to the website using the Session above. If the returned status code is not 200, that means there's an error, so we raise an AssertionError.
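
Keep in mind that assert statements are skipped when Python runs with the -O flag, and a single failed request doesn't always mean a permanent block. As an alternative, here's a rough sketch that retries with a growing delay and raises a dedicated exception at the end. The names get_with_retries and BlockedError, as well as the retry count and delays, are hypothetical choices for illustration.

```python
import time
from types import SimpleNamespace


class BlockedError(RuntimeError):
    """Raised when every attempt returned a non-200 status code."""


def get_with_retries(fetch, url, attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url) until it returns status 200, doubling the wait after each failure."""
    last_status = None
    for attempt in range(attempts):
        response = fetch(url)
        last_status = response.status_code
        if last_status == 200:
            return response
        if attempt < attempts - 1:
            sleep(base_delay * 2 ** attempt)
    raise BlockedError(f"{url} returned {last_status} after {attempts} attempts")


# Demo with a fake downloader that fails twice and then succeeds;
# the waits are recorded instead of actually slept.
statuses = iter([503, 503, 200])
waits = []
response = get_with_retries(
    lambda url: SimpleNamespace(status_code=next(statuses)),
    "https://example.com",
    sleep=waits.append,
)
print(response.status_code, waits)
# prints: 200 [1.0, 2.0]
```

Inside the class, the fetch argument could simply be self.sess.get.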

If the request succeeds, we split the request URL and extract the product ID. The BeautifulSoup object is defined for the product page. It'll let us search HTML elements and extract data from them.
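
Taking the last URL segment works for the link used in this article, but it returns something else when the URL carries extra path segments after the ID. A regular expression is one way to make this step more tolerant. The sketch below assumes that the product ID is a 10-character alphanumeric code following /dp/ or /gp/product/. The function name extract_asin and the second example URL are made up for illustration.

```python
import re
from urllib.parse import urlparse

# Assumption: the ID is 10 uppercase letters or digits after /dp/ or /gp/product/
ASIN_PATTERN = re.compile(r"/(?:dp|gp/product)/([A-Z0-9]{10})(?:/|$)")


def extract_asin(url):
    """Return the product ID found in the URL path, or None if there isn't one."""
    match = ASIN_PATTERN.search(urlparse(url).path)
    return match.group(1) if match else None


print(extract_asin("https://www.amazon.com/Crockpot-Electric-Portable-20-Ounce-Licorice/dp/B09BDGFSWS"))
# prints: B09BDGFSWS
print(extract_asin("https://www.amazon.com/dp/B09BDGFSWS/ref=sr_1_1?keywords=lunch"))
# prints: B09BDGFSWS
print(extract_asin("https://www.amazon.com/"))
# prints: None
```

For the second URL, splitting on "/" and taking the last segment would have produced ref=sr_1_1 instead of the product ID.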

Now, we'll define the data_from_product_page method. That will extract the relevant information from the product's page. We can use the find method and specify the element attributes to search for an HTML element.

How do we know what to search, though?

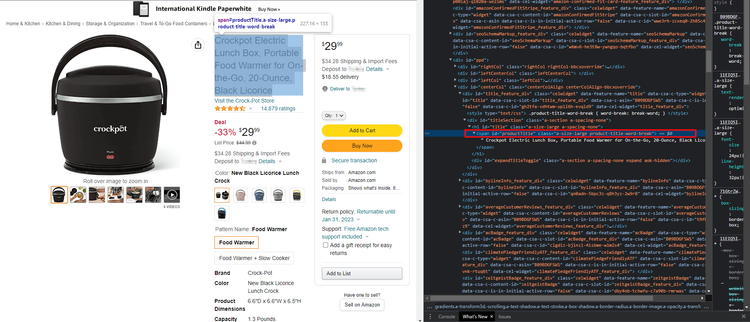

By using the developer console! We can use the developer console to find out which elements and attributes we need.

We can right-click and inspect the element:

As we can see, the product title is written in a span element with a productTitle value in the id attribute. So let's get it!

# "find" returns a Tag object, so we use "text" to
# extract the data inside of the element.
# We also use strip to remove whitespace at the start and end of the text.
title = self.product_page.find("span", attrs={"id": "productTitle"}).text.strip()

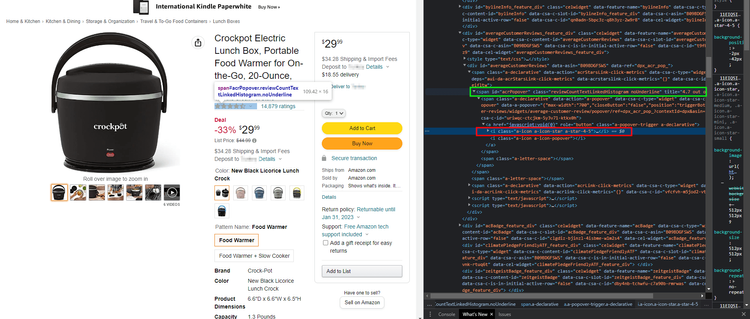

To extract the ratings, we can use the same method! When you inspect the average rating part, you'll first see an i element. Instead, we'll use the span element a little above (indicated by the green rectangle), because its title attribute already has the average rating text and it has a unique class value.

We'll search the span elements with the reviewCountTextLinkedHistogram class. Instead of extracting the text inside of the element, let's use the title attribute:

Let's add the code to our script:

# The element's attributes are read as a dictionary, so we can get the title by passing its key.
# Of course, we also use strip here.
rating = self.product_page.find("span", attrs={"class": "reviewCountTextLinkedHistogram"})["title"].strip()
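
The rating comes back as text such as "4.7 out of 5 stars". If you plan to compare or average ratings, you'll probably want a number. Here's a small sketch of that conversion. The helper name parse_rating is hypothetical, and it assumes the English wording shown in this article.

```python
import re


def parse_rating(text):
    """Turn "4.7 out of 5 stars" into (4.7, 5); return None for any other wording."""
    match = re.fullmatch(r"\s*(\d+(?:\.\d+)?) out of (\d+) stars\s*", text)
    if match is None:
        return None
    return float(match.group(1)), int(match.group(2))


print(parse_rating("4.7 out of 5 stars"))
# prints: (4.7, 5)
print(parse_rating("No ratings yet"))
# prints: None
```

Returning None for unexpected text lets the caller decide how to handle pages in other languages or products without ratings.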

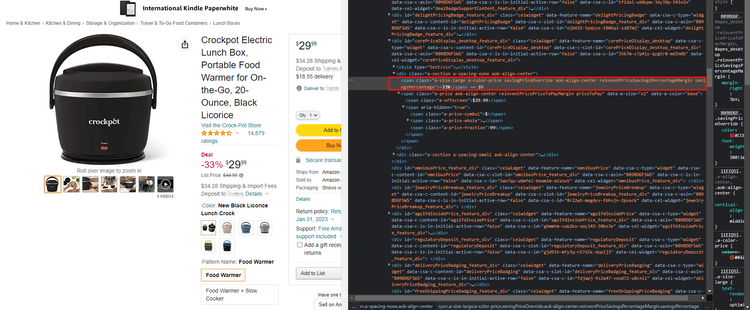

Next comes the price. The price to pay sits in a span with the a-price class combined with either the reinventPricePriceToPayMargin and priceToPay classes or the apexPriceToPay class, depending on the page layout. The amount itself is in a nested span with the a-offscreen class:

# first, find the element by specifying a selector that covers both layouts
price_span = self.product_page.select_one("span.a-price.reinventPricePriceToPayMargin.priceToPay, span.a-price.apexPriceToPay")
# then, extract the price from the span inside of it
price = price_span.find("span", {"class": "a-offscreen"}).text.strip()

For the discount, there are lots of classes!

The reinventPriceSavingsPercentageMargin and savingsPercentage classes are unique to the discount element, so we'll use those to search for the discount. There's also the total price written below the listing price when there's a discount:

We couldn't find any other element with the a-price and a-text-price classes. So let's use them to find the actual listing price. Of course, since it's uncertain if there's a discount, we extract that price only if there's a discount:

# get the element, "find" returns None if the element could not be found
discount = self.product_page.find("span", attrs={"class": "reinventPriceSavingsPercentageMargin savingsPercentage"})

# if the discount is found, extract the full price
# else, just set the full price to the current price and set discount = False
if discount:
    discount = discount.text.strip()
    price_without_discount = self.product_page.find("span", attrs={"class": "a-price a-text-price"}).text.strip()
else:
    price_without_discount = price
    discount = False
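
Prices are extracted as text, for example "$29.99". To track price changes or to double-check the discount shown on the page, you can convert them to numbers. The following sketch assumes US-style prices with a dollar sign and comma thousands separators. The helper names parse_price and discount_percent are hypothetical.

```python
from decimal import Decimal


def parse_price(text):
    """Convert a price string such as "$1,299.99" into a Decimal."""
    cleaned = text.strip().lstrip("$").replace(",", "")
    return Decimal(cleaned)


def discount_percent(price, full_price):
    """Return the percentage saved relative to the full price, with one decimal place."""
    if full_price <= 0:
        return Decimal("0.0")
    saved = (full_price - price) / full_price * 100
    return saved.quantize(Decimal("0.1"))


price = parse_price("$29.99")
full_price = parse_price("$44.99")
print(discount_percent(price, full_price))
# prints: 33.3
```

Decimal is used instead of float so that amounts like 29.99 are stored exactly.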

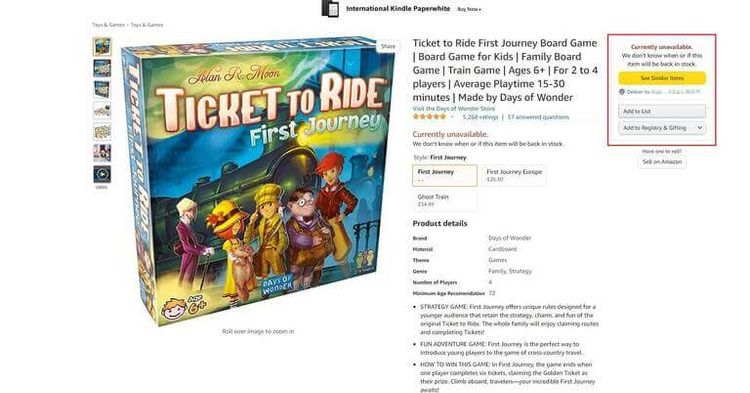

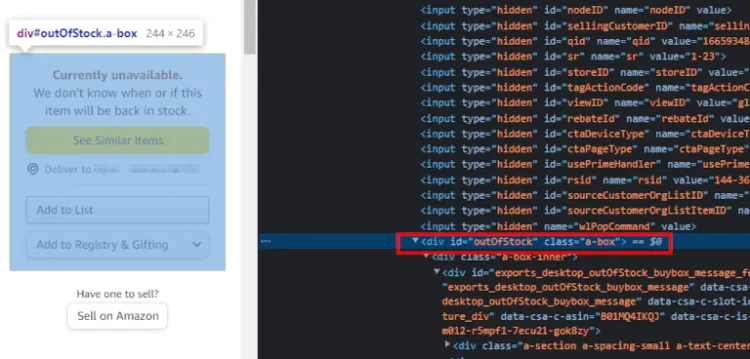

In order to check if the product is out of stock, we can check if the part in the rectangle is on the page.

Let's inspect the box:

We need to check if a div element with id=outOfStock exists. Let's add this snippet to our script:

# simply check if the item is out of stock or not:
# "find" returns None when the div is missing
out_of_stock = self.product_page.find("div", {"id": "outOfStock"}) is not None

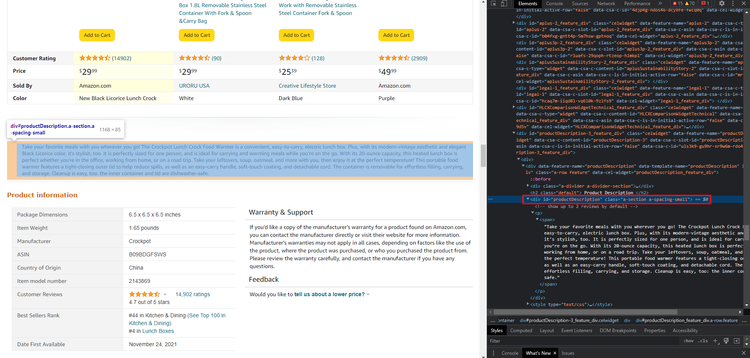

All right! We've made it this far. The only thing left is the product description, and then we're done!

The product description is stored in the div element, which has id="productDescription":

We can easily extract the description:

description = self.product_page.find("div", {"id": "productDescription"}).text
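
The text of a div often carries leading newlines and repeated spaces from the page markup. If you want a clean single-line description, you can collapse the whitespace. This is a tiny sketch with a hypothetical helper name, clean_text:

```python
def clean_text(text):
    """Collapse runs of whitespace, including newlines, into single spaces."""
    return " ".join(text.split())


print(clean_text("\n  Take your favorite meals\n   with you wherever you go!  "))
# prints: Take your favorite meals with you wherever you go!
```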

Nice!

Most of the time, you'll have to crawl through relevant items. Your crawler should also collect data about other products as you need to conduct a proper analysis. You can store the related item's links and extract information from them later.

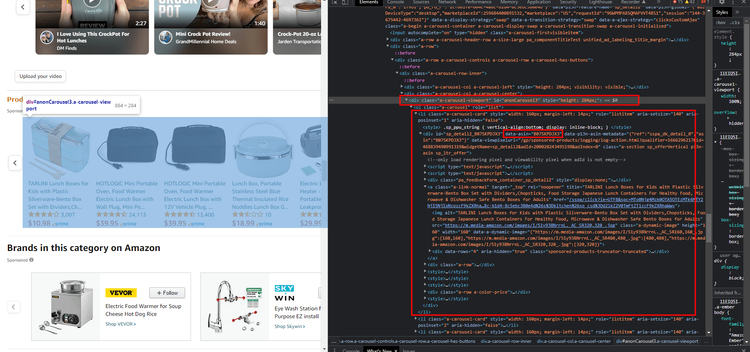

As you can see below, the related items are stored in a carousel slider div. It seems we can use the a-carousel-viewport class of the div. We'll get the li elements and then collect their ASIN values. An ASIN (Amazon Standard Identification Number) identifies a product on Amazon, so we can use it to construct product URLs.

Now, add the code to our script:

# extract the related items carousel's first page
carousel = self.product_page.find("div", {"class": "a-carousel-viewport"})
related_items = carousel.find_all("li")
related_item_asins = [item.find("div")["data-asin"] for item in related_items]

# of course, we need to get item links
# (note: prepend "https://" before requesting these links, since requests needs a scheme)
related_item_links = []
for asin in related_item_asins:
    link = "www.amazon.com/dp/" + asin
    related_item_links.append(link)
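
If you intend to request the related items later, the links need a scheme, because requests rejects URLs without one. It can also help to skip empty or repeated ASINs. Here's one way this could look. The function name build_product_links is hypothetical, and the idea that a carousel can contain empty or duplicate entries is an assumption.

```python
def build_product_links(asins, domain="www.amazon.com"):
    """Return one https product URL per distinct, non-empty ASIN, in first-seen order."""
    links = []
    seen = set()
    for asin in asins:
        asin = (asin or "").strip()
        if not asin or asin in seen:
            continue
        seen.add(asin)
        links.append(f"https://{domain}/dp/{asin}")
    return links


print(build_product_links(["B09YXSNCTM", "", "B099PNWYRH", "B09YXSNCTM"]))
# prints: ['https://www.amazon.com/dp/B09YXSNCTM', 'https://www.amazon.com/dp/B099PNWYRH']
```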

What a ride! Now let's finish the script we've prepared till now:

from bs4 import BeautifulSoup
from requests import Session


class Amazon:
    def __init__(self):
        self.sess = Session()
        self.headers = {
            "accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9",
            # note: requests can only decode "br" responses if the brotli package is installed
            "accept-encoding": "gzip, deflate, br",
            "accept-language": "en",
            "user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/106.0.0.0 Safari/537.36",
        }
        self.sess.headers = self.headers

    def get(self, url):
        response = self.sess.get(url)
        assert response.status_code == 200, f"Response status code: {response.status_code}"
        splitted_url = url.split("/")
        self.product_page = BeautifulSoup(response.text, "html.parser")
        self.id = splitted_url[-1].split("?")[0]
        return response

    def data_from_product_page(self):
        # "find" returns a Tag object, so we use "text" to
        # extract the data inside of the element.
        # We also use strip to remove whitespace at the start and end of the text.
        title = self.product_page.find("span", attrs={"id": "productTitle"}).text.strip()

        # The element's attributes are read as a dictionary, so we can get the title by passing its key.
        # Of course, we also use strip here.
        rating = self.product_page.find("span", attrs={"class": "reviewCountTextLinkedHistogram"})["title"].strip()

        # First, find the element by specifying a selector with two options in this case
        price_span = self.product_page.select_one("span.a-price.reinventPricePriceToPayMargin.priceToPay, span.a-price.apexPriceToPay")
        # Then, extract the pricing from the span inside of it
        price = price_span.find("span", {"class": "a-offscreen"}).text.strip()

        # Get the element, "find" returns None if the element could not be found
        discount = self.product_page.find("span", attrs={"class": "reinventPriceSavingsPercentageMargin savingsPercentage"})

        # If the discount is found, extract the full price
        # Else, just set the full price to the price found above and set discount = False
        if discount:
            discount = discount.text.strip()
            price_without_discount = self.product_page.find("span", attrs={"class": "a-price a-text-price"}).text.strip()
        else:
            price_without_discount = price
            discount = False

        # Simply check if the item is out of stock or not
        out_of_stock = self.product_page.find("div", {"id": "outOfStock"}) is not None

        # Get the description
        description = self.product_page.find("div", {"id": "productDescription"}).text

        # Extract the related items carousel's first page
        carousel = self.product_page.find("div", {"class": "a-carousel-viewport"})
        related_items = carousel.find_all("li")
        related_item_asins = [item.find("div")["data-asin"] for item in related_items]

        # Of course, we need to return the product URLs
        # So let's construct them!
        # (note: prepend "https://" before requesting these links, since requests needs a scheme)
        related_item_links = []
        for asin in related_item_asins:
            link = "www.amazon.com/dp/" + asin
            related_item_links.append(link)

        extracted_data = {
            "title": title,
            "rating": rating,
            "price": price,
            "discount": discount,
            "price without discount": price_without_discount,
            "out of stock": out_of_stock,
            "description": description,
            "related items": related_item_links
        }
        return extracted_data

Let's try it using the product's link:

scraper = Amazon()
scraper.get("https://www.amazon.com/Crockpot-Electric-Portable-20-Ounce-Licorice/dp/B09BDGFSWS")
data = scraper.data_from_product_page()
for k, v in data.items():
    print(f"{k}:{v}")

And the final output:

title:Crockpot Electric Lunch Box, Portable Food Warmer for On-the-Go, 20-Ounce, Black Licorice

rating:4.7 out of 5 stars

price:$29.99

discount:False

price without discount:$29.99

out of stock:False

description:

Take your favorite meals with you wherever you go! The Crockpot Lunch Crock Food Warmer is a convenient, easy-to-carry, electric lunch box. Plus, with its modern-vintage aesthetic and elegant Black Licorice color, it's stylish, too. It is perfectly sized for one person, and is ideal for carrying and warming meals while you’re on the go. With its 20-ounce capacity, this heated lunch box is perfect whether you're in the office, working from home, or on a road trip. Take your leftovers, soup, oatmeal, and more with you, then enjoy it at the perfect temperature! This portable food warmer features a tight-closing outer lid to help reduce spills, as well as an easy-carry handle, soft-touch coating, and detachable cord. The container is removable for effortless filling, carrying, and storage. Cleanup is easy, too: the inner container and lid are dishwasher-safe.

related items:['www.amazon.com/dp/B09YXSNCTM', 'www.amazon.com/dp/B099PNWYRH', 'www.amazon.com/dp/B09B14821T', 'www.amazon.com/dp/B074TZKCCV', 'www.amazon.com/dp/B0937KMYT8', 'www.amazon.com/dp/B07T7F5GHX', 'www.amazon.com/dp/B07YJFB8GY']

Of course, Amazon's page structure is quite complex, so different product pages may have different structures. Thus, you'll need to repeat this inspection process for each layout to build a solid web scraper.

Congratulations! You built your own Amazon product scraper if you followed along!

That was quite a hassle; well, how about using a service completely designed for web scraping?

Conclusion

Amazon's web page structures are complex, and you may come across a differently structured page. You should find out the differences and update your application accordingly.

You'll also need to randomize HTTP headers and use premium proxy servers while scaling up. Otherwise, you'd get detected easily.
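
Randomizing headers can be as simple as picking a different user-agent for each request. The sketch below shows the idea only. The first user-agent is the one used in this article, while the other two are illustrative variations that are assumptions, so you'd want to maintain your own up-to-date list. The function name random_headers is hypothetical.

```python
import random

USER_AGENTS = [
    # user-agent used earlier in this article
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/106.0.0.0 Safari/537.36",
    # illustrative variations (assumptions, not a vetted list)
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/106.0.0.0 Safari/537.36",
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/106.0.0.0 Safari/537.36",
]

BASE_HEADERS = {
    "accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
    "accept-language": "en",
}


def random_headers(rng=random):
    """Return a copy of the base headers with a randomly chosen user-agent."""
    headers = dict(BASE_HEADERS)
    headers["user-agent"] = rng.choice(USER_AGENTS)
    return headers


headers = random_headers()
print(headers["user-agent"] in USER_AGENTS)
# prints: True
```

With requests, such headers can be passed per call through the headers argument, and a proxy can be set through the proxies argument.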

In this article, you've learned:

- The importance of data scraping from Amazon.

- The methods Amazon uses to block web scrapers.

- Scraping product prices, descriptions, and more data from Amazon.

- How ZenRows can help you with its auto-parse feature.

In the end, scaling and building efficient web scrapers is difficult. Even the script we've prepared will fail when the page structure changes. You can use ZenRows' web scraping API with auto-parser support, which will automatically collect the most valuable part of the data.
