Article scraping is a valuable step in business and research: it surfaces relevant data from a wide range of online sources. Want to begin extracting information from online publications?

In this tutorial, you'll learn how to scrape articles and extract the information from each one.

How to Choose the Right Article Scraper Tool

You need the right article scraper tool to extract content from news websites, blogs, and other publications. But which one is the best for you? The choice depends on your use case and level of expertise.

You have two options: code and no-code solutions.

As the name suggests, no-code article scrapers don't require coding, and most offer point-and-click content extractors. While they're beginner-friendly, they offer less flexibility.

The coding option involves writing web scraping code in any web scraping language. Although more technical, the code path offers the flexibility to get exactly what you need.

The table below compares the two options.

| Consideration | No-code | Code |
|---|---|---|
| Content scraping capability | Offers less control and may be limited | Get exactly what you need |
| Scalability | Generally limited and may not be suitable for complex or large-scale scraping | Scalable, allows extensibility with other libraries and tools, ideal for large-scale web scraping |
| Cost | Generally requires subscription fees, cost increases with feature requests and may be expensive for edge cases | No subscription fees are required. Code offers higher cost control and can be cost-effective for complex scenarios |
| Ease of use | Beginner-friendly | Requires programming and can be technical |
| Ease of maintenance | Depends on the service provider, no access to the source code | You have access to the source code and can maintain it at scale |
| Data extraction accuracy | Limited and depends on the underlying algorithm used by the service provider | Offers fine-grained control over the data extraction process, allows custom algorithms |
| Dynamic content handling | Generally depends on the service provider, may incur extra costs | Free headless browsing implementation is available |
| Avoid getting blocked | Limited and depends on the no-code provider. The implementation may require extra costs if available | Allows anti-CAPTCHA solutions, premium IPs, and many others |

Step-by-Step Guide to Article Scraping

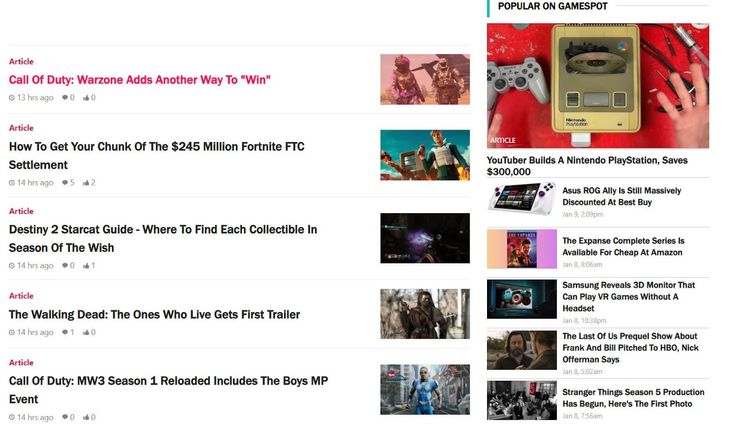

You’ll learn how to use Python to scrape article content in this section. We'll use GameSpot as a demo website and implement pagination to extract the title, author's name, published date, and article content from each article on the website.

See the website’s layout below:

Let's get started!

Prerequisites

We'll use Python's Requests library as the HTTP client and BeautifulSoup as the HTML parser. To begin, install each package using pip:

pip install beautifulsoup4 requests

Step 1: Get the Page HTML

Let’s scrape the entire HTML of the target website before diving deeper into the content extraction.

The code below requests the target web page and parses its HTML using BeautifulSoup:

# import the required libraries
from bs4 import BeautifulSoup
import requests

base_URL = 'https://www.gamespot.com'

# get the news category
category_URL = f'{base_URL}/news'

response = requests.get(category_URL)

data_links = []

if response.status_code == 200:
    # parse HTML content with BeautifulSoup
    soup = BeautifulSoup(response.content, 'html.parser')
    print(soup)
else:
    print(f'An error has occurred with status {response.status_code}')

This outputs the entire HTML page:

<!DOCTYPE html>

<!-- ... -->

<title>Video Game News, Game News, Entertainment News - GameSpot</title>

<header class="js-masthead masthead" id="masthead">

<div class="js-masthead-overlay masthead-overlay masthead-nav-overlay"></div>

<nav class="js-masthead-rows masthead-rows flexbox-column flexbox-flex-even">

<div class="promo-masthead-list flexbox-row flexbox-align-center text-xsmall border-bottom-grayscale--thin hide-scrollbar">

<span class="promo-masthead-list__column">

<a class="js-click-tag promo-masthead-list__link" data-click-tag="tracking|header|content" href="https://www.gamespot.com/articles/playstation-plus-extra-and-premium-games-for-january-announced/1100-6520247/">

PlayStation Plus Extra/Premium January Games

</a>

</span>

</div>

</nav>

</header>

<!-- ... -->

We’re good to go!

Step 2: Get an Article List

The next step is to get a list of article URLs from the website's news category. The logic is to iterate through each article card and extract its unique URL.

Let's inspect the target website to see its DOM layout.

Each article is inside a link card, and the website uses pagination. So, we'll start with the first page and scale to other pages in the next section.

The code below sends a request to the news category and parses its HTML content using BeautifulSoup. It then iterates through the article cards to extract unique URLs.

Finally, we merge each extracted URL with the base URL and append it to the empty data_links list. This will allow us to request each article link iteratively and extract the desired content later.

# import the required libraries
from bs4 import BeautifulSoup
import requests

base_URL = 'https://www.gamespot.com'

# get the news category
category_URL = f'{base_URL}/news'

response = requests.get(category_URL)

data_links = []

if response.status_code == 200:
    # parse HTML content with BeautifulSoup
    soup = BeautifulSoup(response.content, 'html.parser')

    # get link cards
    article_links = soup.find_all('a', class_='card-item__link')

    # iterate through link cards to get article unique hrefs
    for link in article_links:
        href = link.get('href')

        # merge article's unique URL with base URL and append to the empty list
        data_links.append(base_URL + href)
else:
    print(f'An error has occurred with status {response.status_code}')

print(data_links)

The code outputs all the article URLs on the first page in a list, as shown:

[

'https://www.gamespot.com/articles/where-is-xur-today-december-29-january-2-destiny-2-exotic-items-and-xur-location-guide/1100-6520069/',

'https://www.gamespot.com/articles/playstation-plus-free-games-for-january-2024-revealed/1100-6520068/',

'https://www.gamespot.com/articles/destiny-2-starcat-guide-where-to-find-each-collectible-in-season-of-the-wish/1100-6519536/',

#... other URLs omitted for brevity

]

That works! But as mentioned earlier, we also want to scale to other pages. Let's see how to do that.
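One caveat before moving on: the `base_URL + href` concatenation assumes every `href` is a relative path and that no card appears twice. A minimal sketch of a more defensive merge is shown below, using the standard library's `urljoin`. The helper name `build_article_urls` and the sample hrefs are made up for illustration and are not taken from the target website.

```python
from urllib.parse import urljoin

base_URL = 'https://www.gamespot.com'

def build_article_urls(hrefs, base=base_URL):
    """Resolve hrefs against the base URL and drop duplicates, keeping order."""
    seen = set()
    urls = []
    for href in hrefs:
        if not href:
            continue  # skip anchors that have no href attribute
        # urljoin leaves absolute URLs untouched and resolves relative ones
        url = urljoin(base, href)
        if url not in seen:
            seen.add(url)
            urls.append(url)
    return urls

# hypothetical hrefs, for illustration only
sample_hrefs = [
    '/articles/example-one/1100-0000001/',
    'https://www.gamespot.com/articles/example-two/1100-0000002/',
    '/articles/example-one/1100-0000001/',
    None,
]
print(build_article_urls(sample_hrefs))
```

In the scraper, this would take the list of `link.get('href')` values in place of the inline concatenation.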

Step 3: Expand Your Article List

You'll need to crawl the website to expand the article URL list. This is pretty straightforward since the target website uses pagination.

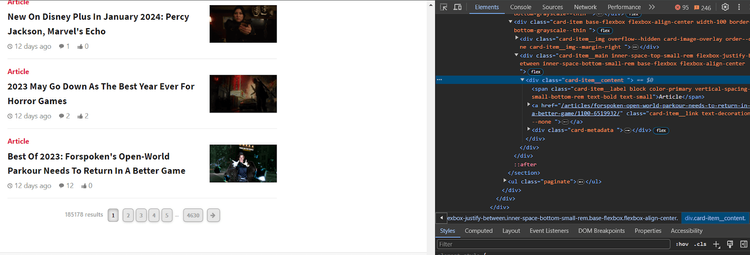

But you must first understand how the website navigates pages in its URL. For instance, see how it routes page 2 in the URL box:

So the navigation format is https://www.gamespot.com/news/?page=2. You'll have to customize your code to append the page number to the category URL and extract the article links incrementally.
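As a small illustration of that URL format, the sketch below builds the listing URL for a given page number. The helper name `page_url` is hypothetical, and the trailing `/?page=` shape is copied from the URL shown above.

```python
from urllib.parse import urlencode

category_URL = 'https://www.gamespot.com/news'

def page_url(page_number, category=category_URL):
    """Return the listing URL for a page, following the /news/?page=N format."""
    query = urlencode({'page': page_number})
    return f'{category}/?{query}'

# print the URLs for the first three pages
for page in range(1, 4):
    print(page_url(page))
```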

Let's modify the code in the previous section to obtain all article links from pages 1 to 10.

In the code below, we set the initial page count to 1 and increment it on each pass through the loop. The loop appends the current page number to the category URL, from page 1 to page 10.

# import the required libraries
from bs4 import BeautifulSoup
import requests

base_URL = 'https://www.gamespot.com'

# get the news category
category_URL = f'{base_URL}/news'

# set the initial page count to 1
page_count = 1

data_links = []

# fetch article links from page 1 to 10
for i in range(0, 10):
    # append the page number to the category URL to get the current page
    current_page = f'{category_URL}?page={page_count}'
    response = requests.get(current_page)

    if response.status_code == 200:
        soup = BeautifulSoup(response.content, 'html.parser')

        # get link cards
        article_links = soup.find_all('a', class_='card-item__link')

        # iterate through link cards to get article unique hrefs
        for link in article_links:
            href = link.get('href')

            # merge article's unique URL with the base URL
            data_links.append(base_URL + href)
    else:
        print(f'Error fetching links for page {page_count}: {response.status_code}')

    # increment the page count
    page_count += 1

print(data_links)

As expected, the code extracts all article URLs up to page 10:

[

'https://www.gamespot.com/articles/cd-projekt-red-is-not-interested-in-being-acquired/1100-6520072/',

'https://www.gamespot.com/articles/mickey-mouse-must-be-exterminated-in-new-horror-game-infestation-88/1100-6520070/',

#... other URLs omitted for brevity

'https://www.gamespot.com/articles/warhammer-40k-rogue-trader-companion-tier-list-guide/1100-6519660/',

'https://www.gamespot.com/articles/nintendo-switch-buying-guide-best-deals-holiday-2023/1100-6518905/'

]

You just crawled 10 pages using Python's Requests and BeautifulSoup. Keep up the good work!
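The fixed range of 10 assumes the category has at least 10 pages. A hedged sketch of one alternative is to stop as soon as a page yields no links. Here `crawl_listing_pages` and `fake_fetch_links` are hypothetical names, and the fake fetcher stands in for the request-and-parse step so the example runs without network access.

```python
def crawl_listing_pages(fetch_links, max_pages=10):
    """Collect links page by page, stopping early at the first empty page."""
    all_links = []
    for page in range(1, max_pages + 1):
        links = fetch_links(page)
        if not links:
            break  # no cards on this page, so assume the listing has ended
        all_links.extend(links)
    return all_links

def fake_fetch_links(page):
    """Stand-in for the real request: a made-up two-page listing."""
    fake_site = {
        1: ['/articles/a/', '/articles/b/'],
        2: ['/articles/c/'],
    }
    return fake_site.get(page, [])

print(crawl_listing_pages(fake_fetch_links))
```

In the real scraper, `fetch_links` would wrap the `requests.get` and `find_all` calls from the code above.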

Next is the content extraction phase.

Step 4: Extract All Articles' Data

The data extraction is easy once all the article links from all 10 pages are ready. We'll modify the previous code to achieve this.

First, we define a function that extracts the desired content from an article's URL by locating elements by tag and class name. The function stores the scraped values in a new data dictionary for each article and appends that dictionary to the data_list list:

# import the required libraries
from bs4 import BeautifulSoup
import requests

base_URL = 'https://www.gamespot.com'
category_URL = f'{base_URL}/news'

page_count = 1

data_list = []

def article_scraper(article_url):
    # create a new dictionary for each article
    data = {}

    try:
        response = requests.get(article_url)
        soup = BeautifulSoup(response.content, 'html.parser')

        # extract article title, handle NoneType
        title_element = soup.find('h1', class_='news-title')
        data['title'] = title_element.text.strip() if title_element else ''

        # extract published date, handle NoneType
        published_date_element = soup.find('time')
        data['published_date'] = published_date_element['datetime'] if published_date_element else ''

        # extract author, handle NoneType
        author_element = soup.find('span', class_='byline-author')
        data['author'] = author_element.text.strip() if author_element else ''

        # extract content, handle NoneType
        content_element = soup.find('div', class_='content-entity-body')
        data['content'] = content_element.text.strip() if content_element else ''

        # append the data for this article to the list
        data_list.append(data)
    except requests.RequestException as e:
        print(f'Error fetching article data for {article_url}: {e}')

Next, execute the data extraction function inside a loop that obtains the article URLs from the first 10 pages. This loop works by incrementing the page count dynamically in the URL.

Add the following to the above code:

# fetch article links from page 1 to 10
for i in range(0, 10):
    # append the page number to the category URL to get the current page
    current_page = f'{category_URL}?page={page_count}'
    response = requests.get(current_page)

    if response.status_code == 200:
        soup = BeautifulSoup(response.content, 'html.parser')

        # get link cards
        article_links = soup.find_all('a', class_='card-item__link')

        # iterate through link cards to get article unique hrefs
        for link in article_links:
            href = link.get('href')

            # merge article's unique URL with the base URL
            article_url = base_URL + href

            # execute the scraping function
            article_scraper(article_url)
    else:
        print(f'Error fetching links for page {page_count}: {response.status_code}')

    # increment the page count
    page_count += 1

print(data_list)

Your final code should look like this:

# import the required libraries
from bs4 import BeautifulSoup
import requests

base_URL = 'https://www.gamespot.com'
category_URL = f'{base_URL}/news'

page_count = 1

data_list = []

def article_scraper(article_url):
    # create a new dictionary for each article
    data = {}

    try:
        response = requests.get(article_url)
        soup = BeautifulSoup(response.content, 'html.parser')

        # extract article title, handle NoneType
        title_element = soup.find('h1', class_='news-title')
        data['title'] = title_element.text.strip() if title_element else ''

        # extract published date, handle NoneType
        published_date_element = soup.find('time')
        data['published_date'] = published_date_element['datetime'] if published_date_element else ''

        # extract author, handle NoneType
        author_element = soup.find('span', class_='byline-author')
        data['author'] = author_element.text.strip() if author_element else ''

        # extract content, handle NoneType
        content_element = soup.find('div', class_='content-entity-body')
        data['content'] = content_element.text.strip() if content_element else ''

        # append the data for this article to the list
        data_list.append(data)
    except requests.RequestException as e:
        print(f'Error fetching article data for {article_url}: {e}')

# fetch article links from page 1 to 10
for i in range(0, 10):
    # append the page number to the category URL to get the current page
    current_page = f'{category_URL}?page={page_count}'
    response = requests.get(current_page)

    if response.status_code == 200:
        soup = BeautifulSoup(response.content, 'html.parser')

        # get link cards
        article_links = soup.find_all('a', class_='card-item__link')

        # iterate through link cards to get article unique hrefs
        for link in article_links:
            href = link.get('href')

            # merge article's unique URL with the base URL
            article_url = base_URL + href

            # execute the scraping function
            article_scraper(article_url)
    else:
        print(f'Error fetching links for page {page_count}: {response.status_code}')

    # increment the page count
    page_count += 1

print(data_list)

Executing the code extracts the desired content like so:

[

{'title': 'Star Wars Outlaws Release Date Set For Late 2024', 'published_date': '2024-01-02T10:10:00-0800', 'author': 'Eddie Makuch', 'content': 'Star Wars Outlaws, the new...'},

#... other data omitted for brevity

{'title': 'Warhammer 40K: Rogue Trader - Trading And Reputation Guide', 'published_date': '2023-12-06T05:00:00-0800', 'author': 'Jason Rodriguez', 'content': 'The Warhammer 40K: Rogue...'}

]
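The `published_date` values above are plain strings. If you need to sort or filter by date, one option is to parse them into `datetime` objects. The sketch below assumes every value follows the format seen in this output, and the helper name `parse_published_date` is hypothetical.

```python
from datetime import datetime, timezone

def parse_published_date(value):
    """Parse a string like '2024-01-02T10:10:00-0800'.

    Returns None for empty or malformed values, mirroring the scraper's
    fallback to an empty string when no <time> element is found.
    """
    if not value:
        return None
    try:
        return datetime.strptime(value, '%Y-%m-%dT%H:%M:%S%z')
    except ValueError:
        return None

published = parse_published_date('2024-01-02T10:10:00-0800')

# convert to UTC so dates from different offsets compare consistently
print(published.astimezone(timezone.utc).isoformat())
```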

Your article scraper works! It's time to write the extracted data into a CSV.

Step 5: Export Data to CSV

You only need to modify the scraping function in the previous code to write the extracted data to a CSV instead of outputting it in the command line.

The modified part of the function specifies a file path for the CSV. It writes a header row with one column per field when the file is still empty, then appends a row for each article as the code runs.
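In isolation, that header-once-then-append pattern can be sketched as follows. The helper name `append_article_row`, the temporary demo file, and the sample rows are made up for illustration.

```python
import csv
import os
import tempfile

FIELDS = ['Title', 'Published Date', 'Author', 'Content']

def append_article_row(csv_file_path, data):
    """Append one article to the CSV, writing the header if the file is empty."""
    with open(csv_file_path, 'a', newline='', encoding='utf-8') as csv_file:
        csv_writer = csv.writer(csv_file)

        # in append mode the position starts at the end, so 0 means empty
        if csv_file.tell() == 0:
            csv_writer.writerow(FIELDS)

        csv_writer.writerow([data['title'], data['published_date'], data['author'], data['content']])

# write two made-up rows to a temporary file, then read them back
demo_path = os.path.join(tempfile.mkdtemp(), 'article_data_demo.csv')
append_article_row(demo_path, {'title': 'First', 'published_date': '2024-01-02', 'author': 'A', 'content': 'Text, with a comma'})
append_article_row(demo_path, {'title': 'Second', 'published_date': '2024-01-03', 'author': 'B', 'content': 'More text'})

with open(demo_path, newline='', encoding='utf-8') as csv_file:
    rows = list(csv.reader(csv_file))

print(rows)
```

The `csv` module quotes fields that contain commas or line breaks, which matters for article bodies.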

Let's integrate that into the article scraper function from the previous section.

# import the required libraries
from bs4 import BeautifulSoup
import requests
import csv

base_URL = 'https://www.gamespot.com'
category_URL = f'{base_URL}/news'

page_count = 1

data_list = []

def article_scraper(article_url):
    # create a new dictionary for each article
    data = {}

    try:
        response = requests.get(article_url)
        soup = BeautifulSoup(response.content, 'html.parser')

        # extract article title, handle NoneType
        title_element = soup.find('h1', class_='news-title')
        data['title'] = title_element.text.strip() if title_element else ''

        # extract published date, handle NoneType
        published_date_element = soup.find('time')
        data['published_date'] = published_date_element['datetime'] if published_date_element else ''

        # extract author, handle NoneType
        author_element = soup.find('span', class_='byline-author')
        data['author'] = author_element.text.strip() if author_element else ''

        # extract content, handle NoneType
        content_element = soup.find('div', class_='content-entity-body')
        data['content'] = content_element.text.strip() if content_element else ''

        # append the data for this article to the list
        data_list.append(data)

        # insert the data into the CSV file
        csv_file_path = 'article_data.csv'
        with open(csv_file_path, 'a', newline='', encoding='utf-8') as csv_file:
            csv_writer = csv.writer(csv_file)

            # if the file is empty, write the header row
            if csv_file.tell() == 0:
                csv_writer.writerow(['Title', 'Published Date', 'Author', 'Content'])

            # write the data row
            csv_writer.writerow([data['title'], data['published_date'], data['author'], data['content']])
    except requests.RequestException as e:
        print(f'Error fetching article data for {article_url}: {e}')

Execute this function inside the navigation logic as done in the previous section:

# fetch article links from page 1 to 10
for i in range(0, 10):
    # append the page number to the category URL to get the current page
    current_page = f'{category_URL}?page={page_count}'
    response = requests.get(current_page)

    if response.status_code == 200:
        soup = BeautifulSoup(response.content, 'html.parser')

        # get link cards
        article_links = soup.find_all('a', class_='card-item__link')

        # iterate through link cards to get article unique hrefs
        for link in article_links:
            href = link.get('href')

            # merge article's unique URL with the base URL
            article_url = base_URL + href

            # execute the scraping function
            article_scraper(article_url)
    else:
        print(f'Error fetching links for page {page_count}: {response.status_code}')

    # increment the page count
    page_count += 1

print(data_list)

Here's the final code:

# import the required libraries
from bs4 import BeautifulSoup
import requests
import csv

base_URL = 'https://www.gamespot.com'
category_URL = f'{base_URL}/news'

page_count = 1

data_list = []

def article_scraper(article_url):
    # create a new dictionary for each article
    data = {}

    try:
        response = requests.get(article_url)
        soup = BeautifulSoup(response.content, 'html.parser')

        # extract article title, handle NoneType
        title_element = soup.find('h1', class_='news-title')
        data['title'] = title_element.text.strip() if title_element else ''

        # extract published date, handle NoneType
        published_date_element = soup.find('time')
        data['published_date'] = published_date_element['datetime'] if published_date_element else ''

        # extract author, handle NoneType
        author_element = soup.find('span', class_='byline-author')
        data['author'] = author_element.text.strip() if author_element else ''

        # extract content, handle NoneType
        content_element = soup.find('div', class_='content-entity-body')
        data['content'] = content_element.text.strip() if content_element else ''

        # append the data for this article to the list
        data_list.append(data)

        # insert the data into the CSV file
        csv_file_path = 'article_data.csv'
        with open(csv_file_path, 'a', newline='', encoding='utf-8') as csv_file:
            csv_writer = csv.writer(csv_file)

            # if the file is empty, write the header row
            if csv_file.tell() == 0:
                csv_writer.writerow(['Title', 'Published Date', 'Author', 'Content'])

            # write the data row
            csv_writer.writerow([data['title'], data['published_date'], data['author'], data['content']])
    except requests.RequestException as e:
        print(f'Error fetching article data for {article_url}: {e}')

# fetch article links from page 1 to 10
for i in range(0, 10):
    # append the page number to the category URL to get the current page
    current_page = f'{category_URL}?page={page_count}'
    response = requests.get(current_page)

    if response.status_code == 200:
        soup = BeautifulSoup(response.content, 'html.parser')

        # get link cards
        article_links = soup.find_all('a', class_='card-item__link')

        # iterate through link cards to get article unique hrefs
        for link in article_links:
            href = link.get('href')

            # merge article's unique URL with the base URL
            article_url = base_URL + href

            # execute the scraping function
            article_scraper(article_url)
    else:
        print(f'Error fetching links for page {page_count}: {response.status_code}')

    # increment the page count
    page_count += 1

print(data_list)

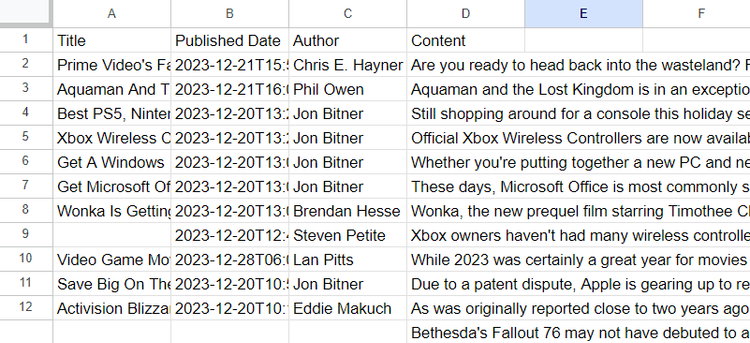

This writes the extracted article data into the CSV file, as shown:

You've now scraped article data from 10 pages of a publication. Congratulations!

However, getting blocked is another potential problem you must handle to build a robust article scraper.

The Challenge of Getting Blocked While Scraping Articles

You risk getting blocked while trying to scrape at scale from multiple pages. This is often due to IP bans and anti-bot systems like Akamai.

You can bypass any block with an all-in-one solution like ZenRows. ZenRows helps you bypass any anti-bot system behind the scenes, so you can focus on data extraction without worrying about getting blocked.

For example, the previous script will fail with a protected publication like Reuters. Let's try to make a regular request to this website to see what happens:

# import the required libraries
import requests
from bs4 import BeautifulSoup

base_URL = 'https://www.reuters.com'

# specify category
category_URL = f'{base_URL}/technology/'

response = requests.get(category_URL)
soup = BeautifulSoup(response.content, 'html.parser')
print(soup)

The request fails with the following response:

'host':'geo.captcha-delivery.com'}</script><script data-cfasync="false" src="https://ct.captcha-delivery.com/c.js"></script>

The error message indicates that the request has failed to pass a CAPTCHA challenge.
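One way to notice this kind of block programmatically is sketched below, under stated assumptions. The helper name `looks_blocked` is hypothetical, the status codes 403 and 429 are assumed to be typical of refused requests (the response above does not show which code this site returned), and the `captcha-delivery.com` marker is taken from the response above.

```python
def looks_blocked(status_code, body):
    """Heuristic check for a blocked response; not a definitive test."""
    blocked_statuses = {403, 429}  # assumption: common codes for refused requests
    captcha_markers = ('captcha-delivery.com',)  # marker seen in the response above

    if status_code in blocked_statuses:
        return True
    return any(marker in body for marker in captcha_markers)

# fragment of the blocked response shown above
sample_body = "'host':'geo.captcha-delivery.com'}</script>"

print(looks_blocked(200, sample_body))
print(looks_blocked(200, '<html><title>News</title></html>'))
```

In a scraper, you would pass `response.status_code` and `response.text` and skip parsing when the check returns `True`.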

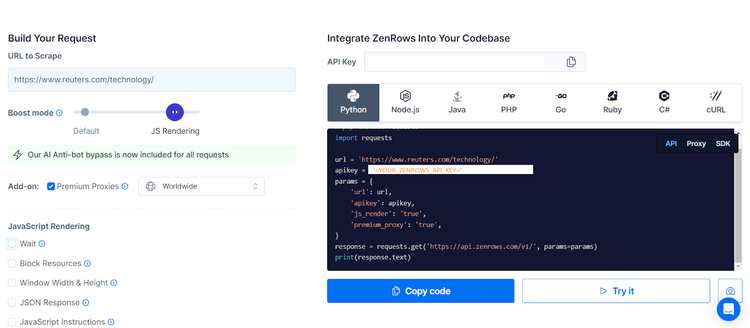

Let's solve this problem with ZenRows. Sign up to enter the ZenRows Request Builder.

Once in the Request Builder, set the "Boost mode" to JS Rendering and click "Premium Proxy". Then, choose Python as your language option.

The code below integrates ZenRows to access the protected website and scrape the article links on the first page.

We specify ZenRows configurations inside the request parameters and route the request through the ZenRows API:

# import the required libraries
from bs4 import BeautifulSoup
import requests

base_URL = 'https://www.reuters.com'

# get the news category
category_URL = f'{base_URL}/technology/'

page_count = 1

data_links = []

# specify ZenRows request parameters
params = {
    'url': category_URL,
    'apikey': '<YOUR_ZENROWS_API_KEY>',
    'js_render': 'true',
    'premium_proxy': 'true',
}

# route the request through ZenRows
response = requests.get('https://api.zenrows.com/v1/', params=params)

if response.status_code == 200:
    soup = BeautifulSoup(response.content, 'html.parser')
    print(f'Request successful for {category_URL}')

    # find matching class names to bypass obfuscated class characters
    article_links = soup.find_all('a', class_=lambda value: value and 'media-story-card__headline' in value)

    # iterate through link cards to get article unique hrefs
    for link in article_links:
        href = link.get('href')

        # merge article's unique URL with the base URL
        data_links.append(base_URL + href)
else:
    print(f'Error fetching links for page: {response.status_code}')

print(data_links)

The code accesses the protected website successfully and gets the article URLs, as shown:

Request successful for https://www.reuters.com/technology/

[

'https://www.reuters.com/technology/baidu-donate-quantum-computing-lab-equipment-beijing-institute-2024-01-03/',

'https://www.reuters.com/business/autos-transportation/teslas-china-made-ev-sales-jump-687-yy-december-2024-01-03/',

#... other links omitted for brevity

'https://www.reuters.com/world/china/china-evergrandes-ev-share-sale-deal-lapses-2024-01-01/',

'https://www.reuters.com/technology/baidu-terminates-purchase-joyys-live-streaming-business-2024-01-01/'

]
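For context on how the `params` dictionary reaches the API: the target URL travels as a query parameter, so it has to be percent-encoded. Requests handles this when you pass `params`. The sketch below reproduces roughly the same encoding with the standard library, purely to show what the final request URL looks like.

```python
from urllib.parse import urlencode

params = {
    'url': 'https://www.reuters.com/technology/',
    'apikey': '<YOUR_ZENROWS_API_KEY>',  # placeholder, as in the code above
    'js_render': 'true',
    'premium_proxy': 'true',
}

# build the query string roughly the way an HTTP client would
request_url = 'https://api.zenrows.com/v1/?' + urlencode(params)

print(request_url)
```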

That's it! You just scraped a protected article website using ZenRows' anti-bot features. Feel free to enable ZenRows JavaScript instructions like clicks and waits to load more content dynamically.

Conclusion

Article scraping can be a valuable source of information for research and competition monitoring. In this article scraper tutorial, you've learned how to use Python's Requests and BeautifulSoup to extract article content from a paginated publication.

You can now:

- Extract article links from multiple pages.

- Scrape specific content from extracted article URLs.

- Bypass anti-bot blocks by integrating a scraping solution like ZenRows.

Don't forget to try more examples with what you've learned. As mentioned, you'll likely face complex anti-bot systems and IP bans when dealing with big websites. Bypass all that with ZenRows and scrape any website without getting blocked. Try ZenRows for free!
