Swift is the primary language for iOS app development, but it's less common for other uses. For example, can you do web scraping in Swift? Yes, you can!

In this step-by-step tutorial, you'll learn how to do web scraping in Swift using SwiftSoup. Let's dive right in!

Can You Scrape Websites With Swift?

Yes, you can scrape a site with Swift!

Swift supports command-line scripts and comes with a rich standard library. Combined with a third-party HTML parser, that's enough to build a complete scraping script.

While web scraping in Swift is possible, most developers prefer Python or JavaScript. Those languages are widely used for scraping thanks to their simple syntax and vast ecosystems. Not surprisingly, both rank among the best languages for web scraping.

Prerequisites

Follow the instructions below and set up a Swift environment.

Install Swift

To follow this tutorial, you need Swift installed locally. On macOS, installing Xcode is enough. Otherwise, download the Swift toolchain for your OS from the official Swift website, run the installer, and follow the wizard.

Verify that Swift works by launching the following command in the terminal:

swift --version

This should print something like:

Swift version 5.9.2 (swift-5.9.2-RELEASE)

Wonderful!

Create Your Swift Project

If you're on Windows, open the x64 Native Tools Command Prompt for VS 2019. On macOS and Linux, a regular terminal will do.

Create a directory called SwiftScraper for your Swift project and enter it:

mkdir SwiftScraper

cd SwiftScraper

Inside the new folder, launch the command below to set up a Swift command-line tool:

swift package init --name SwiftScraper --type executable

The SwiftScraper folder now contains a command-line Swift application called “SwiftScraper”. In detail, it includes a Sources subfolder for the code and a Package.swift manifest that declares the dependencies.

Open the project's folder in your Swift IDE. Xcode or Visual Studio Code with the Swift extension will do.

Inspect the main.swift file inside the Sources folder. This is what you'll see:

// The Swift Programming Language

// https://docs.swift.org/swift-book

print("Hello, world!")

Right now, it's just a Swift script that prints “Hello, world!”, but you'll soon replace it with some scraping logic.

Use the run command from Xcode or the command below to execute the script:

swift run

The compilation process will take a while, so be patient.

You'll see in the terminal:

Hello, world!

Fantastic! You have everything you need to create a Swift web scraping script.

Practical Tutorial to Do Web Scraping With Swift

In this step-by-step section, you'll build a script to extract all product data from an e-commerce site. The scraping target will be ScrapeMe, a demo e-commerce site with a paginated list of Pokémon products.

Time to perform web scraping in Swift!

Step 1: Scrape Your Target Page

To scrape your target page, you need to retrieve its HTML content and pass it to an HTML parser. Make a GET request to the page URL to get the HTML document associated with it. The recommended way to make HTTP requests in Swift is URLSession.

That said, String comes with an initializer, String(contentsOf:), that loads the content of a URL, and that's enough for this application. Behind the scenes, Foundation performs a blocking GET request and turns the data returned by the server into a String:

let response = URL(string: "https://scrapeme.live/shop/")!

let html = try String(contentsOf: response)

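This snippet requires Foundation. On Linux and Windows, the networking APIs live in a separate FoundationNetworking module that doesn't exist on macOS, so import it conditionally:

import Foundation

#if canImport(FoundationNetworking)

import FoundationNetworking

#endif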
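URLSession, the recommended API mentioned above, also exposes the HTTP status code, which String(contentsOf:) hides. Here is a minimal sketch, assuming a blocking call is acceptable in a command-line script; fetchHTML, checkStatus, FetchError, and ResultBox are hypothetical names, not part of Foundation:

```swift
import Foundation
import Dispatch
#if canImport(FoundationNetworking)
import FoundationNetworking
#endif

// Hypothetical error type for failed downloads.
enum FetchError: Error {
    case notHTTP
    case badStatus(Int)
    case undecodableBody
}

// Throws unless the response is an HTTP response with a 2xx status code.
func checkStatus(_ response: URLResponse?) throws {
    guard let http = response as? HTTPURLResponse else { throw FetchError.notHTTP }
    guard (200...299).contains(http.statusCode) else {
        throw FetchError.badStatus(http.statusCode)
    }
}

// Reference box so the completion handler can hand its result back.
// The semaphore in fetchHTML orders the write before the read.
final class ResultBox: @unchecked Sendable {
    var value: Result<String, Error> = .failure(FetchError.undecodableBody)
}

// Downloads a page with URLSession and blocks until the request completes,
// which keeps it usable from a simple top-level script.
func fetchHTML(from url: URL) throws -> String {
    let box = ResultBox()
    let done = DispatchSemaphore(value: 0)
    let task = URLSession.shared.dataTask(with: url) { data, response, error in
        defer { done.signal() }
        if let error = error {
            box.value = .failure(error)
            return
        }
        do {
            try checkStatus(response)
            guard let data = data, let html = String(data: data, encoding: .utf8) else {
                throw FetchError.undecodableBody
            }
            box.value = .success(html)
        } catch {
            box.value = .failure(error)
        }
    }
    task.resume()
    done.wait()
    return try box.value.get()
}
```

You could then call `try fetchHTML(from: URL(string: "https://scrapeme.live/shop/")!)` in place of String(contentsOf:). Since URLSession doesn't treat a 403 as a transport error, checkStatus is what turns it into FetchError.badStatus(403).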

You now need to feed html to an HTML parser. SwiftSoup is the most popular HTML parsing library in Swift. It provides jQuery-like methods for parsing HTML content and extracting data from it.

Install the SwiftSoup package in your project. Add this line to the dependencies array in Package.swift:

.package(url: "https://github.com/scinfu/SwiftSoup.git", from: "2.6.0"),

And this line to the dependencies array of your executable target:

.product(name: "SwiftSoup", package: "SwiftSoup"),

Your Package.swift file will now contain the following. Keep the swift-tools-version comment that swift package init generated on the first line, as SwiftPM requires it:

// swift-tools-version: 5.9

import PackageDescription

let package = Package(

name: "SwiftScraper",

dependencies: [

.package(url: "https://github.com/scinfu/SwiftSoup.git", from: "2.6.0"),

],

targets: [

.executableTarget(

name: "SwiftScraper",

dependencies: [

.product(name: "SwiftSoup", package: "SwiftSoup"),

]

),

]

)

Import SwiftSoup in main.swift:

import SwiftSoup

Next, parse the HTML content with the parse() method and print it:

let document = try SwiftSoup.parse(html)

print(try document.outerHtml())

Your current main.swift will look as follows:

import Foundation

#if canImport(FoundationNetworking)

import FoundationNetworking

#endif

import SwiftSoup

// connect to the target site and

// retrieve the HTML content from it

let response = URL(string: "https://scrapeme.live/shop/")!

let html = try String(contentsOf: response)

// parse the HTML content

let document = try SwiftSoup.parse(html)

// print the source HTML

print(try document.outerHtml())

Run it with swift run. SwiftPM will first fetch and build the project's dependencies and then execute the script, which produces output like this (truncated):

<!doctype html>

<html lang="en-GB">

<head>

<meta charset="UTF-8" />

<meta name="viewport" content="width=device-width, initial-scale=1, maximum-scale=2.0" />

<link rel="profile" href="http://gmpg.org/xfn/11" />

<link rel="pingback" href="https://scrapeme.live/xmlrpc.php" />

<title>Products – ScrapeMe</title>

Here we go! Your web scraping Swift script downloads the target page as desired. Get ready to retrieve some data from it.

Step 2: Parse and Extract Data

Follow the two sub-steps below to learn how to do web scraping with Swift: first extract data from a single product, then from all products on the page.

Extract the HTML for One Product

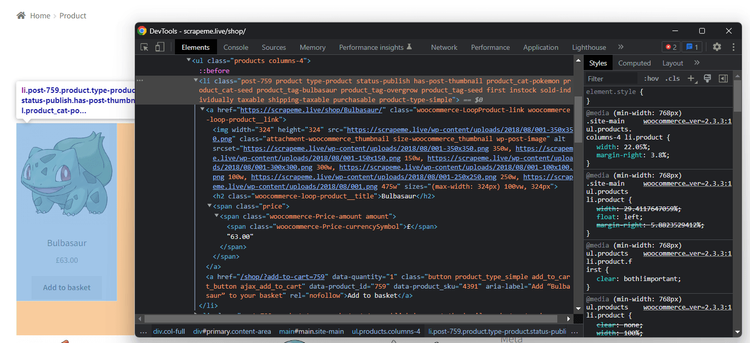

Before extracting data from a product on the page, you must select its HTML node. The idea is to apply a CSS selector strategy to isolate an element and access its information. Get familiar with the page's HTML to define an effective node selection strategy.

Visit the target page in the browser and inspect a product HTML element with the DevTools:

Read the HTML code and note that each product node is selectable through this CSS selector:

li.product

li is the tag of the HTML element, while product is one of the values in its class attribute.
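A class attribute can hold several space-separated values, and a class selector such as li.product matches when one of them is exactly product. The stdlib-only sketch below mirrors that matching rule; hasClass is a hypothetical helper, not a SwiftSoup API, and the class strings used to check it are made up:

```swift
// Hypothetical helper mirroring how a CSS class selector reads the class
// attribute: split it on whitespace and look for an exact token match.
func hasClass(_ classAttribute: String, _ className: String) -> Bool {
    classAttribute
        .split(whereSeparator: { $0.isWhitespace })
        .contains { $0 == className }
}
```

This is why .product would not match an element whose only class is products: a partial match inside a token doesn't count.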

Given a product, you can get:

- The URL from the <a> node.

- The image URL from the <img> node.

- The name from the <h2> node.

- The price from the <span> node.

Implement the scraping logic to extract data from a single HTML product element. Use the select() method to apply a CSS selector, then call first() to get the first node that matches it. Access its text with text() and its HTML attributes with attr():

// select the first product HTML element on the page

let htmlProduct = try document.select("li.product").first()!

// scraping logic

let url = try htmlProduct.select("a").first()!.attr("href")

let image = try htmlProduct.select("img").first()!.attr("src")

let name = try htmlProduct.select("h2").first()!.text()

let price = try htmlProduct.select("span").first()!.text()

You can finally log the scraped data in the terminal with:

print(url)

print(image)

print(name)

print(price)

Add the above lines to main.swift and get:

import Foundation

#if canImport(FoundationNetworking)

import FoundationNetworking

#endif

import SwiftSoup

// connect to the target site and

// retrieve the HTML content from it

let response = URL(string: "https://scrapeme.live/shop/")!

let html = try String(contentsOf: response)

// parse the HTML content

let document = try SwiftSoup.parse(html)

// select the first product HTML element on the page

let htmlProduct = try document.select("li.product").first()!

// scraping logic

let url = try htmlProduct.select("a").first()!.attr("href")

let image = try htmlProduct.select("img").first()!.attr("src")

let name = try htmlProduct.select("h2").first()!.text()

let price = try htmlProduct.select("span").first()!.text()

// log the scraped data

print(url)

print(image)

print(name)

print(price)

Execute it, and you'll see:

https://scrapeme.live/shop/Bulbasaur/

https://scrapeme.live/wp-content/uploads/2018/08/001-350x350.png

Bulbasaur

£63.00

Fantastic! Extend the Swift scraping logic to scrape all products on the page in the next section.

Extract Multiple Products

You need a data structure to represent the data to scrape, so that you can initialize a product object with the scraped values. For this reason, add a new struct called Product:

struct Product {

var url = ""

var image = ""

var name = ""

var price = ""

}

Since the page contains multiple products, you'll need a Product array:

var products: [Product] = []

At the end of the script, this will store all scraped data objects.

Now, remove first() from the first select() call to get all product elements. Iterate over them, apply the scraping logic, instantiate a new Product, and append it to the array:

// select all product HTML elements on the page

let htmlProducts = try document.select("li.product")

// iterate over them and apply

// the scraping logic to each of them

for htmlProduct in htmlProducts.array() {

// scraping logic

let url = try htmlProduct.select("a").first()!.attr("href")

let image = try htmlProduct.select("img").first()!.attr("src")

let name = try htmlProduct.select("h2").first()!.text()

let price = try htmlProduct.select("span").first()!.text()

// initialize a new Product object

// and add it to the array

let product = Product(url: url, image: image, name: name, price: price)

products.append(product)

}

Make sure the Swift web scraping logic works by adding these lines:

for product in products {

print(product.url)

print(product.image)

print(product.name)

print(product.price)

print()

}

The main.swift file should currently contain:

import Foundation

#if canImport(FoundationNetworking)

import FoundationNetworking

#endif

import SwiftSoup

// define a data structure to represent

// the data to scrape

struct Product {

var url = ""

var image = ""

var name = ""

var price = ""

}

// where to store the scraped data

var products: [Product] = []

// connect to the target site and

// retrieve the HTML content from it

let response = URL(string: "https://scrapeme.live/shop/")!

let html = try String(contentsOf: response)

// parse the HTML content

let document = try SwiftSoup.parse(html)

// select all product HTML elements on the page

let htmlProducts = try document.select("li.product")

// iterate over them and apply

// the scraping logic to each of them

for htmlProduct in htmlProducts.array() {

// scraping logic

let url = try htmlProduct.select("a").first()!.attr("href")

let image = try htmlProduct.select("img").first()!.attr("src")

let name = try htmlProduct.select("h2").first()!.text()

let price = try htmlProduct.select("span").first()!.text()

// initialize a new Product object

// and add it to the array

let product = Product(url: url, image: image, name: name, price: price)

products.append(product)

}

// log the scraped data

for product in products {

print(product.url)

print(product.image)

print(product.name)

print(product.price)

print()

}

Launch it, and it'll generate this output:

https://scrapeme.live/shop/Bulbasaur/

https://scrapeme.live/wp-content/uploads/2018/08/001-350x350.png

Bulbasaur

£63.00

// omitted for brevity...

https://scrapeme.live/shop/Pidgey/

https://scrapeme.live/wp-content/uploads/2018/08/016-350x350.png

Pidgey

£159.00

Terrific! The scraped objects contain the data of interest.
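Every Product field is a String, which is all the CSV export in the next step needs. If you later want to sort or sum prices, you'll have to convert them to numbers. Here is a minimal sketch, assuming prices look like the £63.00 values above and use "." as the decimal separator; parsePrice is a hypothetical helper:

```swift
import Foundation

// Hypothetical helper: turns a scraped price such as "£63.00" into a Decimal.
// It keeps only ASCII digits and ".", so currency symbols and "," thousands
// separators are dropped. Returns nil when no digits are left.
func parsePrice(_ text: String) -> Decimal? {
    let cleaned = text.filter { $0.isASCII && ($0.isNumber || $0 == ".") }
    guard !cleaned.isEmpty else { return nil }
    return Decimal(string: cleaned, locale: Locale(identifier: "en_US_POSIX"))
}
```

Decimal avoids the rounding surprises that Double can introduce when adding up currency amounts.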

Step 3: Convert Scraped Data Into a CSV File

The easiest way to convert the scraped data into a useful format is to rely on a third-party library. CSV.swift is a popular library for reading and writing CSV files in Swift.
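Why use a library at all? Mainly for quoting: under RFC 4180, a field that contains a comma, a double quote, or a line break must be wrapped in double quotes, with any inner quotes doubled. The sketch below illustrates that rule with hypothetical helpers (csvField, csvRow); it's not CSV.swift's implementation, just an example of what a CSV writer has to handle:

```swift
import Foundation

// Hypothetical helper applying the RFC 4180 quoting rule to one field.
// Fields with a comma, a double quote, or a line break are wrapped in
// double quotes, and embedded double quotes are doubled.
func csvField(_ value: String) -> String {
    let needsQuotes = value.contains(",")
        || value.contains("\"")
        || value.contains(where: { $0.isNewline })
    guard needsQuotes else { return value }
    return "\"" + value.replacingOccurrences(of: "\"", with: "\"\"") + "\""
}

// Hypothetical helper: escapes every field and joins them with commas.
func csvRow(_ fields: [String]) -> String {
    fields.map(csvField).joined(separator: ",")
}
```

A product name such as `Mr. Mime, the "Barrier" Pokémon` would break a naive comma-joined row, while csvField turns it into a single quoted field.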

Add the CSV.swift package to your project's dependencies with this line in the dependencies array of Package.swift:

.package(url: "https://github.com/yaslab/CSV.swift.git", from: "2.4.3"),

Then, add this line to the dependencies array in targets:

.product(name: "CSV", package: "CSV.swift"),

Package.swift will contain:

// swift-tools-version: 5.9

import PackageDescription

let package = Package(

name: "SwiftScraper",

dependencies: [

.package(url: "https://github.com/scinfu/SwiftSoup.git", from: "2.6.0"),

.package(url: "https://github.com/yaslab/CSV.swift.git", from: "2.4.3"),

],

targets: [

.executableTarget(

name: "SwiftScraper",

dependencies: [

.product(name: "SwiftSoup", package: "SwiftSoup"),

.product(name: "CSV", package: "CSV.swift"),

]

),

]

)

Then, import the library in main.swift:

import CSV

Initialize an OutputStream to the output CSV file and populate it with a CSVWriter. Write the header row and then add each product row:

// initialize the stream to the CSV output file

let stream = OutputStream(toFileAtPath: "products.csv", append: false)!

let csv = try CSVWriter(stream: stream)

// write the header row

try csv.write(row: ["url", "image", "name", "price"])

// populate the CSV file

for product in products {

try csv.write(row: [product.url, product.image, product.name, product.price])

}

// close the stream

csv.stream.close()

Put it all together, and you'll get:

import Foundation

#if canImport(FoundationNetworking)

import FoundationNetworking

#endif

import SwiftSoup

import CSV

// define a data structure to represent

// the data to scrape

struct Product {

var url = ""

var image = ""

var name = ""

var price = ""

}

// where to store the scraped data

var products: [Product] = []

// connect to the target site and

// retrieve the HTML content from it

let response = URL(string: "https://scrapeme.live/shop/")!

let html = try String(contentsOf: response)

// parse the HTML content

let document = try SwiftSoup.parse(html)

// select all product HTML elements on the page

let htmlProducts = try document.select("li.product")

// iterate over them and apply

// the scraping logic to each of them

for htmlProduct in htmlProducts.array() {

// scraping logic

let url = try htmlProduct.select("a").first()!.attr("href")

let image = try htmlProduct.select("img").first()!.attr("src")

let name = try htmlProduct.select("h2").first()!.text()

let price = try htmlProduct.select("span").first()!.text()

// initialize a new Product object

// and add it to the array

let product = Product(url: url, image: image, name: name, price: price)

products.append(product)

}

// initialize the stream to the CSV output file

let stream = OutputStream(toFileAtPath: "products.csv", append: false)!

let csv = try CSVWriter(stream: stream)

// write the header row

try csv.write(row: ["url", "image", "name", "price"])

// populate the CSV file

for product in products {

try csv.write(row: [product.url, product.image, product.name, product.price])

}

// close the stream

csv.stream.close()

Execute the script for web scraping in Swift:

swift run

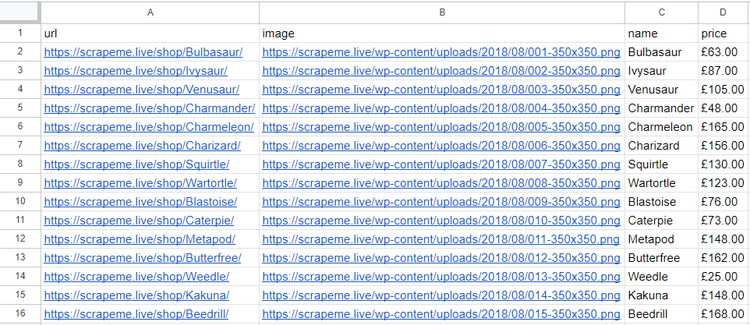

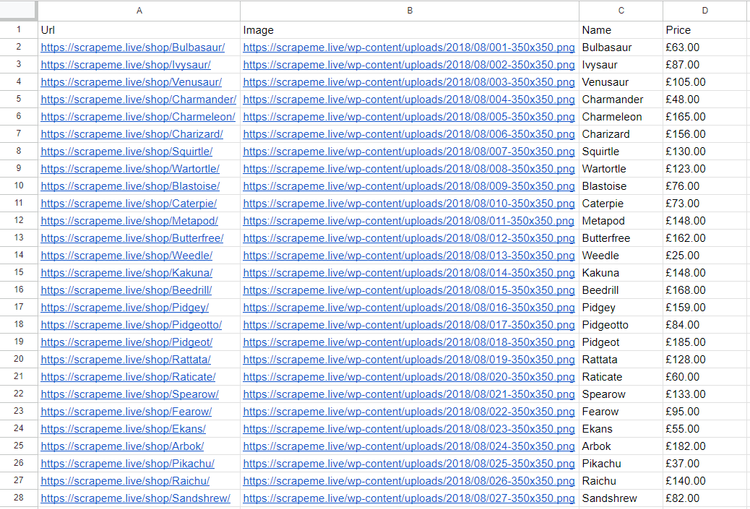

Wait for the script to complete. When the products.csv file appears in the project's folder, open it. That's what you'll see:

Et voilà! You just did web scraping with Swift!

There's still much more to learn, so keep reading to become an expert.

Scraping With Swift: Advanced Techniques

In most cases, the basics aren't enough to scrape a real-world site effectively. Explore the advanced concepts of Swift web scraping!

Web Crawling in Swift: Scrape Multiple Pages

The current script only targets a single page. Don't forget that the target site consists of several pages. What if you wanted to scrape them all and extract all products? This is where web crawling comes in!

Web crawling is the art of automatically discovering web pages on one or more sites. Find out more in our guide on web crawling vs web scraping.

In this case, the goal is to instruct the script to go through all product pages with this algorithm:

- Download the HTML document associated with a page.

- Parse it.

- Extract the URLs from the pagination link elements and add them to a queue.

- Repeat the cycle on a new page read from the queue.

This loop should stop only when there are no more pages to discover. You may also want to set a page limit to prevent your web scraping Swift script from running forever. In the demo code below, the limit will be 5 pages.
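The loop above can be sketched without any networking, which makes the queue, the Set, and the page limit easy to check in isolation. In this sketch, crawl and its fetchLinks closure are hypothetical: the closure stands in for downloading a page, parsing it, and returning its pagination URLs. Because the limit is compared against the number of pages already visited, a limit of 5 visits exactly 5 pages:

```swift
// Breadth-first crawl: visit pages in FIFO order, never enqueue a URL twice,
// and stop when the queue is empty or `limit` pages have been visited.
// `fetchLinks` is a hypothetical stand-in for "download, parse, and return
// the pagination URLs of this page".
func crawl(from start: String,
           limit: Int,
           fetchLinks: (String) throws -> [String]) rethrows -> [String] {
    var discovered: Set<String> = [start]  // every URL seen so far
    var queue: [String] = [start]          // URLs still to visit
    var visited: [String] = []             // visit order

    while !queue.isEmpty && visited.count < limit {
        let current = queue.removeFirst()
        visited.append(current)
        for link in try fetchLinks(current) where !discovered.contains(link) {
            discovered.insert(link)
            queue.append(link)
        }
    }
    return visited
}
```

In the real script, the closure would download the page and select its pagination links with SwiftSoup.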

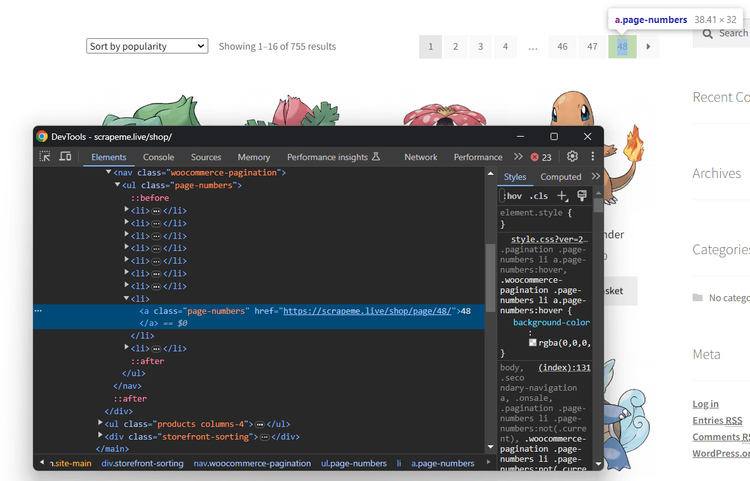

You already know how to download a page and parse it with SwiftSoup, so focus on extracting the URLs from the pagination link elements. As a first step, inspect their HTML nodes:

Notice that you can select them all with this CSS selector:

a.page-numbers
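The script below passes each href straight to URL(string:), which works as long as the pagination links are absolute URLs. On sites that use relative links or #fragments, the same page could end up in the Set under different strings. Here is a minimal Foundation-only sketch of normalizing them first; normalizedURL is a hypothetical helper, and the hrefs used to check it are made up:

```swift
import Foundation

// Hypothetical helper: resolves an href against the page it was found on
// and drops any "#fragment", so one page maps to one string.
func normalizedURL(_ href: String, relativeTo pageURL: String) -> String? {
    guard let base = URL(string: pageURL),
          let resolved = URL(string: href, relativeTo: base),
          var components = URLComponents(url: resolved, resolvingAgainstBaseURL: true)
    else { return nil }
    components.fragment = nil
    return components.url?.absoluteString
}
```

You'd call it on each href before checking pagesDiscovered, so that /shop/page/3/ and https://scrapeme.live/shop/page/3/#main count as the same page.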

Crawling a site hides some pitfalls. You can't just visit every link you find. Instead, you need to keep track of the pages you've already discovered to avoid scraping them twice. That's why you'll need two extra data structures:

- pagesDiscovered: A [Set](https://developer.apple.com/documentation/swift/set) that stores all the URLs discovered during crawling.

- pagesToScrape: An array used as a queue, storing the URLs of the pages still to visit.

Initialize both with the URL of a product pagination page:

// a pagination page to use as the first page to visit

// in the crawling logic

let firstPageToScrape = "https://scrapeme.live/shop/page/2/"

// the Set of pages discovered during the crawling logic

var pagesDiscovered: Set<String> = [firstPageToScrape]

// the list of remaining pages to scrape

var pagesToScrape: [String] = [firstPageToScrape]

Integrate it in the main.swift file and you'll have this final code:

import Foundation

#if canImport(FoundationNetworking)

import FoundationNetworking

#endif

import SwiftSoup

import CSV

// define a data structure to represent

// the data to scrape

struct Product {

var url = ""

var image = ""

var name = ""

var price = ""

}

// where to store the scraped data

var products: [Product] = []

// a pagination page to use as the first page to visit

// in the crawling logic

let firstPageToScrape = "https://scrapeme.live/shop/page/2/"

// the Set of pages discovered during the crawling logic

var pagesDiscovered: Set<String> = [firstPageToScrape]

// the list of remaining pages to scrape

var pagesToScrape: [String] = [firstPageToScrape]

// current iteration

var i = 1

// maximum number of pages to scrape

let limit = 5

// iterate until there are no pages to scrape

// or the limit is hit

while !pagesToScrape.isEmpty && i <= limit {

// extract the next URL to visit

let urlString = pagesToScrape[0]

pagesToScrape.removeFirst(1)

// connect to the current page and

// retrieve the HTML content from it

let response = URL(string: urlString)!

let html = try String(contentsOf: response)

// parse the HTML content

let document = try SwiftSoup.parse(html)

// select the pagination links

let paginationHTMLElements = try document.select("a.page-numbers")

// to avoid visiting a page twice

if paginationHTMLElements.array().count > 0 {

for paginationHTMLElement in paginationHTMLElements.array() {

// extract the current pagination URL

let newPaginationUrl = try paginationHTMLElement.attr("href")

// if the page discovered is new

if !pagesDiscovered.contains(newPaginationUrl) {

// if the page discovered needs to be scraped

if !pagesToScrape.contains(newPaginationUrl) {

pagesToScrape.append(newPaginationUrl)

}

pagesDiscovered.insert(newPaginationUrl)

}

}

}

// select all product HTML elements on the page

let htmlProducts = try document.select("li.product")

// iterate over them and apply

// the scraping logic to each of them

for htmlProduct in htmlProducts.array() {

// scraping logic

let url = try htmlProduct.select("a").first()!.attr("href")

let image = try htmlProduct.select("img").first()!.attr("src")

let name = try htmlProduct.select("h2").first()!.text()

let price = try htmlProduct.select("span").first()!.text()

// initialize a new Product object

// and add it to the array

let product = Product(url: url, image: image, name: name, price: price)

products.append(product)

}

// increment the iterator counter

i += 1

}

// initialize the stream to the CSV output file

let stream = OutputStream(toFileAtPath: "products.csv", append: false)!

let csv = try CSVWriter(stream: stream)

// write the header row

try csv.write(row: ["url", "image", "name", "price"])

// populate the CSV file

for product in products {

try csv.write(row: [product.url, product.image, product.name, product.price])

}

// close the stream

csv.stream.close()

Launch the Swift web scraping script:

swift run

The scraper will take a while because it must go through 5 pages. When it's done, products.csv will contain the products scraped from all 5 pages.

Congrats! You just learned how to perform web crawling and web scraping in Swift!

Avoid Getting Blocked When Scraping With Swift

Even if data is publicly available on a site, companies don't want users to retrieve it easily. Why? Data is a valuable asset, and they don't want to give it away for free.

That's why many sites adopt anti-bot technologies, which detect and block automated scripts such as your Swift scraper. As you can imagine, this is the biggest challenge when web scraping with Swift!

There are several tips for performing web scraping without getting blocked. Yet, bypassing all anti-bot measures isn't easy. Solutions like Cloudflare will likely block your script no matter how you tweak it.
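A common first tip is to send browser-like headers. String(contentsOf:) offers no way to set headers, so this requires URLRequest and URLSession. The sketch below only builds the request; browserLikeRequest is a hypothetical helper, and the header values are example strings, not values any site requires. On its own, this won't get past protections like Cloudflare:

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking
#endif

// Hypothetical helper: builds a GET request with a browser-like
// User-Agent and Accept-Language. The values are illustrative only.
func browserLikeRequest(for url: URL) -> URLRequest {
    var request = URLRequest(url: url)
    request.httpMethod = "GET"
    request.setValue(
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
        forHTTPHeaderField: "User-Agent")
    request.setValue("en-US,en;q=0.9", forHTTPHeaderField: "Accept-Language")
    return request
}
```

To send it, pass the request to URLSession.shared.dataTask(with:completionHandler:).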

To see Cloudflare's protection in action, try to retrieve the HTML code of a page it protects, such as this G2.com page:

import Foundation

#if canImport(FoundationNetworking)

import FoundationNetworking

#endif

import SwiftSoup

// connect to the target site and

// retrieve the HTML content from it

let response = URL(string: "https://www.g2.com/products/zapier/reviews")!

let html = try String(contentsOf: response)

// parse the HTML content

let document = try SwiftSoup.parse(html)

// print the source HTML

print(try document.outerHtml())

The above Swift web scraping script fails with the following error:

Swift/ErrorType.swift:200: Fatal error: Error raised at top level: Error Domain=NSCocoaErrorDomain Code=256 "(null)"

Current stack trace:

0 (null) 0x00007ff91cbfb430 swift_stdlib_reportFatalErrorInFile + 132

That happens because Cloudflare detects your script as a bot and responds with a 403 Forbidden error page instead of the actual content.
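An uncaught error at top level crashes the whole script. If you'd rather log the failure and move on, for example to skip a blocked page while crawling, wrap the download in do/catch. Here is a minimal sketch; loadHTML is a hypothetical helper:

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking
#endif

// Hypothetical helper: returns the page content, or nil if loading fails,
// instead of stopping the script with "Error raised at top level".
func loadHTML(from url: URL) -> String? {
    do {
        return try String(contentsOf: url, encoding: .utf8)
    } catch {
        // Report the failure and let the caller decide what to do next.
        print("Failed to load \(url.absoluteString): \(error)")
        return nil
    }
}
```

In the crawler, you could skip the current iteration with `guard let html = loadHTML(from: response) else { continue }`.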

Time to give up? Of course not! You just need the right tool, and the best one on the market is ZenRows. As a next-generation scraping API, it provides a complete anti-bot toolkit to avoid blocks, along with IP and User-Agent rotation.

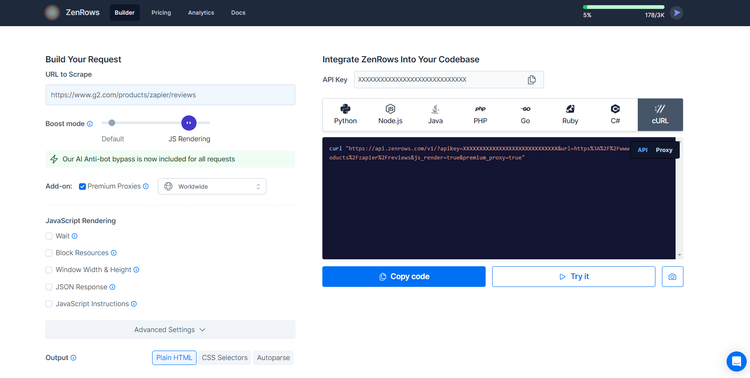

Combine ZenRows with SwiftSoup for maximum effectiveness. Sign up for free to get your first 1,000 credits, and then visit the Request Builder page:

Suppose you want to extract data from the Cloudflare-protected G2.com page used before. You can do so by following these steps:

- Paste the target URL (https://www.g2.com/products/zapier/reviews) into the "URL to Scrape" input.

- Click on "Premium Proxy" to enable IP rotation.

- Enable the "JS Rendering" feature (User-Agent rotation and the AI-powered anti-bot toolkit are included by default).

- Select the “cURL” option on the right and then the “API” mode to get the full URL of the ZenRows API.

Pass the generated URL to URL(string:) in place of the original target URL:

import Foundation

#if canImport(FoundationNetworking)

import FoundationNetworking

#endif

import SwiftSoup

// connect to the target site through ZenRows and

// retrieve the HTML content from it

// (replace <YOUR_ZENROWS_API_KEY> with your actual API key)

let response = URL(string: "https://api.zenrows.com/v1/?apikey=<YOUR_ZENROWS_API_KEY>&url=https%3A%2F%2Fwww.g2.com%2Fproducts%2Fzapier%2Freviews&js_render=true&premium_proxy=true")!

let html = try String(contentsOf: response)

// parse the HTML content

let document = try SwiftSoup.parse(html)

// print the source HTML

print(try document.outerHtml())

Run your script again. This time, it'll output the source HTML code of the desired G2 page:

<!DOCTYPE html>

<head>

<meta charset="utf-8" />

<link href="https://www.g2.com/assets/favicon-fdacc4208a68e8ae57a80bf869d155829f2400fa7dd128b9c9e60f07795c4915.ico" rel="shortcut icon" type="image/x-icon" />

<title>Zapier Reviews 2024: Details, Pricing, & Features | G2</title>

<!-- omitted for brevity ... -->

Wow! Bye-bye fatal errors. You just learned how to use ZenRows for web scraping in Swift.
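As the generated URL shows, the target page travels percent-encoded in the url query parameter, next to apikey, js_render, and premium_proxy. If you want to scrape several pages through ZenRows, you could build that URL in code instead of copying it each time. Here is a minimal sketch; zenRowsURL is a hypothetical helper that reproduces only the parameters visible in the generated URL, so check the ZenRows documentation for the others:

```swift
import Foundation

// Hypothetical helper: builds a ZenRows API URL with the same query
// parameters that appear in the URL generated by the Request Builder.
func zenRowsURL(apiKey: String, target: String,
                jsRender: Bool = true, premiumProxy: Bool = true) -> URL? {
    // Only RFC 3986 "unreserved" characters stay as-is; everything else,
    // including ":" and "/", is percent-encoded.
    let unreserved = CharacterSet(charactersIn:
        "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789-._~")
    guard let key = apiKey.addingPercentEncoding(withAllowedCharacters: unreserved),
          let encodedTarget = target.addingPercentEncoding(withAllowedCharacters: unreserved)
    else { return nil }

    var query = "apikey=\(key)&url=\(encodedTarget)"
    if jsRender { query += "&js_render=true" }
    if premiumProxy { query += "&premium_proxy=true" }
    return URL(string: "https://api.zenrows.com/v1/?" + query)
}
```

Encoding ":" and "/" too reproduces the https%3A%2F%2F form seen in the generated URL and keeps characters like "&" in a target URL from being read as extra ZenRows parameters.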

Conclusion

This guided tutorial showed you how to do web scraping in Swift. You first learned the fundamentals and then explored more advanced aspects. You've become a Swift web scraping hero!

You now know that Swift is a viable language for web scraping and which libraries to use for it. SwiftSoup is one of the best packages for scraping static pages, while swift-webdriver is great for pages with dynamic content.

The problem is that anti-scraping measures can stop your script. The solution is ZenRows, a scraping API with the most effective anti-bot bypass capabilities. Extracting online data from any web page has never been easier!
