Did you get blocked by a website while using cURL? One of the most effective ways to avoid that is to route your requests through a proxy server, so the website sees the proxy's IP address instead of yours and has a harder time flagging and blocking your traffic.

In this tutorial, you'll learn the step-by-step process of using a cURL proxy and the best practices and protocols to consider when web scraping.

Let's begin!

What Is a Proxy in cURL?

A cURL proxy is a server that acts as an intermediary between the client and the destination server, letting you access resources with more anonymity and get around some network restrictions.

Here's how it works:

- First, the client sends a request to the proxy server.

- Next, the proxy server forwards it to the destination server.

- The response from the destination server is returned to the proxy server.

- Finally, the proxy forwards the response to the client.

How Do I Use a Proxy with cURL?

Let's see how you can use a cURL proxy server to send and receive data over the internet.

cURL Syntax

Before we begin, let's go over the placeholders in cURL's basic proxy syntax:

- PROTOCOL: The protocol used to communicate with the proxy server: http, https, socks4, socks4a, socks5, or socks5h. If you omit it, cURL assumes http.

- HOST: The proxy server's hostname or IP address.

- PORT: The port number the proxy server listens on. If you omit it, cURL assumes 1080.

- URL: The URL of the target website the proxy server will communicate with.

curl --proxy <PROTOCOL>://<HOST>:<PORT> <URL>
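cURL fills in defaults when parts of the proxy string are missing: no scheme means http://, and no port means 1080. The helper below is a hypothetical sketch (build_proxy_url is not a cURL feature) that assembles the proxy string with those same defaults, which can be handy when a script reads the host, port, and protocol from separate settings.

```shell
# Build a proxy string for --proxy/-x, applying the same defaults cURL uses
# when parts are omitted: http:// as the scheme and port 1080.
# build_proxy_url is a hypothetical helper, not part of cURL.
build_proxy_url() {
  local scheme="${1:-http}"   # http, https, socks4, socks4a, socks5 or socks5h
  local host="$2"             # hostname or IP address of the proxy
  local port="${3:-1080}"     # port the proxy listens on
  if [ -z "$host" ]; then
    echo "build_proxy_url: missing host" >&2
    return 1
  fi
  printf '%s://%s:%s\n' "$scheme" "$host" "$port"
}

# Example with a sample proxy address
build_proxy_url http 144.76.60.58 8118
```

Usage (needs network): curl --proxy "$(build_proxy_url http 144.76.60.58 8118)" https://httpbin.org/ip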

Set Up a Proxy with cURL

Here's what you need to do to set up a proxy with cURL:

Start by replacing <PROTOCOL>://<HOST>:<PORT> with your proxy server's protocol, address, and port, and <URL> with https://httpbin.org/ip, a testing page that returns the IP address the request came from. You can find many free proxies online.

Next, open a Terminal or Command Prompt on your machine, and run the following command to make a request with a proxy:

curl --proxy "http://144.76.60.58:8118" "https://httpbin.org/ip"

The response should be a JSON payload whose origin field contains the IP address of the proxy server rather than your own.

How to Extract Data

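Consider the cURL proxy example above, which returned a JSON object with an origin field. To extract that field's value, pipe the output of the same command to jq. Here, -x is the short form of --proxy, and -s hides the progress meter cURL would otherwise print while its output is piped:

curl -s -x "http://144.76.60.58:8118" "https://httpbin.org/ip" | jq ".origin"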

Ensure jq is installed on your machine before running it.

The output is the actual value of the origin field, which is the IP address returned in the response in this case.
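If you reuse this extraction in scripts, a small function keeps it in one place. The sketch below (extract_origin is a hypothetical name) prefers jq and uses -r so the value prints without JSON quotes. The sed fallback is an assumption that only handles simple httpbin-style payloads and is not a general JSON parser.

```shell
# Print the "origin" field of an httpbin-style JSON payload read from stdin.
extract_origin() {
  if command -v jq >/dev/null 2>&1; then
    jq -r '.origin'   # -r prints the raw string without the JSON quotes
  else
    # Fallback for machines without jq: works only for this flat payload
    sed -n 's/.*"origin"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
  fi
}

# Offline check with a sample payload of the same shape httpbin returns
printf '%s\n' '{"origin": "144.76.60.58"}' | extract_origin
```

Real usage (needs network): curl -s -x "http://144.76.60.58:8118" "https://httpbin.org/ip" | extract_origin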

Proxy Authentication with cURL: Username & Password

Some proxy servers have security measures in place to prevent unauthorized access and require a username and password to access the proxy.

cURL supports proxy authentication, allowing web scrapers to access these proxy servers while still respecting their security measures.

Here's how to connect to a URL using cURL with an authenticated proxy.

To begin, you'll need to provide the username and password for the proxy server using the --proxy-user option.

For example, let's say you want to connect to a proxy server at http://proxy-url.com:8080 that requires authentication with the username user and the password pass. The CLI command that performs the operation is as follows:

curl --proxy http://proxy-url.com:8080 --proxy-user user:pass http://target-url.com/api

This command will use the provided username and password for authentication to send the HTTP request to the target URL via the specified proxy.

You don't have to add the Proxy-Authorization header yourself: --proxy-user already sends it, using Basic authentication by default. If you need to set the header manually, the --proxy-header option sends it to the proxy. For Basic authentication, the value is the base64 encoding of user:pass:

curl --proxy http://proxy-url.com:8080 --proxy-header "Proxy-Authorization: Basic dXNlcjpwYXNz" http://target-url.com/api
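To produce the Basic value for your own credentials, base64-encode username:password with no trailing newline. The sketch below uses a hypothetical helper name, proxy_basic_value, and placeholder credentials; base64 --decode reverses the process so you can check which credentials an existing header carries.

```shell
# Build the value for "Proxy-Authorization: Basic <value>",
# which is the base64 encoding of "username:password".
proxy_basic_value() {
  # printf adds no trailing newline; tr removes any line wrapping base64 adds
  printf '%s:%s' "$1" "$2" | base64 | tr -d '\n'
}

proxy_basic_value user pass; echo

# Decode an existing value to see which credentials it carries
printf '%s' 'dXNlcjpwYXNz' | base64 --decode; echo
```

A header whose credentials don't match what the proxy expects is rejected, so decoding is a quick way to debug authentication errors.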

cURL Best Practices

Next, let's look at some best practices for using a proxy with cURL.

Environment Variables for a cURL Proxy

Environment variables are important for a cURL proxy because they allow you to set proxy server URLs, usernames, and passwords as variables that can be accessed by cURL commands instead of manually entering the values each time. That saves time and effort and makes managing multiple proxies for different tasks easier.

To use cURL proxy environment variables, follow these steps:

First, in your terminal, set the proxy server URL, username, and password as environment variables using the export command. Replace <username> and <password> with your proxy's credentials, or omit them (along with the @) if the proxy doesn't require authentication. The scheme in each value describes how cURL talks to the proxy, not to the target site, so most proxies use http:// in both variables:

export http_proxy=http://<username>:<password>@proxy-url.com:8080

export https_proxy=http://<username>:<password>@proxy-url.com:8080

If you're using the Windows Command Prompt, run these commands instead:

set http_proxy=http://<username>:<password>@proxy-url.com:8080

set https_proxy=http://<username>:<password>@proxy-url.com:8080
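If your username or password contains reserved characters such as @, :, or /, percent-encode them inside the proxy URL (for example, @ becomes %40); cURL decodes them before authenticating. Below is a hypothetical POSIX-shell helper (urlencode is not a built-in command). It assumes ASCII input and would not encode multibyte UTF-8 characters correctly.

```shell
# Percent-encode a string for use in the user:password part of a proxy URL.
urlencode() {
  local s="$1" out="" rest c
  while [ -n "$s" ]; do
    rest="${s#?}"          # everything except the first character
    c="${s%"$rest"}"       # the first character
    s="$rest"
    case "$c" in
      [A-Za-z0-9._~-]) out="$out$c" ;;                  # unreserved: keep as-is
      *) out="$out$(printf '%%%02X' "'$c")" ;;          # anything else: %XX
    esac
  done
  printf '%s\n' "$out"
}

proxy_user="user"
proxy_pass="p@ss:word"   # placeholder password with reserved characters
printf 'http://%s:%s@proxy-url.com:8080\n' "$(urlencode "$proxy_user")" "$(urlencode "$proxy_pass")"
```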

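Next, run cURL as usual. It reads these variables automatically, so no extra option is needed (note that cURL only recognizes http_proxy in lowercase, while https_proxy works in either case):

curl https://httpbin.org/ip

You can also pass the variable explicitly by referencing it with the $ symbol:

curl -x "$http_proxy" https://httpbin.org/ip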
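Exporting keeps the proxy active for every later command in the session. To scope it to a single call, you can put the assignments right before the command instead. The sketch below wraps that in a hypothetical helper, with_proxy; the proxy URL in the usage line is a placeholder.

```shell
# Run one command with the proxy variables set only for that command,
# instead of exporting them for the whole shell session.
with_proxy() {
  local proxy="$1"
  shift
  # Assignments placed before a command name apply only to that invocation
  # when the command is an external program such as curl.
  http_proxy="$proxy" https_proxy="$proxy" "$@"
}
```

Usage (needs network): with_proxy "http://proxy-url.com:8080" curl https://httpbin.org/ip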

Create an Alias

Shell aliases help simplify repeated or complex cURL requests. An alias is a shortcut for a specific command with certain options and parameters, so you can run it again later without remembering or retyping all the details. That saves time and reduces the risk of errors.

Aliases also make commands more readable, especially for users less familiar with cURL's syntax and options. To create one, use the alias command in your terminal. For example, running alias ll="ls -l" makes ll a shortcut for ls -l.

Here's how to automatically use the proxy server and credentials specified in your environment variable, saving you the trouble of typing out the full command each time:

Start by opening your shell's configuration file, such as .bashrc or .zshrc, in a text editor. It lives in your home directory: /Users/<username> on macOS, /home/<username> on Linux, or C:\Users\<username> if you use a Bash shell such as Git Bash on Windows. Create the file there if it doesn't exist.

The next step is to add the following snippet to the file to create an alias. In this case, curlproxy is the alias's name, and $http_proxy used in the snippet below is the environment variable we created in the previous section. You can also customize the alias name to your preference.

alias curlproxy='curl --proxy "$http_proxy"'

After saving the file, reload it with source ~/.bashrc (or source ~/.zshrc) or open a new terminal. Then use the curlproxy alias followed by the URL you want to reach through the proxy. For example, to connect to https://httpbin.org/ip via the proxy, run:

curlproxy https://httpbin.org/ip
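If http_proxy happens to be empty, the alias silently sends requests without a proxy. A shell function can do the same job and refuse to run in that case. This is a sketch you could put in .bashrc instead of the alias; remove the alias first, because bash would otherwise expand an existing alias named curlproxy inside the function definition.

```shell
# Function version of the curlproxy alias
curlproxy() {
  # Refuse to run without a proxy so requests never silently go out directly
  if [ -z "${http_proxy:-}" ]; then
    echo "curlproxy: http_proxy is not set" >&2
    return 1
  fi
  # "$@" forwards every argument (URL and extra options) unchanged
  curl --proxy "$http_proxy" "$@"
}
```

It's used exactly like the alias, for example curlproxy -s https://httpbin.org/ip.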

Use a .curlrc File for a Better Proxy Setup

The .curlrc file is a text file containing command-line options that cURL applies every time you run it. Storing your settings there, including the proxy configuration, makes your commands shorter and easier to manage.

To use a .curlrc file for cURL with proxy, do this:

- Create a new file called .curlrc in your home directory.

- Add the following line to the file to set your proxy server URL, then save it:

proxy = http://proxy-url.com:8080

- If the proxy requires a username and password, include them in the URL as shown below:

proxy = http://user:pass@proxy-url.com:8080

- Run a plain cURL command to connect to https://httpbin.org/ip via the proxy you set up in the .curlrc file:

curl https://httpbin.org/ip
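Because .curlrc applies to every cURL command you run, a separate config file can be handy when only some commands should use the proxy. cURL reads such a file with -K (--config), using the same one-option-per-line syntax, in addition to .curlrc; putting -q (--disable) as the very first argument skips .curlrc instead. The sketch below writes a temporary config file; the credentials are placeholders.

```shell
# Write a per-project cURL config file to a temporary path
cfg="$(mktemp)"   # mktemp creates the file readable and writable only by you
cat > "$cfg" <<'EOF'
# Same syntax as .curlrc: one option per line, without the leading dashes
proxy = "http://user:pass@proxy-url.com:8080"
EOF

cat "$cfg"
# Usage (needs network):
#   curl -K "$cfg" https://httpbin.org/ip   # uses this file's proxy
#   curl -q https://httpbin.org/ip          # -q first: ignore ~/.curlrc
```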

Use a Rotating Proxy with cURL

Rotating proxies are important for web scraping because changing the IP address for each request helps you avoid IP-based blocks.

Let's see how to do that with cURL, first with a free solution and then with a premium one, and why the premium option matters.

Rotate IPs with a Free Solution

In this example, we'll use a free provider to set up rotating proxies with cURL.

To begin, go to Free Proxy List to get a list of free proxy IP addresses. Note the IP address, port, and authentication credentials (if any) of each proxy you want to use.

Next, replace username, password, ipaddress, and port with the values of each proxy and save them in a plain text file, one per line (for example, proxies.txt). Don't add them as several proxy lines in the .curlrc file you created above: cURL has no built-in rotation, and when the same option appears more than once, only the last one takes effect.

http://<username>:<password>@<ipaddress>:<port>

http://<username>:<password>@<ipaddress>:<port>

http://<username>:<password>@<ipaddress>:<port>

Finally, test the rotation by picking a random proxy from the file for each request. On Linux, shuf (part of GNU coreutils) can do the picking:

curl -x "$(shuf -n 1 proxies.txt)" https://httpbin.org/ip

The output should display the IP address of whichever proxy was picked, for example:

{"origin": "162.240.76.92"}
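Since cURL doesn't rotate proxies on its own, rotation has to happen in the script that calls it. Here's a round-robin sketch, assuming a list file with one proxy URL per line; pick_proxy and fetch_rotating are hypothetical helper names.

```shell
# Round-robin rotation: request number i goes through proxy (i mod N).

# Print the proxy for request number $2 (0-based) from list file $1
pick_proxy() {
  local count
  count=$(grep -c . "$1" || true)      # number of non-empty lines
  [ "$count" -gt 0 ] || return 1       # empty list: nothing to rotate
  grep . "$1" | sed -n "$(( $2 % count + 1 ))p"
}

# Fetch each URL given after the list file through the next proxy in turn
fetch_rotating() {
  local list="$1" i=0 url proxy
  shift
  for url in "$@"; do
    proxy=$(pick_proxy "$list" "$i") || return 1
    curl -s -x "$proxy" "$url"
    i=$((i + 1))
  done
}
```

For example, fetch_rotating proxies.txt https://httpbin.org/ip https://httpbin.org/ip sends the two requests through the first two proxies in the file. Round-robin spreads requests evenly across the list; picking at random works just as well.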

Premium Proxy to Avoid Getting Blocked

While a free rotating proxy solution can be an effective way to scrape websites without being detected, it may not always be reliable. If you require more stability and faster connection speeds, a premium proxy service may be a better option to avoid anti-bots like Cloudflare with cURL. You should consider an effective and affordable solution like ZenRows. It comes with added benefits like geolocation optimization, flexible pricing starting at $49/mo, and paying for successful requests only.

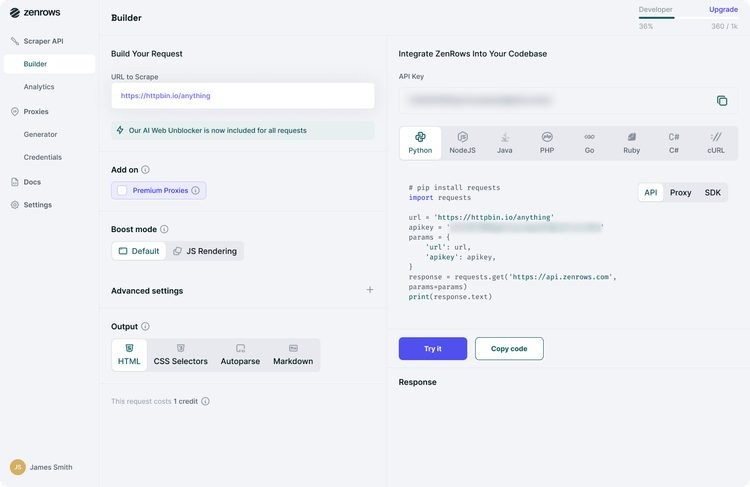

To use ZenRows cURL proxies, start by creating an account to get your API key with 1,000 free credits. Once on the Request Builder page, paste https://coingecko.com as a target URL, select cURL and copy the code you'll get.

The generated command looks like this (-L makes cURL follow redirects, and -k skips TLS certificate verification):

curl -L -x "http://<your-api-key>:@proxy.zenrows.com:8001" -k "https://coingecko.com"

Great! Sending the request returns the HTML of the target page.

Ignore Proxies with --noproxy if Needed

When developing your scrapers locally, you may want to bypass proxy servers to check that the script itself works correctly. This exposes your local IP, but it lets you confirm that the script runs properly.

So, let's see how you can use the curl --noproxy option to achieve that.

Start by identifying the hosts or domains for which you want to ignore proxies. For example, to ignore them for www.httpbin.org, run this command in your terminal:

curl --noproxy httpbin.org https://www.httpbin.org/ip

The --noproxy option takes a comma-separated list of hosts or domains that cURL should reach directly instead of through the proxy. A domain in the list also matches its subdomains, so httpbin.org covers www.httpbin.org, and a single * disables the proxy for every host. This is useful when a proxy is configured through environment variables or your .curlrc file, which cURL otherwise applies to every request.
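To make such exceptions permanent, cURL also reads the no_proxy environment variable (NO_PROXY works too), which uses the same comma-separated format; a --noproxy option on the command line overrides it. The host list below is only an example.

```shell
# Hosts cURL should reach directly, even when http_proxy/https_proxy are set
export no_proxy="localhost,127.0.0.1,httpbin.org"
printf '%s\n' "$no_proxy"
```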

Proxies and Protocols that Work Best

The choice of a cURL proxy protocol and proxy type can significantly impact your network communication's performance and reliability.

Let's look at the most efficient options!

Best cURL Proxy Types

Here are some popular proxies for cURL web scraping:

- Residential: These proxies use IP addresses associated with real residential locations. That makes them less likely to be detected and blocked by anti-bot systems.

- Datacenter: These proxies use IP addresses from data centers rather than from an internet service provider (ISP). They're widely used in web scraping because they're fast, inexpensive, and hide your own IP address.

- 4G proxy: A mobile proxy server that routes internet traffic through a 4G LTE connection. Mobile proxies are typically more expensive than datacenter proxies but offer higher anonymity and better reliability.

Learn more about the different types of web scraping proxies from our detailed tutorial.

Protocols

Now, let's see the most popular protocols that cURL supports:

- HTTP: Hypertext Transfer Protocol, the foundation of data communication on the web.

- HTTPS: HTTP with an added layer of security through encryption (SSL/TLS).

- FTP: File Transfer Protocol, used for transferring files between servers and clients over the internet.

- FTPS: FTP with an added layer of security through encryption (SSL/TLS).

- LDAP: Lightweight Directory Access Protocol, an open, vendor-neutral, industry standard application protocol for accessing and maintaining distributed directory information services over an Internet Protocol (IP) network.

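- LDAPS: LDAP with an added layer of security through encryption (SSL/TLS).

- SOCKS: A lower-level proxy protocol that relays any kind of TCP traffic. cURL supports it through the socks4://, socks4a://, socks5://, and socks5h:// proxy schemes.

Of these, only HTTP, HTTPS, and SOCKS can be used to talk to a proxy server in cURL, which makes them the most relevant protocols for web scraping.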
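To see what your own cURL build supports, check curl --version: the Protocols line lists the transfer protocols, and the Features line includes HTTPS-proxy when the build can use an https:// proxy. SOCKS doesn't appear in the Protocols line. This is a small sketch; the exact output varies between builds.

```shell
# Show the transfer protocols this cURL build was compiled with
curl --version | sed -n 's/^Protocols: //p'

# An https:// proxy (TLS between you and the proxy) needs the HTTPS-proxy feature
if curl --version | grep -q 'HTTPS-proxy'; then
  echo "HTTPS proxies: supported"
else
  echo "HTTPS proxies: not supported by this build"
fi
```

For a SOCKS proxy, put the scheme in the proxy string, for example curl --proxy socks5h://<HOST>:<PORT> https://httpbin.org/ip; the h in socks5h makes the proxy resolve the target hostname instead of your machine.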

Conclusion

Using a cURL proxy can greatly enhance your web scraping capabilities: it helps you avoid IP blocks and access geographically restricted content. Two best practices to keep in mind are rotating proxies and setting up environment variables.

Yet, free proxies aren't reliable, so you might want to consider a premium proxy provider. ZenRows offers a rotating service with residential proxies in any country you choose, it's significantly more affordable than traditional alternatives, and it comes with additional anti-bot bypass features. Sign up and get 1,000 free credits.

Frequent Questions

How to Set a Proxy in the cURL Command?

To set a proxy in a cURL command, use the -x or --proxy option followed by the proxy server URL. For example, curl -x http://proxy-url.com:8080 https://target-url.com will use the HTTP proxy server at http://proxy-url.com:8080 to access https://target-url.com.

What Is the Default Proxy Port for cURL?

The default proxy port for cURL is 1080: if the proxy string doesn't include a port, cURL uses 1080. Since proxy servers often listen on other ports, check with your proxy provider and include the port explicitly.

How Do I Know If cURL Is Using a Proxy?

You can check if cURL is using a proxy with the -v option in your cURL command. It'll display the verbose output where you can see the request's details. You'll see the proxy server and port number listed in the output if a proxy is used.

How to Bypass a Proxy in cURL Command?

To bypass a proxy in a cURL command, use the --noproxy option, followed by a comma-separated list of hosts or domains you want to exclude from the proxy. For example, curl --noproxy localhost,target-url.com https://www.target-url.com connects to www.target-url.com directly, because target-url.com also matches its subdomains, while any other host would still go through the proxy.

How Do I Make cURL Ignore the Proxy?

Use the --noproxy option with an asterisk to bypass the proxy for every host: curl --noproxy "*" https://target-url.com. This ignores proxies set in environment variables or the .curlrc file for that command. Alternatively, pass an empty string to the proxy option, as in curl --proxy "" https://target-url.com, which also overrides proxy environment variables.
