Websites employ multiple techniques to restrict non-human traffic, and analyzing the User Agent string provided in the HTTP request header is one of them.

In this article, you'll learn how to set and randomize a new cURL User Agent to avoid getting blocked while web scraping.

Let's dive in!

What Is the cURL User-Agent?

When you send an HTTP request with cURL, it includes a User Agent (UA) string in the request headers that identifies the client to the website.

The string names the software, device, or application making the request and typically includes details such as the application name, version, and operating system.

Here is what a typical UA looks like:

Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/94.0.4606.81 Safari/537.36

From this example, we can tell that the user is running Chrome version 94.0.4606.81 on 64-bit Windows 10 (Windows 11 reports the same Windows NT 10.0 token), among other details.
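As a rough illustration, the same details can be pulled out of the string in Bash. This is a minimal sketch that assumes the UA follows the common browser layout shown above; the variable names are made up for the example.

```shell
#!/bin/bash
ua="Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/94.0.4606.81 Safari/537.36"

# Platform details: the text inside the first pair of parentheses.
platform=${ua#*"("}
platform=${platform%%")"*}

# Browser version: the token that follows "Chrome/".
chrome_re='Chrome/([0-9.]+)'
chrome_version=""
if [[ $ua =~ $chrome_re ]]; then
  chrome_version="${BASH_REMATCH[1]}"
fi

echo "Platform: $platform"
echo "Chrome version: $chrome_version"
```

Real anti-bot systems parse UAs far more thoroughly, so treat this only as a way to see how the string is structured.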

Does cURL Add a User-Agent?

When you send a request with cURL without explicitly setting a User Agent, cURL supplies a generic one by default, typically something like User-Agent: curl/7.79.1. The exact value may vary based on your cURL version and platform.
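To see which value your installation would send, you can derive it from the output of curl --version. The sketch below assumes the stock curl/<version> format (some builds may differ), and default_curl_ua is a hypothetical helper name.

```shell
#!/bin/bash
# Hypothetical helper: reads `curl --version` output on stdin and prints
# the default User-Agent, assuming the stock "curl/<version>" format.
default_curl_ua() {
  # The first line looks like: "curl 7.79.1 (x86_64-pc-linux-gnu) libcurl/7.79.1 ..."
  awk 'NR == 1 { print "curl/" $2 }'
}

# Usage (needs curl installed):
#   curl --version | default_curl_ua
```

Sending a plain request to a header-echoing endpoint such as https://httpbin.org/headers should show the same value in the User-Agent field.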

However, sticking with the default cURL User-Agent makes it easy for websites to detect that the request comes from an automated script. That can get your scraper flagged or blocked, so changing the default UA is essential to reduce the risk.

How to Change the User Agent in cURL

This section will show how to change and set a cURL User Agent. You'll also learn how to randomize the User Agent to simulate different users.

How to Set a Custom User Agent in cURL

To change the User-Agent (UA) in cURL, you can use the -A or --user-agent option followed by the desired User-Agent string. Here's a step-by-step guide:

The first step is to get a User Agent string. You can grab some from our list of User Agents.

The next step is to set the new user agent by including the -A or --user-agent option followed by the desired UA string in your cURL command. Here's the syntax:

curl -A "<User-Agent>" <URL>

curl --user-agent "<User-Agent>" <URL>

Replace <User-Agent> with the User-Agent string you want to use and <URL> with the target URL.

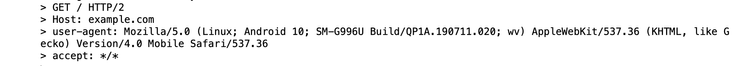

For example, let's say you want to use Mozilla/5.0 (Linux; Android 10; SM-G996U Build/QP1A.190711.020; wv) AppleWebKit/537.36 (KHTML, like Gecko) Version/4.0 Mobile Safari/537.36 as the UA, and the website you want to scrape is https://example.com/. The cURL command would be:

curl -A "Mozilla/5.0 (Linux; Android 10; SM-G996U Build/QP1A.190711.020; wv) AppleWebKit/537.36 (KHTML, like Gecko) Version/4.0 Mobile Safari/537.36" https://example.com/

That's it! Your User-Agent will be modified once you run the command.
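In a script, it can be convenient to keep the UA in a variable and wrap the call in a function. The sketch below uses hypothetical helper names (fetch_with_ua and fetch_with_ua_header); the second one sets the same header through cURL's generic -H option, an alternative the steps above don't cover.

```shell
#!/bin/bash
# Hypothetical helper: fetch a URL while sending the given User-Agent.
fetch_with_ua() {
  local user_agent="$1" url="$2"
  # -s hides the progress meter; -A sets the User-Agent header.
  curl -s -A "$user_agent" "$url"
}

# Same idea, but setting the header explicitly with -H.
fetch_with_ua_header() {
  local user_agent="$1" url="$2"
  curl -s -H "User-Agent: $user_agent" "$url"
}

# Usage (needs network access):
#   fetch_with_ua "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36" "https://example.com/"
```

Quoting "$user_agent" matters here, because UA strings contain spaces, semicolons, and parentheses that the shell would otherwise interpret.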

You can verify the change by making a request to What Is My Browser, which displays the UA it received. Here's what the result looks like:
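If you'd rather check from the terminal, one option is https://httpbin.org/headers, which echoes the request headers back as JSON. This sketch assumes that response shape, and extract_user_agent is a hypothetical helper.

```shell
#!/bin/bash
# Hypothetical helper: reads an httpbin-style JSON response on stdin and
# prints the value of its "User-Agent" field.
extract_user_agent() {
  sed -n 's/.*"User-Agent": *"\([^"]*\)".*/\1/p'
}

# Usage (needs network access):
#   curl -s -A "my-test-agent/1.0" https://httpbin.org/headers | extract_user_agent
```

A proper JSON parser would be more robust; sed is used here only to keep the example dependency-free.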

Get a Random User-Agent in cURL

Changing your cURL User Agent is a good first step to avoid detection when making multiple requests to a website. However, it's not enough on its own. You should also randomize your User Agents to make your requests look more natural. This will help you avoid being blocked by the website.

To rotate the cURL User-Agent, create a list first. We'll take examples from our list of User-Agents. Here are the first few lines of code:

#!/bin/bash

user_agent_list=(

"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36"

"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36"

"Mozilla/5.0 (Macintosh; Intel Mac OS X 13_1) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15"

)

The next step is to specify the website we want to scrape. In this tutorial, we'll make requests to https://httpbin.org/headers, which echoes back the request headers. Then, we'll create a loop that uses Bash's RANDOM variable to generate a random number and uses it to pick a User-Agent from our list. Finally, the -A option sets the User-Agent header to the selected value.

url="https://httpbin.org/headers"

for i in {1..3}

do

random_index=$(( RANDOM % ${#user_agent_list[@]} ))

user_agent="${user_agent_list[random_index]}"

curl -A "$user_agent" "$url" -w "\n"

done

That's it! We have now successfully rotated the UAs in each round of our requests.

Here is what the full code looks like:

#!/bin/bash

user_agent_list=(

"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36"

"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36"

"Mozilla/5.0 (Macintosh; Intel Mac OS X 13_1) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15"

)

url="https://httpbin.org/headers"

for i in {1..3}

do

random_index=$(( RANDOM % ${#user_agent_list[@]} ))

user_agent="${user_agent_list[random_index]}"

curl -A "$user_agent" "$url" -w "\n"

done

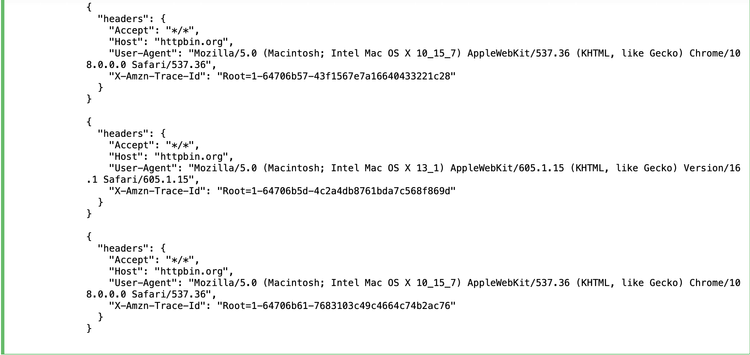

And this is the result:
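As the list grows, it may be easier to keep the UAs in a text file, one per line. The sketch below assumes a hypothetical file named user_agents.txt and two helper functions that are not part of the script above.

```shell
#!/bin/bash
# Hypothetical helper: load one User-Agent per line from a file into the
# global array user_agent_list, skipping blank lines.
load_user_agents() {
  local file="$1" line
  user_agent_list=()
  while IFS= read -r line || [[ -n $line ]]; do
    if [[ -n $line ]]; then
      user_agent_list+=("$line")
    fi
  done < "$file"
}

# Hypothetical helper: store one random entry in picked_user_agent.
# Fails if the list is empty.
pick_random_user_agent() {
  local count=${#user_agent_list[@]}
  (( count > 0 )) || return 1
  picked_user_agent="${user_agent_list[RANDOM % count]}"
}

# Usage (needs network access):
#   load_user_agents user_agents.txt
#   pick_random_user_agent && curl -A "$picked_user_agent" "https://httpbin.org/headers"
```

The picker writes to a variable instead of printing, so it can be called in a loop without spawning a subshell for each request.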

How to Change the User Agent at Scale

When web scraping, it's crucial to construct and manage UAs properly. Websites often keep track of the popular User-Agent strings associated with different browsers and devices. If you use a User-Agent string that is outdated, non-standard, or uncommon, it can raise suspicions and trigger anti-bot measures.

It's important to use reliable and up-to-date UAs, and to rotate them regularly, to avoid these problems.
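One way to keep a list fresh is to drop entries whose browser version has fallen behind. The sketch below only looks at Chrome UAs, and both the helper name and the version cutoff are arbitrary assumptions for illustration; what counts as outdated depends on current browser releases.

```shell
#!/bin/bash
# Hypothetical helper: succeed if the UA advertises Chrome with a major
# version of at least min_major. Non-Chrome UAs fail the check.
is_recent_chrome() {
  local ua="$1" min_major="$2"
  local chrome_re='Chrome/([0-9]+)\.'
  [[ $ua =~ $chrome_re ]] || return 1
  (( ${BASH_REMATCH[1]} >= min_major ))
}

user_agent_list=(
  "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36"
  "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/94.0.4606.81 Safari/537.36"
)

fresh_list=()
for ua in "${user_agent_list[@]}"; do
  # 100 is an arbitrary cutoff chosen for this example.
  if is_recent_chrome "$ua" 100; then
    fresh_list+=("$ua")
  fi
done

echo "Kept ${#fresh_list[@]} of ${#user_agent_list[@]} User-Agents"
```

With the two sample entries, only the Chrome 109 string passes the cutoff.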

Beyond User Agent analysis, websites employ many other techniques to detect and deter scraping. Common anti-scraping challenges include CAPTCHAs, rate limiting, and IP blocking. Take a look at our guide about anti-scraping techniques to learn more.
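A scraper can at least notice when such measures kick in by checking the HTTP status code of each response. The sketch below treats 403 and 429 as block signals, which is a common but not universal convention, and the helper names are hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: succeed if the status code commonly signals blocking.
# 403 (Forbidden) and 429 (Too Many Requests) are assumptions; sites vary.
looks_blocked() {
  case "$1" in
    403|429) return 0 ;;
    *) return 1 ;;
  esac
}

# Hypothetical helper: print only the HTTP status code of a request.
status_for() {
  local user_agent="$1" url="$2"
  # -o /dev/null discards the body; -w "%{http_code}" prints the status code.
  curl -s -o /dev/null -w "%{http_code}" -A "$user_agent" "$url"
}

# Usage (needs network access):
#   code="$(status_for "$user_agent" "https://httpbin.org/headers")"
#   if looks_blocked "$code"; then echo "Possibly blocked ($code)"; fi
```

Some sites return 200 with a challenge page instead, so a status check like this catches only the most explicit blocks.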

Rotating User Agents in cURL by hand becomes tedious at scale, and it's far from enough on its own given the many other anti-bot measures. ZenRows, a cloud-based web scraping API that complements cURL, makes this easier.

It offers a wide range of UAs and automates their rotation. It also provides an anti-bot bypass toolkit with premium proxies and other advanced features. With ZenRows, you can streamline the entire web scraping process and focus on the data you need.

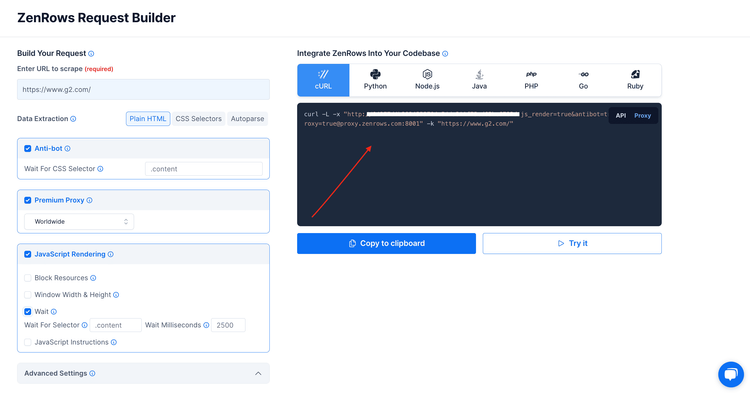

To get started, sign up, and you'll get to the Request Builder page. Once there, paste your target URL (for example, https://www.g2.com) of the website you want to scrape. We also recommend activating the anti-bot, premium proxies, and JavaScript rendering features to help you bypass anti-bots like Cloudflare with cURL. Here's what your Request Builder should look like:

Copy the automatically generated cURL command and run it in your terminal:

curl -L -x "https://'apiKey':js_render=true&antibot=true&[email protected]:8001" -k "https://www.g2.com/"

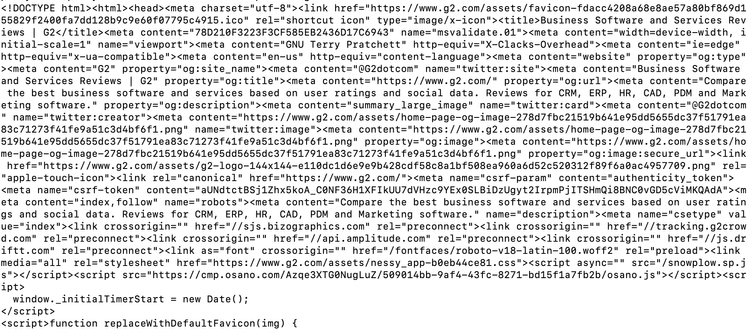

BoldHere's what the result looks like:

Awesome! You've just scraped a well-protected website with cURL.

Conclusion

Setting and randomizing cURL User-Agents is crucial for web scraping. Well-formed UAs mimic real client software and help you avoid detection as a bot. However, that's just one of many challenges you'll face. Other obstacles include CAPTCHAs, IP blocking, and more.

To ensure you can scrape without getting blocked, you might need to explore comprehensive solutions like ZenRows. Give it a try with the 1,000 free API credits to see the difference it makes in your web scraping projects.
