Goquery for Golang is one of the most popular scraping libraries. This HTML parsing library provides a jQuery-like syntax to traverse the DOM and extract the data of interest from it. In this tutorial, you'll first dig into the basics and then explore more advanced scenarios through examples.

Let's get started!

What Is Goquery?

goquery is a library for parsing and manipulating HTML documents in Go. Built on top of Go's net/html package and the CSS selector library cascadia, it offers a syntax and set of features inspired by jQuery. This makes it easy to pick up if you're already familiar with jQuery.

Note: Since the net/html parser returns nodes and not a full-featured DOM tree, goquery leaves out jQuery's stateful manipulation functions, such as height() and css(). However, it offers the jQuery-style selection and traversal features you need to perform web scraping with Golang.

Getting Started

This section will guide you through the process of setting up a goquery environment.

1. Set Up a Go Project

Verify that you have Go installed on your computer:

go version

If the command isn't found, download the Golang installer, run it, and follow the wizard.

After setting it up, get ready to initialize a goquery project. Create a goquery-project folder and enter it in the terminal:

mkdir goquery-project

cd goquery-project

Next, run the init command to set up a Golang module:

go mod init web-scraper

Add a file named scraper.go with the following code to the project folder. The first line declares the package name, followed by the imports. The main() function is the entry point of any Go program and will soon contain some scraping logic.

```go
package main

import (
	"fmt"
)

func main() {
	fmt.Println("Hello, World!")

	// scraping logic...
}
```

You can run your Go program like this:

go run scraper.go

You'll get a “Hello, World!” printed.

Fantastic! Your Golang sample project is now ready!

2. Build an Initial Golang Scraper

The target site of this guide will be ScrapeMe, an e-commerce platform with a paginated list of Pokémon-inspired products.

The first step in building a web scraper is to connect to the target page and download its HTML document. Use Go's net/http standard library to retrieve the desired HTML content:

```go
package main

import (
	"log"
	"net/http"
)

func main() {
	// download the target HTML document
	res, err := http.Get("https://scrapeme.live/shop/")
	if err != nil {
		log.Fatal("Failed to connect to the target page: ", err)
	}
	defer res.Body.Close()
	if res.StatusCode != 200 {
		log.Fatalf("HTTP Error %d: %s", res.StatusCode, res.Status)
	}
}
```

Above, the http.Get() function from net/http, Go's built-in HTTP client, performs a GET request to the destination page. The server responds with the HTML document of the page in the response body.

If you print res.Body:

fmt.Println(res.Body)

You'll get:

&{[] {0xc000144480} <nil> <nil>}

That's because res.Body is an io.ReadCloser, a stream you have to read from, not the page content itself.

Use the io.ReadAll() function to read the whole stream into a byte slice, then convert it to a string and print the HTML content of the page:

```go
// read the response body into a byte slice
byteBody, err := io.ReadAll(res.Body)
if err != nil {
	log.Fatal("Error while reading the response buffer: ", err)
}

// convert the HTML bytes to a string and print it
html := string(byteBody)
fmt.Println(html)
```

Remember to import io:

```go
import (
	"fmt"
	"io" // <----
	"log"
	"net/http"
)
```

Putting it all together:

```go
package main

import (
	"fmt"
	"io"
	"log"
	"net/http"
)

func main() {
	// download the target HTML document
	res, err := http.Get("https://scrapeme.live/shop/")
	if err != nil {
		log.Fatal("Failed to connect to the target page: ", err)
	}
	defer res.Body.Close()
	if res.StatusCode != 200 {
		log.Fatalf("HTTP Error %d: %s", res.StatusCode, res.Status)
	}

	// read the response body into a byte slice
	byteBody, err := io.ReadAll(res.Body)
	if err != nil {
		log.Fatal("Error while reading the response buffer: ", err)
	}

	// convert the HTML bytes to a string and print it
	html := string(byteBody)
	fmt.Println(html)
}
```

Run the Go scraper, and it'll print the HTML of the page:

```html
<!doctype html>
<html lang="en-GB">
<head>
<meta charset="UTF-8">
<meta name="viewport" content="width=device-width, initial-scale=1, maximum-scale=2.0">
<link rel="profile" href="http://gmpg.org/xfn/11">
<link rel="pingback" href="https://scrapeme.live/xmlrpc.php">
<title>Products – ScrapeMe</title>
<link rel='dns-prefetch' href='//fonts.googleapis.com' />
<link rel='dns-prefetch' href='//s.w.org' />
<!-- Omitted for brevity... -->
```

Great! You're ready to import the HTML parsing library goquery and extract some specific data from the page.

3. Install and Import Goquery

Install goquery and its dependencies:

go get github.com/PuerkitoBio/goquery

Note: This command may take some time, so be patient.

When it's done, import it into your scraper.go file:

```go
import (
	// other imports...
	"github.com/PuerkitoBio/goquery"
)
```

Initialize a goquery Document object by passing res.Body to goquery.NewDocumentFromReader(). doc will then expose all the jQuery-like HTML node selection methods.

```go
doc, err := goquery.NewDocumentFromReader(res.Body)
if err != nil {
	log.Fatal("Failed to parse the HTML document: ", err)
}
```

This is what your entire scraper now contains:

```go
package main

import (
	"log"
	"net/http"

	"github.com/PuerkitoBio/goquery"
)

func main() {
	// download the target HTML document
	res, err := http.Get("https://scrapeme.live/shop/")
	if err != nil {
		log.Fatal("Failed to connect to the target page: ", err)
	}
	defer res.Body.Close()
	if res.StatusCode != 200 {
		log.Fatalf("HTTP Error %d: %s", res.StatusCode, res.Status)
	}

	// parse the HTML document
	doc, err := goquery.NewDocumentFromReader(res.Body)
	if err != nil {
		log.Fatal("Failed to parse the HTML document: ", err)
	}

	// scraping logic...
	_ = doc // placeholder so the program compiles until doc is used
}
```

Perfect! Time to learn how to get the name and price information from each product on the page.

How to Use Goquery in Golang

Let's first overview what the goquery package allows you to do when it comes to node selection and data extraction, and then see it in action in a complete real-world scraping example.

Step 1: Use CSS Selectors to Find Specific Elements

goquery only supports CSS selectors to select HTML elements on a page.

Note: If you aren't familiar with them, read the guide from the MDN documentation.

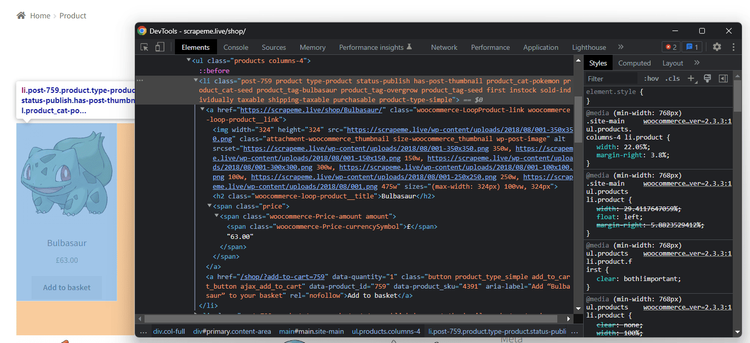

Suppose your goal is to extract the price from a product HTML element. How can you do that? Open your target page ScrapeMe in the browser, right-click on a product HTML node, and select the "Inspect" option.

Take a look at the HTML code and note that each product is an li element with a product class. That means you can select them with this CSS selector:

li.product

In the above image, you can also see that the product name is in an h2 element. Given a product, you can get that node with this:

h2

Awesome! It's time to put these into practice!

Step 2: Extract the Data You Want

The go-to method exposed by goquery to find elements is Find(), which accepts a selector string and returns a *goquery.Selection representing the list of matching HTML nodes.

As mentioned before, that selector must be a CSS selector.

Replace the // scraping logic... comment with some HTML element selection logic. First, use Find() to select the first product on the page:

productHTMLElement := doc.Find("li.product").First()

This applies the li.product CSS selector and uses First() to keep only the first of the matched elements.

After selecting the desired product, extract its name:

name := productHTMLElement.Find("h2").Text()

Here, Find() searches the descendants of the selected HTML element. Then, Text() returns the combined text content of the matched elements, including their descendants. As there is only one h2 inside each Pokémon product, name will contain the desired data.

Verify that by adding an extra line to log the result, making sure "fmt" is in your imports:

fmt.Println(name)

Your scraping logic at the end of main() will contain:

```go
// find the name of the first product element on the page
// and log it
productHTMLElement := doc.Find("li.product").First()
name := productHTMLElement.Find("h2").Text()
fmt.Println(name)
```

The scraper will now print the name of the first Pokémon:

Bulbasaur

Great! First milestone accomplished!

A typical scraping process isn't about retrieving a single piece of information, though. So, let's learn how to extract the name and price from all products.

First, define a package-level type for the scraped data:

```go
type PokemonProduct struct {
	name, price string
}
```

In main(), declare a slice of type PokemonProduct. At the end of the script, it will contain all the scraped data.

var pokemonProducts []PokemonProduct

Use Find() to get all products and Each() to iterate over the selection and extract the desired data from each one. Just like its jQuery counterpart, Each() calls the given function once for every element in the selection, passing the element's index and the element itself wrapped in a *goquery.Selection.

```go
doc.Find("li.product").Each(func(i int, p *goquery.Selection) {
	// scraping logic
	product := PokemonProduct{}
	product.name = p.Find("h2").Text()
	product.price = p.Find("span.price").Text()

	// store the scraped product
	pokemonProducts = append(pokemonProducts, product)
})
```

Print pokemonProducts:

fmt.Println(pokemonProducts)

Piecing it all together, you should have now:

```go
package main

import (
	"fmt"
	"log"
	"net/http"

	"github.com/PuerkitoBio/goquery"
)

// custom type to store the data of each
// target product to scrape
type PokemonProduct struct {
	name, price string
}

func main() {
	// download the target HTML document
	res, err := http.Get("https://scrapeme.live/shop/")
	if err != nil {
		log.Fatal("Failed to connect to the target page: ", err)
	}
	defer res.Body.Close()
	if res.StatusCode != 200 {
		log.Fatalf("HTTP Error %d: %s", res.StatusCode, res.Status)
	}

	// parse the HTML document
	doc, err := goquery.NewDocumentFromReader(res.Body)
	if err != nil {
		log.Fatal("Failed to parse the HTML document: ", err)
	}

	// where to store the scraped data
	var pokemonProducts []PokemonProduct

	// retrieve the name and price of each product
	doc.Find("li.product").Each(func(i int, p *goquery.Selection) {
		// scraping logic
		product := PokemonProduct{}
		product.name = p.Find("h2").Text()
		product.price = p.Find("span.price").Text()

		// store the scraped product
		pokemonProducts = append(pokemonProducts, product)
	})

	// print the scraped data
	fmt.Println(pokemonProducts)
}
```

Run it, and it'll print the list of names and prices of the Pokémon:

[{Bulbasaur £63.00} {Ivysaur £87.00} {Venusaur £105.00} {Charmander £48.00} {Charmeleon £165.00} {Charizard £156.00} {Squirtle £130.00} {Wartortle £123.00} {Blastoise £76.00} {Caterpie £73.00} {Metapod £148.00} {Butterfree £162.00} {Weedle £25.00} {Kakuna £148.00} {Beedrill £168.00} {Pidgey £159.00}]

Exactly what you wanted!

Step 3: Export to CSV with Goquery

Export the scraped data to a CSV file with the Go logic below. This snippet creates a products.csv file and initializes it with a header. Then, it converts each product object from pokemonProducts to a new CSV record and appends it to the output file.

```go
// initialize the output CSV file
file, err := os.Create("products.csv")
if err != nil {
	log.Fatal("Failed to create the output CSV file: ", err)
}
defer file.Close()

// initialize a file writer
writer := csv.NewWriter(file)
defer writer.Flush()

// define the CSV headers
headers := []string{
	"name",
	"price",
}

// write the column headers
writer.Write(headers)

// add each Pokemon product to the CSV file
for _, pokemonProduct := range pokemonProducts {
	// convert a PokemonProduct to a slice of strings
	record := []string{
		pokemonProduct.name,
		pokemonProduct.price,
	}

	// write a new CSV record
	writer.Write(record)
}
```

Make sure to add the following imports to make the script work:

```go
import (
	// ...
	"encoding/csv"
	"log"
	"os"
)
```

This is what the complete scraper.go looks like so far:

```go
package main

import (
	"encoding/csv"
	"log"
	"net/http"
	"os"

	"github.com/PuerkitoBio/goquery"
)

// custom type to store the scraped data
type PokemonProduct struct {
	name, price string
}

func main() {
	// download the target HTML document
	res, err := http.Get("https://scrapeme.live/shop/")
	if err != nil {
		log.Fatal("Failed to connect to the target page: ", err)
	}
	defer res.Body.Close()
	if res.StatusCode != 200 {
		log.Fatalf("HTTP Error %d: %s", res.StatusCode, res.Status)
	}

	// parse the HTML document
	doc, err := goquery.NewDocumentFromReader(res.Body)
	if err != nil {
		log.Fatal("Failed to parse the HTML document: ", err)
	}

	// initialize a slice where to store the scraped data
	var pokemonProducts []PokemonProduct

	// select all products on the page
	doc.Find("li.product").Each(func(i int, p *goquery.Selection) {
		// scraping logic
		product := PokemonProduct{}
		product.name = p.Find("h2").Text()
		product.price = p.Find("span.price").Text()

		// store the scraped product
		pokemonProducts = append(pokemonProducts, product)
	})

	// initialize the output CSV file
	file, err := os.Create("products.csv")
	if err != nil {
		log.Fatal("Failed to create the output CSV file: ", err)
	}
	defer file.Close()

	// initialize a file writer
	writer := csv.NewWriter(file)
	defer writer.Flush()

	// define the CSV headers
	headers := []string{
		"name",
		"price",
	}

	// write the column headers
	writer.Write(headers)

	// add each Pokemon product to the CSV file
	for _, pokemonProduct := range pokemonProducts {
		// convert a PokemonProduct to a slice of strings
		record := []string{
			pokemonProduct.name,
			pokemonProduct.price,
		}

		// write a new CSV record
		writer.Write(record)
	}
}
```

Execute the scraper:

go run scraper.go

Wait for the script to complete, and the products.csv file will appear in your project folder. Open it, and you'll see a header row followed by one row per product with its name and price.

Well done! Let's now learn the remaining fundamentals of goquery!

The Functions You Can Use

Below, you'll find an overview of goquery's methods and its most important functions. After that, you'll see some real-world examples by use case.

The methods offered by goquery are split into several files based on their behavior:

- array.go: For positional manipulation of the selection.
- expand.go: For expanding the selection's set.
- filter.go: To filter the selection's set down to the desired elements.
- iteration.go: To iterate over the selection's nodes.
- manipulation.go: For modifying the HTML document.
- property.go: To inspect and get the nodes' property values.
- query.go: To query or reflect a node's identity.
- traversal.go: For traversing the HTML document tree.

The most important functions are these:

| Function | Go File | Used For | Example |
|---|---|---|---|
| Eq() | array.go | Reducing the set to the element at an index | selection.Eq(1) returns a selection containing only the second element (index 1). |
| First() | array.go | Selecting the first element | selection.First() selects the first element in the selection. |
| Get() | array.go | Getting a node by index | selection.Get(0) returns the first element in the selection as an *html.Node. |
| Last() | array.go | Selecting the last element | selection.Last() returns the last element in the selection. |
| Slice() | array.go | Slicing a selection based on indexes | selection.Slice(1, 3) selects the elements at indexes 1 and 2 (the second and third). |
| Union() | expand.go | Combining sets of selections | selection1.Union(selection2) returns a selection containing the elements of both selections. |
| Filter() | filter.go | Filtering elements by CSS selector | selection.Filter(".text-center") keeps only the elements matching the CSS selector. |
| FilterFunction() | filter.go | Filtering by custom function | selection.FilterFunction(myFilterFunc) keeps the elements for which myFilterFunc() returns true. |
| Has() | filter.go | Filtering by descendants | selection.Has("em") reduces the set of matched elements to those that have an <em> descendant. |
| Not() | filter.go | Excluding elements by CSS selector | selection.Not(".hide") excludes elements with a hide class from the selection. |
| Each() | iteration.go | Iterating through elements | selection.Each(eachFunc) calls eachFunc() for each element in the selection. |
| Map() | iteration.go | Transforming elements | selection.Map(mapFunc) calls mapFunc() on each element in the selection and returns the results as a []string. |
| Clone() | manipulation.go | Cloning elements | selection.Clone() creates a deep copy of the selection. |
| Unwrap() | manipulation.go | Removing parent wrapper | selection.Unwrap() removes the parent of each element in the selection, leaving the elements in its place. |
| Attr() | property.go | Getting attributes | selection.Attr("href") returns the href attribute value of the first element in the selection, plus a bool reporting whether the attribute exists. |
| HasClass() | property.go | Checking for classes | selection.HasClass("uppercase") returns true if any of the matched elements has the specified class. |
| Html() | property.go | Getting HTML content | selection.Html() returns the inner HTML of the first element in the selection. |
| Length() | property.go | Counting elements | selection.Length() returns the number of elements in the selection. |
| Text() | property.go | Getting text content | selection.Text() returns the combined text content of the elements in the selection, including their descendants. |
| Is() | query.go | Checking elements against a selector | selection.Is("div") checks if at least one of the elements in the selection is a <div>. |
| Children() | traversal.go | Selecting children | selection.Children() selects the immediate child elements of each element in the selection. |
| Contents() | traversal.go | Selecting contents | selection.Contents() selects all children of the elements in the selection, including text and comment nodes. |
| Find() | traversal.go | Selecting descendants by CSS selector | selection.Find("span.uppercase") selects descendants that match the span.uppercase CSS selector. |
| Next() | traversal.go | Selecting next sibling | selection.Next() selects the next sibling of each element in the selection. |
| Prev() | traversal.go | Selecting previous sibling | selection.Prev() selects the previous sibling of each element in the selection. |
| Parent() | traversal.go | Selecting parents | selection.Parent() selects the parent element of each element in the selection. |
| Siblings() | traversal.go | Selecting siblings | selection.Siblings() selects all siblings of each element in the selection. |

Learn more in the goquery API docs.

Goquery Examples by Use Case

Take a look at the below goquery examples to address some of the most common scraping use cases.

Get Page Title Using Goquery

Getting the page title is useful to figure out what page you're currently on for crawling or testing purposes.

That information is stored in the <title> tag of an HTML document, so you can get it like this:

title := doc.Find("title").Text()

Find and Select by Text with Goquery

Finding elements by text is useful for selecting the desired nodes when there is no simple CSS selector strategy. As in jQuery, there isn't a dedicated method to do that, but you can use the special :contains pseudo-class.

Use it to select all elements containing the specified string in their text, directly or in a child node:

priceElements := doc.Find(":contains('£')")

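This returns every element whose text contains the £ string, including ancestors such as <body> whose descendants contain it, not only the innermost elements that wrap it.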

Filter Elements on Attribute Content

After getting a selection, you may want only elements with specific values in one of their attributes. That's a pretty common selection strategy scenario in web scraping, and goquery offers several filtering functions.

Suppose your target page contains a lot of .product elements with a custom data-category attribute. You want to select them all and then keep only the ones whose data-category attribute starts with book.

Achieve that with the following code. FilterFunction() reduces the set of matched elements to those that pass the function's test. In detail, it uses the Go strings.HasPrefix() function to test whether the attribute value starts with the book string.

```go
// get all product elements
productElements := doc.Find(".product")

// filtering logic
filterFunc := func(index int, element *goquery.Selection) bool {
	categoryAttr, _ := element.Attr("data-category")
	return strings.HasPrefix(categoryAttr, "book")
}

// apply the filtering logic
bookishElements := productElements.FilterFunction(filterFunc)
```

strings.HasPrefix() comes from the strings standard library, so you need to add the import below:

```go
import (
	// ...
	"strings"
)
```

An equivalent approach is the one below. It relies on Filter() and a CSS selector that uses the “string begins with” ^ operator.

```go
productElements := doc.Find(".product")
bookishElements := productElements.Filter("[data-category^=book]")
```

Get Links

Getting all links from a page is essential to performing web crawling. Check out our in-depth article to understand the difference between web crawling vs web scraping.

Select all <a> elements and then use Map() to convert them into a slice of URL strings. Note that this also returns anchor and relative links.

```go
links := doc.Find("a").Map(func(i int, a *goquery.Selection) string {
	link, _ := a.Attr("href")
	return link
})
```

If you want to filter those out and get only absolute links, write the following. It keeps only the links that start with the https string.

```go
links := doc.Find("a").FilterFunction(func(i int, a *goquery.Selection) bool {
	link, _ := a.Attr("href")
	return strings.HasPrefix(link, "https")
}).Map(func(i int, a *goquery.Selection) string {
	link, _ := a.Attr("href")
	return link
})
```
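Filtering on the https prefix drops relative links such as /shop/page/2/. If you'd rather keep them for crawling, one option is to resolve every href against the page URL with the standard net/url package. The absoluteLinks helper below is a hypothetical sketch that takes the hrefs returned by the first Map() call above.

```go
package main

import (
	"fmt"
	"net/url"
)

// absoluteLinks (hypothetical helper) resolves each href against pageURL
// and skips values that can't be parsed. Anchor-only links such as
// "#reviews" resolve to the page URL with that fragment.
func absoluteLinks(pageURL string, hrefs []string) ([]string, error) {
	base, err := url.Parse(pageURL)
	if err != nil {
		return nil, err
	}
	var links []string
	for _, href := range hrefs {
		ref, err := url.Parse(href)
		if err != nil {
			continue // skip malformed hrefs
		}
		links = append(links, base.ResolveReference(ref).String())
	}
	return links, nil
}

func main() {
	links, err := absoluteLinks("https://scrapeme.live/shop/", []string{
		"/shop/page/2/",
		"bulbasaur/",
		"https://example.com/",
	})
	if err != nil {
		panic(err)
	}
	fmt.Println(links)
}
```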

Get Table Elements

Extracting data from a table is one of the most common scraping scenarios.

The best way to scrape tables is:

- Define a custom type matching the data contained in the table columns. E.g.:

```go
type Episode struct {
	number, title, description, date, audience string
}
```

- Initialize a slice of the type defined before to store an element for each row in the table.
- Select each row in the table with the table tr CSS selector.
- Select each column in the current row with td.
- Use the switch statement on the column index to understand what the current column is.
- Extract data from the column and add it to the custom object.
- Append the object to the slice.

The snippet below implements the mentioned algorithm to extract data from a table showing the episodes of a TV series:

```go
var episodes []Episode

// iterate over each row in the table
doc.Find("table tr").Each(func(row int, tr *goquery.Selection) {
	// skip rows without td cells, such as a header row made of th cells
	if tr.Find("td").Length() == 0 {
		return
	}

	// custom scraping data object
	episode := Episode{}

	// iterate over each column in the row
	tr.Find("td").Each(func(col int, td *goquery.Selection) {
		// add data to the scraping object
		// based on the column index
		switch col {
		case 0:
			episode.number = td.Text()
		case 1:
			episode.title = td.Text()
		case 2:
			episode.description = td.Text()
		case 3:
			episode.date = td.Text()
		case 4:
			episode.audience = td.Text()
		}
	})

	// append the custom object to the slice
	episodes = append(episodes, episode)
})
```

Combine Selections

Combining sets of elements is useful for dealing with pages that have similar content spread in different sections. You collect all the selections individually, combine them together, and apply scraping logic to each of them.

Assume you want to get all link elements in the header and footer menus of a page.

Get the first selection:

headerMenuLinkElements := doc.Find(".header .menu a")

Then, the second one:

footerMenuLinkElements := doc.Find("footer .menu-section a")

You can then use the Union() method to produce the resulting selection of all menu links:

menuLinkElements := headerMenuLinkElements.Union(footerMenuLinkElements)

Now, iterate over menuLinkElements with Each() or Map() to perform the desired operation on each of them as if they came from a single selection:

```go
headerMenuLinkElements := doc.Find(".header .menu a")
footerMenuLinkElements := doc.Find("footer .menu-section a")
menuLinkElements := headerMenuLinkElements.Union(footerMenuLinkElements)

menuLinks := menuLinkElements.Map(func(i int, a *goquery.Selection) string {
	link, _ := a.Attr("href")
	return link
})
```

Handle Javascript-Based Pages

Some sites rely on JavaScript for rendering or data retrieval. Consider, for example, the infinite scrolling demo below: as the user scrolls down, the page loads new products via JavaScript AJAX calls. That's a popular interaction pattern on many mobile-oriented sites.

The problem is that a library like goquery only parses HTML code. As it can't execute JavaScript, you need a different tool to scrape such pages.

You need a headless browser, because only browsers can render JavaScript, and those pages must run their JS code to load the data.

One of the most popular Go libraries with headless browser capabilities is Chromedp. Learn how to use it in our complete Chromedp tutorial. Other less popular alternatives include:

- otto: A JavaScript parser and interpreter written natively in Go.

- WebLoop: A scriptable PhantomJS-like API in Golang for headless WebKit.

An alternative to goquery for dynamic-content pages is ZenRows! It's a web scraping API that integrates with any other scraping technology. It offers headless browser capabilities, IP and User-Agent rotation, and many other useful features to make online data retrieval easier.

Avoid Being Blocked Using Goquery

The major challenge when extracting data from the web is getting blocked by anti-scraping solutions, such as CAPTCHAs and IP bans. Adopting a web scraping proxy and setting a real User-Agent header are good tips to avoid basic blocks. Yet, they aren't enough against advanced anti-bot measures.

Sites protected by a WAF, like G2.com, can detect and block most automated requests. Try making a request to it:

```go
package main

import (
	"fmt"
	"log"
	"net/http"

	"github.com/PuerkitoBio/goquery"
)

func main() {
	res, err := http.Get("https://www.g2.com/products/jira/reviews")
	if err != nil {
		log.Fatal("Failed to connect to the target page: ", err)
	}
	defer res.Body.Close()
	if res.StatusCode != 200 {
		log.Fatalf("HTTP Error %d: %s", res.StatusCode, res.Status)
	}

	doc, err := goquery.NewDocumentFromReader(res.Body)
	if err != nil {
		log.Fatal("Failed to parse the HTML document: ", err)
	}

	// print the page's HTML (Html() returns the content and an error)
	fmt.Println(doc.Html())

	// scraping logic...
}
```

The script will never reach the goquery section as the request fails:

HTTP Error 403: 403 Forbidden

G2 raises a 403 Forbidden error because it can tell the request originated from automated software. You can spend time randomizing the net/http request with custom headers and rotating proxies or trying other workarounds, but the result isn't likely to change. You'll still get blocked!

An easy and effective solution to overcome anti-bot systems is ZenRows. This next-generation scraping API comes equipped with the most advanced anti-bot bypass toolkit. JavaScript rendering capabilities, rotating premium proxies, auto-rotating UAs, and anti-CAPTCHA are only some of its built-in tools.

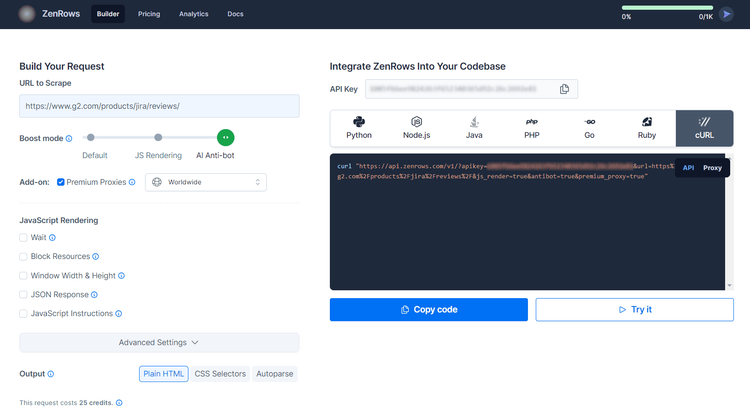

Here are the easy steps to use ZenRows in your goquery scraper:

- Sign up for free to get your free 1,000 credits. You'll reach the Request Builder page.

- Paste your target URL, activate the “AI Anti-bot” mode (it includes JS rendering), and add “Premium Proxies”.

- Choose cURL to integrate with Go.

- Select the “API” connection mode.

- Copy the generated URL and add it in your Go script.

Your Golang script:

```go
package main

import (
	"fmt"
	"log"
	"net/http"

	"github.com/PuerkitoBio/goquery"
)

func main() {
	res, err := http.Get("https://api.zenrows.com/v1/?apikey=<YOUR_ZENROWS_API_KEY>&url=https%3A%2F%2Fwww.g2.com%2Fproducts%2Fjira%2Freviews%2F&js_render=true&antibot=true&premium_proxy=true")
	if err != nil {
		log.Fatal("Failed to connect to the target page: ", err)
	}
	defer res.Body.Close()
	if res.StatusCode != 200 {
		log.Fatalf("HTTP Error %d: %s", res.StatusCode, res.Status)
	}

	doc, err := goquery.NewDocumentFromReader(res.Body)
	if err != nil {
		log.Fatal("Failed to parse the HTML document: ", err)
	}

	// print the page's HTML (Html() returns the content and an error)
	fmt.Println(doc.Html())

	// scraping logic...
}
```

Run it, and you'll now get a 200 response. The script above prints this:

```html
<!DOCTYPE html>
<head>
<meta charset="utf-8" />
<link href="https://www.g2.com/assets/favicon-fdacc4208a68e8ae57a80bf869d155829f2400fa7dd128b9c9e60f07795c4915.ico" rel="shortcut icon" type="image/x-icon" />
<title>Jira Reviews 2023: Details, Pricing, & Features | G2</title>
<!-- omitted for brevity ... -->
```

Amazing! Bye-bye, forbidden access errors! Now you can go ahead and use the JS rendering parameters ZenRows provides, for example to scroll down the page.

Goquery vs. Colly: Which Is Best?

Goquery vs. Colly? These are the two most popular Go scraping libraries, so that's a common question. Choosing what to adopt between the two depends on your specific web scraping needs.

Goquery is a powerful library to parse HTML documents and select nodes via CSS selectors. Its simple syntax makes it quick to learn and use for jQuery developers.

On the other hand, Colly is a more complete and complex scraping framework that offers a higher-level approach. Not only does it provide parsing capabilities, but it also comes with features like parallel scraping and automatic request handling for crawling.

That makes it a solid solution for large scraping tasks. Find out more in our guide on Colly web scraping in Golang.

When HTML Pages Aren't Encoded as UTF-8

The net/html package that goquery relies on expects HTML documents to be encoded in UTF-8. To avoid errors when the target page uses a different encoding, use the iconv library to convert it to UTF-8.

Install iconv:

$ go get github.com/djimenez/iconv-go

Import it:

```go
import (
	// ...
	iconv "github.com/djimenez/iconv-go"
)
```

Then use it to convert the HTML to UTF-8. iconv.NewReader() wraps the HTTP response body in a reader that returns the HTML content re-encoded in UTF-8. You can then pass that to NewDocumentFromReader() as usual.

```go
res, err := http.Get("https://example.com/non-utf8-page")
if err != nil {
	log.Fatal("Failed to connect to the target page: ", err)
}
defer res.Body.Close()
if res.StatusCode != 200 {
	log.Fatalf("HTTP Error %d: %s", res.StatusCode, res.Status)
}

// the page's source encoding: example value,
// replace it with the page's actual charset
charset := "iso-8859-1"

// convert the HTML charset to UTF-8 encoded HTML
utfBody, err := iconv.NewReader(res.Body, charset, "utf-8")
if err != nil {
	log.Fatal("Error while converting to UTF-8: ", err)
}

// pass utfBody to goquery
doc, err := goquery.NewDocumentFromReader(utfBody)
if err != nil {
	log.Fatal("Failed to parse the HTML document: ", err)
}

// scraping logic...
```
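The snippet above needs the page's source charset. One way to get it, sketched below with the standard mime package, is to read the charset parameter of the response's Content-Type header. The helper name and the fallback value are assumptions, and some pages declare their encoding only in a <meta> tag, which this sketch doesn't check.

```go
package main

import (
	"fmt"
	"mime"
	"strings"
)

// charsetFromContentType (hypothetical helper) returns the lowercased charset
// declared in a Content-Type header value, or fallback when none is declared.
func charsetFromContentType(contentType, fallback string) string {
	_, params, err := mime.ParseMediaType(contentType)
	if err != nil || params["charset"] == "" {
		return fallback
	}
	return strings.ToLower(params["charset"])
}

func main() {
	// in the scraper: charsetFromContentType(res.Header.Get("Content-Type"), "utf-8")
	fmt.Println(charsetFromContentType("text/html; charset=ISO-8859-1", "utf-8"))
	fmt.Println(charsetFromContentType("text/html", "utf-8"))
}
```

When the result is already utf-8, you can skip iconv and pass res.Body straight to NewDocumentFromReader().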

XPath: Goquery Selector?

As of this writing, goquery doesn't support XPath selectors. Instead, use one of the following libraries to select HTML nodes via XPath in Go:

- go-xmlpath: A pure Go XPath engine.
- gokogiri: A Go binding of the LibXML C library.

Keep in mind, though, that both were last updated in 2015. Thus, you should prefer an approach based on CSS selectors.

Conclusion

In this goquery tutorial for Golang, you learned the fundamentals of parsing HTML documents. You started from the basics and dove into more advanced use cases to become a scraping expert.

Now you know:

- How to get started with goquery.

- How to extract data from an HTML document with it and export it to CSV.

- How to use it in common use cases.

- The challenges of web scraping and how to overcome them.

No matter how sophisticated and randomized your scraper is, anti-bots can still detect you. Avoid them all with ZenRows, a web scraping API with headless browser capabilities, IP rotation, and an advanced built-in bypass for anti-scraping measures. Scraping sites is easier now. Try ZenRows for free!
