Tired of boilerplate-heavy, verbose Java data extraction scripts? Try building a Kotlin web scraping script instead. Thanks to the language's Java interoperability and dedicated libraries, everything will be much easier.

In this tutorial, you'll see how to do web scraping in Kotlin with Skrape{it} on both server-side and client-side pages. Let's dive in!

Is Kotlin Good for Web Scraping?

Yes, Kotlin is a good choice for web scraping!

Think of it this way. Java is a great language for scraping because of its large ecosystem and robust nature. Add new dedicated libraries and a more modern, concise, and intuitive syntax to the mix. You'll get Kotlin web scraping.

That said, JVM interoperability alone hasn't made Kotlin the most widely used language for scraping. Python is often preferred because it's backed by a larger community, and JavaScript with Node.js is also a popular option. Refer to our guide for the best programming languages for web scraping.

Kotlin stands out for its simplicity, JVM interoperability, and dedicated libraries. When it comes to scripting, those features have made it much more than a Java alternative.

Prerequisites

Follow the instructions below and prepare a Kotlin environment for web scraping.

Set Up the Environment

To go through this tutorial, you'll need to meet the following prerequisites:

- JDK installed locally: Download the latest LTS version of the JDK (Java Development Kit), launch the installer, and follow the wizard.

- Gradle or Maven: Choose your preferred Java build tool, download it, and install it.

- Kotlin IDE: IntelliJ IDEA Community Edition or Visual Studio Code with the Kotlin Language extension will do.

This guide will refer to Gradle, as it better integrates with Kotlin. Verify your Gradle installation with the following command:

gradle --version

That should produce something like:

------------------------------------------------------------

Gradle 8.5

------------------------------------------------------------

Build time: 2023-11-29 14:08:57 UTC

Revision: 28aca86a7180baa17117e0e5ba01d8ea9feca598

Kotlin: 1.9.20

Groovy: 3.0.17

Ant: Apache Ant(TM) version 1.10.13 compiled on January 4 2023

JVM: 17.0.5 (Oracle Corporation 17.0.5+9-LTS-191)

OS: Windows 11 10.0 amd64

Great! You now have everything you need to create a web scraping Kotlin project.

Set Up a Kotlin Project

Suppose you want to set up a Kotlin project in the KotlinWebScrapingDemo folder. Use these commands to create the project directory and initialize a Gradle project inside it:

mkdir KotlinWebScrapingDemo

cd KotlinWebScrapingDemo

gradle init --type kotlin-application

You'll have to answer a few questions. Select “Kotlin” as the DSL and choose a proper source package:

Select build script DSL:

1: Kotlin

2: Groovy

Enter selection (default: Kotlin) [1..2]

Project name (default: KotlinWebScrapingDemo):

Source package (default: kotlinwebscrapingdemo): com.kotlin.scraping.demo

Enter target version of Java (min. 7) (default: 17):

Generate build using new APIs and behavior (some features may change in the next minor release)? (default: no) [yes, no]

Wait for the process to complete. The KotlinWebScrapingDemo directory will now contain a Gradle project!

Load the folder in your IDE and note that the App.kt file in the nested folders inside /app contains:

/*

* This Kotlin source file was generated by the Gradle 'init' task.

*/

package com.kotlin.scraping.demo

class App {

val greeting: String

get() {

return "Hello World!"

}

}

fun main() {

println(App().greeting)

}

That's the simplest Kotlin script you can write. Launch it with the command below to verify that it works:

./gradlew run

If all goes as planned, the script will print in the terminal:

Hello World!

Well done, your Kotlin project works like a charm.

Time to add the Kotlin web scraping logic to it!

How to Do Web Scraping With Kotlin

In this guided section, you'll learn the basics of web scraping in Kotlin. The target site will be ScrapeMe, an e-commerce site with a paginated list of Pokémon products. The goal of the Kotlin scraper you're about to build is to automatically retrieve them all.

Get ready to write some Kotlin code!

Step 1: Scrape by Requesting Your Target Page

The best way to get the source HTML of a web page in Kotlin is with an external library. Skrape{it} is the most popular web scraping Kotlin library. That's because it offers a powerful API to parse HTML from server- and client-side sites.

Add skrapeit to your project's dependencies. Find the build.gradle.kts file in the /app folder of your project and add this line to the dependencies section:

implementation("it.skrape:skrapeit:1.2.2")

Your build.gradle.kts will now contain:

/*

* This file was generated by the Gradle 'init' task.

*

* This generated file contains a sample Kotlin application project to get you started.

* For more details on building Java & JVM projects, please refer to https://docs.gradle.org/8.5/userguide/building_java_projects.html in the Gradle documentation.

*/

plugins {

// Apply the org.jetbrains.kotlin.jvm Plugin to add support for Kotlin.

alias(libs.plugins.jvm)

// Apply the application plugin to add support for building a CLI application in Java.

application

}

repositories {

// Use Maven Central for resolving dependencies.

mavenCentral()

}

dependencies {

// Use the Kotlin JUnit 5 integration.

testImplementation("org.jetbrains.kotlin:kotlin-test-junit5")

// Use the JUnit 5 integration.

testImplementation(libs.junit.jupiter.engine)

testRuntimeOnly("org.junit.platform:junit-platform-launcher")

// This dependency is used by the application.

implementation(libs.guava)

// skrape{it} to perform web scraping in Kotlin

implementation("it.skrape:skrapeit:1.2.2")

}

// Apply a specific Java toolchain to ease working on different environments.

java {

toolchain {

languageVersion.set(JavaLanguageVersion.of(17))

}

}

application {

// Define the main class for the application.

mainClass.set("com.kotlin.scraping.demo.AppKt")

}

tasks.named<Test>("test") {

// Use JUnit Platform for unit tests.

useJUnitPlatform()

}

Then, install the library with this command:

./gradlew build

That will take a few seconds as it’ll download all the dependencies, so be patient.

In App.kt, import the required skrape packages:

import it.skrape.core.*

import it.skrape.fetcher.*

Skrape{it} provides different data fetchers, with HttpFetcher representing a classic HTTP client. It sends an HTTP request to a given URL and returns a result matching the server response. Use it to connect to the target page and extract its source HTML.

To do that, remove the App class and replace the body of the main() function with the code below:

val html: String = skrape(HttpFetcher) {

// perform a GET request to the specified URL

request {

url = "https://scrapeme.live/shop/"

}

response {

// retrieve the HTML element from the

// document as a string

htmlDocument {

html

}

}

}

// print the source HTML of the target page

println(html)

This is what your new App.kt will look like:

package com.kotlin.scraping.demo

import it.skrape.core.*

import it.skrape.fetcher.*

fun main() {

val html: String = skrape(HttpFetcher) {

// perform a GET request to the specified URL

request {

url = "https://scrapeme.live/shop/"

}

response {

// retrieve the HTML element from the

// document as a string

htmlDocument {

html

}

}

}

// print the source HTML of the target page

println(html)

}

Execute it, and it'll print:

<!doctype html>

<html lang="en-GB">

<head>

<meta charset="UTF-8">

<meta name="viewport" content="width=device-width, initial-scale=1, maximum-scale=2.0">

<link rel="profile" href="https://gmpg.org/xfn/11">

<link rel="pingback" href="https://scrapeme.live/xmlrpc.php">

<!-- omitted for brevity... -->

Amazing! The Kotlin web scraping script retrieves the target HTML as desired. It's time to see how to parse and extract data from that content.

Step 2: Extract the HTML Data You Want

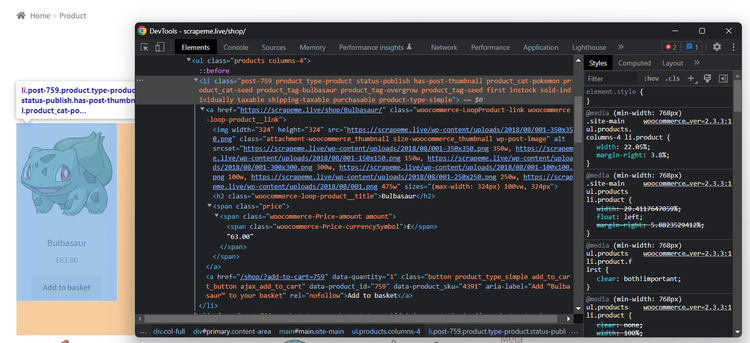

You must first define an effective node selection strategy to extract data from HTML elements. That requires an understanding of the page structure. Thus, open the target page in the browser, right-click on a product HTML element, and pick "Inspect".

The following DevTools section will show up:

Read the HTML code and notice that each product element is a <li> with a "product" class. That means you can select them all with this CSS selector:

li.product

Given a product HTML element, this is the data you should extract from it:

- The product URL in an <a>.

- The product image in an <img>.

- The product name in an <h2>.

- The product price in a <span>.

To parse HTML in Skrape{it}, you need to use the extractIt function. This accepts a data class as a generic and returns it populated according to your scraping logic.

So, define a Kotlin data class to store the product information:

data class Product(

var url: String = "",

var image: String = "",

var name: String = "",

var price: String = ""

)
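The default values and `var` properties are there for a reason: a function like extractIt has to create an empty instance first and fill it in afterwards. The sketch below illustrates that idea with a hypothetical `populate` helper. It is a simplified stand-in written for this explanation, not Skrape{it}'s actual implementation.

```kotlin
data class Product(
    var url: String = "",
    var image: String = "",
    var name: String = "",
    var price: String = ""
)

// Hypothetical helper: create an empty T through its no-argument constructor,
// then let the caller fill it in. On the JVM, Kotlin generates that constructor
// only when every primary constructor parameter has a default value.
inline fun <reified T : Any> populate(fill: (T) -> Unit): T {
    val instance = T::class.java.getDeclaredConstructor().newInstance()
    fill(instance)
    return instance
}

fun main() {
    val product = populate<Product> {
        it.name = "Bulbasaur"
        it.price = "£63.00"
    }
    // prints Product(url=, image=, name=Bulbasaur, price=£63.00)
    println(product)
}
```

If a parameter of Product lacked a default value, the no-argument constructor would not exist and this approach would fail at runtime.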

To select bare HTML5 tags, you'll need to add the following import:

import it.skrape.selects.html5.*

Remove the response section and add the extractIt logic below to the skrape function. htmlDocument gives you access to the parsed HTML document retrieved from the server. findFirst helps you select the first node matching the specified selector and apply data extraction logic to it:

extractIt<Product> {

// to parse the HTML content of the target page

htmlDocument {

// select the product nodes with a CSS selector

"li.product" {

// limit to the first product element

findFirst {

// scraping logic

it.url = a { findFirst { attribute("href") } }

it.image = img { findFirst { attribute("src") } }

it.name = h2 { findFirst { text } }

it.price = span { findFirst { text } }

}

}

}

}

App.kt should now contain:

package com.kotlin.scraping.demo

import it.skrape.core.*

import it.skrape.fetcher.*

import it.skrape.selects.html5.*

data class Product(

var url: String = "",

var image: String = "",

var name: String = "",

var price: String = ""

)

fun main() {

val product: Product = skrape(HttpFetcher) {

// perform a GET request to the specified URL

request {

url = "https://scrapeme.live/shop/"

}

// to perform web scraping and return a

// Product instance

extractIt<Product> {

// to parse the HTML content of the target page

htmlDocument {

// select the product nodes with a CSS selector

"li.product" {

// limit to the first product element

findFirst {

// scraping logic

it.url = a { findFirst { attribute("href") } }

it.image = img { findFirst { attribute("src") } }

it.name = h2 { findFirst { text } }

it.price = span { findFirst { text } }

}

}

}

}

}

// print the scraped product

println(product)

}

Run your Kotlin web scraping script, and it'll print:

Product(url=https://scrapeme.live/shop/Bulbasaur/, image=https://scrapeme.live/wp-content/uploads/2018/08/001-350x350.png, name=Bulbasaur, price=£63.00)

Way to go! The parsing logic works as expected.
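Note that the price comes back as text, currency symbol included. If you later need it as a number, a small helper along these lines may be enough. `parsePrice` is a hypothetical name, and the sketch assumes prices use a dot as the decimal separator, as on the target site.

```kotlin
import java.math.BigDecimal

// Hypothetical helper: keep only digits and the decimal point, then parse.
// Returns null when no number can be recovered from the text.
fun parsePrice(raw: String): BigDecimal? =
    raw.filter { it.isDigit() || it == '.' }.toBigDecimalOrNull()

fun main() {
    println(parsePrice("£63.00"))   // 63.00
    println(parsePrice("sold out")) // null
}
```

BigDecimal avoids the rounding surprises that floating-point types can introduce when working with money.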

Step 3: Extract Multiple Products

You just learned how to scrape data from a single product, but the page contains many. To retrieve them all, replace findFirst with findAll on the product selector and adapt the logic accordingly. Since the result is now a list, extractIt takes ArrayList<Product> as its type. Use a forEach to iterate over the list of product nodes and scrape them all:

extractIt<ArrayList<Product>> {

// to parse the HTML content of the target page

htmlDocument {

// select the product nodes with a CSS selector

"li.product" {

// get all product elements

findAll {

forEach { productHtmlElement ->

// create a new product with the scraping logic

val product = Product(

url = productHtmlElement.a { findFirst { attribute("href") } },

image = productHtmlElement.img { findFirst { attribute("src") } },

name = productHtmlElement.h2 { findFirst { text } },

price = productHtmlElement.span { findFirst { text } }

)

// add the scraped product to the list

it.add(product)

}

}

}

}

}

The current web scraping Kotlin script App.kt should contain:

package com.kotlin.scraping.demo

import it.skrape.core.*

import it.skrape.fetcher.*

import it.skrape.selects.html5.*

data class Product(

var url: String = "",

var image: String = "",

var name: String = "",

var price: String = ""

)

fun main() {

val products: List<Product> = skrape(HttpFetcher) {

// perform a GET request to the specified URL

request {

url = "https://scrapeme.live/shop/"

}

// to perform web scraping and return a

// list of Product instances

extractIt<ArrayList<Product>> {

// to parse the HTML content of the target page

htmlDocument {

// select the product nodes with a CSS selector

"li.product" {

// get all product elements

findAll {

forEach { productHtmlElement ->

// create a new product with the scraping logic

val product = Product(

url = productHtmlElement.a { findFirst { attribute("href") } },

image = productHtmlElement.img { findFirst { attribute("src") } },

name = productHtmlElement.h2 { findFirst { text } },

price = productHtmlElement.span { findFirst { text } }

)

// add the scraped product to the list

it.add(product)

}

}

}

}

}

}

// print the scraped products

println(products)

}

Launch it, and it'll return:

[

Product(url=https://scrapeme.live/shop/Bulbasaur/, image=https://scrapeme.live/wp-content/uploads/2018/08/001-350x350.png, name=Bulbasaur, price=£63.00),

Product(url=https://scrapeme.live/shop/Ivysaur/, image=https://scrapeme.live/wp-content/uploads/2018/08/002-350x350.png, name=Ivysaur, price=£87.00),

// omitted for brevity...

Product(url=https://scrapeme.live/shop/Beedrill/, image=https://scrapeme.live/wp-content/uploads/2018/08/015-350x350.png, name=Beedrill, price=£168.00),

Product(url=https://scrapeme.live/shop/Pidgey/, image=https://scrapeme.live/wp-content/uploads/2018/08/016-350x350.png, name=Pidgey, price=£159.00)

]

Fantastic! The scraped objects store the desired data!

Step 4: Convert Scraped Data Into a CSV File

Convert the data to CSV to make it easier to share and analyze by other people. The Kotlin standard library provides what you need to export some data to CSV but using a library is easier.
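For comparison, here is a rough sketch of what the standard-library route involves. You have to handle quoting yourself: fields containing commas, quotes, or line breaks must be wrapped in double quotes, with embedded quotes doubled. The function names and the products-stdlib.csv file name are made up for this illustration.

```kotlin
import java.io.File

// Quote a field when it contains a comma, a double quote, or a line break,
// doubling any embedded quotes (the usual RFC 4180 convention).
fun toCsvField(value: String): String =
    if (value.any { it == ',' || it == '"' || it == '\n' || it == '\r' })
        "\"" + value.replace("\"", "\"\"") + "\""
    else value

// Join already-escaped fields into one CSV line.
fun toCsvLine(fields: List<String>): String =
    fields.joinToString(",") { toCsvField(it) }

fun main() {
    val rows = listOf(
        listOf("url", "image", "name", "price"),
        listOf(
            "https://scrapeme.live/shop/Bulbasaur/",
            "https://scrapeme.live/wp-content/uploads/2018/08/001-350x350.png",
            "Bulbasaur",
            "£63.00"
        )
    )
    // use {} closes the writer even if writing fails
    File("products-stdlib.csv").bufferedWriter().use { writer ->
        rows.forEach { writer.appendLine(toCsvLine(it)) }
    }
}
```

It works, but a dedicated library takes care of these edge cases for you.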

Apache Commons CSV is a popular Java library for reading and writing CSV files. Since Kotlin is interoperable with Java, it'll work in your script even if it's not written in Java. Add it to your dependencies with this line in the dependencies section of build.gradle.kts:

implementation("org.apache.commons:commons-csv:1.10.0")

Add the required imports on top of App.kt:

import org.apache.commons.csv.CSVFormat

import java.io.FileWriter

Next, create a FileWriter instance and pass it to the print() method of CSVFormat.DEFAULT from Apache Commons CSV. That returns a CSVPrinter, whose printRecord() method converts each product to a CSV row and writes it to the output file:

// initialize the output CSV file

val csvFile = FileWriter("products.csv")

// populate it with the scraped data

CSVFormat.DEFAULT.print(csvFile).apply {

// write the header

printRecord("url", "image", "name", "price")

// populate the file

products.forEach { (url, image, name, price) ->

// add a new line to the CSV file

printRecord(url, image, name, price)

}

}.close()

// release the file writer resources

csvFile.close()

Put it all together, and you'll get:

package com.kotlin.scraping.demo

import it.skrape.core.*

import it.skrape.fetcher.*

import it.skrape.selects.html5.*

import org.apache.commons.csv.CSVFormat

import java.io.FileWriter

data class Product(

var url: String = "",

var image: String = "",

var name: String = "",

var price: String = ""

)

fun main() {

val products: List<Product> = skrape(HttpFetcher) {

// perform a GET request to the specified URL

request {

url = "https://scrapeme.live/shop/"

}

// to perform web scraping and return a

// list of Product instances

extractIt<ArrayList<Product>> {

// to parse the HTML content of the target page

htmlDocument {

// select the product nodes with a CSS selector

"li.product" {

// get all product elements

findAll {

forEach { productHtmlElement ->

// create a new product with the scraping logic

val product = Product(

url = productHtmlElement.a { findFirst { attribute("href") } },

image = productHtmlElement.img { findFirst { attribute("src") } },

name = productHtmlElement.h2 { findFirst { text } },

price = productHtmlElement.span { findFirst { text } }

)

// add the scraped product to the list

it.add(product)

}

}

}

}

}

}

// initialize the output CSV file

val csvFile = FileWriter("products.csv")

// populate it with the scraped data

CSVFormat.DEFAULT.print(csvFile).apply {

// write the header

printRecord("url", "image", "name", "price")

// populate the file

products.forEach { (url, image, name, price) ->

// add a new line to the CSV file

printRecord(url, image, name, price)

}

}.close()

// release the file writer resources

csvFile.close()

}

Launch the Kotlin web scraping script:

./gradlew run

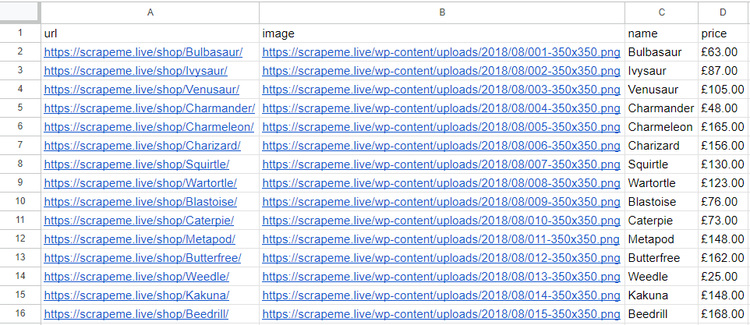

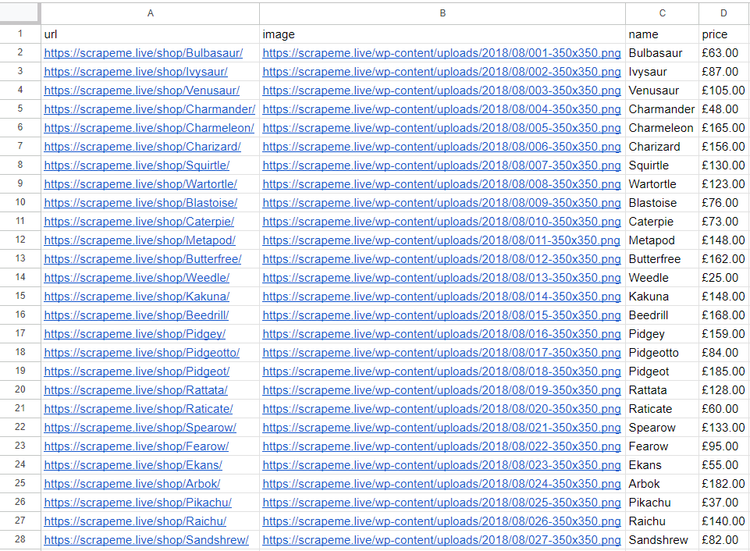

Wait for the scraper to complete and a products.csv file will appear in the root folder of your project. Open it, and you'll see this data:

Wonderful! You just extracted all product data from a single page and learned the basics of web scraping in Kotlin.

There's still a lot more to learn, so keep reading to become an expert.

Advanced Web Scraping Techniques With Kotlin

The basics aren't enough to scrape entire real-world sites or avoid anti-bot measures. Explore the advanced concepts of web scraping with Kotlin!

Web Crawling in Kotlin: Scrape Multiple Pages

The current CSV only contains a few products. That's because the Kotlin web scraping script extracts data only from the first page of the target site. You need to do web crawling to go through all pages and scrape all products.

Don't know what that is? Find out more in our guide on web crawling vs web scraping. What you need to do in short is:

- Visit the page and parse its HTML document.

- Select the pagination link elements.

- Extract the URLs to new pages from those nodes and add them to a queue.

- Repeat the loop with a new page.

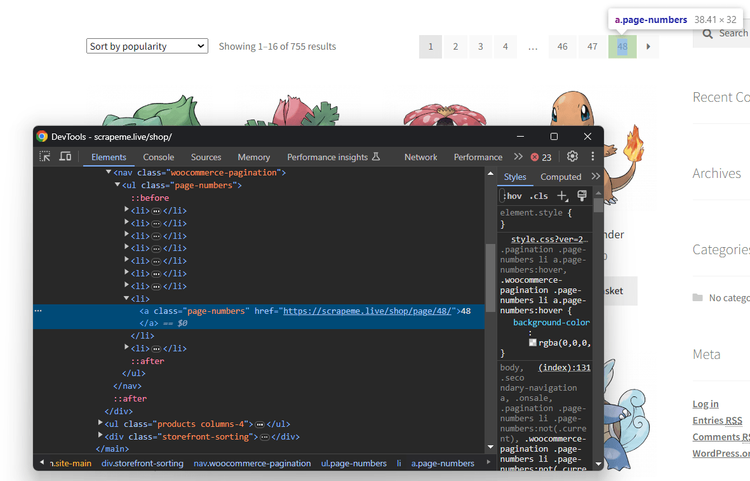

To get started, inspect the pagination HTML element you can find right above the products:

Note that the URLs to new pages are in pagination nodes you can select with:

a.page-numbers

Adding these links to a queue without some logic won't be enough. The reason is that the script shouldn't visit the same page twice. To make the crawling logic less tricky, rely on the support data structures below:

- pagesDiscovered: A mutable Set to keep track of the URLs discovered.

- pagesToScrape: A mutable List to use as a queue to get the page the script will visit next.

To keep track of all products, you will also need a new mutable List called products. extractIt will no longer be useful as you don't need to return an object anymore. Instead, you'll have to use response, working on one product at a time inside it and adding it to products.
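Before wiring this into Skrape{it}, the bookkeeping can be tried offline. The sketch below runs the same idea against a hypothetical in-memory link map (`fakeSite`) that stands in for real pages. Pages are taken from the front of pagesToScrape, so they are visited in first-in, first-out order.

```kotlin
// Hypothetical site map: each page maps to the pagination links found on it.
val fakeSite = mapOf(
    "/page/1/" to listOf("/page/2/", "/page/3/"),
    "/page/2/" to listOf("/page/1/", "/page/3/"),
    "/page/3/" to listOf("/page/2/", "/page/4/"),
    "/page/4/" to listOf("/page/3/")
)

// Returns the pages in the order they were visited.
fun crawl(firstPage: String, links: Map<String, List<String>>, maxIterations: Int): List<String> {
    val pagesToScrape = ArrayDeque(listOf(firstPage))
    val pagesDiscovered = mutableSetOf(firstPage)
    val visited = mutableListOf<String>()
    while (pagesToScrape.isNotEmpty() && visited.size < maxIterations) {
        // take the next page from the front of the queue
        val page = pagesToScrape.removeFirst()
        visited.add(page)
        for (link in links[page].orEmpty()) {
            // Set.add returns true only the first time a URL is seen
            if (pagesDiscovered.add(link)) pagesToScrape.addLast(link)
        }
    }
    return visited
}

fun main() {
    // prints [/page/1/, /page/2/, /page/3/, /page/4/]
    println(crawl("/page/1/", fakeSite, maxIterations = 5))
}
```

Because every URL enters the queue at most once, no page is visited twice, even though the pages link back to each other.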

Implement the Kotlin web crawling logic with a while loop as follows:

// where to store the scraped products

val products = ArrayList<Product>()

// the first page to visit

val firstPage = "https://scrapeme.live/shop/page/1/"

// support data structures for web crawling

val pagesToScrape = mutableListOf(firstPage)

val pagesDiscovered = mutableSetOf(firstPage)

// current iteration

var i = 1

// max number of iterations allowed

val maxIterations = 5

while (pagesToScrape.isNotEmpty() && i <= maxIterations) {

// take the next page from the front of the queue

val pageURL = pagesToScrape.removeAt(0)

skrape(HttpFetcher) {

// perform a GET request to the specified URL

request {

url = pageURL

}

// to perform web scraping

response {

// to parse the HTML content of the target page

htmlDocument {

// select the pagination link elements

"a.page-numbers" {

// crawling logic

findAll {

forEach { paginationElement ->

// the new link discovered

val pageUrl: String = paginationElement.attribute("href")

// if the web page discovered is new and should be scraped

if (!pagesDiscovered.contains(pageUrl) && !pagesToScrape.contains(pageUrl)) {

pagesToScrape.add(pageUrl)

}

// adding the link just discovered

// to the set of pages discovered so far

pagesDiscovered.add(pageUrl)

}

}

}

// select the product nodes with a CSS selector

"li.product" {

// get all product elements

findAll {

forEach { productHtmlElement ->

// scraping logic...

}

}

}

}

}

}

// increment the iteration counter

i++

}

The scraper will now automatically discover new pages and scrape products from 5 of them. Integrate the above snippet into the App.kt file, and you'll get:

package com.kotlin.scraping.demo

import it.skrape.core.*

import it.skrape.fetcher.*

import it.skrape.selects.html5.*

import org.apache.commons.csv.CSVFormat

import java.io.FileWriter

data class Product(

var url: String = "",

var image: String = "",

var name: String = "",

var price: String = ""

)

fun main() {

// where to store the scraped products

val products = ArrayList<Product>()

// the first page to visit

val firstPage = "https://scrapeme.live/shop/page/1/"

// support data structures for web crawling

val pagesToScrape = mutableListOf(firstPage)

val pagesDiscovered = mutableSetOf(firstPage)

// current iteration

var i = 1

// max number of iterations allowed

val maxIterations = 5

while (pagesToScrape.isNotEmpty() && i <= maxIterations) {

// take the next page from the front of the queue

val pageURL = pagesToScrape.removeAt(0)

skrape(HttpFetcher) {

// perform a GET request to the specified URL

request {

url = pageURL

}

// to perform web scraping

response {

// to parse the HTML content of the target page

htmlDocument {

// select the pagination link elements

"a.page-numbers" {

// crawling logic

findAll {

forEach { paginationElement ->

// the new link discovered

val pageUrl: String = paginationElement.attribute("href")

// if the web page discovered is new and should be scraped

if (!pagesDiscovered.contains(pageUrl) && !pagesToScrape.contains(pageUrl)) {

pagesToScrape.add(pageUrl)

}

// adding the link just discovered

// to the set of pages discovered so far

pagesDiscovered.add(pageUrl)

}

}

}

// select the product nodes with a CSS selector

"li.product" {

// get all product elements

findAll {

forEach { productHtmlElement ->

// create a new product with the scraping logic

val product = Product(

url = productHtmlElement.a { findFirst { attribute("href") } },

image = productHtmlElement.img { findFirst { attribute("src") } },

name = productHtmlElement.h2 { findFirst { text } },

price = productHtmlElement.span { findFirst { text } }

)

// add the scraped product to the list

products.add(product)

}

}

}

}

}

}

// increment the iteration counter

i++

}

// initialize the output CSV file

val csvFile = FileWriter("products.csv")

// populate it with the scraped data

CSVFormat.DEFAULT.print(csvFile).apply {

// write the header

printRecord("url", "image", "name", "price")

// populate the file

products.forEach { (url, image, name, price) ->

// add a new line to the CSV file

printRecord(url, image, name, price)

}

}.close()

// release the file writer resources

csvFile.close()

}

Run the scraper again:

./gradlew run

It'll take a bit more time than before since it needs to scrape more pages. This time, the output CSV will contain more than the first 16 elements:

Congratulations! You just learned how to perform Kotlin web crawling!

Avoid Getting Blocked When Scraping With Kotlin

Many sites protect their data with anti-bot technologies. Even though data is publicly available on their site, they don't want external agents to retrieve it easily. So, they use technologies designed to detect and block automated scripts.

These anti-bot solutions represent the biggest challenges to your Kotlin scraping operation. While bypassing them all is not easy, two general tips are useful for performing web scraping without getting blocked:

- Set a real-world User-Agent.

- Use a proxy server to hide your IP.

See how to implement them in Skrape{it}.

Get the string of a User-Agent from a real-world browser and the URL of a free proxy from a site like Free Proxy List. Configure them in the request function as below:

val userAgentString = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/121.0.0.0 Safari/537.36"

skrape(HttpFetcher) {

// perform a GET request to the specified URL

request {

url = pageURL

userAgent = userAgentString

proxy = proxyBuilder {

type = Proxy.Type.HTTP

host = "212.14.5.22"

port = 3124

}

}

// ...

}

Don't forget to import Proxy with:

import java.net.Proxy

By the time you follow this tutorial, the selected proxy server will no longer work. Bear in mind that free proxies are data-greedy, short-lived, and unreliable. Use them for learning purposes, but avoid them in production!
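At the HTTP level, these two settings boil down to a request header and a proxied connection. To make that concrete, here is a minimal sketch using the JDK's own java.net.http client rather than Skrape{it}. The helper names are hypothetical, and the code only builds the request and client without sending anything.

```kotlin
import java.net.InetSocketAddress
import java.net.ProxySelector
import java.net.URI
import java.net.http.HttpClient
import java.net.http.HttpRequest

// Build a GET request that carries a browser-like User-Agent header.
fun buildRequest(url: String, userAgent: String): HttpRequest =
    HttpRequest.newBuilder(URI.create(url))
        .header("User-Agent", userAgent)
        .GET()
        .build()

// Build a client that routes its traffic through an HTTP proxy.
fun buildProxiedClient(host: String, port: Int): HttpClient =
    HttpClient.newBuilder()
        .proxy(ProxySelector.of(InetSocketAddress(host, port)))
        .build()

fun main() {
    val userAgentString = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/121.0.0.0 Safari/537.36"
    val request = buildRequest("https://scrapeme.live/shop/", userAgentString)
    // show the header the server would receive
    println(request.headers().firstValue("User-Agent").orElse("<none>"))

    val client = buildProxiedClient("212.14.5.22", 3124)
    // true when a proxy selector is configured
    println(client.proxy().isPresent)
}
```

Without the explicit header, HTTP clients typically announce themselves with a library-specific User-Agent, which is easy for a server to flag.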

These two tips are useful to overcome more superficial technologies. However, they are only baby steps to circumvent more complex anti-bot measures. A proxy and a real User-Agent won't be enough when dealing with WAF solutions like Cloudflare.

Verify that by trying to access a Cloudflare-protected site, such as a G2 review page:

package com.kotlin.scraping.demo

import it.skrape.core.*

import it.skrape.fetcher.*

import java.net.Proxy

fun main() {

val userAgentString = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/121.0.0.0 Safari/537.36"

val html: String = skrape(HttpFetcher) {

// perform a GET request to the specified URL

request {

url = "https://www.g2.com/products/jira/reviews"

userAgent = userAgentString

proxy = proxyBuilder {

type = Proxy.Type.HTTP

host = "212.14.5.22"

port = 3124

}

}

response {

// retrieve the HTML element from the

// document as a string

htmlDocument {

html

}

}

}

// print the source HTML of the target page

println(html)

}

The above Kotlin web scraping program will print the following 403 Forbidden error page:

<!doctype html>

<html class="no-js" lang="en-US">

<head>

<title>Attention Required! | Cloudflare</title>

<meta charset="UTF-8">

<!-- omitted for brevity... -->
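Since the block page arrives as ordinary HTML, a scraper can easily mistake it for real content. One rough heuristic is to check the page title before parsing. `looksBlocked` is a hypothetical helper, and it assumes the block page keeps the title shown above.

```kotlin
// Hypothetical heuristic: the Cloudflare block page shown above carries this title.
fun looksBlocked(html: String): Boolean =
    html.contains("<title>Attention Required! | Cloudflare</title>", ignoreCase = true)

fun main() {
    val blockedPage = """
        <!doctype html>
        <html class="no-js" lang="en-US">
        <head>
        <title>Attention Required! | Cloudflare</title>
    """.trimIndent()

    println(looksBlocked(blockedPage))               // true
    println(looksBlocked("<title>Products</title>")) // false
}
```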

Is there a solution to this obstacle? Of course, there is, and it's called ZenRows! On top of User-Agent and IP rotation, this scraping API provides the best anti-bot toolkit to avoid any blocks. These are only some of the dozens of features offered by the tool.

Integrate Skrape{it} with ZenRows for maximum effectiveness!

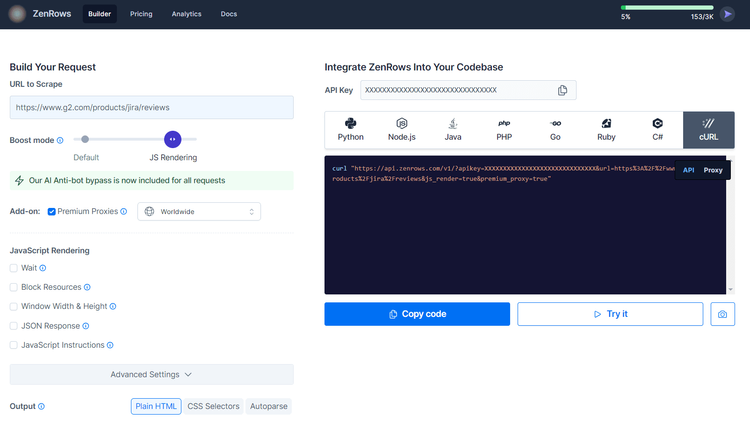

Sign up for free to get your first 1,000 credits and reach the Request Builder page:

Assume you want to scrape the Cloudflare-protected G2.com page mentioned before. Achieve that goal with the following instructions:

- Paste the target URL (https://www.g2.com/products/jira/reviews) into the "URL to Scrape" input.

- Check "Premium Proxy" to get rotating IPs.

- Enable the "JS Rendering" feature (the User-Agent rotation and AI-powered anti-bot toolkit are included for you by default).

- Select the “cURL” option on the right and then the “API” mode to get the complete URL of the ZenRows API.

Pass the generated URL to the request function:

package com.kotlin.scraping.demo

import it.skrape.core.*

import it.skrape.fetcher.*

fun main() {

val html: String = skrape(HttpFetcher) {

// perform a GET request to the specified URL

request {

url = "https://api.zenrows.com/v1/?apikey=<YOUR_ZENROWS_API_KEY>&url=https%3A%2F%2Fwww.g2.com%2Fproducts%2Fjira%2Freviews&js_render=true&premium_proxy=true"

}

response {

// retrieve the HTML element from the

// document as a string

htmlDocument {

html

}

}

}

// print the source HTML of the target page

println(html)

}

Execute your script again, and this time, it'll print the source HTML of the target G2 page:

<!DOCTYPE html>

<head>

<meta charset="utf-8" />

<link href="https://www.g2.com/assets/favicon-fdacc4208a68e8ae57a80bf869d155829f2400fa7dd128b9c9e60f07795c4915.ico" rel="shortcut icon" type="image/x-icon" />

<title>Jira Reviews 2024: Details, Pricing, & Features | G2</title>

<!-- omitted for brevity ... -->

That's impressive! Bye-bye 403 errors. You just learned how to use ZenRows for web scraping with Kotlin.
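Notice that the target URL inside the API URL is percent-encoded. If you'd rather build that URL in code than copy it from the Request Builder, a helper like the one below could do it. `buildZenRowsUrl` is a hypothetical name, and the query parameters simply mirror the generated URL used above.

```kotlin
import java.net.URLEncoder

// Hypothetical helper: the query parameters mirror the generated URL above.
fun buildZenRowsUrl(apiKey: String, targetUrl: String): String {
    // the target URL must be percent-encoded to travel safely as a query parameter
    val encodedTarget = URLEncoder.encode(targetUrl, "UTF-8")
    return "https://api.zenrows.com/v1/?apikey=$apiKey&url=$encodedTarget&js_render=true&premium_proxy=true"
}

fun main() {
    println(buildZenRowsUrl("<YOUR_ZENROWS_API_KEY>", "https://www.g2.com/products/jira/reviews"))
}
```

Pass the returned string as the url in the request block, exactly as with the hard-coded version.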

Use a Headless Browser With Kotlin

As mentioned earlier, Skrape{it} offers different fetchers. HttpFetcher is great for sites where the content is embedded in the HTML documents returned by the server. For client-side sites that rely on JavaScript for rendering, you need to use BrowserFetcher.

That special fetcher behaves like a headless browser, giving you access to the rendered DOM instead of the raw HTML returned by the server. To show its power, let's target a web page that needs JavaScript execution.

A good example is the Infinite Scrolling demo. This page dynamically loads new data as the user scrolls down via JS:

Follow the instructions below and see how to scrape products from that page with Skrape{it}!

Call skrape with BrowserFetcher and adapt the scraping logic accordingly. By sheer coincidence, the Product class will remain the same compared to what we saw before:

package com.kotlin.scraping.demo

import it.skrape.core.*

import it.skrape.fetcher.*

import it.skrape.selects.html5.*

data class Product(

var url: String = "",

var image: String = "",

var name: String = "",

var price: String = ""

)

fun main() {

val products: List<Product> = skrape(BrowserFetcher) {

// perform a GET request to the specified URL

request {

url = "https://scrapingclub.com/exercise/list_infinite_scroll/"

}

// to perform web scraping and return a

// list of Product instances

extractIt<ArrayList<Product>> {

// to parse the HTML content of the target page

htmlDocument {

// select the product nodes with a CSS selector

".post" {

// get all product elements

findAll {

forEach { productHtmlElement ->

val baseURL = "https://scrapingclub.com"

// create a new product with the scraping logic

val product = Product(

url = baseURL + productHtmlElement.a { findFirst { attribute("href") } },

image = baseURL + productHtmlElement.img { findFirst { attribute("src") } },

name = productHtmlElement.h4 { findFirst { text } },

price = productHtmlElement.h5 { findFirst { text } }

)

// add the scraped product to the list

it.add(product)

}

}

}

}

}

}

// print the scraped products

println(products)

}

Run your new script:

./gradlew run

That will produce:

[

Product(url=https://scrapingclub.com/exercise/list_basic_detail/90008-E/, image=https://scrapingclub.com/static/img/90008-E.jpg, name=Short Dress, price=$24.99),

Product(url=https://scrapingclub.com/exercise/list_basic_detail/96436-A/, image=https://scrapingclub.com/static/img/96436-A.jpg, name=Patterned Slacks, price=$29.99),

// omitted for brevity...

Product(url=https://scrapingclub.com/exercise/list_basic_detail/96643-A/, image=https://scrapingclub.com/static/img/96643-A.jpg, name=Short Lace Dress, price=$59.99),

Product(url=https://scrapingclub.com/exercise/list_basic_detail/94766-A/, image=https://scrapingclub.com/static/img/94766-A.jpg, name=Fitted Dress, price=$34.99)

]
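The script above builds absolute URLs by prepending baseURL to each href, which works because this page uses root-relative links. As a possibly more robust alternative, the JDK's java.net.URI can resolve an href against the page URL, leaving already-absolute links untouched. `toAbsoluteUrl` is a hypothetical helper name.

```kotlin
import java.net.URI

// Resolve an href against the URL of the page it was found on.
fun toAbsoluteUrl(pageUrl: String, href: String): String =
    URI.create(pageUrl).resolve(href).toString()

fun main() {
    val page = "https://scrapingclub.com/exercise/list_infinite_scroll/"

    // root-relative link: prints https://scrapingclub.com/exercise/list_basic_detail/90008-E/
    println(toAbsoluteUrl(page, "/exercise/list_basic_detail/90008-E/"))

    // absolute link: printed unchanged
    println(toAbsoluteUrl(page, "https://scrapingclub.com/static/img/90008-E.jpg"))
}
```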

Here we go! You can now call yourself a Kotlin web scraping expert!

Conclusion

This step-by-step tutorial guided you through the process of doing web scraping in Kotlin. You learned the fundamentals and then dug into more complex aspects. You've become a web scraping Kotlin enthusiast!

You now know why Kotlin is great for robust, effective, reliable scraping. You can also use Skrape{it} for web scraping and crawling against server-side and client-side pages.

The main challenge? No matter how advanced your Kotlin script is, anti-scraping measures will still be able to stop it. Avoid them all with ZenRows, a scraping API with the most effective anti-bot bypass features. Retrieving online data from any site is only one API call away!
