Anti-bot technologies can easily detect and block your scraper. Luckily, as a Node.js developer, you can rely on Node Unblocker to implement a proxy and get access to your target web pages.

What Is Node Unblocker

Node Unblocker is an open-source Node.js library with an Express-compatible API for creating a web proxy. It lets users access websites that are blocked or restricted in a specific location by routing requests through a proxy server that retrieves the desired content and sends it back.

Because the target website sees the proxy server's IP address and location instead of yours, this method can bypass network restrictions.

How to Create a Node Unblocker Proxy Service

Now that you have an idea of what Node Unblocker is, let's create a simple proxy service using it. For this tutorial, we'll use the Express web framework to handle requests and, of course, unblocker to set up the proxy service.

Ensure you have Node.js (which ships with npm) installed on your local machine before moving on.

1. Run a Server with Express

Let's start by creating a new Node.js project with the esnext initializer, which sets up the package as an ES module:

npm init esnext

Then, proceed to install the required dependencies: express and unblocker.

npm install express unblocker

Now, create an index.js file in your project root and import the required dependencies:

import express from 'express';

import Unblocker from 'unblocker';

The unblocker package exports an Unblocker class as its default export. Its constructor takes a configuration options object; one of the options is prefix, which specifies the path that proxied URLs begin with.
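As a rough sketch of what a non-empty configuration might look like, the object below sets prefix explicitly and adds one request middleware function. The requestMiddleware option and the data.headers field are assumptions based on the unblocker README, so check them against the version you install. The setUserAgent function and the user-agent value are hypothetical names chosen for illustration.

```javascript
// Hypothetical request middleware: overrides the User-Agent header that is
// forwarded to the target site. Assumes `data.headers` holds the outgoing
// request headers, as described in the unblocker README.
function setUserAgent(data) {
  data.headers['user-agent'] = 'my-scraper/1.0 (illustrative value)';
}

// Configuration object that could be passed to `new Unblocker(config)`.
const config = {
  prefix: '/proxy/', // path that proxied URLs begin with
  requestMiddleware: [setUserAgent],
};

// Quick local check with a fake `data` object; no server is required.
const fakeData = { url: 'https://ident.me/', headers: { 'user-agent': 'node' } };
config.requestMiddleware.forEach((fn) => fn(fakeData));
console.log(fakeData.headers);
```

Keeping the middleware a plain function of a data object makes it easy to exercise on its own before wiring it into the proxy.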

Next, create a new Express app and an instance of unblocker:

const app = express();

// Create a new instance of unblocker

const unblocker = new Unblocker({});

We've passed in an empty object here, meaning that unblocker will use the default configuration: the prefix is /proxy/.
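To make the role of the prefix concrete: a proxied URL is the server's address, followed by the prefix, followed by the full target URL. The sketch below shows that composition and its inverse. Both buildProxiedUrl and extractTargetUrl are hypothetical helpers written for this article, not part of unblocker, and the second is only a simplified picture of what a proxy has to do with an incoming path.

```javascript
// Builds the URL to request from a Node Unblocker server.
// `proxyBase` is the server origin; `prefix` must match the server's config.
function buildProxiedUrl(proxyBase, targetUrl, prefix = '/proxy/') {
  const base = proxyBase.replace(/\/+$/, '');           // drop trailing slashes
  const path = `/${prefix.replace(/^\/+|\/+$/g, '')}/`; // normalize to "/proxy/"
  return `${base}${path}${targetUrl}`;
}

// Recovers the target URL from a proxied request path, or returns null if
// the path does not start with the prefix.
function extractTargetUrl(requestPath, prefix = '/proxy/') {
  return requestPath.startsWith(prefix) ? requestPath.slice(prefix.length) : null;
}

console.log(buildProxiedUrl('http://localhost:5005', 'https://ident.me/'));
// http://localhost:5005/proxy/https://ident.me/
console.log(extractTargetUrl('/proxy/https://ident.me/'));
// https://ident.me/
```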

Because of Node Unblocker's Express-compatible API, we only need to register its instance as middleware to integrate it.

// Register the unblocker instance as a middleware

app.use(unblocker);

The last step is calling the listen method on the Express app to start the server on port 5005, then chaining the on method so that Unblocker can proxy WebSockets when an upgrade event is triggered:

const PORT = process.env.PORT || 5005;

// Start server

app.listen(PORT, () => console.log(`Listening on port ${PORT}`))

// Allow unblocker to proxy websockets

.on('upgrade', unblocker.onUpgrade);

Here's the full code:

import express from 'express';

import Unblocker from 'unblocker';

const app = express();

// Create a new instance of unblocker

const unblocker = new Unblocker({});

// Register the unblocker instance as a middleware

app.use(unblocker);

const PORT = process.env.PORT || 5005;

// Start server

app.listen(PORT, () => console.log(`Listening on port ${PORT}`))

// Allow unblocker to proxy websockets

.on('upgrade', unblocker.onUpgrade);

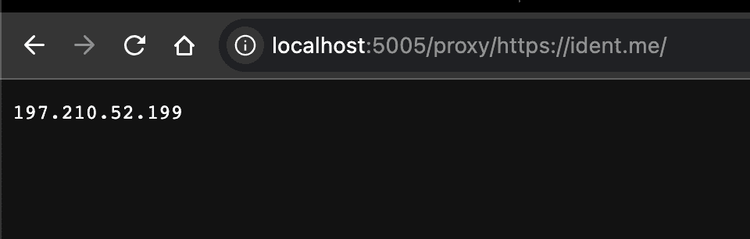

Now, run the index.js file using node index.js to spin up your server on port 5005. To test it, append your target URL to the proxy prefix. We'll use https://ident.me/ as a target page. Open the following link in your browser:

http://localhost:5005/proxy/https://ident.me/

You should see output that displays your server's IP address.

2. Create a Heroku App

Having successfully tested a lightweight Node Unblocker proxy service locally, we can move on to deploying it to a platform like Heroku.

It's important to be aware of a hosting platform's Acceptable Use Policy (AUP) before putting any scraping or proxy software on it. Some hosting providers will not permit such software at all, and others, like Heroku, will only do so with strict requirements and discretion.

First of all, head to Heroku to create an account. Heroku no longer offers a free tier, but the basic plan costs $5, and you can subscribe to it in the billing section of your account.

Then, you'll need the Heroku CLI tool to deploy, so install it using the method for your operating system. Once it's installed, log in to your account using the heroku login command.

The next step is creating a new app on your dashboard or alternatively via the CLI:

heroku apps:create <app-name>

# E.g., heroku apps:create nblocker

Replace <app-name> here with a suitable name for your app.

Now, specify the start script and Node.js version in your package.json file:

{

"name": "express-unblocker",

"version": "1.0.0",

"type": "module",

"module": "index.js",

"scripts": {

"start": "node index.js"

},

"dependencies": {

"express": "^4.18.2",

"unblocker": "^2.3.0"

},

"engines": {

"node": "18.x"

}

}

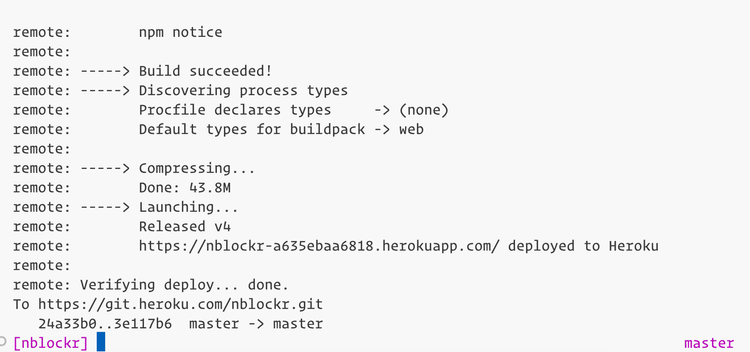

Run the below commands to deploy:

git init

git add .

git commit -m "initial commit"

heroku git:remote -a <app-name>

git push heroku master

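If your local branch is named main rather than master, push that branch instead (git push heroku main). Heroku will detect that your app is a Node.js app and build it as such, and your proxy service will be assigned a domain after deployment based on the app name you specified.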

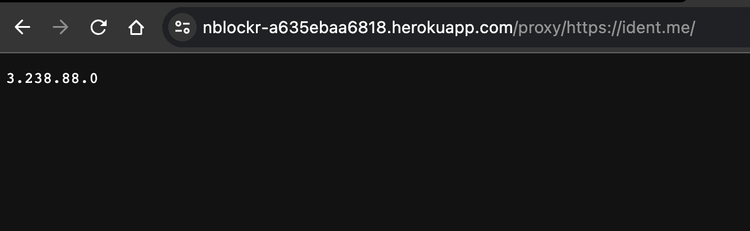

Go ahead and test it with https://ident.me/ in your browser to confirm that the deployed service is working like in the image below.

Fantastic! You've successfully deployed a working Node Unblocker proxy service to Heroku.

3. Node Unblocker for Web Scraping

To use Node Unblocker for scraping, you can first create a proxy pool or network by simply deploying the above implementation on multiple rented remote servers. Secondly, you'll need to create a scraper or bot to use a random server from the proxy pool for each request.

The scraper could be implemented with Axios like this:

import axios from 'axios';

// Node Unblocker Servers

const proxies = [

'http://3.237.11.18:5005',

'http://3.237.11.19:5005',

'http://3.237.11.20:5005',

]

const proxy = proxies[Math.floor(Math.random() * proxies.length)],

url = 'https://ident.me'

axios.get(`${proxy}/proxy/${url}`)

.then(({ data }) => {

console.log({ data })

}).catch(err => console.error(err))

The script could be enhanced to improve the rotating proxy logic so that the scraper can better distribute connections to the several available Node Unblocker proxies.
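One possible direction, sketched below under our own assumptions, is to replace the random pick with a round-robin rotator that temporarily skips servers that have failed. The createProxyRotator function, its cooldownMs parameter, and the 60-second default are hypothetical and not part of Node Unblocker or Axios.

```javascript
// Hypothetical round-robin rotator: cycles through the pool in order and
// skips proxies that were marked as failed within the cooldown window.
function createProxyRotator(proxies, cooldownMs = 60000) {
  if (proxies.length === 0) throw new Error('Proxy pool is empty');
  const failedAt = new Map(); // proxy -> timestamp of its last failure
  let index = 0;

  return {
    // Returns the next usable proxy, or null when all are cooling down.
    next(now = Date.now()) {
      for (let tried = 0; tried < proxies.length; tried++) {
        const proxy = proxies[index];
        index = (index + 1) % proxies.length;
        const failed = failedAt.get(proxy);
        if (failed === undefined || now - failed >= cooldownMs) return proxy;
      }
      return null;
    },
    // Records a failure so the proxy is skipped until the cooldown passes.
    markFailed(proxy, now = Date.now()) {
      failedAt.set(proxy, now);
    },
  };
}

const rotator = createProxyRotator([
  'http://3.237.11.18:5005',
  'http://3.237.11.19:5005',
  'http://3.237.11.20:5005',
]);
console.log(rotator.next()); // http://3.237.11.18:5005
console.log(rotator.next()); // http://3.237.11.19:5005
```

A scraper would call next() before each request and markFailed() in its error handler, so traffic is spread evenly and an unhealthy server gets a rest before it's used again.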

Alternatively, check other options for a web scraping proxy.

4. Next Steps

If you need more browser-specific features, such as JavaScript rendering, then you'll need to drive a headless browser with a tool like Selenium or Puppeteer. A headless browser provides a full browser environment, enabling you to automate interactions with websites, fill out forms, simulate user behavior, and more.

Check out our article on headless browsers for web scraping.

Limitations of Node Unblocker and Alternatives

Node Unblocker is easy to set up and deploy, but it has some serious limitations when used as a proxy for web scraping: you can still get blocked, and the cost of maintenance is high.

Let's explore them and find solutions.

Getting Blocked while Scraping

When using Node Unblocker proxies, you'll face some extra difficulties, like CAPTCHAs, rate limiting, session management, and JavaScript challenges. If your proxy server fails to handle these, the chances of getting blocked by websites are high.
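As a minimal sketch of how a scraper might notice some of these situations, the function below flags responses that look like a block. The status codes and body markers are illustrative assumptions, not an exhaustive or official list, and looksBlocked is a hypothetical helper that isn't part of Node Unblocker.

```javascript
// Heuristic check for a blocked response. The status codes and body markers
// below are illustrative assumptions; real sites vary widely.
function looksBlocked(status, body = '') {
  if (status === 403 || status === 429) return true; // forbidden or rate limited
  const text = body.toLowerCase();
  const markers = ['captcha', 'access denied', 'unusual traffic'];
  return markers.some((marker) => text.includes(marker));
}

console.log(looksBlocked(429));                                 // true
console.log(looksBlocked(200, '<title>CAPTCHA check</title>')); // true
console.log(looksBlocked(200, '203.0.113.7'));                  // false
```

A check like this only detects a block. It doesn't solve a CAPTCHA or pass a JavaScript challenge, which is why the limitations in this section remain.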

Node Unblocker is unlikely to work out of the box for OAuth login forms and anything that uses postMessage, which means your proxies won't work properly with more protected websites such as Google, Twitter, or Discord.

Many developers consider using a web scraping API like ZenRows to get the job done.

Maintenance Work

From time to time, you'll need to perform maintenance tasks such as installing new patches and fixing software bugs, and that downtime can interfere with your ability to access the content you want.

Also, you may need to add more Node Unblocker proxies to reduce the frequency at which the existing ones are used to access target websites. Generally, the work involved in maintaining Node Unblocker is huge and not cost-effective.
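The effect of pool size on per-proxy load is simple arithmetic, sketched below. The request rates are made-up numbers for illustration, and both function names are hypothetical.

```javascript
// Average request rate each proxy sees when load is spread evenly.
function requestsPerProxy(totalPerMinute, proxyCount) {
  return totalPerMinute / proxyCount;
}

// Smallest pool size that keeps every proxy at or below a per-proxy budget.
function proxiesNeeded(totalPerMinute, maxPerProxyPerMinute) {
  return Math.ceil(totalPerMinute / maxPerProxyPerMinute);
}

console.log(requestsPerProxy(600, 3)); // 200
console.log(proxiesNeeded(600, 50));   // 12
```

In this example, three proxies handling 600 requests per minute each send 200 requests per minute to the target, while keeping each proxy under 50 requests per minute would take 12 servers, each of which has to be deployed and maintained.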

Other limitations of Node Unblocker include not always reproducing the full functionality of the original target website and failing to meet the compatibility requirements or restrictions imposed by certain websites or web applications.

For these reasons, web scraping APIs are gaining popularity among developers.

Conclusion

The increasing challenges of accessing web content demand new solutions. In this article, we looked at Node Unblocker, a Node.js library that provides a web proxy that processes data and relays it to the client. Now you know how to create a Node Unblocker proxy service and how to deploy one to Heroku.

Aside from the easy setup of Node Unblocker, we also learned about its limitations and why they prevent it from being a suitable solution for cost-effective web scraping. We also recommended using a web scraping API like ZenRows instead. You can sign up now to get your free API key.

Frequent Questions

What Does Node Unblocker Do?

Node Unblocker is a tool used to bypass network restrictions, allowing users to access content from blocked websites. It bypasses restrictions by rerouting internet traffic, hiding its origin and maintaining anonymity.
