Using a PHP web scraping library to build a scraper helps you save time and increase your success rate. Whether you're extracting data to monitor stock prices, feed marketing teams, or analyze e-commerce trends, these libraries will be valuable.

We tested many PHP libraries on JavaScript rendering, proxy support and other technical factors, then picked the best ones. We'll discuss each and walk through a quick coding example to see how it works.

Stay close!

Which Libraries Are Used for Web Scraping in PHP?

Plenty of PHP packages are available online, but only a few are reliable, practical, and easy to use. Here are the ten PHP web scraping libraries we found most effective for scraping different types of websites, like Amazon, Instagram, Google and so on.

Let's dig into the details of each tool and scrape the title, author and tag of articles on freeCodeCamp News.

| PHP Library | HTML Parsing | JavaScript Rendering | Proxy Support | Anti-bot | Good Documentation |
|---|---|---|---|---|---|
| ZenRows | - | ✅ | ✅ | ✅ | ✅ |
| Simple HTML DOM | ✅ | - | - | - | ✅ |
| cURL | - | - | ✅ | - | ✅ |
| Goutte | ✅ | - | - | - | - |
| Guzzle | ✅ | ✅ | ✅ | - | ✅ |
| Panther | ✅ | ✅ | ✅ | - | ✅ |
| DiDOM | ✅ | - | - | - | ✅ |
| PHP-Webdriver | ✅ | ✅ | ✅ | - | ✅ |
| HTTPful | - | - | ✅ | - | - |
| hQuery | ✅ | - | ✅ | - | ✅ |

1. ZenRows

ZenRows is an all-in-one PHP scraping library that will help you avoid getting blocked while scraping. It includes rotating proxies, headless browsers, JavaScript rendering and other essential features for anyone serious about getting data from web pages. You can get your free API key.

👍 Pros:

- An easy-to-use PHP data scraping library.

- Fast and smart proxy service at a low price to avoid bot detection.

- Geo-targeting configuration to allow you to bypass restrictions based on location.

- Anti-bot and CAPTCHA bypass.

- It supports concurrency, plus HTTP and HTTPS protocols.

- Prepared scrapers for popular websites like Google and Amazon.

- It supports JavaScript-rendered web pages.

👎 Cons:

- It doesn't provide proxy browser extensions.

- You'll need another library for HTML parsing.

How to Scrape a Web Page with ZenRows

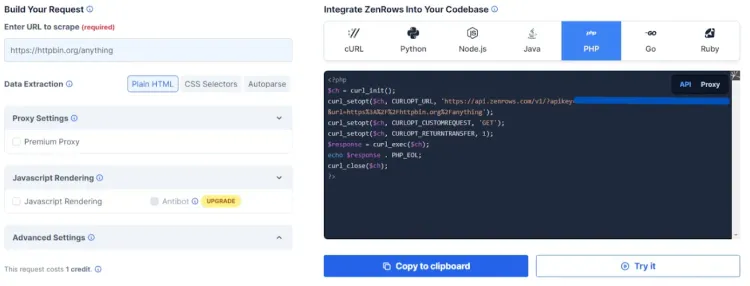

Step 1: Log in to ZenRows and generate the PHP code

To scrape a web page with ZenRows, create a free account and sign in to the ZenRows dashboard.

Paste the URL to scrape (https://www.freecodecamp.org/news), select Plain HTML, and then pick PHP as the language on the right. This is the code we'll use.

$ch = curl_init();

curl_setopt($ch, CURLOPT_URL, "https://api.zenrows.com/v1/?apikey=<YOUR_ZENROWS_API_KEY>&url=https%3A%2F%2Ffreecodecamp.org%2Fnews");

curl_setopt($ch, CURLOPT_CUSTOMREQUEST, "GET");

curl_setopt($ch, CURLOPT_RETURNTRANSFER, 1);

$response = curl_exec($ch);

curl_close($ch);
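The query string in the generated code is percent-encoded by hand. As a minimal sketch, the same request URL could be assembled with PHP's built-in http_build_query(), which handles the encoding. The helper name buildZenRowsUrl() is hypothetical, and only the apikey and url parameters from the snippet above are assumed.

```php
<?php
// Hypothetical helper: builds the API request URL from its two query parameters.
function buildZenRowsUrl(string $apiKey, string $targetUrl): string
{
    // http_build_query() percent-encodes each value (":" becomes %3A, "/" becomes %2F).
    $query = http_build_query(["apikey" => $apiKey, "url" => $targetUrl], "", "&");

    return "https://api.zenrows.com/v1/?" . $query;
}

echo buildZenRowsUrl("YOUR_ZENROWS_API_KEY", "https://freecodecamp.org/news"), PHP_EOL;
```

The returned string can be passed to curl_setopt($ch, CURLOPT_URL, ...) in place of the hard-coded URL, which makes it easier to swap in a different target page.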

Step 2: Parse the HTML

Since we'll need to parse HTML, let's use the popular Simple HTML DOM as a complementary tool.

To install Simple HTML DOM, download it from SourceForge and extract the simple_html_dom.php file to your coding environment.

Next, copy the generated PHP code from ZenRows into your script and load Simple HTML DOM with an include statement. This adds the HTML parsing needed to group the article titles, authors and tags.

// Make use of the plain HTML obtained via ZenRows

// together with Simple HTML DOM library to allow for proper data grouping

// Include the Simple HTML DOM library to have access to all the functions

include("simple_html_dom.php");

$html = str_get_html($response);

The next step is to find the elements using the find() method and then map the extracted data to an array using array_map():

// Find elements by descendants and class names

$titles = $html->find("h2.post-card-title");

$tags = $html->find("span.post-card-tags");

$authors = $html->find("a.meta-item");

// Iterate over the arrays containing the elements

// and make use of the plaintext property to access only the text values

$data = array_map(function ($a1, $a2, $a3) {

$new = array(

"title" => trim($a1->plaintext),

"tag" => trim($a2->plaintext),

"author" => trim($a3->plaintext)

);

return $new;

}, $titles, $tags, $authors);

print_r($data);

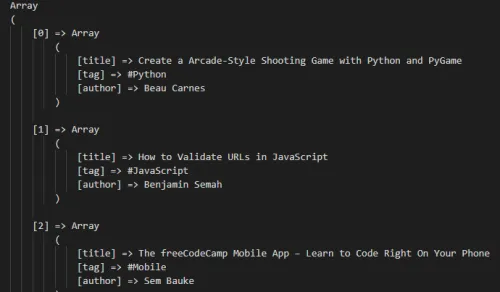

Go ahead and run the code to scrape the target URL. Your output should look like this:

Congratulations, you have successfully scraped a web page using ZenRows. In case you missed a step, here's the full PHP script:

$ch = curl_init();

curl_setopt($ch, CURLOPT_URL, "https://api.zenrows.com/v1/?apikey=<YOUR_ZENROWS_API_KEY>&url=https%3A%2F%2Ffreecodecamp.org%2Fnews");

curl_setopt($ch, CURLOPT_CUSTOMREQUEST, "GET");

curl_setopt($ch, CURLOPT_RETURNTRANSFER, 1);

$response = curl_exec($ch);

curl_close($ch);

// Make use of the plain HTML obtained via ZenRows

// together with Simple HTML DOM library to allow for proper data grouping

// Include the Simple HTML DOM library to have access to all the functions

include("simple_html_dom.php");

$html = str_get_html($response);

// Find elements by descendants and class names

$titles = $html->find("h2.post-card-title");

$tags = $html->find("span.post-card-tags");

$authors = $html->find("a.meta-item");

// Iterate over the arrays containing the elements

// and make use of the plaintext property to access only the text values

$data = array_map(function ($a1, $a2, $a3) {

$new = array(

"title" => trim($a1->plaintext),

"tag" => trim($a2->plaintext),

"author" => trim($a3->plaintext)

);

return $new;

}, $titles, $tags, $authors);

print_r($data);

2. Simple HTML DOM

The Simple HTML DOM library is a popular PHP DOM library that provides a way to access and manipulate HTML elements using CSS selectors. It's easy to use and can scrape data from simple websites.

👍 Pros:

- Easy to parse and extract data from HTML since it requires only basic knowledge.

- Intuitive API using familiar PHP syntax.

- Lightweight and efficient.

👎 Cons:

- Not as powerful or flexible as other PHP web scraping libraries.

- It may not support newer HTML features and standards.

- Not suitable for parsing complex or heavily-formatted HTML pages.

How to Scrape with the Simple HTML DOM Library

No-brainer: the first step to using Simple HTML DOM is to install it. Download the PHP crawler library from SourceForge and extract the simple_html_dom.php file to your coding environment.

Use the file_get_html() method to get the content of a page, parse it and return a DOM object. That object provides methods to access and manipulate the content.

// Include the Simple HTML DOM library to have access to all the functions

include("simple_html_dom.php");

// Create DOM object using the file_get_html method

$html = file_get_html("https://www.freecodecamp.org/news");

The next step is to use the find() method to locate elements in the HTML document, as we did in the ZenRows example:

// Find elements by descendants and class names

$titles = $html->find("h2.post-card-title");

$tags = $html->find("span.post-card-tags");

$authors = $html->find("a.meta-item");

The find() method is used to search for three different sets of elements:

- Title: h2 elements with the class post-card-title.

- Tag: span elements with the class post-card-tags.

- Author: anchor elements with the class meta-item.

The matching elements are stored in the variables $titles, $tags and $authors.
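To make the selector logic concrete without fetching the live page, here is a rough sketch that runs the same three element-plus-class lookups on a made-up HTML snippet. It uses PHP's bundled DOM extension (DOMDocument and DOMXPath) rather than Simple HTML DOM, so it is a stand-in for find(), not that library's API. The sample markup and the helper name textsByClass() are assumptions for illustration.

```php
<?php
// Made-up markup that mimics one post card plus an unrelated heading.
$sampleHtml = '<div>'
    . '<h2 class="sidebar-title">Ignored heading</h2>'
    . '<span class="post-card-tags">#PHP</span>'
    . '<h2 class="post-card-title"> Scraping basics </h2>'
    . '<a class="meta-item" href="#">Jane Doe</a>'
    . '</div>';

// Hypothetical helper: returns the trimmed text of every <tag> carrying the given class.
function textsByClass(DOMXPath $xpath, string $tag, string $class): array
{
    // XPath has no class selector, so match the class as a whole word in @class.
    $query = sprintf(
        "//%s[contains(concat(' ', normalize-space(@class), ' '), ' %s ')]",
        $tag,
        $class
    );

    $texts = [];
    foreach ($xpath->query($query) as $node) {
        $texts[] = trim($node->textContent);
    }

    return $texts;
}

// Collect parser warnings internally instead of printing them.
libxml_use_internal_errors(true);
$dom = new DOMDocument();
$dom->loadHTML($sampleHtml);
libxml_clear_errors();

$xpath = new DOMXPath($dom);

print_r(textsByClass($xpath, "h2", "post-card-title"));
print_r(textsByClass($xpath, "span", "post-card-tags"));
print_r(textsByClass($xpath, "a", "meta-item"));
```

Only the heading with the post-card-title class is returned, which is the same filtering the h2.post-card-title selector expresses.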

To group the results, use the array_map() function to apply a callback to each set of matching elements and return a new array containing the transformed items.

$data = array_map(function ($a1, $a2, $a3) {

$new = array(

"title" => trim($a1->plaintext),

"tag" => trim($a2->plaintext),

"author" => trim($a3->plaintext)

);

return $new;

}, $titles, $tags, $authors);

print_r($data);
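One detail worth keeping in mind: when the input arrays differ in length, array_map() pads the shorter ones with null, so the callback may receive null where it expects an element. The sketch below illustrates that behavior with plain strings standing in for the scraped elements. The sample values and the helper name cleanText() are assumptions, and returning null for a missing field is just one possible design choice.

```php
<?php
// Plain strings stand in for the scraped elements; the tag list is one item short.
$titles  = ["First post", "Second post"];
$tags    = ["#PHP"];
$authors = ["Jane Doe", "John Smith"];

// Hypothetical helper: trims a value, passing a missing (null) value through untouched.
function cleanText(?string $value): ?string
{
    return $value === null ? null : trim($value);
}

// array_map() pads the shorter array with null, so every parameter is nullable.
$data = array_map(function (?string $title, ?string $tag, ?string $author): array {
    return [
        "title"  => cleanText($title),
        "tag"    => cleanText($tag),
        "author" => cleanText($author),
    ];
}, $titles, $tags, $authors);

print_r($data);
```

With Simple HTML DOM elements, the equivalent guard would check for null before reading the plaintext property. Note that grouping by position only lines up correctly when every article exposes all three elements.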

Congratulations! You've successfully scraped a webpage using the Simple HTML DOM PHP web crawler library. Here's what the full code looks like:

// Include the Simple HTML DOM library to have access to all the functions

include("simple_html_dom.php");

// Create DOM object using the file_get_html method

$html = file_get_html("https://www.freecodecamp.org/news");

// Find elements by descendants and class names

$titles = $html->find("h2.post-card-title");

$tags = $html->find("span.post-card-tags");

$authors = $html->find("a.meta-item");

// Iterate over the arrays containing the elements

// and make use of the plaintext property to access only the text values

$data = array_map(function ($a1, $a2, $a3) {

$new = array(

"title" => trim($a1->plaintext),

"tag" => trim($a2->plaintext),

"author" => trim($a3->plaintext)

);

return $new;

}, $titles, $tags, $authors);

print_r($data);

3. cURL

cURL is a data transfer library, available in PHP through the cURL extension, that supports various protocols, including HTTP, HTTPS and FTP. It was designed to make network requests, yet it can be used for web scraping.

👍 Pros:

- A high degree of control and flexibility in HTTP requests.

- It supports a wide range of features, like proxies, SSL/TLS, authentication and cookies.

👎 Cons:

- It has a low-level interface and can be challenging to use.

- It doesn't provide many built-in convenience functions, like automatic retries and error handling.

- There are no methods for finding elements based on attributes, classes or identifiers.

- It can't parse HTML.

How to Scrape a Page with cURL

First, you'll need to initialize a cURL session and set some options for it, then send an HTTP request to a specified URL. The server's response is then stored in a variable, after which you can close the cURL session. Here's what it looks like:

// Initialize curl

$ch = curl_init();

// URL for Scraping

curl_setopt(

$ch,

CURLOPT_URL,

"https://www.freecodecamp.org/news"

);

// Return Transfer True

curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);

$output = curl_exec($ch);

// Closing cURL

curl_close($ch);
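Because cURL leaves error handling to you, it can help to wrap the request in a small function that fails loudly. The following is a minimal sketch, not part of the original walkthrough: the helper name fetchHtml(), the timeout value and the decision to treat any status of 400 or above as an error are all assumptions.

```php
<?php
// Hypothetical helper: fetches a URL and throws instead of silently returning false.
function fetchHtml(string $url, int $timeoutSeconds = 10): string
{
    $ch = curl_init();
    curl_setopt($ch, CURLOPT_URL, $url);
    curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);
    curl_setopt($ch, CURLOPT_FOLLOWLOCATION, true);
    curl_setopt($ch, CURLOPT_CONNECTTIMEOUT, $timeoutSeconds);
    curl_setopt($ch, CURLOPT_TIMEOUT, $timeoutSeconds);

    $body = curl_exec($ch);

    // curl_exec() returns false on transport errors (DNS failure, refused connection, timeout).
    if ($body === false) {
        throw new RuntimeException("cURL error: " . curl_error($ch));
    }

    // A completed transfer can still carry an HTTP error status.
    $status = curl_getinfo($ch, CURLINFO_RESPONSE_CODE);
    if ($status >= 400) {
        throw new RuntimeException("HTTP error: " . $status);
    }

    return $body;
}

// Usage (performs a real request, so it is left commented out here):
// $output = fetchHtml("https://www.freecodecamp.org/news");
```

Calling the function inside a try/catch block lets the script stop or retry before any parsing is attempted on an empty response.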

Since cURL doesn't support HTML parsing, you'll need another library for that. Let's use Simple HTML DOM once again. You can parse the HTML response obtained from the cURL session using this PHP script:

// include the SIMPLE HTML DOM library to introduce

// HTML parsing support

include("simple_html_dom.php");

$html = str_get_html($output);

// Find elements by descendants and class names

$titles = $html->find("h2.post-card-title");

$tags = $html->find("span.post-card-tags");

$authors = $html->find("a.meta-item");

// Iterate over the arrays containing the elements

// and make use of the plaintext property to access only the text values

$data = array_map(function ($a1, $a2, $a3) {

$new = array(

"title" => trim($a1->plaintext),

"tag" => trim($a2->plaintext),

"author" => trim($a3->plaintext)

);

return $new;

}, $titles, $tags, $authors);

print_r($data);

And there you have freeCodeCamp's news data, scraped using the cURL PHP crawler library. Here's what the complete code looks like:

// Initialize curl

$ch = curl_init();

// URL for Scraping

curl_setopt(

$ch,

CURLOPT_URL,

"https://www.freecodecamp.org/news"

);

// Return Transfer True

curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);

$output = curl_exec($ch);

// Closing cURL

curl_close($ch);

// include the SIMPLE HTML DOM library to introduce

// HTML parsing support

include("simple_html_dom.php");

$html = str_get_html($output);

// Find elements by descendants and class names

$titles = $html->find("h2.post-card-title");

$tags = $html->find("span.post-card-tags");

$authors = $html->find("a.meta-item");

// Iterate over the arrays containing the elements

// and make use of the plaintext property to access only the text values

$data = array_map(function ($a1, $a2, $a3) {

$new = array(

"title" => trim($a1->plaintext),

"tag" => trim($a2->plaintext),

"author" => trim($a3->plaintext)

);

return $new;

}, $titles, $tags, $authors);

print_r($data);

4. Goutte

Goutte is a PHP web scraping library and HTTP client that makes it easy to request web pages over HTTP and HTTPS and to extract data from the HTML and XML responses. Goutte uses the Symfony DomCrawler component to crawl data from web pages quickly and effectively.

👍 Pros:

- Easy to use.

- It provides a convenient DOM-style interface for extracting data from HTML documents.

👎 Cons:

- Not as flexible as other PHP scraping libraries.

- It may not be suitable for more advanced web scraping scenarios, like dealing with JavaScript-heavy websites or large volumes of data.

- It's only designed for web scraping and HTML parsing, so it may not be a good fit for other types of HTTP tasks or use cases.

- Badly organized documentation.

How to Scrape with Goutte

Start by installing the Goutte PHP scraper library:

composer require fabpot/goutte

Now, make a GET request to the freeCodeCamp news front page, and store the response in a crawler object.

require "vendor/autoload.php";

// reference the Goutte library

use \Goutte\Client;

// Create a Goutte Client instance

$client = new Client();

// Make requests to obtain the freecodecamp news page

$crawler = $client->request("GET", "https://www.freecodecamp.org/news");

The next step is to crawl the data from the webpage's HTML, which can be done by using the filter() method to find elements on the page that match specific selectors.

// Use the filter method to obtain the <h2> element

// which corresponds to the article titles

$titles = $crawler->filter("h2")->each(function ($node) {

return $node->text();

});

// Filter <span> elements with the class corresponding to article tags

$tags = $crawler->filter("span.post-card-tags")->each(function ($node) {

return $node->text();

});

// Filter link elements corresponding to authors

$authors = $crawler->filter("a.meta-item")->each(function ($node) {

return $node->text();

});

Go ahead and store the data scraped in a single array for easy traversal using a similar approach as the one seen in the last sections.
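As a rough sketch of that grouping step: the each() callbacks above already return text, so the combined array can be built from plain strings. The sample values below are placeholders standing in for the scraped results, not real output.

```php
<?php
// Placeholder values standing in for what each() returns: plain strings, not DOM nodes.
$titles  = ["First post ", "Second post"];
$tags    = ["#PHP", "#JavaScript"];
$authors = ["Jane Doe", " John Smith"];

// Combine the three lists by position into one array of records.
$data = array_map(function (string $title, string $tag, string $author): array {
    return [
        "title"  => trim($title),
        "tag"    => trim($tag),
        "author" => trim($author),
    ];
}, $titles, $tags, $authors);

print_r($data);
```

Since the titles were selected with the broad h2 selector, the three arrays may differ in length on the live page. In that case array_map() pads the shorter ones with null and the string type hints would raise an error, so a narrower selector or a null check may be needed.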

That's it! That's how to use the Goutte PHP web scraping framework to crawl a web page. Here's the full PHP code:

require "vendor/autoload.php";

// reference the Goutte library

use \Goutte\Client;

// Create a Goutte Client instance

$client = new Client();

// Make requests to obtain the freecodecamp news page

$crawler = $client->request("GET", "https://www.freecodecamp.org/news");

// Use the filter method to obtain the <h2> element

// which corresponds to the article titles

$titles = $crawler->filter("h2")->each(function ($node) {

return $node->text();

});

// Filter <span> elements with the class corresponding to article tags

$tags = $crawler->filter("span.post-card-tags")->each(function ($node) {

return $node->text();

});

// Filter link elements corresponding to authors

$authors = $crawler->filter("a.meta-item")->each(function ($node) {

return $node->text();

});

5. Guzzle

Guzzle is a PHP HTTP client that makes it easy to send HTTP requests and interface with online services. It can send requests through cURL or the PHP stream wrapper.

Guzzle is a framework that includes the tools needed to create a robust web service client, like service descriptions for defining the inputs and outputs of an API, parameter validation for verifying that API calls are correctly formed, and error handling for dealing with API errors.

👍 Pros:

- Intuitive interface for sending HTTP requests and handling responses.

- Guzzle supports proxy configuration, parallel requests, caching, middleware, and error handling.

- It can be extended and customized using plugins and event subscribers.

👎 Cons:

- It has a steep learning curve.

- It has a large number of dependencies that can increase the complexity of your project.

How to Scrape a Web Page Using Guzzle

To get started, install Guzzle via Composer:

composer require guzzlehttp/guzzle

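Once Guzzle is installed, use it together with the Symfony DomCrawler component to extract data from the target web page. DomCrawler is a separate package, and its filter() method needs the CssSelector component to handle CSS selectors, so add both to the project (for example, with composer require symfony/dom-crawler symfony/css-selector). Then, make a GET request to the freeCodeCamp news URL and bind the body of the response to the $html variable. This variable can then be used with the Crawler class to extract data from the HTML. Here's what it looks like: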

require "vendor/autoload.php";

use Symfony\Component\DomCrawler\Crawler;

use GuzzleHttp\Client;

$url = "https://www.freecodecamp.org/news/";

// Create the Client instance

$client = new Client();

// Create a request to the FreeCodeCamp URL

$response = $client->request("GET", $url);

// Bind the response body to the html variable using Guzzle's getBody() method

$html = $response->getBody();

Next, use the Symfony DomCrawler instance Crawler() to extract data from the HTML of a web page. Use the filter() method to find elements on the page that match specific selectors and then use anonymous functions to extract the text content of each matching element.

// Create a Crawler instance using the html binding

$crawler = new Crawler($html->getContents());

// Filter the Crawler object based on the h2 element and return only the inner text

$titles = $crawler->filter("h2")->each(function (Crawler $node, $i) {

return $node->text();

});

// Filter the Crawler object based on the span element

// and the corresponding class and return only the inner text

$tags = $crawler->filter("span.post-card-tags")->each(function (Crawler $node, $i) {

return $node->text();

});

// Filter the Crawler object based on the anchor element

// and the corresponding class and return only the inner text

$authors = $crawler->filter("a.meta-item")->each(function (Crawler $node, $i) {

return $node->text();

});

Similarly to other libraries seen before, make use of the array_map() function to present and group your array data together. With that, congratulations! You've just used the Guzzle PHP web scraping library to scrape the target URL. And if you got lost along the line, here's what the full script looks like:

require "vendor/autoload.php";

use Symfony\Component\DomCrawler\Crawler;

use GuzzleHttp\Client;

$url = "https://www.freecodecamp.org/news/";

// Create the Client instance

$client = new Client();

// Create a request to the FreeCodeCamp URL

$response = $client->request("GET", $url);

// Bind the response body to the html variable using Guzzle's getBody() method

$html = $response->getBody();

// Create a Crawler instance using the html binding

$crawler = new Crawler($html->getContents());

// Filter the Crawler object based on the h2 element and return only the inner text

$titles = $crawler->filter("h2")->each(function (Crawler $node, $i) {

return $node->text();

});

// Filter the Crawler object based on the span element

// and the corresponding class and return only the inner text

$tags = $crawler->filter("span.post-card-tags")->each(function (Crawler $node, $i) {

return $node->text();

});

// Filter the Crawler object based on the anchor element

// and the corresponding class and return only the inner text

$authors = $crawler->filter("a.meta-item")->each(function (Crawler $node, $i) {

return $node->text();

});

6. Panther

Panther is a PHP library that provides an easy-to-use interface for controlling real browsers and extracting data from the pages they load. It runs the browsers already installed on your PC in headless mode, eliminating the need to install new software.

Since the Panther PHP web scraping library works with headless browsers, it can scrape both dynamic and static sites.

👍 Pros:

- You can automate the web scraping process and lead generation across multiple web browsers.

- It provides a rich set of features for interacting with web pages, such as filling out forms, clicking buttons and extracting elements.

- It's well-documented and actively maintained, with a large and supportive community.

👎 Cons:

- It may not be able to bypass certain types of anti-scraping measures, such as CAPTCHAs or IP blocking.

How to Scrape with Panther's Help

Start by installing the Panther PHP web scraping library, along with ChromeDriver and GeckoDriver, using the following Composer commands:

composer require symfony/panther

composer require --dev dbrekelmans/bdi

vendor/bin/bdi detect drivers

Once installed, use the Symfony Panther library to simulate a web browser and make a GET request to the target URL using the get() method, as well as take a screenshot of the page using the takeScreenshot() method:

use Symfony\Component\Panther\Client;

require __DIR__ . "/vendor/autoload.php"; // Composer's autoloader

// Use the chrome browser driver

$client = Client::createChromeClient();

// get response

$response = $client->get("https://www.freecodecamp.org/news/");

// take screenshot and store in current directory

$response->takeScreenshot($saveAs = "zenrows-freecodecamp.jpg");

Go ahead and use Panther to extract specific information, like tags, author names and article titles from the target URL:

// let's bind article tags

$tags = $response->getCrawler()->filter("span.post-card-tags")->each(function ($node) {

return trim($node->text()) . PHP_EOL;

});

// let's bind author names

$authors = $response->getCrawler()->filter("a.meta-item")->each(function ($node) {

return trim($node->text()) . PHP_EOL;

});

// let's bind article titles

$titles = $response->getCrawler()->filter("h2")->each(function ($node) {

return trim($node->text()) . PHP_EOL;

});

Afterward, you can use a mapping function like the one used in the above sections. With that, you've finally scraped a web page using Panther!

This is the complete code:

use Symfony\Component\Panther\Client;

require __DIR__ . "/vendor/autoload.php"; // Composer's autoloader

// Use the chrome browser driver

$client = Client::createChromeClient();

// get response

$response = $client->get("https://www.freecodecamp.org/news/");

// take screenshot and store in current directory

$response->takeScreenshot($saveAs = "zenrows-freecodecamp.jpg");

// let's bind article tags

$tags = $response->getCrawler()->filter("span.post-card-tags")->each(function ($node) {

return trim($node->text()) . PHP_EOL;

});

// let's bind author names

$authors = $response->getCrawler()->filter("a.meta-item")->each(function ($node) {

return trim($node->text()) . PHP_EOL;

});

// let's bind article titles

$titles = $response->getCrawler()->filter("h2")->each(function ($node) {

return trim($node->text()) . PHP_EOL;

});

// Use the array_map to bind the arrays together into one array

$data = array_map(function ($title, $tag, $author) {

$new = array(

"title" => trim($title),

"tag" => trim($tag),

"author" => trim($author)

);

return $new;

}, $titles, $tags, $authors);

print_r($data);

7. DiDOM

The DiDOM package is a simple and lightweight HTML parser and document generator for PHP. It provides a fluent interface for creating and manipulating HTML documents and elements.

Some DiDOM features include support for XPath expressions, a clean and easy-to-use API, support for custom callbacks, and a wide range of options to fine-tune the scraping process. It's open-source and released under the MIT license.

👍 Pros:

- Easy to use PHP web scraping library.

- Intuitive API.

- It allows you to parse and extract data from HTML pages.

- Lightweight and efficient.

👎 Cons:

- Not as flexible as other PHP scraper libraries.

- Not suitable for parsing complex or heavily-formatted HTML pages.

How to Do Scraping with DiDOM

Install the DiDOM PHP web scraping library using the composer command in your environment.

composer require imangazaliev/didom

The next step is to create a Document instance based on the target URL and then use DiDOM's built-in find() method to search the page for the relevant HTML elements.

// Composer's autoloader

require __DIR__ . "/vendor/autoload.php";

use DiDom\Document;

// Create a Document instance based on the FreeCodeCamp News Page

$document = new Document("https://www.freecodecamp.org/news/", true);

// Search for the article title using the <h2> element

// and its corresponding class

$titles = $document->find("h2.post-card-title");

// Search for the article tags using the <span> element

// and its corresponding class

$tags = $document->find("span.post-card-tags");

// Search for the author using the <a> element

// and its corresponding class

$authors = $document->find("a.meta-item");

Use the array_map() function to organize the extracted data. This creates a new array with the values from each input array, which is stored in the $data variable and printed.

// Use the array_map to bind the arrays together into one array

$data = array_map(function ($title, $tag, $author) {

$new = array(

"title" => trim($title->text()),

"tag" => trim($tag->text()),

"author" => trim($author->text())

);

return $new;

}, $titles, $tags, $authors);

print_r($data);

That's how to use the DiDOM library to scrape a webpage. The final code looks like this:

// Composer's autoloader

require __DIR__ . "/vendor/autoload.php";

use DiDom\Document;

// Create a Document instance based on the FreeCodeCamp News Page

$document = new Document("https://www.freecodecamp.org/news/", true);

// Search for the article title using the <h2> element

// and its corresponding class

$titles = $document->find("h2.post-card-title");

// Search for the article tags using the <span> element

// and its corresponding class

$tags = $document->find("span.post-card-tags");

// Search for the author using the <a> element

// and its corresponding class

$authors = $document->find("a.meta-item");

// Use the array_map to bind the arrays together into one array

$data = array_map(function ($title, $tag, $author) {

$new = array(

"title" => trim($title->text()),

"tag" => trim($tag->text()),

"author" => trim($author->text())

);

return $new;

}, $titles, $tags, $authors);

print_r($data);

8. Php-Webdriver

Php-webdriver is a scraping package for PHP that provides a client for the WebDriver protocol, which allows you to control a web browser from your PHP scripts. The framework is designed to be used with Selenium, a tool for automating web browsers.

Using php-webdriver, you can write scripts that automate tasks such as filling out forms, clicking buttons and navigating to different pages on a website.

👍 Pros:

- Easy to set up and use since it requires minimal configuration to get started.

- It integrates well with other Symfony components and libraries.

- Provides a headless browser for running dynamic sites.

👎 Cons:

- It requires some set-up and configuration, including installing the package and its dependencies, implementing Selenium and running a web driver.

- It can get resource-intensive as it runs a web browser in the background.

- It may not be suitable for certain types of web scraping, such as sites that use complex JavaScript or CAPTCHAs to protect against bots.

How to Scrape a Webpage with php-Webdriver

To use php-webdriver, you'll need to install the library and its dependencies to import it into your PHP scripts.

Start by installing the php-webdriver library with the following composer command:

composer require php-webdriver/webdriver

Import the necessary classes from the php-webdriver package. Then, set up the Chrome options and the desired capabilities for the browser we want to use (Chrome, in this case) using the ChromeOptions and DesiredCapabilities classes.

require_once("vendor/autoload.php");

// Import the WebDriver classes

use Facebook\WebDriver\Chrome\ChromeDriver;

use Facebook\WebDriver\Chrome\ChromeOptions;

use Facebook\WebDriver\Remote\DesiredCapabilities;

use Facebook\WebDriver\WebDriverBy;

// Create an instance of ChromeOptions:

$chromeOptions = new ChromeOptions();

// Configure $chromeOptions

$chromeOptions->addArguments(["--headless"]);

// Set up the desired capabilities

$capabilities = DesiredCapabilities::chrome();

$capabilities->setCapability("acceptInsecureCerts", true);

$capabilities->setCapability(ChromeOptions::CAPABILITY, $chromeOptions);

Next, start a new web driver session using the start() method and navigate to the URL to scrape using the get() method.

// Start a new web driver session

$driver = ChromeDriver::start($capabilities);

// Navigate to the URL you want to scrape

$driver->get("https://www.freecodecamp.org/news/");

Use CSS selectors to locate three groups of elements on the page: tags, authors and titles via the findElements() method. These elements are stored in variables called $tags, $authors and $titles, respectively. The results can then be mapped together using a mapping function.

// Extract the elements you want to scrape

$tags = $driver->findElements(WebDriverBy::cssSelector("span.post-card-tags"));

$authors = $driver->findElements(WebDriverBy::cssSelector("a.meta-item"));

$titles = $driver->findElements(WebDriverBy::cssSelector("h2.post-card-title"));

// Use the array_map to bind the arrays together into one array

$data = array_map(function ($a1, $a2, $a3) {

$new = array(

"title" => trim($a1->getText()),

"tag" => trim($a2->getText()),

"author" => trim($a3->getText())

);

return $new;

}, $titles, $tags, $authors);

print_r($data);

Then close the web driver session:

// Close the web driver

$driver->quit();

Awesome, you've successfully scraped a web page using the php-webdriver package.

This is the full code:

require_once("vendor/autoload.php");

// Import the WebDriver classes

use Facebook\WebDriver\Chrome\ChromeDriver;

use Facebook\WebDriver\Chrome\ChromeOptions;

use Facebook\WebDriver\Remote\DesiredCapabilities;

use Facebook\WebDriver\WebDriverBy;

// Create an instance of ChromeOptions:

$chromeOptions = new ChromeOptions();

// Configure $chromeOptions

$chromeOptions->addArguments(["--headless"]);

// Set up the desired capabilities

$capabilities = DesiredCapabilities::chrome();

$capabilities->setCapability("acceptInsecureCerts", true);

$capabilities->setCapability(ChromeOptions::CAPABILITY, $chromeOptions);

// Start a new web driver session

$driver = ChromeDriver::start($capabilities);

// Navigate to the URL you want to scrape

$driver->get("https://www.freecodecamp.org/news/");

// Extract the elements you want to scrape

$tags = $driver->findElements(WebDriverBy::cssSelector("span.post-card-tags"));

$authors = $driver->findElements(WebDriverBy::cssSelector("a.meta-item"));

$titles = $driver->findElements(WebDriverBy::cssSelector("h2.post-card-title"));

// Use the array_map to bind the arrays together into one array

$data = array_map(function ($a1, $a2, $a3) {

$new = array(

"title" => trim($a1->getText()),

"tag" => trim($a2->getText()),

"author" => trim($a3->getText())

);

return $new;

}, $titles, $tags, $authors);

print_r($data);

// Close the web driver

$driver->quit();

9. HTTPful

HTTPful is a chainable and readable PHP library intended to make it easy to send HTTP requests. It provides a clean interface for constructing them, including GET, POST, PUT, DELETE, and HEAD, and can also be used to send custom HTTP methods.

👍 Pros:

- Easy to work with.

- Clean and chainable methods and interfaces for creating complex HTTP requests.

- You can send requests using cURL and sockets, giving you flexibility in how you make them.

- The package parses responses as XML, JSON or plain text, making it easy to work with different types of data.

- It provides helper methods for sending multi-part form data.

👎 Cons:

- It isn't as feature-rich as some other HTTP libraries for PHP. For example, it doesn't support asynchronous requests or advanced authentication methods.

- Not as well maintained as some other libraries. The latest release was in 2020, and the project appears inactive.

How to Scrape Hand-in-hand with HTTPful

Install the package with the following composer command:

composer require nategood/httpful

Include the HTTPful library and send a GET request to the specified URL using the Request::get() method, which returns the page's HTML response. Afterward, check the response's code property to make sure the request was successful.

require_once("vendor/autoload.php");

// Next, define the URL you want to scrape

$url = "https://www.freecodecamp.org/news/";

// Send a GET request to the URL using HTTPful

$response = \Httpful\Request::get($url)->send();

// Check the status code of the response to make sure it was successful

if ($response->code == 200) {

// code to be executed

} else {

// If the request was not successful, you can handle the error here

echo "Error: " . $response->code . "\n";

}
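The else branch above only prints the status code. One common way to handle a failed request is to try again when the status looks temporary. Here's a minimal sketch of that idea, assuming that 429 and 5xx responses are worth retrying; the fetchWithRetry() helper, its parameters and the fake fetcher are hypothetical and not part of HTTPful.

```php
<?php
// Hypothetical helper (not part of HTTPful): repeats a request while the
// status code looks transient. $fetch is any callable that returns an object
// with a numeric `code` property, for example:
//   fn () => \Httpful\Request::get($url)->send()
function fetchWithRetry(callable $fetch, int $maxAttempts = 3, int $delayMs = 500): object
{
    $attempt = 0;
    while (true) {
        $attempt++;
        $response = $fetch();
        $code = (int) $response->code;

        // Assumption: "too many requests" and server errors are worth retrying
        $transient = $code === 429 || $code >= 500;
        if (!$transient || $attempt >= $maxAttempts) {
            return $response;
        }

        // Wait a little longer before each new attempt
        usleep($delayMs * 1000 * $attempt);
    }
}

// Fake fetcher for illustration: fails twice, then succeeds
$calls = 0;
$fake = function () use (&$calls) {
    $calls++;
    return (object) ["code" => $calls < 3 ? 503 : 200];
};

$response = fetchWithRetry($fake, 3, 0);
echo "Status " . $response->code . " after " . $calls . " attempts\n";
```

The helper returns the last response either way, so the status check shown above still decides what happens next.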

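If the request is successful, we access the body of the response (using the body property). HTTPful doesn't parse HTML itself, so we use the Simple HTML DOM Parser library to parse the response with the str_get_html() method. This assumes the simple_html_dom.php file is in your project directory.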

Find the elements on the page by their descendants and class names using the find() method, then store the elements in variables: $titles, $tags, and $authors. Just as in the cURL section, you can use the mapping function to group the titles, tags and authors together.

// If the request was successful,

// you can access the body of the response like this:

$html = $response->body;

// You can then use the Simple HTML DOM Parser to

// parse the HTML and extract the data you want.

include("simple_html_dom.php");

// Create DOM object using the str_get_html method

$html = str_get_html($html);

// Find elements by descendants and class names

$titles = $html->find("h2.post-card-title");

$tags = $html->find("span.post-card-tags");

$authors = $html->find("a.meta-item");
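One thing to keep in mind before grouping the three lists: when array_map() receives arrays of different lengths, it pads the shorter ones with null, so the callback would end up reading a property of null if a card is missing a tag or an author. The sketch below is one possible way around that, assuming you've already pulled the text out of each element; zipRecords() and the sample values are made up for illustration.

```php
<?php
// Hypothetical helper (not part of HTTPful or Simple HTML DOM):
// pairs up three lists of strings and stops at the shortest one.
function zipRecords(array $titles, array $tags, array $authors): array
{
    // Re-index so positions line up even if the inputs had gaps in their keys
    $titles = array_values($titles);
    $tags = array_values($tags);
    $authors = array_values($authors);

    $count = min(count($titles), count($tags), count($authors));

    $records = [];
    for ($i = 0; $i < $count; $i++) {
        $records[] = [
            "title" => trim($titles[$i]),
            "tag" => trim($tags[$i]),
            "author" => trim($authors[$i]),
        ];
    }
    return $records;
}

// Made-up sample values: one tag fewer than titles and authors
$records = zipRecords(
    ["First post", "Second post", "Third post"],
    ["#PHP", "#JavaScript"],
    ["Ann", "Bob", "Cy"]
);

print_r($records);
```

Stopping at the shortest list drops incomplete rows instead of failing. If you'd rather keep them, you could fill the missing fields with an empty string.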

Awesome, you've made it! Here's the full code:

require_once("vendor/autoload.php");

// Next, define the URL you want to scrape

$url = "https://www.freecodecamp.org/news/";

// Send a GET request to the URL using HTTPful

$response = \Httpful\Request::get($url)->send();

// Check the status code of the response to make sure it was successful

if ($response->code == 200) {

// If the request was successful,

// you can access the body of the response like this:

$html = $response->body;

// You can then use the Simple HTML DOM Parser to

// parse the HTML and extract the data you want.

include("simple_html_dom.php");

// Create DOM object using the str_get_html method

$html = str_get_html($html);

// Find elements by descendants and class names

$titles = $html->find("h2.post-card-title");

$tags = $html->find("span.post-card-tags");

$authors = $html->find("a.meta-item");

// Iterate over the arrays containing the elements

// and use the plaintext property to access only the text values

$data = array_map(function ($a1, $a2, $a3) {

$new = array(

"title" => trim($a1->plaintext),

"tag" => trim($a2->plaintext),

"author" => trim($a3->plaintext)

);

return $new;

}, $titles, $tags, $authors);

print_r($data);

} else {

// If the request was not successful, you can handle the error here

echo "Error: " . $response->code . "\n";

}
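HTTPful only fetches the page, which is why the example above leans on Simple HTML DOM for parsing. If you'd rather not add a second parser, PHP's bundled DOM extension can run the same kind of lookup on the response body. This is a swapped-in technique, not what the example above uses. The sketch assumes the class names shown earlier; textsByClass() and the sample HTML string are made up for illustration.

```php
<?php
// Hypothetical helper: returns the trimmed text of every <$tag> element
// whose class attribute contains $class as a whole word.
function textsByClass(string $html, string $tag, string $class): array
{
    $dom = new DOMDocument();

    // Real-world HTML is rarely valid, so collect parser warnings
    // instead of printing them
    $previous = libxml_use_internal_errors(true);
    $dom->loadHTML($html);
    libxml_clear_errors();
    libxml_use_internal_errors($previous);

    $xpath = new DOMXPath($dom);
    $query = sprintf(
        "//%s[contains(concat(' ', normalize-space(@class), ' '), ' %s ')]",
        $tag,
        $class
    );

    $texts = [];
    foreach ($xpath->query($query) as $node) {
        $texts[] = trim($node->textContent);
    }
    return $texts;
}

// Stand-in for $response->body
$sample = '<div><h2 class="post-card-title"> Learn PHP </h2>'
    . '<span class="post-card-tags">#PHP</span>'
    . '<a class="meta-item" href="#">Jane Doe</a></div>';

print_r(textsByClass($sample, "h2", "post-card-title"));
```

The XPath expression is longer than a CSS selector, which is the trade-off for not needing an extra file.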

10. hQuery

hQuery is used for parsing and manipulating HTML documents. It's based on the jQuery API, so you should find hQuery easy to use if you're familiar with jQuery. This web scraping library uses a DOM parser to parse HTML and provide a jQuery-like interface for navigating and manipulating the document.

You can use hQuery to find elements in the document, modify their attributes or content and perform other common tasks.

👍 Pros:

- Easy to use PHP web scraping library, especially if you're familiar with jQuery.

- Great interface for navigating and manipulating HTML documents.

👎 Cons:

- It may not be as efficient or performant as other options, especially for very large HTML documents.

Steps to Scrape a Page with hQuery

Let's add hQuery to our coding environment via composer:

composer require duzun/hquery

Include the hQuery library and autoloader at the top of the file. Next, define a URL to scrape. Then, download the HTML content of this website and store it in a variable called $doc using the hQuery method called fromUrl(). This will load the HTML directly from the URL we pass.

// Require the autoloader

require "vendor/autoload.php";

// reference the hQuery library

use duzun\hQuery;

// Define the URL to scrape

$url = "https://www.freecodecamp.org/news/";

// Download the HTML content of the URL

$doc = hQuery::fromUrl($url);

Once the document is loaded, we can use other hQuery methods to navigate and manipulate it. One of them is find(), which we use to search for three types of elements: h2 elements with the class post-card-title, span elements with the class post-card-tags, and a elements with the class meta-item. These contain the data we want to extract from the page.

// Find the title, tag and author elements and unpack each result into an array

$titles = [...$doc->find("h2.post-card-title")];

$tags = [...$doc->find("span.post-card-tags")];

$authors = [...$doc->find("a.meta-item")];
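The spread operator in the snippet above only works on arrays and Traversable objects. Assuming find() can return null when no element matches (worth checking against the hQuery version you have installed), unpacking the result directly would throw an error. A small guard like the hypothetical toList() helper below is one way to sketch around that.

```php
<?php
// Hypothetical helper (not part of hQuery): turns whatever a lookup
// returned into a plain, zero-indexed array.
function toList(?iterable $nodes): array
{
    if ($nodes === null) {
        // Nothing matched: hand back an empty list instead of failing
        return [];
    }
    if (is_array($nodes)) {
        return array_values($nodes);
    }
    // Second argument false: ignore the iterator's own keys
    return iterator_to_array($nodes, false);
}

// Stand-ins for find() results
var_dump(toList(null));
var_dump(toList(new ArrayIterator(["a" => "First", "b" => "Second"])));
```

With hQuery, the calls would then look like toList($doc->find("h2.post-card-title")) in place of the spread.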

Use the array_map() function to organize the extracted data. This creates a new array with the values from each input array, which is stored in the $data variable and printed.

// Use the array_map to bind the arrays together into one array

$data = array_map(function ($a1, $a2, $a3) {

$new = array(

"title" => trim($a1->text()),

"tag" => trim($a2->text()),

"author" => trim($a3->text())

);

return $new;

}, $titles, $tags, $authors);

print_r($data);

The final code looks like this:

// Require the autoloader

require "vendor/autoload.php";

// reference the hQuery library

use duzun\hQuery;

// Define the URL to scrape

$url = "https://www.freecodecamp.org/news/";

// Download the HTML content of the URL

$doc = hQuery::fromUrl($url);

// Find the title, tag and author elements and unpack each result into an array

$titles = [...$doc->find("h2.post-card-title")];

$tags = [...$doc->find("span.post-card-tags")];

$authors = [...$doc->find("a.meta-item")];

// Use the array_map to bind the arrays together into one array

$data = array_map(function ($a1, $a2, $a3) {

$new = array(

"title" => trim($a1->text() ),

"tag" => trim($a2->text() ),

"author" => trim($a3->text() )

);

return $new;

}, $titles, $tags, $authors);

print_r($data);

That's it! This code downloads and parses the webpage and uses hQuery to extract specific pieces of data from it.

Conclusion

Web scraping with PHP can be a smooth process when you use the right libraries and techniques. In this article, we covered the ten best PHP web scraping libraries to use in 2024. As a recap, they're:

- ZenRows.

- Simple HTML DOM.

- cURL.

- Goutte.

- Guzzle.

- Panther.

- DiDOM.

- PHP-Webdriver.

- HTTPful.

- hQuery.

A common challenge for PHP web scrapers is crawling web pages without triggering anti-bot systems, which can cause a lot of headaches and stress. As mentioned earlier, libraries like ZenRows solve this problem by handling the anti-bot bypass for you. Best part? You can get started for free with 1,000 API credits, and no credit card is required.

Frequently Asked Questions

What Is a Good Scraping Library for PHP?

A good scraping library for PHP is fast, efficient and easy to use. It also supports proxies, can scrape dynamic content and has great documentation. In addition, compatibility, maintenance, security and support are features to look out for. Some popular options for web scraping in PHP include Goutte, ZenRows, Panther and Simple HTML DOM.

What Is the Best PHP Web Scraping Library?

ZenRows is the best PHP web scraping library to avoid getting blocked. This is due to its ability to handle the anti-bot bypass for you, from rotating proxies and headless browsers to CAPTCHAs. ZenRows also has ready-to-use scrapers for popular websites like Instagram, YouTube and Zillow.

What Is the Most Popular PHP Library for Web Scraping?

The most popular PHP library for web scraping is Simple HTML DOM. It's an efficient library for parsing HTML. Also, it provides different methods to create DOM objects, find elements and traverse the DOM tree, plus it supports custom parsing behaviors.
