Playwright, the popular browser automation library, can gain new capabilities through Playwright Extra plugins. We'll explore the most useful ones for web scraping and learn how to use each one.

What Is Playwright Extra?

Playwright Extra is a modular framework that augments Playwright with plugins, enabling it to handle advanced use cases that require extra control and customization. For example, the Proxy Router plugin lets you switch between multiple proxies dynamically.

The Playwright-extra framework supports JavaScript and TypeScript, as well as Python for some plugins.

Getting Started with playwright-extra Plugins

Using Playwright-extra plugins requires integrating Playwright Extra with Playwright. Follow the steps below to achieve that.

Step 1: Initialize a NodeJS Project

Ensure NodeJS is installed on your machine by entering node -v in your terminal. That displays the version installed on your system.

Then, create a new project folder in your desired location, move into it, and initialize a new project.

$ mkdir project_folder

$ cd project_folder

$ npm init -y

Step 2: Install Necessary Dependencies

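If Playwright is already installed in your project, you only need to add Playwright Extra. Otherwise, install both packages, then download the Chromium browser that Playwright drives:

$ npm install playwright playwright-extra

$ npx playwright install chromium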
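To confirm the install worked before writing any scraping code, you can ask Node's module resolver whether it finds each package from your project folder. Here's a minimal sketch; findMissingPackages is a hypothetical helper of our own, not part of Playwright.

```javascript
// Hypothetical helper: list the packages Node can't resolve from the current folder
function findMissingPackages(names) {
  const missing = [];
  for (const name of names) {
    try {
      // require.resolve locates the package entry file without loading it
      require.resolve(name);
    } catch (error) {
      // Only "not installed" counts as missing; rethrow anything unexpected
      if (error.code !== 'MODULE_NOT_FOUND') throw error;
      missing.push(name);
    }
  }
  return missing;
}

const missing = findMissingPackages(['playwright', 'playwright-extra']);
console.log(missing.length === 0 ? 'All packages found' : `Missing: ${missing.join(', ')}`);
```

Save it as a .js file inside project_folder and run it with node; an empty "Missing" list means the require() calls in the next steps should resolve.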

Step 3: Create an Initial Playwright Extra Script

For a starting script, import Chromium from Playwright Extra (it wraps base Playwright, so the API is the same) and launch it in headless mode. Then, visit your target page, extract and print its text content, and close the browser.

const { chromium } = require('playwright-extra');

chromium.launch({ headless: true }).then(async browser => {

const page = await browser.newPage()

// Navigate to target URL

await page.goto('https://www.example.com');

// Get the page's text content

const textContent = await page.evaluate(() => document.body.textContent);

// Print it to the console

console.log(textContent);

await browser.close()

})

Note: To use a different browser, like Firefox, modify the code by replacing chromium with firefox.
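The initial script chains .then() and never closes the browser if navigation throws. As a variation, here's a minimal sketch of the same flow using async/await with try/finally. The getPageText name and its browserName parameter are our own; the parameter simply picks chromium, firefox, or webkit from playwright-extra, in line with the note above.

```javascript
// Browser types exported by playwright-extra
const SUPPORTED_BROWSERS = ['chromium', 'firefox', 'webkit'];

// Hypothetical helper: open a page, return its text content, always close the browser
async function getPageText(url, browserName = 'chromium') {
  if (!SUPPORTED_BROWSERS.includes(browserName)) {
    throw new Error(`Unsupported browser: ${browserName}`);
  }
  // Loaded here so the helper only needs playwright-extra when it actually runs
  const browserType = require('playwright-extra')[browserName];
  const browser = await browserType.launch({ headless: true });
  try {
    const page = await browser.newPage();
    await page.goto(url);
    return await page.evaluate(() => document.body.textContent);
  } finally {
    // Runs whether navigation succeeded or threw
    await browser.close();
  }
}
```

For example, getPageText('https://www.example.com', 'firefox').then(console.log).catch(console.error) prints the page text using Firefox. Note that Firefox and WebKit need their own browser download first (npx playwright install firefox, for instance).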

Let's explore the Playwright-extra plugins next.

Playwright Extra Plugins for Web Scraping

Here's a list of Playwright Extra plugins tailored for web scraping, ordered from most to least popular.

Playwright Extra Stealth (Most Popular)

The Playwright Extra Stealth plugin brings the popular Puppeteer Extra Stealth plugin (puppeteer-extra-plugin-stealth) to Playwright.

Base Playwright exposes built-in properties, such as navigator.webdriver being set to true, that make it easy for anti-bot systems to identify it as a scraper. The Stealth plugin masks those properties, making your automated browser harder for websites to detect.

We published a comprehensive tutorial on how Playwright Stealth works and how to use it. Check it out for more.
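For a quick taste before the full tutorial, here's a minimal sketch that registers Stealth and reports what a page sees in navigator.webdriver. It assumes you've run npm install puppeteer-extra-plugin-stealth and follows the chromium.use() registration pattern used throughout this article; the helper names getStealthChromium and readWebdriverFlag are our own.

```javascript
// Cached Chromium instance with Stealth registered (set on first use)
let stealthChromium = null;

function getStealthChromium() {
  if (!stealthChromium) {
    const { chromium } = require('playwright-extra');
    const StealthPlugin = require('puppeteer-extra-plugin-stealth');
    // Register Stealth once; later calls reuse the same instance
    chromium.use(StealthPlugin());
    stealthChromium = chromium;
  }
  return stealthChromium;
}

// Hypothetical helper: report what the page sees in navigator.webdriver
async function readWebdriverFlag(url) {
  const browser = await getStealthChromium().launch({ headless: true });
  try {
    const page = await browser.newPage();
    await page.goto(url);
    // Unpatched automated Chromium reports true; Stealth aims to hide this signal
    return await page.evaluate(() => navigator.webdriver);
  } finally {
    await browser.close();
  }
}
```

Try readWebdriverFlag('https://www.example.com').then((flag) => console.log('navigator.webdriver:', flag)), then compare with a run that skips chromium.use() to see the difference the plugin makes.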

Proxy Router Plugin

The Proxy Router plugin is a Playwright Extra extension for managing proxies seamlessly.

This feature is helpful in avoiding detection, as websites often implement techniques like rate-limiting to control the number of requests from a specific IP address over a certain time frame.

Proxy Router creates a local proxy server that acts as a middleman between you and your configured upstream servers, which offers increased anonymity, caching, and bandwidth saving.

When you make a request, this local server connects to the proxy servers you've configured, relaying connections based on user-defined routing. It also handles authentication.
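The user-defined routing is configured through the plugin's routeByHost option. As we understand the plugin's README, the callback receives the request's host and returns either a proxy name from proxies, 'DIRECT' to skip proxies, or 'ABORT' to block the connection; verify this against the version you install. The rules in chooseRoute below are hypothetical examples, and createProxyRouter needs the @extra/proxy-router package installed as shown in the next section.

```javascript
// Hypothetical routing rules; the host names are examples only
function chooseRoute({ host }) {
  // Block a tracking domain outright
  if (host === 'doubleclick.net' || host.endsWith('.doubleclick.net')) return 'ABORT';
  // Reach this host without any proxy
  if (host === 'www.example.com') return 'DIRECT';
  // Everything else goes through the proxy named DEFAULT
  return 'DEFAULT';
}

// Builds the plugin with the rules above
function createProxyRouter() {
  const ProxyRouter = require('@extra/proxy-router');
  return ProxyRouter({
    proxies: { DEFAULT: 'http://164.132.170.100:80' },
    routeByHost: async ({ host }) => chooseRoute({ host }),
  });
}
```

Register it with chromium.use(createProxyRouter()) before launching. Keeping the rules in a plain function means you can check them without starting a browser.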

How to Use Proxy Router

To get started, install the plugin with the command below:

npm install @extra/proxy-router

Then, import the necessary dependencies, add the plugin using chromium.use(), and define your proxy credentials. If you don't have a paid web scraping proxy yet, you can grab a free one from FreeProxyList.

const { chromium } = require('playwright-extra')

// Configure and add the proxy router plugin with a default proxy

const ProxyRouter = require('@extra/proxy-router')

chromium.use(

ProxyRouter({

proxies: { DEFAULT: 'http://164.132.170.100:80' },

})

)

Lastly, launch the browser and write a Playwright scraper just like the initial script above: navigate to the target URL and print the page's text content. As the target URL, use HTTPBin (httpbin.io/ip), which displays the IP address your request comes from.

//..

chromium.launch({ headless: true }).then(async browser => {

const page = await browser.newPage()

// Navigate to target URL

await page.goto('http://httpbin.io/ip');

// Get the page's text content

const textContent = await page.evaluate(() => document.body.textContent);

console.log(textContent);

await browser.close()

})

Putting it all together, here's your complete code:

const { chromium } = require('playwright-extra')

// Configure and add the proxy router plugin with a default proxy

const ProxyRouter = require('@extra/proxy-router')

chromium.use(

ProxyRouter({

proxies: { DEFAULT: 'http://164.132.170.100:80' },

})

)

chromium.launch({ headless: true }).then(async browser => {

const page = await browser.newPage()

// Navigate to target URL

await page.goto('http://httpbin.io/ip');

// Get the page's text content

const textContent = await page.evaluate(() => document.body.textContent);

console.log(textContent);

await browser.close()

})

/*

{

"origin": "164.132.170.100"

}

*/

Awesome! Run it, and your result will be your proxy IP address, which proves you've successfully routed your request using the Proxy Router plugin.
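If you'd rather check this in code than by eye, you can parse HTTPBin's JSON and compare its origin field with your proxy's host. Here's a minimal sketch; usesProxy is a hypothetical helper, and it assumes IPv4 addresses and that origin may contain a port or several comma-separated addresses.

```javascript
// Hypothetical helper: does HTTPBin's reported origin match the proxy's host?
function usesProxy(body, proxyUrl) {
  const { origin } = JSON.parse(body);
  const proxyHost = new URL(proxyUrl).hostname;
  return String(origin)
    .split(',')
    // Drop a trailing ":port" (IPv4 only)
    .map((entry) => entry.trim().split(':')[0])
    .includes(proxyHost);
}
```

In the script above, console.log(usesProxy(textContent, 'http://164.132.170.100:80')) should print true when the request went through the proxy.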

However, websites can detect and block IP addresses sending too many requests. Thus, for better results, you need to rotate between multiple proxies dynamically. And fortunately, the Proxy Router plugin allows you to do that.

How? Follow the next few steps.

First, define your proxy list. Feel free to grab more fresh free proxies from FreeProxyList for testing purposes.

const { chromium } = require('playwright-extra');

const ProxyRouter = require('@extra/proxy-router');

// Configure the proxy router plugin

const proxyRouter = ProxyRouter({

proxies: {

DEFAULT: 'http://217.11.184.20:3128',

PROXY2: 'http://20.33.5.27:8888',

PROXY3: 'http://45.133.72.252:3128',

// Define more proxies as needed

},

});

Next, add the plugin, launch the browser, and define the target URL along with the names of the proxies to rotate through.

// Add the plugin

chromium.use(proxyRouter);

chromium.launch({ headless: true }).then(async browser => {

// Define target URL

const url = 'http://httpbin.io/ip';

// List of proxies to use

const proxyNames = ['DEFAULT', 'PROXY2', 'PROXY3'];

//..

})

To switch proxies between requests, use the plugin's routeByHost option, a callback the plugin calls to get the name of the proxy for a connection. Keep the current proxy name in a variable that the callback returns. Then loop through the proxy list: update the variable, navigate to the target URL, and extract the page content.

//..

// Name of the proxy the router should use next

let currentProxy = 'DEFAULT';

// Configure the proxy router plugin

const proxyRouter = ProxyRouter({

proxies: {

DEFAULT: 'http://217.11.184.20:3128',

PROXY2: 'http://20.33.5.27:8888',

PROXY3: 'http://45.133.72.252:3128',

// Define more proxies as needed

},

// Send every connection through the proxy selected in the loop

routeByHost: async () => currentProxy,

});

//..

// Loop through each proxy

for (const proxyName of proxyNames) {

// Select the proxy for this iteration

currentProxy = proxyName;

// Each browser.newPage() call creates a new browser context

const page = await browser.newPage();

// Navigate to the target page

await page.goto(url, { waitUntil: 'domcontentloaded' });

// Get the text content of the page

const textContent = await page.evaluate(() => document.body.textContent);

console.log(textContent);

// Close the page (and its context) before the next iteration

await page.close();

}

await browser.close();

});

Putting everything together, here's the complete code:

const { chromium } = require('playwright-extra');

const ProxyRouter = require('@extra/proxy-router');

// Name of the proxy the router should use next

let currentProxy = 'DEFAULT';

// Configure the proxy router plugin

const proxyRouter = ProxyRouter({

proxies: {

DEFAULT: 'http://217.11.184.20:3128',

PROXY2: 'http://20.33.5.27:8888',

PROXY3: 'http://45.133.72.252:3128',

// Define more proxies as needed

},

// Send every connection through the proxy selected in the loop

routeByHost: async () => currentProxy,

});

// Add the plugin

chromium.use(proxyRouter);

chromium.launch({ headless: true }).then(async browser => {

// Define target URL

const url = 'http://httpbin.io/ip';

// List of proxies to use

const proxyNames = ['DEFAULT', 'PROXY2', 'PROXY3'];

// Loop through each proxy

for (const proxyName of proxyNames) {

// Select the proxy for this iteration

currentProxy = proxyName;

// Each browser.newPage() call creates a new browser context

const page = await browser.newPage();

// Navigate to the target page

await page.goto(url, { waitUntil: 'domcontentloaded' });

// Get the text content of the page

const textContent = await page.evaluate(() => document.body.textContent);

console.log(textContent);

// Close the page (and its context) before the next iteration

await page.close();

}

await browser.close();

});

Run the script, and you'll get a similar result to the one below.

{

"origin": "217.11.184.20"

}

{

"origin": "20.33.5.27"

}

{

"origin": "45.133.72.252"

}

Congrats, you've successfully rotated proxies using Proxy Router, as the result shows different IP addresses for each request.
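When you have more URLs than proxies, you'll want to cycle through the list rather than run out after one pass. A round-robin picker is the simplest way to do that; here's a minimal sketch, where createRotator is a hypothetical helper of our own.

```javascript
// Hypothetical helper: returns a function that yields proxy names in round-robin order
function createRotator(names) {
  if (names.length === 0) {
    throw new Error('At least one proxy name is required');
  }
  // Copy so later changes to the caller's array don't affect rotation
  const pool = [...names];
  let index = 0;
  return () => {
    const name = pool[index];
    // Wrap back to the first proxy after the last one
    index = (index + 1) % pool.length;
    return name;
  };
}
```

Create it once with createRotator(['DEFAULT', 'PROXY2', 'PROXY3']), then call the returned function before each navigation and hand its result to whatever selects the proxy, such as the variable your routeByHost callback returns. Round-robin spreads requests evenly; if some proxies fail often, you might prefer skipping them instead.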

Check out Proxy Router's GitHub page to learn more.

ReCAPTCHA Plugin

Websites often use CAPTCHAs to block automated traffic, which poses a major challenge for Playwright. The reCAPTCHA plugin lets Playwright solve reCAPTCHA and hCaptcha, two of the most common types, using a third-party CAPTCHA-solving service.

When a CAPTCHA challenge is detected, the plugin sends it to the chosen service's API, which automatically returns the solution.

How to Use the reCAPTCHA Plugin

To use the reCAPTCHA plugin, you need a CAPTCHA-solving service the plugin supports. Currently, 2Captcha is the only built-in provider, but you can plug in a different service by passing the plugin your own solver function.

For this tutorial, we'll use 2Captcha. Sign up to get your API key (at the time of writing, normal CAPTCHAs cost about $1 per 1,000; reCAPTCHA solving is priced separately), then follow the steps below.

Install puppeteer-extra-plugin-recaptcha.

npm install puppeteer-extra-plugin-recaptcha

Import Playwright Extra, add the reCaptcha plugin and provide it with your 2Captcha credentials.

const { chromium } = require('playwright-extra')

// add recaptcha plugin and provide it your 2captcha credentials

const RecaptchaPlugin = require('puppeteer-extra-plugin-recaptcha')

chromium.use(

RecaptchaPlugin({

provider: {

id: '2captcha',

token: 'Your_API_key' // REPLACE THIS WITH YOUR OWN 2CAPTCHA API KEY ⚡

}

})

)

As in the initial base script, open an async function and write your Playwright code: launch a browser instance, navigate to the target URL, and wait for the CAPTCHA to load. For this example, we'll target 2Captcha's reCAPTCHA v2 demo page.

// Launch Chromium browser in headless mode

chromium.launch({ headless: true }).then(async browser => {

// Open a new page

const page = await browser.newPage();

// Navigate to target URL and wait for Captcha to load

await page.goto('https://2captcha.com/demo/recaptcha-v2', { waitUntil: 'networkidle' });

//..

})

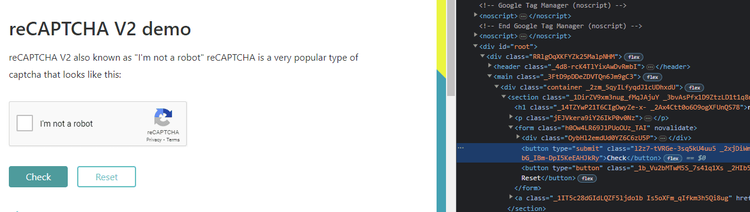

Call the page.solveRecaptchas() method to solve the reCAPTCHA automatically (see details below), then use page.click() to click the Check button. Finally, take a screenshot and close the browser.

The page.click() method takes a selector for the element you want to click. The code below uses Playwright's text selector (text=Check), which is more robust than the button's generated CSS class (button.l2z7-tVRGe-3sq5kU4uu5 at the time of writing), since class names like that tend to change when the site is rebuilt.

//..

chromium.launch({ headless: true }).then(async browser => {

//..

// The single line of code to solve reCaptchas

await page.solveRecaptchas()

// Click the Check button

await page.click('text=Check');

// Wait a few seconds

await page.waitForTimeout(5000);

console.log('Taking a screenshot...');

// Take a screenshot and save to project folder

await page.screenshot({ path: 'screenshot.png', fullPage: true });

console.log('Screenshot saved');

await browser.close();

});

In the code snippet above, page.solveRecaptchas() makes the plugin detect any active CAPTCHA challenges on the page, extract their configuration, and pass them to the CAPTCHA-solving service (2Captcha). The method then retrieves the solutions and injects them back into the page.
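page.solveRecaptchas() resolves with a result object, so you can skip clicking Check when solving failed. Here's a minimal sketch, assuming the result exposes a solved array and an error field as shown in the plugin's README; solvedAnyCaptcha is a hypothetical helper, and you should confirm the field names against the version you install.

```javascript
// Hypothetical helper; assumes the result shape { solved: [...], error } from the plugin README
function solvedAnyCaptcha(result) {
  // No result at all means nothing was solved
  if (!result) return false;
  if (result.error) {
    console.error('CAPTCHA solving reported an error:', result.error);
    return false;
  }
  // At least one entry in `solved` means the page received a solution
  return Array.isArray(result.solved) && result.solved.length > 0;
}
```

In the script, store the result with const result = await page.solveRecaptchas() and only run await page.click('text=Check') when solvedAnyCaptcha(result) returns true.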

Putting everything together, here's your complete CAPTCHA-solving script:

const { chromium } = require('playwright-extra')

// add recaptcha plugin and provide it your 2captcha credentials

const RecaptchaPlugin = require('puppeteer-extra-plugin-recaptcha')

chromium.use(

RecaptchaPlugin({

provider: {

id: '2captcha',

token: 'Your_API_key' // REPLACE THIS WITH YOUR OWN 2CAPTCHA API KEY ⚡

}

})

)

//Launch Chromium browser in headless mode

chromium.launch({ headless: true }).then(async browser => {

// Open a new page

const page = await browser.newPage();

// Navigate to target URL

await page.goto('https://2captcha.com/demo/recaptcha-v2', { waitUntil: 'networkidle' });

// The single line of code to solve reCAPTCHAs

await page.solveRecaptchas()

// Click the Check button

await page.click('text=Check');

// Wait a few seconds

await page.waitForTimeout(5000);

console.log('Taking a screenshot...');

// Take a screenshot and save to project folder

await page.screenshot({ path: 'screenshot.png', fullPage: true });

console.log('Screenshot saved');

await browser.close();

});

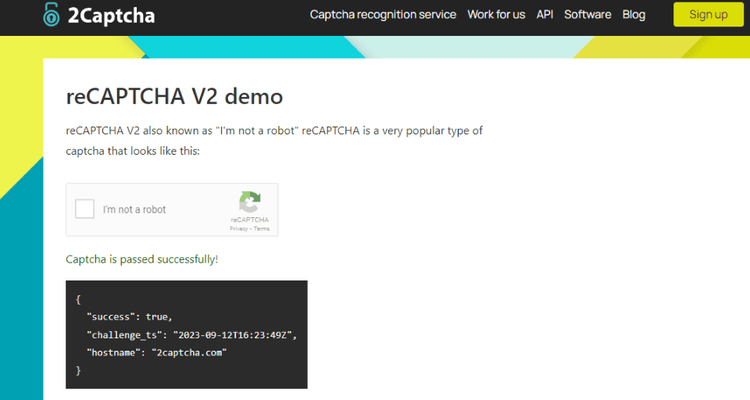

Run the code, and you'll get the following screenshot:

Bingo! As the screenshot shows, you solved the demo reCAPTCHA. To make your scraper faster, you can also learn how to block resources in Playwright.

The reCAPTCHA plugin documentation provides more information.

Using Proxies while Solving CAPTCHAs

If you want to use a specific proxy while solving CAPTCHAs, set the environment variables 2CAPTCHA_PROXY_TYPE and 2CAPTCHA_PROXY_ADDRESS.

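There are different ways to set them. Because both names start with a digit, most shells won't let you export them directly, so the easiest option is to assign them through the process.env object at the top of your script. Below is an example.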

const { chromium } = require('playwright-extra')

const RecaptchaPlugin = require('puppeteer-extra-plugin-recaptcha')

// Set the environment variables for the 2Captcha proxy

process.env['2CAPTCHA_PROXY_TYPE'] = 'HTTP'; // Adjust based on your proxy type

process.env['2CAPTCHA_PROXY_ADDRESS'] = 'YOUR_PROXY_ADDRESS'; // Replace with your proxy address

// add recaptcha plugin and provide it your 2captcha credentials

chromium.use(

RecaptchaPlugin({

provider: {

id: '2captcha',

token: 'Your_API_key' // REPLACE THIS WITH YOUR OWN 2CAPTCHA API KEY ⚡

}

})

)

// Launch Chromium browser in headless mode

chromium.launch({ headless: true }).then(async browser => {

// Open a new page

const page = await browser.newPage();

// Navigate to target URL

await page.goto('https://2captcha.com/demo/recaptcha-v2', { waitUntil: 'networkidle' });

// The single line of code to solve reCAPTCHAs

await page.solveRecaptchas()

// Click the Check button

await page.click('text=Check');

// Wait a few seconds for the result to appear

await page.waitForTimeout(5000);

console.log('Taking a screenshot...');

// Take a screenshot and save to project folder

await page.screenshot({ path: 'screenshot.png', fullPage: true });

console.log('Screenshot saved');

await browser.close();

});
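If your proxy is stored as a URL, a small helper can fill in both variables for you. This is a minimal sketch; setTwoCaptchaProxyEnv is a hypothetical helper, and it assumes 2Captcha's documented formats: a type of HTTP, HTTPS, SOCKS4, or SOCKS5, and an address written as [login:password@]host:port.

```javascript
// 2Captcha's documented proxy types
const TWO_CAPTCHA_PROXY_TYPES = ['HTTP', 'HTTPS', 'SOCKS4', 'SOCKS5'];
// The URL parser drops default ports (80/443), so restore them for HTTP(S)
const DEFAULT_PORTS = { HTTP: '80', HTTPS: '443' };

// Hypothetical helper: fill 2CAPTCHA_PROXY_TYPE and 2CAPTCHA_PROXY_ADDRESS from a proxy URL
function setTwoCaptchaProxyEnv(proxyUrl, env = process.env) {
  const url = new URL(proxyUrl);
  // 'http:' becomes 'HTTP', 'socks5:' becomes 'SOCKS5'
  const type = url.protocol.slice(0, -1).toUpperCase();
  if (!TWO_CAPTCHA_PROXY_TYPES.includes(type)) {
    throw new Error(`Unsupported proxy type: ${type}`);
  }
  const port = url.port || DEFAULT_PORTS[type];
  if (!port) {
    throw new Error('Proxy URL must include a port');
  }
  // Optional credentials become the login:password@ prefix
  const credentials = url.username
    ? `${decodeURIComponent(url.username)}:${decodeURIComponent(url.password)}@`
    : '';
  env['2CAPTCHA_PROXY_TYPE'] = type;
  env['2CAPTCHA_PROXY_ADDRESS'] = `${credentials}${url.hostname}:${port}`;
  return env;
}
```

Call it once, for example setTwoCaptchaProxyEnv('http://user:pass@203.0.113.10:3128'), in place of the two manual process.env assignments. Passing a plain object as the second argument lets you check the result without touching process.env.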

Common Questions

How to Use Different Browsers

Base Playwright supports Chromium, Firefox, and WebKit, and Playwright Extra lets you use its plugins with any of them.

Import the browser type you want from playwright-extra and register plugins with its .use() method. Below is an example.

// Any browser supported by Playwright can be used with plugins

// `plugin` stands for any plugin instance, such as ProxyRouter({ ... })

import { chromium, firefox, webkit } from 'playwright-extra'

chromium.use(plugin)

firefox.use(plugin)

webkit.use(plugin)

Scaling up: Multiple Playwrights with Different Plugins

Large-scale scraping with Playwright often requires multiple instances with different configuration needs. In this case, Playwright Extra lets you use different plugins for each instance.

However, NodeJS caches imported modules, so every import of playwright-extra returns the same instance. As a result, a plugin you register in one part of your code also applies everywhere else that imports playwright-extra.

Luckily, Playwright Extra lets you create independent instances with its addExtra function. Import addExtra and use it to wrap a base Playwright browser type as many times as needed. In the example below, chromium1 and chromium2 are distinct instances and don't share plugins or configurations.

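// Use `addExtra` to create fresh, independent instances

import playwright from 'playwright'

import { addExtra } from 'playwright-extra'

import StealthPlugin from 'puppeteer-extra-plugin-stealth'

import ProxyRouter from '@extra/proxy-router'

const chromium1 = addExtra(playwright.chromium)

const chromium2 = addExtra(playwright.chromium)

// Only chromium1 uses Stealth

chromium1.use(StealthPlugin())

// Only chromium2 routes traffic through a proxy

chromium2.use(ProxyRouter({ proxies: { DEFAULT: 'http://164.132.170.100:80' } }))

// chromium1 and chromium2 don't share plugins or configuration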

TypeScript Support for playwright-extra

Playwright Extra and its plugins support TypeScript out of the box, so you get type checking and autocompletion without extra setup. Follow the steps below to use TypeScript with Playwright Extra.

Since you've already created your package.json file, install TypeScript and the required dependencies using the following command.

npm install --save-dev typescript @types/node esbuild esbuild-register

Bootstrap a tsconfig.json file, which holds the TypeScript compiler configuration.

npx tsc --init --target ES2020 --lib ES2020 --module commonjs --rootDir src --outDir dist

Rename your script from .js to .ts and replace CommonJS require() calls with ES module imports. For example, change const { chromium } = require('playwright-extra') to import { chromium } from 'playwright-extra'.

Your initial script will look like this in TypeScript:

import { chromium } from 'playwright-extra'

// Launch Chromium browser in headless mode

chromium.launch({ headless: true }).then(async browser => {

// Open a new page

const page = await browser.newPage();

console.log('Taking a screenshot...');

// Navigate to target URL and wait until network idle

await page.goto('https://www.example.com', { waitUntil: 'networkidle' });

// Take a screenshot and save to screenshot.png

await page.screenshot({ path: 'screenshot.png', fullPage: true });

console.log('Screenshot saved as screenshot.png');

await browser.close();

});

Lastly, create a source folder (mkdir src) and place your script in src/project.ts. You can run your TypeScript using the command below:

node -r esbuild-register src/project.ts

Conclusion

Playwright Extra extends Playwright's functionality through plugins designed to improve your web scraping experience. For example, the Stealth plugin masks the automation properties websites use to identify Playwright as a bot, which lowers your chances of being flagged as an automated scraper.

To avoid getting blocked, we recommend our in-depth tutorial on the most popular Playwright Extra plugin: Playwright Stealth.
