Are you looking for the best way to take screenshots while testing a website or web scraping with Selenium?

You're in the right place. In this article, you'll learn how to capture screenshots with Selenium and Python:

- Option 1: Generate a screenshot of the visible part of the screen.

- Option 2: Capture a full-page screenshot.

- Option 3: Create a screenshot of a specific element.

Let's go!

We'll use ScrapingCourse.com as the demo site to test each method.

Option 1: Screenshot the Visible Part of the Screen

The most basic way to get a screenshot with Selenium is to capture the area the user can see in the browser (the viewport).

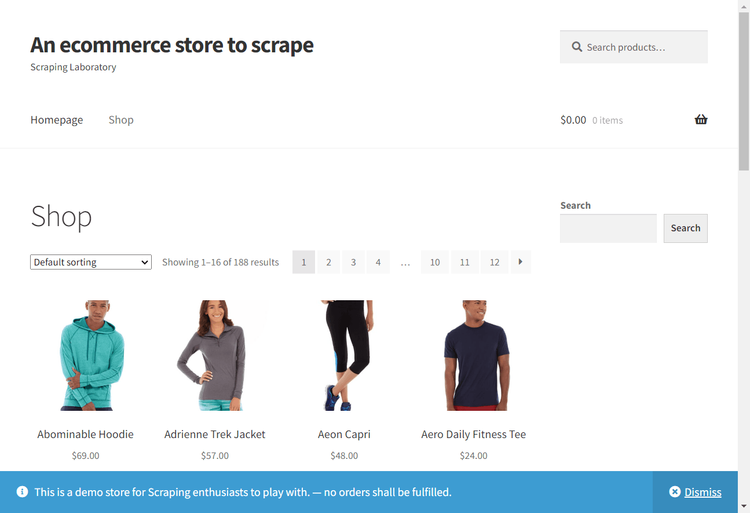

The output of using Selenium to screenshot the visible part of the screen looks like this:

Let's see how to get to the viewport screenshot above.

Start a Chrome WebDriver instance and visit the target page. Then, save the screenshot to the specified path with Selenium's built-in save_screenshot method, as shown in the code below:

# import the required library

from selenium import webdriver

# start a WebDriver instance

driver = webdriver.Chrome()

# visit the target page

driver.get("https://scrapingcourse.com/ecommerce/")

# take a screenshot of the visible part

driver.save_screenshot("screenshots/visible-part-of-screen.png")

# quit the driver

driver.quit()
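One detail worth guarding against: in Selenium's Python bindings, save_screenshot returns False instead of raising an error when the file can't be written, for example when the screenshots folder doesn't exist yet. The sketch below wraps the call in a helper that creates the folder first and fails loudly otherwise. The save_viewport_screenshot name is hypothetical and not part of Selenium; treat it as a minimal illustration rather than the recommended way.

```python
from pathlib import Path


def save_viewport_screenshot(driver, path):
    """Save a viewport screenshot, creating the target folder if needed.

    `driver` is any Selenium WebDriver instance. This helper is a hypothetical
    convenience wrapper, not part of Selenium itself.
    """
    target = Path(path)
    # create the output folder so the write cannot fail on a missing directory
    target.parent.mkdir(parents=True, exist_ok=True)
    # save_screenshot returns False (rather than raising) when the write fails
    if not driver.save_screenshot(str(target)):
        raise OSError(f"Could not save screenshot to {target}")
    return target
```

With this in place, calling save_viewport_screenshot(driver, "screenshots/visible-part-of-screen.png") would stand in for the direct save_screenshot call.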

Congratulations! You now know how to take a basic viewport screenshot with Selenium. Let's turn that into a full-page screenshot in the next section.

Option 2: Capture a Full-Page Screenshot

A full-page screenshot captures the entire web page, including the parts you'd otherwise have to scroll to see. With Chrome, the approach below resizes the browser window to the page's full scroll dimensions, which only works in headless mode.

Here's what the full-page screenshot looks like, including the content outside of the viewport:

To grab a screenshot like the one above, import the required modules into your script, set the Chrome options, and start the WebDriver in headless mode:

# import the required libraries

from selenium import webdriver

from selenium.webdriver.chrome.options import Options

from selenium.webdriver.common.by import By

# run Chrome in headless mode

options = Options()

options.add_argument("--headless")

# start a driver instance

driver = webdriver.Chrome(options=options)

Visit the target web page and define a function that returns the page's scroll dimension along a given axis. Call it for the width and the height, then use the results to set the browser window size.

# ...

# open the target website

driver.get("https://scrapingcourse.com/ecommerce/")

# define a function to get scroll dimensions

def get_scroll_dimension(axis):

    return driver.execute_script(f"return document.body.parentNode.scroll{axis}")

# get the page scroll dimensions

width = get_scroll_dimension("Width")

height = get_scroll_dimension("Height")

# set the browser window size

driver.set_window_size(width, height)
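The document.body.parentNode expression in the function above resolves to the page's html element, so it reads the same values as document.documentElement.scrollWidth and scrollHeight. As a hedged variant, the sketch below fetches both dimensions in a single execute_script call and takes the larger of the html and body values, on the assumption that some pages only report their full size on one of the two. The get_page_size name and the script constant are made up for illustration.

```python
# JavaScript that returns [width, height], using the larger of <html> and <body>
PAGE_SIZE_SCRIPT = """
const html = document.documentElement;
const body = document.body;
return [
    Math.max(html.scrollWidth, body ? body.scrollWidth : 0),
    Math.max(html.scrollHeight, body ? body.scrollHeight : 0),
];
"""


def get_page_size(driver):
    """Return the page's full (width, height) as integers."""
    # Selenium converts the returned JavaScript array into a Python list
    width, height = driver.execute_script(PAGE_SIZE_SCRIPT)
    return int(width), int(height)
```

The result can be passed straight to the window-size call, for example driver.set_window_size(*get_page_size(driver)).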

Finally, retrieve the body element and capture it:

# ...

# get the full body element

full_body_element = driver.find_element(By.TAG_NAME, "body")

# take a full-page screenshot

full_body_element.screenshot("screenshots/selenium-full-page-screenshot.png")

# quit the browser

driver.quit()

Put the three snippets together, and your final code should look like this:

# import the required libraries

from selenium import webdriver

from selenium.webdriver.chrome.options import Options

from selenium.webdriver.common.by import By

# run Chrome in headless mode

options = Options()

options.add_argument("--headless")

# start a driver instance

driver = webdriver.Chrome(options=options)

# open the target website

driver.get("https://scrapingcourse.com/ecommerce/")

# define a function to get scroll dimensions

def get_scroll_dimension(axis):

    return driver.execute_script(f"return document.body.parentNode.scroll{axis}")

# get the page scroll dimensions

width = get_scroll_dimension("Width")

height = get_scroll_dimension("Height")

# set the browser window size

driver.set_window_size(width, height)

# get the full body element

full_body_element = driver.find_element(By.TAG_NAME, "body")

# take a full-page screenshot

full_body_element.screenshot("screenshots/selenium-full-page-screenshot.png")

# quit the browser

driver.quit()

You've just taken a full-page screenshot with Selenium. Good job!
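If you need full-page screenshots in more than one place, the steps above can be wrapped in a function. The sketch below is one way to do it, assuming a headless driver with the page already loaded and an output folder that already exists. The capture_full_page name is made up, and restoring the original window size afterwards is an addition of this sketch, not something the steps above require.

```python
from pathlib import Path


def capture_full_page(driver, path):
    """Resize the window to the page's scroll size, then screenshot <body>.

    Assumes `driver` is a headless Selenium WebDriver with the page loaded
    and that the output folder already exists.
    """
    target = Path(path)

    # remember the current window size so it can be restored afterwards
    original = driver.get_window_size()

    # read the scroll dimensions from the <html> element, as in the steps above
    width = driver.execute_script("return document.body.parentNode.scrollWidth")
    height = driver.execute_script("return document.body.parentNode.scrollHeight")

    try:
        driver.set_window_size(width, height)
        # "tag name" is the string value behind By.TAG_NAME
        body = driver.find_element("tag name", "body")
        # element.screenshot returns False when the file cannot be written
        if not body.screenshot(str(target)):
            raise OSError(f"Could not save screenshot to {target}")
    finally:
        # put the window back even if the capture failed
        driver.set_window_size(original["width"], original["height"])
    return target
```

A call such as capture_full_page(driver, "screenshots/selenium-full-page-screenshot.png") would then replace the resize and capture lines in the script above.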

Option 3: Create a Screenshot of a Specific Element

Now, let's see how to capture a screenshot of a specific element.

First, you need to locate the element by one of its attributes, such as its class name. Luckily, Selenium's built-in find_element method does exactly that.

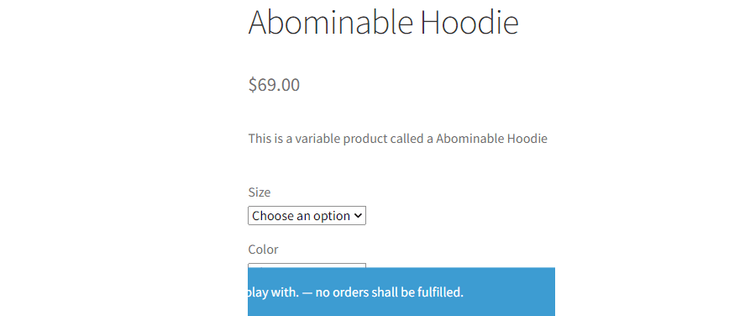

Let's capture a product's summary section on ScrapingCourse to see how the specific element screenshot works in Selenium.

Here's what the output will look like:

To start, import the required libraries into your code. Start a Chrome browser instance and visit the target product page:

# import the required libraries

from selenium import webdriver

from selenium.webdriver.common.by import By

# start a WebDriver instance

driver = webdriver.Chrome()

# visit the target product page

driver.get("https://scrapingcourse.com/ecommerce/product/abominable-hoodie/")

Locate the element by its class name (entry-summary) and call its screenshot method, which saves the file to the specified path:

# ...

# find the element to screenshot

target_element = driver.find_element(By.CLASS_NAME, "entry-summary")

# take a screenshot of the target element

target_element.screenshot("screenshots/specific-element-screenshot.png")

# quit the driver

driver.quit()

Combine the two snippets to get the following code:

# import the required libraries

from selenium import webdriver

from selenium.webdriver.common.by import By

# start a WebDriver instance

driver = webdriver.Chrome()

# visit the target product page

driver.get("https://scrapingcourse.com/ecommerce/product/abominable-hoodie/")

# find the element to screenshot

target_element = driver.find_element(By.CLASS_NAME, "entry-summary")

# take a screenshot of the target element

target_element.screenshot("screenshots/specific-element-screenshot.png")

# quit the driver

driver.quit()

That works!
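The same idea extends to several elements at once. As a rough sketch, the helper below screenshots every element matching a locator by reading each element's screenshot_as_png property, which returns the image as PNG bytes, and writing it to a numbered file. The save_element_screenshots name, the prefix parameter, and the file naming scheme are assumptions made for this example.

```python
from pathlib import Path


def save_element_screenshots(driver, by, value, folder, prefix="element"):
    """Screenshot every element matching the locator; return the saved paths."""
    out_dir = Path(folder)
    # create the output folder if it does not exist yet
    out_dir.mkdir(parents=True, exist_ok=True)

    saved = []
    for index, element in enumerate(driver.find_elements(by, value), start=1):
        target = out_dir / f"{prefix}-{index}.png"
        # screenshot_as_png returns the element's image as raw PNG bytes
        target.write_bytes(element.screenshot_as_png)
        saved.append(target)
    return saved
```

On the product page above, save_element_screenshots(driver, By.CLASS_NAME, "entry-summary", "screenshots") would write one file per matching element and return an empty list if nothing matches.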

While the methods above work for taking screenshots, they won't help with the anti-bots that can still block your scraper. Let's see what to do about them in the next part of this tutorial.

Avoid Getting Blocked While Taking Screenshots

Websites use bot detection to block automated browsers, which also stops you from taking screenshots while scraping. If you want to scrape without getting blocked, you must bypass these anti-bot systems.

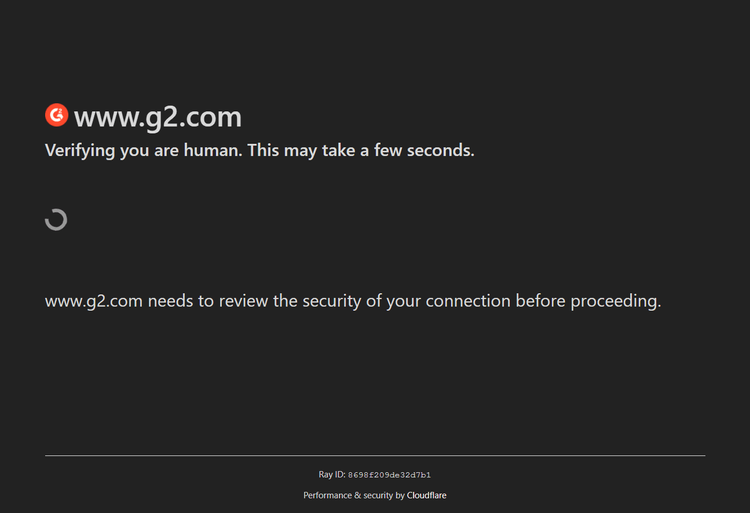

For instance, the current scraping scripts won't work for a protected web page like G2 Reviews.

Try it out with the following code. Replace the target website with G2's URL:

# import the required library

from selenium import webdriver

# start a WebDriver instance

driver = webdriver.Chrome()

# visit the target page

driver.get("https://www.g2.com/products/azure-sql-database/reviews")

# take a screenshot of the visible part

driver.save_screenshot("screenshots/G2-reviews-screenshot.png")

# quit the driver

driver.quit()

The request gets blocked by Cloudflare Turnstile:

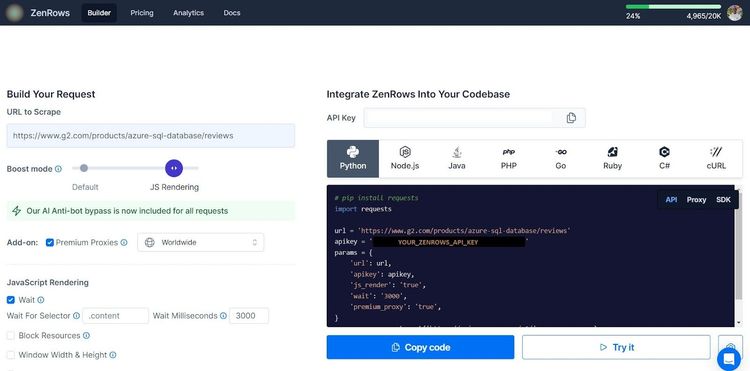

The best solution to evade anti-bots is to use a web scraping API, such as ZenRows. It optimizes your request headers, auto-rotates premium proxies, and bypasses CAPTCHAs and any anti-bot system. You'll never get blocked when web scraping again.

ZenRows also provides JavaScript instructions for scraping dynamic content. Thus, you can replace Selenium with ZenRows and avoid Selenium's technical setup and browser instance overhead.

Let's use ZenRows to access and screenshot the protected page that blocked you earlier.

Sign up to open Request Builder. Paste the target URL in the link box, toggle on JS Rendering, and activate Premium Proxies. Then, select Python as your chosen language.

You'll use the Requests library as the HTTP client. Ensure you install it using pip:

pip install requests

Format the generated code as shown below. Pay attention to the return_screenshot flag in the payload:

# import the required library

import requests

# specify the query parameters with a screenshot option

params = {

    "url": "https://www.g2.com/products/azure-sql-database/reviews",

    "apikey": "<YOUR_ZENROWS_API_KEY>",

    "js_render": "true",

    "premium_proxy": "true",

    "return_screenshot": "true"

}

# send the request and set the response type to stream

response = requests.get("https://api.zenrows.com/v1/", params=params, stream=True)

# check if the request was successful

if response.status_code == 200:

    # save the response content as a screenshot

    with open("screenshots/g2-page-screenshot.png", "wb") as f:

        f.write(response.content)

    print("Screenshot saved successfully.")

else:

    print(f"Error: {response.text}")

The code accesses the protected website and takes its full-page screenshot. See the demo below:

You've bypassed anti-bot protection to screenshot a web page using ZenRows. Congratulations!
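If you plan to reuse the request above, it may help to keep the API key out of the source file and to fail loudly on errors. The sketch below is one possible arrangement, not ZenRows' official client: the function names, the ZENROWS_API_KEY environment variable, the 120-second timeout, and the optional http_get argument (which lets you swap in a stand-in for requests.get when testing) are all assumptions of this example. The endpoint and query parameters are the ones used in the request above.

```python
import os
from pathlib import Path

ZENROWS_ENDPOINT = "https://api.zenrows.com/v1/"


def build_screenshot_params(target_url, api_key):
    """Build the same query parameters as the request above."""
    return {
        "url": target_url,
        "apikey": api_key,
        "js_render": "true",
        "premium_proxy": "true",
        "return_screenshot": "true",
    }


def save_api_screenshot(target_url, out_path, http_get=None, api_key=None):
    """Request a screenshot and write it to out_path; raise on a non-200 reply."""
    if api_key is None:
        # ZENROWS_API_KEY is a hypothetical variable name chosen for this sketch
        api_key = os.environ["ZENROWS_API_KEY"]
    if http_get is None:
        import requests  # imported lazily so the helper can be tested offline

        http_get = requests.get

    response = http_get(
        ZENROWS_ENDPOINT,
        params=build_screenshot_params(target_url, api_key),
        timeout=120,  # arbitrary value for this sketch; tune it to your needs
    )
    if response.status_code != 200:
        raise RuntimeError(f"Screenshot request failed: {response.text}")

    target = Path(out_path)
    # create the output folder before writing the image bytes
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_bytes(response.content)
    return target
```

With the environment variable set, save_api_screenshot("https://www.g2.com/products/azure-sql-database/reviews", "screenshots/g2-page-screenshot.png") would perform the same request and save the result.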

Conclusion

In this tutorial, you've seen the three methods of taking a screenshot in Selenium:

- Screenshotting the part of the page that the user can see in the browser window.

- Taking a full-page screenshot, including the parts hidden behind the scrollbar.

- Capturing a screenshot of a specific part of a web page.

Remember that anti-bots can prevent you from scraping the content you need during web scraping. We recommend integrating ZenRows into your web scraper to bypass these detection systems and scrape any website without limitations. Try ZenRows for free!
