Are you looking for the best web scraping tool between Selenium and the Requests library?

In this article, we'll compare both tools so you can tell them apart and know which works best in specific scenarios.

Selenium vs. Requests: Which Is Best?

Selenium is a browser automation framework with headless browsing support and bindings for many languages, including Python, Java, PHP, C#, Ruby, and Perl. Although it's commonly used for software testing, it can also work on its own as a web scraping tool.

Requests is a Python library for making HTTP requests to websites and APIs. While the Requests library is a valuable web scraping tool, it works best with HTML parsers like BeautifulSoup.

Choose Selenium if you want a single web scraping tool with JavaScript support. Go with the Requests library if dealing with a static website that only requires a parser like BeautifulSoup.

However, you can use both tools together in edge cases. For instance, you can handle login and dynamic page navigation with Selenium, then copy the session cookies from the browser into Requests and fetch the remaining pages over plain HTTP, which avoids rendering every page in the browser.
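A rough sketch of that hand-off is shown below. It assumes a Selenium `driver` that has already completed the login. The helper names `cookies_to_dict` and `session_from_driver` are hypothetical and not part of either library.

```python
def cookies_to_dict(selenium_cookies):
    """Map the list of cookie dicts from driver.get_cookies() to {name: value}."""
    return {cookie["name"]: cookie["value"] for cookie in selenium_cookies}


def session_from_driver(driver):
    """Build a requests.Session that reuses the browser's cookies and User-Agent.

    Assumes `driver` is a Selenium WebDriver that is already logged in.
    """
    # Imported here so cookies_to_dict() stays usable without Requests installed.
    import requests

    session = requests.Session()
    # Note: this simple copy drops each cookie's domain and path restrictions.
    session.cookies.update(cookies_to_dict(driver.get_cookies()))
    # Send the same User-Agent the browser used, so both clients look alike.
    session.headers["User-Agent"] = driver.execute_script(
        "return navigator.userAgent;"
    )
    return session
```

Once the session exists, calls such as `session.get(url, timeout=10)` send the browser's cookies without opening another page in Selenium. Whether the site accepts the copied session depends on how it ties cookies to the client.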

Feature Comparison: Selenium vs. Requests

Let's quickly compare Selenium and Requests in the table below.

| Consideration | Selenium | Requests |
|---|---|---|
| Language | Python, Java, PHP, C#, Ruby, Perl | Python |
| Ease of use and learning curve | The learning curve can be steep for beginners | Beginner-friendly with simple learning curve |
| HTTP requests | Yes | Yes |
| JavaScript support | Yes | No |
| Browser | Yes | No |
| Avoid getting blocked | Proxy rotation, Undetected ChromeDriver, and Selenium Stealth | Proxy rotation, request headers customization |
| Speed | Slow | Fast |

Now, let's dive into a deeper comparison of both tools.

Selenium Can Scrape Dynamic Content

Dynamic content is loaded or updated by JavaScript after the initial page load, often in response to the user's preferences or interaction with the page. The Requests library can't handle it because it only downloads the raw response and doesn't execute JavaScript.

Selenium supports scraping dynamic content by default because it spins up a real browser to automate web pages. This gives it an advantage over the Requests library on the many websites that load their data with JavaScript.
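As a minimal sketch of scraping JavaScript-rendered content with Selenium, the function below opens a page in headless Chrome and waits until elements matching a CSS selector appear. The function names, the URL, and the selector are placeholders, and the example assumes Chrome is installed locally.

```python
def element_texts(elements):
    """Return the stripped, non-empty text of each element-like object."""
    return [el.text.strip() for el in elements if el.text and el.text.strip()]


def scrape_dynamic_texts(url, css_selector, timeout=10):
    """Load `url` in headless Chrome and return the text of matching elements."""
    # Imported here so element_texts() stays usable without a browser setup.
    from selenium import webdriver
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    options = webdriver.ChromeOptions()
    options.add_argument("--headless=new")
    driver = webdriver.Chrome(options=options)
    try:
        driver.get(url)
        # Wait until JavaScript has inserted at least one matching element.
        elements = WebDriverWait(driver, timeout).until(
            EC.presence_of_all_elements_located((By.CSS_SELECTOR, css_selector))
        )
        return element_texts(elements)
    finally:
        # Always close the browser, even if the wait times out.
        driver.quit()
```

A call such as `scrape_dynamic_texts("https://example.com/products", ".product-name")` would return the rendered names, where both arguments are hypothetical. The explicit wait matters because the elements may not exist yet when `driver.get()` returns.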

Python Requests Is Much Faster than Selenium

Selenium has to launch a browser instance and render each page, which slows it down considerably. The Requests library only sends the HTTP request and downloads the response, which makes it lighter and more efficient for fetching data and testing APIs.

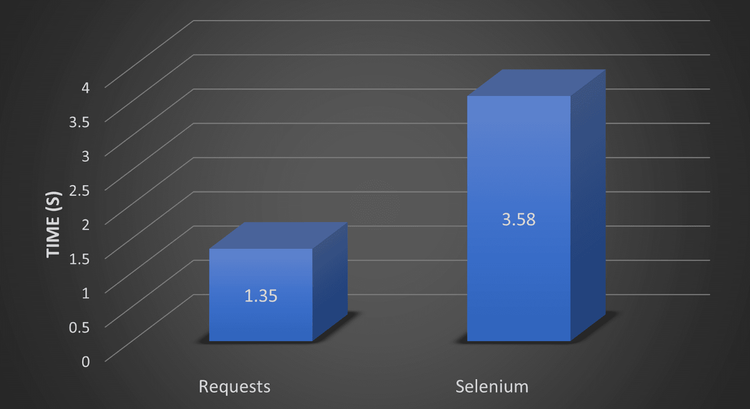

We ran a 100-iteration benchmark test to compare the speed of both tools on fetching the same website. The Requests library was faster, averaging 1.35 seconds, whereas Selenium averaged 3.58 seconds.

We've presented the benchmark in a graphical format below (from the fastest to the slowest):

The time unit used is the seconds (s = seconds).
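The benchmark script itself isn't part of this article. A harness along the following lines could reproduce a similar measurement. The `benchmark` function name and the URL in the usage comments are assumptions, not the original setup.

```python
import statistics
import time


def benchmark(fetch, iterations=100):
    """Call fetch() `iterations` times and return the mean duration in seconds."""
    timings = []
    for _ in range(iterations):
        start = time.perf_counter()
        fetch()
        timings.append(time.perf_counter() - start)
    return statistics.mean(timings)


# Example usage (placeholder URL; requires the third-party packages):
#
#   import requests
#   requests_mean = benchmark(lambda: requests.get("https://example.com", timeout=10))
#
#   from selenium import webdriver
#   driver = webdriver.Chrome()
#   selenium_mean = benchmark(lambda: driver.get("https://example.com"))
#   driver.quit()
```

In this sketch, the browser starts once outside the timed loop, so only page loads are measured. Launching a new browser on every iteration would widen the gap further.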

It's Easier to Get Started with Python Requests

The Requests library has a gentle learning curve and needs only a few lines of code for a basic request, making it more beginner-friendly than Selenium.

Selenium is more versatile but requires extra setup, such as a browser and a matching WebDriver (recent Selenium versions can download the driver automatically). It also has many more programmable parts, which steepens its learning curve and makes it less beginner-friendly.

You Can Interact with Web Pages with Selenium

Selenium's built-in ability to spin up a web browser and interact with web pages like a real user gives it an edge over the Requests library. This isn't possible with the Requests library, as it's only an HTTP client.

For instance, Selenium supports interactive scrolling, form filling, and mouse and keyboard actions. All these can help you avoid getting blocked because they add the human touch to your scraper.

Best Choice to Avoid Getting Blocked While Scraping

Many websites you'll encounter during web scraping employ various means to block bots. So, your web scraper must be able to avoid anti-bot detection regardless of the tool you use.

Both tools have various ways of avoiding anti-bot detection.

Selenium supports proxy rotation for masking your IP, and it can integrate with a third-party anti-bot plugin called Undetected ChromeDriver for anti-bot bypass.

The Requests library gives you direct control over each request. You can set custom headers and a proxy per request, so you can rotate both to mimic a real browser and reduce the risk of IP bans.
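Header and proxy rotation with Requests can be sketched as below. The user agent strings and proxy addresses are placeholders to replace with your own, and `rotated_request_kwargs` and `fetch` are hypothetical helper names.

```python
import random

# Placeholder pools: replace them with real user agents and proxies you control.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.4 Safari/605.1.15",
]
PROXIES = [
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
]


def rotated_request_kwargs(user_agents=USER_AGENTS, proxies=PROXIES):
    """Pick a random User-Agent and proxy and return kwargs for requests.get()."""
    proxy = random.choice(proxies)
    return {
        "headers": {"User-Agent": random.choice(user_agents)},
        "proxies": {"http": proxy, "https": proxy},
        "timeout": 10,
    }


def fetch(url):
    """Fetch `url` with a freshly rotated User-Agent and proxy."""
    # Imported here so rotated_request_kwargs() works without Requests installed.
    import requests

    response = requests.get(url, **rotated_request_kwargs())
    response.raise_for_status()
    return response.text
```

Each call to `fetch()` picks a new combination at random, so consecutive requests don't share the same fingerprint. Random choice is the simplest policy; a round-robin or a health-checked pool would be a reasonable alternative.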

However, these methods aren't enough to bypass most anti-bot protections. The most effective way to avoid detection is to use a web scraping API like ZenRows. It integrates perfectly with Python Requests and provides premium proxy rotation, header customization, anti-bot bypass, and more.

Try ZenRows for free and scrape any web page without getting blocked!

Conclusion

After comparing Selenium vs. Requests in this article, it's clear that the Requests library is lighter and more user-friendly than Selenium. While Selenium is slower, it's more functional and better at scraping dynamic websites.

Both are valuable web scraping tools with specific use cases. However, they can't bypass anti-bot detection effectively on their own and are vulnerable to getting blocked. Bypass all blocks with ZenRows and scrape any website without limitations. Integrating ZenRows with Selenium is also easy if you're going with the headless browser option.
