Looking to avoid detection when web scraping? Then, let's learn how to set up Superagent-proxy in this tutorial.

What Is Superagent-proxy in NodeJS?

Superagent-proxy is a NodeJS module that extends the functionality of Superagent, an HTTP request library, by adding proxy support. That can be helpful to avoid getting blocked while web scraping in NodeJS.

How Superagent-proxy Works

Under the hood, superagent-proxy relies on proxy-agent, which provides an http.Agent implementation that lets Superagent and other NodeJS HTTP clients like Axios use proxy servers. The agent handles the proxy communication, so the underlying JavaScript client connects to the remote server through the proxy.

How to Set a Superagent Proxy

Setting up a Superagent proxy takes two stages: first build a scraper with Superagent, then add superagent-proxy to it. Let's go step by step!

1. Prerequisites

First of all, make sure you have NodeJS installed.

Then, create a new project folder (e.g. Superagent_Scraper) and start a NodeJS project inside using the following commands.

mkdir Superagent_Scraper
cd Superagent_Scraper
npm init -y

Next, install Superagent.

npm install superagent

2. Create a Superagent Scraper

To create your Superagent scraper, open a new JavaScript file (e.g. call it superagent-proxy.js), import Superagent using the require function, and define an asynchronous function that'll contain the scraper's logic. Also, insert a try-catch block within your asynchronous function to easily handle errors.

const request = require('superagent');

(async () => {
  try {
    // Function content will be added in the next steps.
  } catch (error) {
    console.error(error);
  }
})

Now, make a GET request to your target URL and log the response body. If you send a request to [https://httpbin.io/ip](https://httpbin.io/ip), you'll get your IP address as a response. Lastly, add (); to call the async function. Here's the complete code.

const request = require('superagent');

(async () => {
  try {
    const response = await request.get('https://httpbin.io/ip');
    console.log(response.body);
  } catch (error) {
    console.error(error);
  }
})();

Run it, and your result should be your IP address.

{ origin: '197.100.236.106:25609' }

3. Add a Superagent Proxy

Install the superagent-proxy module with the following command:

npm install superagent-proxy

Then, import the module, enable the proxy feature using Request#proxy(), and define your proxy URL. You can get a free proxy from FreeProxyList. Your previous code should now look like this:

const request = require('superagent');

// import and enable superagent-proxy using Request#proxy()
require('superagent-proxy')(request);

// Define proxy URL
const proxyUrl = 'http://20.89.38.178:3333';

(async () => {
  try {
    const response = await request.get('https://httpbin.io/ip');
    console.log(response.body);
  } catch (error) {
    console.error(error);
  }
})();

Next, within the async function, apply the proxy using the .proxy() method. You should have the following complete code.

const request = require('superagent');

// import and enable superagent-proxy using Request#proxy()
require('superagent-proxy')(request);

// Define proxy URL
const proxyUrl = 'http://20.89.38.178:3333';

(async () => {
  try {
    const response = await request
      .get('https://httpbin.org/ip')
      .proxy(proxyUrl); // Apply the proxy
    console.log(response.body);
  } catch (error) {
    console.error(error);
  }
})();

And here's the output:

{ origin: '20.89.38.178:4763' }

Awesome, your result this time is the proxy's IP address, meaning you've set your first Superagent proxy.
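If you'd rather check this programmatically than by eye, a small comparison can help. The sketch below is only an illustration: `originMatchesProxy` is a hypothetical helper (not part of Superagent or superagent-proxy), and it assumes the target reports an IPv4 address in the `origin` field, optionally followed by a port, as in the outputs above.

```javascript
// Hypothetical helper, not part of Superagent or superagent-proxy.
// Returns true when the IP reported by the target equals the proxy's host.
// Assumption: `origin` is an IPv4 address, optionally followed by ":port".
function originMatchesProxy(origin, proxyUrl) {
  // Keep only the IP part of values such as "20.89.38.178:4763".
  const originIp = String(origin).split(':')[0].trim();
  // The built-in URL class extracts the host without the port.
  const proxyHost = new URL(proxyUrl).hostname;
  return originIp === proxyHost;
}

console.log(originMatchesProxy('20.89.38.178:4763', 'http://20.89.38.178:3333')); // true
console.log(originMatchesProxy('197.100.236.106:25609', 'http://20.89.38.178:3333')); // false
```

In the scraper, you could call it as `originMatchesProxy(response.body.origin, proxyUrl)` right after logging the response.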

Now, a few considerations:

HTTP vs. HTTPS Proxies

HTTP proxies are designed to handle HTTP requests (http://), while HTTPS ones work for websites using SSL/TLS encryption (https://). We recommend HTTPS proxies, as they work for both secure (HTTPS) and non-secure (HTTP) websites.

Authentication with superagent-proxy

Some proxies require authentication with a username and password. This is common with paid proxies to ensure only permitted users can access their proxy servers.

In superagent-proxy, you can add the necessary authentication details by adding an auth object as an argument in the .proxy() method, like in the example below.

.proxy(proxyUrl, {
  auth: `${username}:${password}`
});

Your script would look like this:

const request = require('superagent');

// import and enable superagent-proxy using Request#proxy()
require('superagent-proxy')(request);

// Define proxy URL and auth details
const proxyUrl = 'http://20.89.38.178:3333';
const username = 'your-username';
const password = 'your-password';

(async () => {
  try {
    const response = await request
      .get('https://httpbin.org/ip')
      .proxy(proxyUrl, {
        auth: `${username}:${password}`
      }); // Apply the proxy
    console.log(response.body);
  } catch (error) {
    console.error(error);
  }
})();
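Another common convention is to embed the credentials directly in the proxy URL (`http://username:password@host:port`). The sketch below builds such a URL with Node's built-in `URL` class; `buildProxyUrl` is a hypothetical helper name, and whether your proxy provider and installed superagent-proxy version accept this form is an assumption worth verifying against their documentation.

```javascript
// Hypothetical helper: embeds credentials in the proxy URL itself.
// Assumption: the proxy accepts "http://user:pass@host:port" style URLs.
function buildProxyUrl(baseUrl, username, password) {
  const url = new URL(baseUrl);
  // The URL setters percent-encode characters that are unsafe in credentials.
  url.username = username;
  url.password = password;
  return url.href;
}

const authedProxyUrl = buildProxyUrl(
  'http://20.89.38.178:3333',
  'your-username',
  'your-password'
);
console.log(authedProxyUrl); // http://your-username:your-password@20.89.38.178:3333/
```

The resulting string can then be passed as the single argument to `.proxy()`, for example `.proxy(authedProxyUrl)`.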

Add More Proxies to Superagent

Websites can flag proxies as bots and block them accordingly. Therefore, you need to rotate proxies to increase your chances of avoiding detection.

To see how to rotate proxies using Superagent proxy, get more free proxies from FreeProxyList.

Then change your single proxy to a proxy list like the one below.

const request = require('superagent');
require('superagent-proxy')(request);

// Define a list of proxy URLs
const proxyList = [
  'http://20.89.38.178:3333',
  'http://198.199.70.20:31028',
  'http://8.219.97.248:80',
  // Add more proxy URLs as needed
];

Next, create a function that randomly selects a proxy from your proxy list. You can use Math.random() to achieve this.

// Function to select a random proxy from the list
function getRandomProxy() {
  const randomIndex = Math.floor(Math.random() * proxyList.length);
  return proxyList[randomIndex];
}

In your async function, call getRandomProxy() to get a random proxy URL and use it with the .proxy() method.

(async () => {
  try {
    const proxyUrl = getRandomProxy(); // Select a random proxy URL from the list
    const response = await request
      .get('https://httpbin.org/ip')
      .proxy(proxyUrl);
    console.log('Using proxy:', proxyUrl);
    console.log(response.body);
  } catch (error) {
    console.error(error);
  }
})();

Your final code should look like this:

const request = require('superagent');
require('superagent-proxy')(request);

// Define a list of proxy URLs
const proxyList = [
  'http://20.89.38.178:3333',
  'http://198.199.70.20:31028',
  'http://8.219.97.248:80',
  // Add more proxy URLs as needed
];

// Function to select a random proxy from the list
function getRandomProxy() {
  const randomIndex = Math.floor(Math.random() * proxyList.length);
  return proxyList[randomIndex];
}

(async () => {
  try {
    const proxyUrl = getRandomProxy(); // Select a random proxy URL from the list
    const response = await request
      .get('https://httpbin.org/ip')
      .proxy(proxyUrl);
    console.log('Using proxy:', proxyUrl);
    console.log(response.body);
  } catch (error) {
    console.error(error);
  }
})();

To verify it works, run the code multiple times using the following command.

node superagent-proxy.js

Because the proxy is picked at random, the IP address should vary between runs, although the same proxy can occasionally come up twice in a row.

Here's the result for three runs:

Using proxy: http://198.199.70.20:31028
{ origin: '198.199.70.20' }

Using proxy: http://20.89.38.178:3333
{ origin: '20.89.38.178' }

Using proxy: http://8.219.97.248:80
{ origin: '8.219.97.248' }

Awesome! You've successfully rotated proxies using Superagent-proxy.
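Random selection can pick the same proxy several times in a row. When a single script sends many requests, cycling through the list in order spreads them more evenly. The following is a minimal sketch of that round-robin idea; `createProxyRotator` is a hypothetical helper, not something superagent-proxy provides.

```javascript
// Hypothetical helper: returns a function that cycles through the list in order.
function createProxyRotator(proxies) {
  if (!Array.isArray(proxies) || proxies.length === 0) {
    throw new Error('proxies must be a non-empty array');
  }
  let index = 0;
  return function nextProxy() {
    const proxy = proxies[index];
    // Wrap around to the start once the end of the list is reached.
    index = (index + 1) % proxies.length;
    return proxy;
  };
}

const nextProxy = createProxyRotator([
  'http://20.89.38.178:3333',
  'http://198.199.70.20:31028',
  'http://8.219.97.248:80',
]);

console.log(nextProxy()); // http://20.89.38.178:3333
console.log(nextProxy()); // http://198.199.70.20:31028
console.log(nextProxy()); // http://8.219.97.248:80
console.log(nextProxy()); // http://20.89.38.178:3333
```

Note that the position is kept in memory, so this only helps within one run of the script; each new run starts again from the first proxy.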

However, free proxies are only suitable for testing: they're easily detected and prone to failure, so they rarely hold up in real-world scenarios. For example, if you replace the target URL with OpenSea, a Cloudflare-protected website, you'll get a 403 error similar to the one below.

Error: Forbidden
    at Request.callback (C:\Users\dalie\Superagent_scraper\node_modules\superagent\lib\node\index.js:845:17)
    at IncomingMessage.<anonymous> (C:\Users\dalie\Superagent_scraper\node_modules\superagent\lib\node\index.js:1070:18)
    at IncomingMessage.emit (node:events:525:35)
    at endReadableNT (node:internal/streams/readable:1359:12)
    at process.processTicksAndRejections (node:internal/process/task_queues:82:21) {
  status: 403,

To avoid getting blocked, consider residential proxies, which are home IP addresses. Check out our guide on the best proxies for web scraping to learn more and find recommended providers.
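Whichever kind of proxy you use, individual proxies can still fail or get blocked, so it can help to fall back to the next one in the list instead of giving up on the first error. Here's a minimal sketch of that idea. `tryProxies` is a hypothetical helper, and `fetchWithProxy` is an assumed callback that receives a proxy URL and returns a promise.

```javascript
// Hypothetical helper: tries each proxy in turn until one request succeeds.
// `fetchWithProxy` is an assumed callback: (proxyUrl) => Promise<result>.
async function tryProxies(proxies, fetchWithProxy) {
  let lastError = new Error('No proxies provided');
  for (const proxyUrl of proxies) {
    try {
      const result = await fetchWithProxy(proxyUrl);
      return { proxyUrl, result }; // Stop at the first proxy that works.
    } catch (error) {
      lastError = error; // Remember the failure and move on to the next proxy.
    }
  }
  // Every proxy failed, so surface the most recent error.
  throw lastError;
}
```

With the scraper from this tutorial, the callback could be `(proxyUrl) => request.get('https://httpbin.org/ip').proxy(proxyUrl)`, and the returned `proxyUrl` tells you which proxy finally went through.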

Why Proxies Aren't Enough

While residential proxies are more reliable than free proxies, they aren't enough to avoid getting blocked in most web scraping projects.

Fortunately, there's an easy add-on for Superagent.

ZenRows, in addition to providing premium proxies, handles anti-bot measures for you, including CAPTCHAs, JS challenges, and fingerprinting. Let's try it to scrape OpenSea.

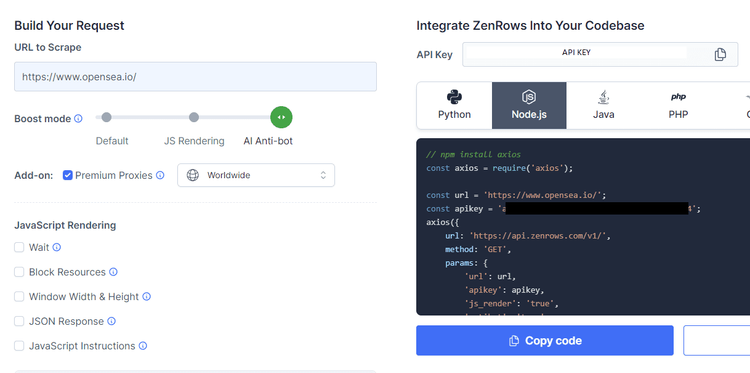

To get started, sign up for free, and you'll get to the Request Builder page.

Input the target URL (https://opensea.io/), activate the anti-bot boost mode, and use Premium Proxies. Also, select Node.js as a language.

That'll generate your request code on the right (Axios is suggested, but you can use Superagent). Copy the code, use Superagent, and your new code should look like this:

const request = require('superagent');

const url = 'https://www.opensea.io/';
const apikey = 'Your_API_Key';

request
  .get('https://api.zenrows.com/v1/')
  .query({
    url: url,
    apikey: apikey,
    js_render: 'true',
    antibot: 'true',
    premium_proxy: 'true',
  })
  .end((err, response) => {
    if (err) {
      console.log(err);
    } else {
      // Superagent exposes HTML as response.text; response.body holds parsed formats such as JSON.
      console.log(response.text);
    }
  });

Run it, and this will be your result:

<!DOCTYPE html>
//..
<title>OpenSea, the largest NFT marketplace</title>
//..

Bingo! ZenRows makes bypassing any anti-bot solution easy.

Conclusion

The Superagent-proxy module allows you to route your requests through different IP addresses to reduce the risk of getting blocked while web scraping. As a note, free proxies are unreliable, so premium residential proxies are the best option. At the same time, even premium proxies aren't enough to scrape many websites.

Either way, you can consider ZenRows as a toolkit to bypass anti-bot measures. Sign up now to get 1,000 free API credits.

Frequent Questions

What Is SuperAgent Used for?

SuperAgent is used for making HTTP requests in both NodeJS and browser environments. Its intuitive API makes it easy to handle responses and manage headers and query parameters. Additionally, it supports promises and asynchronous requests.
