Do you find yourself getting blocked despite employing the power of Undetected ChromeDriver?

While this ChromeDriver patch is optimized to mask Selenium's automation properties, websites can still block your IP address. In this tutorial, you'll learn how to implement a proxy with Undetected ChromeDriver to overcome this obstacle.

Let’s dive in.

Configure the Proxy With Undetected ChromeDriver

To implement an Undetected ChromeDriver proxy, add your proxy settings to Chrome options using the add_argument() method. Then, initialize an Undetected ChromeDriver instance with these settings.

Let's see it in practice.

We'll use proxies from FreeProxyList for demonstration purposes. Please note that the proxies used in this tutorial may not be operational at the time of reading.
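Because free proxies go offline often, it can help to check one before launching a browser. The following is a minimal sketch using only the standard library; `is_proxy_reachable` is a hypothetical helper name, and a successful TCP connection only shows that the port is open, not that the proxy will actually forward your traffic.

```python
import socket


def is_proxy_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        # create_connection raises OSError (including timeouts) on failure
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


# Example usage (performs a real network connection attempt):
# print(is_proxy_reachable('50.168.163.183', 80))
```

If the check returns False, pick another proxy from the list before continuing.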

Additionally, we'll opt for HTTPS proxies, as they also work with HTTP. Chrome accepts the proxy address in the form <protocol>://<host>:<port>, and the protocol can be omitted, in which case it defaults to HTTP.
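To make the address format concrete, here is a small sketch that assembles the value passed to Chrome's --proxy-server flag. The helper name `build_proxy_argument` and its default scheme are assumptions made for illustration; they are not part of Undetected ChromeDriver.

```python
def build_proxy_argument(host: str, port: int, scheme: str = "http") -> str:
    """Return a Chrome --proxy-server flag for the given proxy address."""
    if not 0 < port < 65536:
        raise ValueError(f"invalid port: {port}")
    return f"--proxy-server={scheme}://{host}:{port}"


print(build_proxy_argument("50.168.163.183", 80))
# prints: --proxy-server=http://50.168.163.183:80
```

The returned string can be passed directly to the add_argument() method of your Chrome options.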

To get started, install Undetected ChromeDriver using the following command.

pip install undetected-chromedriver

Next, import the library and By class from Selenium so you can select elements on the page to view your result.

import undetected_chromedriver as uc

from selenium.webdriver.common.by import By

After that, define your proxy details, set Chrome options, and add your proxy to the Chrome options using the add_argument() method.

# define proxy details

proxy = '50.168.163.183:80'

# set Chrome options

options = uc.ChromeOptions()

# add proxy to Chrome options

options.add_argument(f'--proxy-server={proxy}')

Lastly, initialize an Undetected ChromeDriver instance, passing the configured Chrome options. Navigate to the target website, in this case, HTTPbin, which returns the IP address of the client making the request, and print its text content to see your result.

#...

# initialize uc instance with configured options

driver = uc.Chrome(options=options)

# navigate to target website

driver.get('https://httpbin.io/ip')

# retrieve text content of the page

textContent = driver.find_element(By.TAG_NAME, 'body').text

print(textContent)

driver.quit()
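If the proxy is dead, driver.get() can raise before driver.quit() is reached, leaving a Chrome process running. One possible way to guard against that is a small context manager. This is a sketch under the assumption that the driver exposes a quit() method, as Selenium drivers do; `managed_driver` is a hypothetical helper name.

```python
from contextlib import contextmanager


@contextmanager
def managed_driver(factory):
    """Create a driver with factory() and always call quit() on exit."""
    driver = factory()
    try:
        yield driver
    finally:
        # runs even if navigation or element lookup raised an exception
        driver.quit()


# Example usage with the options configured above:
# with managed_driver(lambda: uc.Chrome(options=options)) as driver:
#     driver.get('https://httpbin.io/ip')
#     print(driver.find_element(By.TAG_NAME, 'body').text)
```

Passing a factory rather than a ready-made driver keeps the browser launch inside the guarded block.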

Your complete code should look like this:

import undetected_chromedriver as uc

from selenium.webdriver.common.by import By

# define proxy details

proxy = '50.168.163.183:80'

# set Chrome options

options = uc.ChromeOptions()

# add proxy to Chrome options

options.add_argument(f'--proxy-server={proxy}')

# initialize uc instance with configured options

driver = uc.Chrome(options=options)

# navigate to target website

driver.get('https://httpbin.io/ip')

# retrieve text content of the page

textContent = driver.find_element(By.TAG_NAME, 'body').text

print(textContent)

driver.quit()

Run this code, and you'll get your proxy's IP address.

{

"origin": "50.168.163.183:8989"

}

Bingo!
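Rather than comparing the output by eye, you could check it in code. The sketch below parses an HTTPBin-style response and compares its IP with the proxy's host. It assumes an IPv4 proxy written as host:port without a protocol, and `origin_matches_proxy` is a hypothetical helper name. Some proxies exit from a different IP than the one you connect to, so a mismatch doesn't always mean the proxy was bypassed.

```python
import json


def origin_matches_proxy(body_text: str, proxy: str) -> bool:
    """Check whether the IP in an HTTPBin-style /ip response equals the proxy host."""
    origin = json.loads(body_text)["origin"]
    # the origin may carry a source port, e.g. "50.168.163.183:8989"
    origin_ip = origin.rsplit(":", 1)[0] if ":" in origin else origin
    proxy_host = proxy.rsplit(":", 1)[0]
    return origin_ip == proxy_host


sample = '{"origin": "50.168.163.183:8989"}'
print(origin_matches_proxy(sample, "50.168.163.183:80"))
# prints: True
```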

Proxy Authentication for Selenium

It's worth noting that while free proxies may be suitable for learning, they're often unreliable and don't work in real-world use cases. On the other hand, premium web scraping proxies offer reliability and better performance.

However, commercial proxy providers often require authentication to ensure that only authorized users can use their services. This authentication process takes several forms, with some involving credentials, such as username and password.

Chrome's --proxy-server flag doesn't accept credentials, and Selenium has no built-in support for proxy authentication, so you must integrate another library to achieve this. This tutorial uses Selenium Wire, a Python library that extends Selenium's Python bindings to give you access to the underlying requests made by the browser.

To authenticate an Undetected ChromeDriver proxy using this library, pass your proxy settings through the seleniumwire_options argument when creating the driver.

Let's see it in practice.

Start by installing Selenium Wire using the following command.

pip install selenium-wire

Then import Undetected ChromeDriver from Selenium Wire, along with Selenium's By class, which you'll need later to read the page content.

# import required libraries

import seleniumwire.undetected_chromedriver as uc

from selenium.webdriver.common.by import By

Next, set Chrome Options and define your proxy settings, including the username and password, in the format protocol://username:password@ip:port.

# set Chrome Options

chrome_options = uc.ChromeOptions()

# define your proxy settings

proxy_options = {

'proxy': {

'http': 'http://username:password@ip:port',

'https': 'https://username:password@ip:port'

}

}
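To keep credentials out of your source code, you might read them from environment variables instead of hardcoding them. The sketch below builds the same dictionary structure shown above. The variable names (PROXY_USERNAME, PROXY_PASSWORD, PROXY_HOST, PROXY_PORT) and the helper `build_proxy_options` are assumptions made for illustration, not something Selenium Wire defines.

```python
import os


def build_proxy_options(env=None):
    """Build a Selenium Wire proxy dictionary from environment-style settings."""
    env = os.environ if env is None else env
    # a missing variable raises KeyError, which surfaces misconfiguration early
    endpoint = (
        f"{env['PROXY_USERNAME']}:{env['PROXY_PASSWORD']}"
        f"@{env['PROXY_HOST']}:{env['PROXY_PORT']}"
    )
    return {
        "proxy": {
            "http": f"http://{endpoint}",
            "https": f"https://{endpoint}",
        }
    }


# Example usage:
# proxy_options = build_proxy_options()
```

The result can be used wherever the tutorial's proxy_options dictionary is expected.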

Lastly, initialize an Undetected ChromeDriver instance with the specified proxy settings.

#...

# initialize Chrome driver instance with specified proxy settings

driver = uc.Chrome(

options=chrome_options,

seleniumwire_options=proxy_options

)

That's it.

To verify it works, navigate to your target website (https://httpbin.io/ip) and print its text content.

# navigate to target website

driver.get('https://httpbin.io/ip')

# retrieve text content of the page

textContent = driver.find_element(By.TAG_NAME, 'body').text

print(textContent)

driver.quit()

You should have the following complete code.

# import required libraries

import seleniumwire.undetected_chromedriver as uc

from selenium.webdriver.common.by import By

# set Chrome Options

chrome_options = uc.ChromeOptions()

# define your proxy settings

proxy_options = {

'proxy': {

'http': 'http://username:password@ip:port',

'https': 'https://username:password@ip:port'

}

}

# initialize Chrome driver instance with specified proxy settings

driver = uc.Chrome(

options=chrome_options,

seleniumwire_options=proxy_options

)

# navigate to target website

driver.get('https://httpbin.io/ip')

# retrieve the text content of the page

textContent = driver.find_element(By.TAG_NAME, 'body').text

print(textContent)

driver.quit()

Get the Best Premium Proxies to Scrape

Even with proxies and Undetected ChromeDriver, there's still a risk of getting blocked while web scraping. Let's try scraping a protected web page (a G2 review page) using an Undetected ChromeDriver proxy.

import undetected_chromedriver as uc

from selenium.webdriver.common.by import By

# define proxy details

proxy = '50.168.163.183:80'

# set Chrome options

options = uc.ChromeOptions()

# add proxy to Chrome options

options.add_argument(f'--proxy-server={proxy}')

# initialize uc instance with configured options

driver = uc.Chrome(options=options)

# navigate to target website

driver.get('https://www.g2.com/products/salesforce-salesforce-sales-cloud/reviews')

# take screenshot

driver.save_screenshot('blocked.png')

driver.quit()

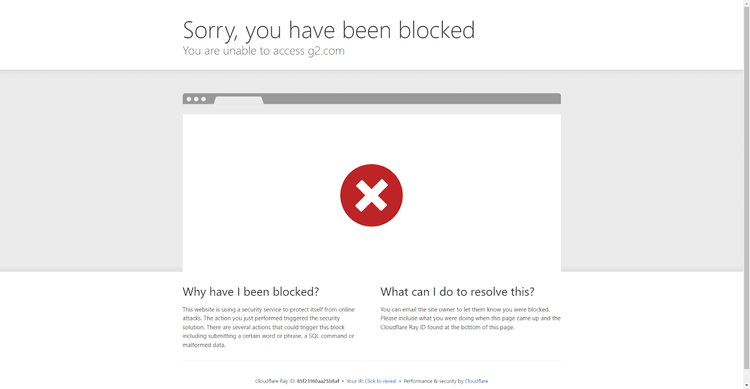

Here's the result:

This result shows that the website's anti-bot system blocked our scraper, reaffirming that Undetected ChromeDriver, even with proxies, isn't enough.

"Why not use premium proxies?", you may ask.

You're not wrong. However, while premium proxies may produce better results, you'll still get blocked against advanced anti-bot systems, like the one used by the web page above.

Your best bet here would be to use a web scraping API like ZenRows. This tool provides everything you need to scrape without getting blocked, including headless browser functionality, like Selenium, but without the additional infrastructure overhead.

ZenRows also automatically integrates auto-rotating premium proxies, optimized headers, anti-CAPTCHAs, and more.

Let's see ZenRows against the same web page that blocked Undetected ChromeDriver.

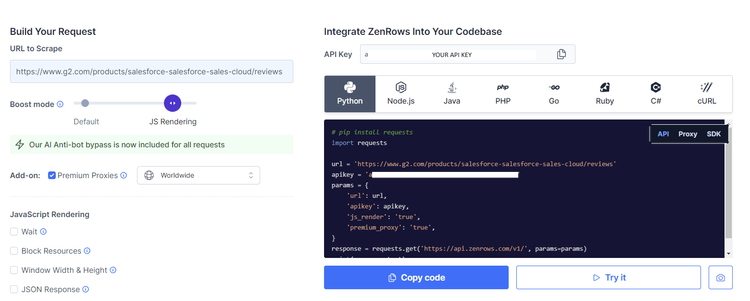

To get started, sign up for free, and you'll be directed to the Request Builder page.

Paste your target URL (https://www.g2.com/products/salesforce-salesforce-sales-cloud/reviews), select the anti-bot boost mode, and check the box for Premium Proxies to rotate residential proxies automatically. Then, choose Python as the language that'll generate your request code on the right.

Although Requests is suggested, you can use any Python HTTP client you choose.

Copy the generated code to your favorite editor. Your new script should look like this.

# pip install requests

import requests

url = 'https://www.g2.com/products/salesforce-salesforce-sales-cloud/reviews'

apikey = '<YOUR_ZENROWS_API_KEY>'

params = {

'url': url,

'apikey': apikey,

'js_render': 'true',

'premium_proxy': 'true',

}

response = requests.get('https://api.zenrows.com/v1/', params=params)

print(response.text)

Run it, and you'll get the page's HTML content.

<!DOCTYPE html>

<!-- ... -->

<title id="icon-label-55be01c8a779375d16cd458302375f4b">G2 - Business Software Reviews</title>

<!-- ... -->

<h1 ...id="main">Where you go for software.</h1>

Awesome, right? That's how easy it is to avoid detection with ZenRows.
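As noted earlier, any Python HTTP client can send this request. Here is a minimal sketch of the same call using only the standard library. The endpoint and parameter names come from the generated script above, while the helper names `build_request_url` and `fetch_html` and the 60-second timeout are assumptions made for illustration.

```python
from urllib.parse import urlencode
from urllib.request import urlopen

API_ENDPOINT = "https://api.zenrows.com/v1/"


def build_request_url(target_url: str, apikey: str) -> str:
    """Encode the target URL and options into a single API request URL."""
    params = {
        "url": target_url,
        "apikey": apikey,
        "js_render": "true",
        "premium_proxy": "true",
    }
    # urlencode percent-encodes the target URL so it survives as one parameter
    return f"{API_ENDPOINT}?{urlencode(params)}"


def fetch_html(target_url: str, apikey: str, timeout: float = 60.0) -> str:
    """Fetch the page through the API; urlopen raises HTTPError on 4xx/5xx."""
    with urlopen(build_request_url(target_url, apikey), timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


# Example usage (performs a real request and needs a valid API key):
# print(fetch_html('https://www.g2.com/products/salesforce-salesforce-sales-cloud/reviews', '<YOUR_ZENROWS_API_KEY>'))
```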

Conclusion

This tutorial showed how to use proxies with Undetected ChromeDriver to bypass blocks. We covered configuring proxy settings and authenticating premium proxies for better scraping outcomes. However, this setup may not be sufficient against advanced anti-bot systems. For guaranteed results against any system, try ZenRows.
