Are you unsure about the right HTTP library for your project? Both Urllib3 and Requests provide easy-to-use solutions for making HTTP requests in Python, but they have different approaches and features.

In this article, we'll explore the characteristics of both libraries and overview when one might be preferred over the other.

Requests vs. urllib3: Which One Should You Choose?

Urllib3 and Requests are both great tools for web scraping in Python. However, your choice depends on your project needs and requirements.

Urllib3 is a Python library for making HTTP requests. It’s also a dependency of its more popular counterpart: Python Requests.

While Python Requests uses Urllib3 under the hood, it wraps these functionalities in a higher-level Pythonic API, which abstracts the complexity associated with HTTP requests.

Feature Comparison: Requests vs urllib3

Here’s a comparison table with the key features of Urllib3 and Requests:

| Consideration | Urllib3 | Requests |
|---|---|---|
| Ease of use | More code verbosity | User-friendly API that handles many tasks automatically |
| Customization | Highly customizable, providing more control for lower-level details | Sufficient customization for common use cases, like headers, session handling, timeouts, etc. |
| Performance | Fast | Fast |
| Popularity | Established, but not as popular as Requests | The most popular Python HTTP client |
| Compatibility | Compatible with a broader range (e.g., Python 2.7, 3.3+) | Supports modern Python versions |
| JSON support | Does not provide native JSON support. However, it can be used with other libraries to add this functionality | Provides built-in JSON support |
| Community support | Strong community and well-structured documentation | Active community with extensive online resources and documentation |

Requests Is More User-Friendly

Requests thrives on its user-friendly design and intuitive API, branding itself as "HTTP for Humans". Let's see why.

Firstly, Requests provides a higher-level API that conceals the intricacies of making HTTP requests. For example, it offers easy-to-use methods for common HTTP use cases, such as get(), post(), put(), and delete(). That reduces the amount of code required for basic requests.

Also, it combines its API with built-in functionalities, including methods and objects, to simplify common HTTP operations like session management, timeouts, JSON handling, etc. Those features eliminate the need for additional libraries or custom implementations depending on your use case.
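As a rough illustration of that convenience, the sketch below prepares two requests without sending them and inspects what Requests filled in. The httpbin.org URLs and parameter values are placeholders picked for this example, and `fetch_json` is a hypothetical helper, not part of the library.

```python
import json

import requests


def fetch_json(url, params=None):
    """Hypothetical helper: GET a URL and return the decoded JSON body."""
    response = requests.get(url, params=params, timeout=10)
    response.raise_for_status()  # raise on 4xx/5xx responses
    return response.json()  # built-in JSON decoding


# Prepare (but do not send) requests to see what Requests handles for us.
get_request = requests.Request(
    "GET", "https://httpbin.org/get", params={"page": 2, "q": "python"}
).prepare()
post_request = requests.Request(
    "POST", "https://httpbin.org/post", json={"name": "example"}
).prepare()

print(get_request.url)  # the query string is appended and encoded
print(post_request.headers["Content-Type"])  # set because json= was used
print(json.loads(post_request.body))  # the dict was serialized to JSON
```

With a working network connection, calling `fetch_json("https://httpbin.org/get", {"q": "python"})` would send the request and return the parsed response in a single line.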

Requests Has More Popular Features than urllib3

The Requests library builds upon Urllib3 and adds its own set of convenient, user-friendly functionalities. That means Requests gives you Urllib3's core capabilities plus extras like authentication helpers, cookie handling, and session management.

Right off the bat, Requests provides a straightforward approach for handling basic authentication with the auth parameter. On the other hand, Urllib3 requires an extra import (the make_headers helper from urllib3.util). Similarly, Requests streamlines cookie handling and session management by offering a session object, which makes it easy to persist cookies across multiple requests.
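To make the difference concrete, here is a minimal sketch that builds the same basic authentication header with each library and then stores a cookie on a Requests session. The credentials, URL, and cookie name are placeholders for illustration, and nothing is sent over the network.

```python
import requests
from urllib3.util import make_headers

# Placeholder credentials and URL for illustration only.
USERNAME, PASSWORD = "user", "pass"
URL = "https://httpbin.org/basic-auth/user/pass"

# Requests: pass a (username, password) tuple through the auth parameter.
prepared = requests.Request("GET", URL, auth=(USERNAME, PASSWORD)).prepare()
requests_auth = prepared.headers["Authorization"]

# Urllib3: build the same header yourself with make_headers.
urllib3_headers = make_headers(basic_auth=f"{USERNAME}:{PASSWORD}")
urllib3_auth = urllib3_headers["authorization"]

print(requests_auth == urllib3_auth)  # both produce the same header value

# Requests: a Session keeps cookies (and other settings) across requests.
session = requests.Session()
session.cookies.set("session_id", "abc123")  # hypothetical cookie
print(session.cookies.get("session_id"))
```

Any request made through `session` afterwards would carry that cookie, whereas with Urllib3 you would typically pass the cookie header yourself on each call.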

Urllib3 Allows Greater HTTP Customization with Its API

Urllib3 is known for its high level of customization and control, which allows you to configure almost every aspect of your requests and responses.

For example, while the PoolManager class automatically handles connection pooling for each host, Urllib3 allows complete customization of the ConnectionPool instances. This can include custom timeouts, retries, and maximum connection limits. This level of control is useful in improving performance, especially when making requests to many hosts.
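A possible configuration along those lines is sketched below. The numbers are illustrative assumptions rather than recommended values, and the request itself is left commented out so the snippet runs without network access.

```python
import urllib3

# Illustrative values; tune them for your own workload.
timeout = urllib3.Timeout(connect=2.0, read=5.0)
retries = urllib3.Retry(
    total=3,  # allow up to three retries per request
    backoff_factor=0.5,  # wait progressively longer between attempts
    status_forcelist=[500, 502, 503, 504],  # also retry on these statuses
)

http = urllib3.PoolManager(
    num_pools=20,  # how many per-host pools to keep cached
    maxsize=5,  # connections kept alive per host
    timeout=timeout,
    retries=retries,
)

# Requests sent through `http` inherit these settings, for example:
# response = http.request("GET", "https://httpbin.org/get")
print(http.connection_pool_kw["maxsize"])
```

Every pool the manager creates for a new host picks up the same timeout, retry, and size settings, so you configure them once instead of per request.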

Additionally, Urllib3 allows you to define custom SSL/TLS configurations, including custom TLS certificates, certificate verification behavior, and other SSL-related parameters. For example, you can supply your own certificate authority bundle by passing its full path when creating a PoolManager.
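The snippet below sketches that setup. `CA_BUNDLE_PATH` is a hypothetical path used only for illustration, so replace it with the location of your own bundle before sending requests.

```python
import urllib3

# Hypothetical path; point this at your own certificate authority bundle.
CA_BUNDLE_PATH = "/path/to/ca-bundle.crt"

http = urllib3.PoolManager(
    cert_reqs="CERT_REQUIRED",  # always verify the server certificate
    ca_certs=CA_BUNDLE_PATH,  # trust only the CAs in this bundle
)

# Example request (not executed here):
# response = http.request("GET", "https://httpbin.org/get")
```

In Requests, the closest equivalent is passing the bundle path through the `verify` parameter or setting `session.verify`.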

On the other hand, while Requests also supports some level of customization, it abstracts away some of these low-level details to provide a user-friendly API.

They Both Have the Same Speed

The speed of Urllib3 and Requests can be similar in practice, as Urllib3 is responsible for HTTP connections in both libraries.

Yes, you read that right.

As implied earlier, Requests uses Urllib3 under the hood. So, they both use the same underlying mechanisms and offer similar performance.

Let's do a quick performance test for the two libraries.

The code snippet below measures the time taken to make a GET request using Urllib3.

```python
import time

import urllib3

# create a PoolManager instance
http = urllib3.PoolManager()

start_time = time.time()  # record the start time
response = http.request('GET', 'https://httpbin.org')  # make the GET request
end_time = time.time()  # record the end time

# calculate the time taken
elapsed_time = end_time - start_time

# optionally, do something with the response and the time taken
# print(f"Response: {response.data}")
print(f"Time taken: {elapsed_time:.2f} seconds")
# Output: Time taken: 1.02 seconds
```

Similarly, here's the same test for the Requests library.

```python
import time

import requests

start_time = time.time()  # record the start time
response = requests.get('https://httpbin.org')  # make the GET request
end_time = time.time()  # record the end time

# calculate the time taken
elapsed_time = end_time - start_time

# optionally, do something with the response and the time taken
# (a Requests response exposes the body as .text or .content, not .data)
# print(f"Response: {response.text}")
print(f"Time taken: {elapsed_time:.2f} seconds")
# Output: Time taken: 1.07 seconds
```

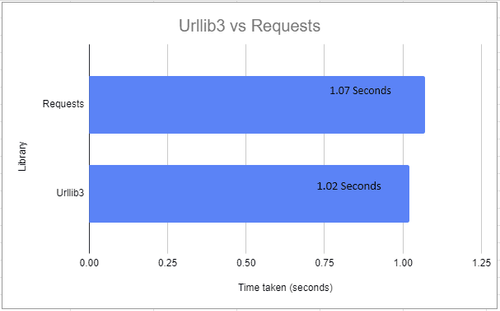

The results above show that the time taken to make a GET request is similar for both libraries. Urllib3 took roughly 1 second to make a GET request, the same as Python Requests. To visualize this better, below is a graph showing the results.
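For a steadier comparison than a single request, one option is to average several runs and reuse connections in both clients. The sketch below is one way to do that; `average_seconds` and `compare` are hypothetical helper names, and the default of five repeats is an arbitrary assumption.

```python
import time

import requests
import urllib3


def average_seconds(func, repeats=5):
    """Call func() `repeats` times and return the mean duration in seconds."""
    durations = []
    for _ in range(repeats):
        start = time.perf_counter()  # monotonic, high-resolution clock
        func()
        durations.append(time.perf_counter() - start)
    return sum(durations) / len(durations)


def compare(url="https://httpbin.org", repeats=5):
    """Time both clients against the same URL, reusing connections."""
    http = urllib3.PoolManager()
    with requests.Session() as session:
        return {
            "urllib3": average_seconds(lambda: http.request("GET", url), repeats),
            "requests": average_seconds(lambda: session.get(url), repeats),
        }
```

Calling `compare()` with network access returns a dictionary of mean timings per library. Using a `Session` on the Requests side keeps the comparison fair, since both clients then reuse pooled connections after the first request.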

Requests Has A Larger Community

Requests is the most popular HTTP client in Python. Even those outside of the Python community will have heard of it. If you're a numbers person, the 2.6 million+ GitHub repositories that depend on it might intrigue you.

But that's not all. The Requests library is actively maintained and receives regular updates to address bugs and introduce new features. Its large community makes it even easier to use and troubleshoot, as you can quickly find resources on any issue.

Best Choice to Avoid Getting Blocked While Scraping

While choosing the right HTTP library for your project is essential, avoiding detection is even more critical. This is because websites are increasingly implementing anti-scraping techniques to regulate bot traffic. So, to stand a chance, you must mimic a real user's request.

Using techniques like user agent spoofing, proxy rotation with Python, and others, you can configure both Requests and Urllib3 to attempt to mirror a natural request. However, this is usually not enough for many websites, especially those using advanced anti-bot measures.
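As a rough sketch of those two techniques, the helpers below pick a random user agent and proxy for each client. The user agent strings and proxy addresses are hypothetical placeholders, and `build_requests_session` and `build_urllib3_manager` are illustrative names, not library functions.

```python
import random

import requests
import urllib3

# Hypothetical values: replace with real user agents and proxies you control.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.0 Safari/605.1.15",
]
PROXIES = ["http://proxy1.example.com:8080", "http://proxy2.example.com:8080"]


def build_requests_session():
    """Return a Requests session with a random user agent and proxy."""
    proxy = random.choice(PROXIES)
    session = requests.Session()
    session.headers.update({"User-Agent": random.choice(USER_AGENTS)})
    session.proxies.update({"http": proxy, "https": proxy})
    return session


def build_urllib3_manager():
    """Return a Urllib3 ProxyManager with a random user agent and proxy."""
    return urllib3.ProxyManager(
        random.choice(PROXIES),
        headers={"User-Agent": random.choice(USER_AGENTS)},
    )
```

Creating a fresh session or manager per batch of requests rotates both values. Requests configures proxies per session through a dictionary, while Urllib3 uses a dedicated `ProxyManager` in place of `PoolManager`.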

Luckily, a web scraping API like ZenRows can take care of bypassing anti-bot measures for you. Plus, it integrates seamlessly with any HTTP client, including Requests and Urllib3.

Conclusion

Urllib3 and Requests are both capable libraries, and your choice depends on your project requirements and customization needs. For use cases requiring extensive customization and fine-tuned connection handling, Urllib3 might be a better fit. But if ease of use is a critical factor, Requests is the better choice.

Overall, to scrape without getting blocked, consider integrating with ZenRows.
