Many websites use AJAX to dynamically display content, meaning web pages add or update data without requiring a full page refresh. While this leads to better user experiences, it poses unique web scraping challenges.

In this tutorial, you'll learn everything you need to know for web scraping AJAX pages.

What is AJAX in Web Scraping?

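AJAX stands for Asynchronous JavaScript and XML. It's a set of web development techniques in which a page requests data from the server asynchronously, in the background, using the XMLHttpRequest object (or the newer Fetch API) instead of triggering a full page load. These are still ordinary HTTP requests, and despite the name, the response is often JSON or an HTML fragment rather than XML.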

The data retrieved from the server can be used to update portions of the web page based on user input and state changes without page refresh. A classic example is a social media feed: when you scroll down (user input), new posts automatically load, courtesy of AJAX.

The challenge for you is that the dynamic content is not present in the website's static HTML. So, if your scraper only downloads the initial HTML of an AJAX page, it won't get the content that loads later. That makes extracting data from an AJAX website different.

The solution? You must identify the AJAX request and replicate it yourself. Let's learn how next!

How to Scrape AJAX Pages

We'll go through the following steps to ensure you retrieve your desired data from any AJAX-driven website:

- Get the tools you need.

- Create your initial scraper.

- Identify AJAX requests.

- Make AJAX requests.

- Parse the response.

- Export data to CSV.

The process is the same for other programming languages, but we'll use Python to scrape AJAX pages. If you prefer, feel free to check out our quick guides for scraping dynamic content in Java, Ruby, PHP, and cURL.

As a target URL, we'll use an infinite scroll demo page, which loads more ecommerce products as the user scrolls down.

Step 1. Get the Tools You Need

To create your AJAX scraper, you need tools that'll enable you to reproduce an AJAX call and parse your response. Here are the modules that should make up your Python stack:

- Python Requests (or another HTTP client).

- BeautifulSoup (or another data parser).

If you need a refresher on these tools, check out our step-by-step tutorial on Python web scraping.

Requests and BeautifulSoup are suitable for scraping unprotected websites. However, more complex scenarios, like AJAX pages with anti-bot protection, might require a headless browser driven by a tool such as Selenium. Setting that up is a bit more involved, so stay tuned for our future tutorials.

Step 2. Create Your Initial Scraper

To set up your scraper, install the above-mentioned tools (Requests and BeautifulSoup) using this command:

pip install requests beautifulsoup4

Then, import the installed libraries, define your target URL, send an HTTP GET request, fetch the HTML content, and parse it using BeautifulSoup.

import requests
from bs4 import BeautifulSoup

# Define your target URL
url = 'https://scrapingclub.com/exercise/list_infinite_scroll/'

# Send an HTTP GET request
response = requests.get(url)

# Fetch the HTML content
html_content = response.text

# Parse the HTML content using Beautiful Soup
soup = BeautifulSoup(html_content, 'html.parser')

# Print formatted HTML content
print(soup.prettify())

When you run that script, you'll get the website's static HTML. It doesn't include the images and other JavaScript-rendered content, just a series of script tags.

<!DOCTYPE html>

<html class="h-full">

<head>

<meta charset="utf-8"/>

<meta content="width=device-width, initial-scale=1" name="viewport"/>

<meta content="Learn to scrape infinite scrolling pages" name="description"/>

<title>

Scraping Infinite Scrolling Pages (Ajax) | ScrapingClub

</title>

#...

....#

<script src="/static/js/runtime.733406a7.437561bb6191.js" type="text/javascript">

</script>

<script src="/static/js/844.c955a86a.8ae7a88d9ece.js" type="text/javascript">

</script>

<script src="/static/js/app.9eae399d.fbbfa467cd03.js" type="text/javascript">

</script>

<script src="https://unpkg.com/infinite-scroll@4/dist/infinite-scroll.pkgd.js">

</script>

<script>

let elem = document.querySelector('.container .grid');

let infScroll = new InfiniteScroll(elem, {

// options

path: 'a[rel="next"]',

append: '.post',

history: false,

});

</script>

Clearly, you can't retrieve the data you're after from this static HTML because it is dynamically loaded through AJAX. To access such data, you need to identify the AJAX request responsible for the content, replicate it using Python Requests, and parse the AJAX response with BeautifulSoup.

Step 3. Identify AJAX Requests for Python Web Scraping

To identify AJAX requests for web scraping, visit the website using your Chrome browser, right-click anywhere on the web page, and select Inspect to open the Developer Tools. You can also use this shortcut: Ctrl+Shift+I (or Cmd+Option+I on Mac).

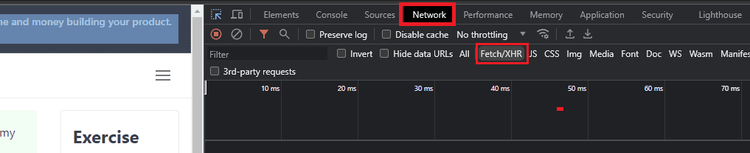

Next, navigate to the Network tab and click the `Fetch/XHR` button. That'll filter the list to show only requests made with XMLHttpRequest or the Fetch API, which are the AJAX requests.

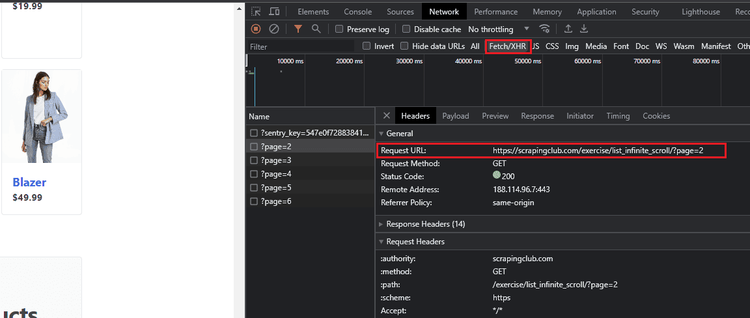

Then, refresh the page and scroll to the bottom. You'll see a new request appear each time new content is loaded into the page. Click on these requests to inspect their details (you'll see the request URL, headers, cookies, etc.).

Now, identify the specific request that fetches the data you're interested in. You can preview each request by copying the Request URL and loading it in a new tab. For example, if you're interested in the second batch of products, it's the first request that appears. By inspecting that request, you'll find a GET request made to the following Request URL: https://scrapingclub.com/exercise/list_infinite_scroll/?page=2.

If you keep scrolling, you'll notice that there are 6 pages in total, and each AJAX request URL has the format [base_url]?page=[page_number], where [base_url] is https://scrapingclub.com/exercise/list_infinite_scroll/.
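The URL pattern described above can be sketched in a few lines of Python. The helper name `build_page_urls` is hypothetical, and the page count of 6 is simply the number observed while scrolling; it may change if the demo site changes.

```python
from urllib.parse import urlencode

BASE_URL = "https://scrapingclub.com/exercise/list_infinite_scroll/"


def build_page_urls(base_url: str, total_pages: int) -> list[str]:
    """Return one AJAX request URL per page, numbered from 1."""
    return [
        f"{base_url}?{urlencode({'page': number})}"
        for number in range(1, total_pages + 1)
    ]


page_urls = build_page_urls(BASE_URL, 6)
for page_url in page_urls:
    print(page_url)
```

Building the query string with `urlencode` rather than by hand keeps the helper safe if a parameter value ever needs escaping.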

Step 4. Make AJAX Requests

After identifying the AJAX requests that fetch the data you're after, the next step is to reproduce them in your scraper. Since they're all GET requests, you can make the same GET requests from your scraper to retrieve the AJAX-loaded content. Here's how:

Define the base URL, and initialize the page_number variable and total_pages. Then, using a while loop, make GET requests to the six request URLs.

The code below retrieves the HTML content directly from each AJAX page and stops the loop at the last page.

import requests
from bs4 import BeautifulSoup

base_url = 'https://scrapingclub.com/exercise/list_infinite_scroll/'
page_number = 1 # Start with the base URL
total_pages = 6

while True:
    # Construct ajax request URL
    url = f'{base_url}?page={page_number}'

    # Make GET request
    response = requests.get(url)

    # Retrieve the response content
    html_content = response.text

    # Move to the next page
    page_number += 1
    if page_number > total_pages:
        break # Stop the loop
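The loop above assumes every request succeeds. As a hedged variant, the sketch below adds a timeout and stops as soon as a page does not return HTTP 200. The function name `fetch_pages` and its `http_get` parameter are hypothetical; `http_get` is meant to be `requests.get` in real use and is passed in only so the logic can be exercised without touching the network.

```python
from typing import Callable, Iterator


def fetch_pages(
    http_get: Callable[..., object],
    base_url: str,
    total_pages: int,
    timeout: float = 10.0,
) -> Iterator[tuple[int, str]]:
    """Yield (page_number, html) for each page that returns HTTP 200.

    `http_get` is expected to behave like `requests.get`: it accepts a URL,
    `params`, and `timeout`, and returns an object exposing `status_code`
    and `text`.
    """
    for page_number in range(1, total_pages + 1):
        response = http_get(base_url, params={"page": page_number}, timeout=timeout)
        if response.status_code != 200:
            # Stop early instead of treating an error page as data.
            break
        yield page_number, response.text


# Usage with the real HTTP client (this performs network requests):
#
#   import requests
#   base_url = 'https://scrapingclub.com/exercise/list_infinite_scroll/'
#   for page_number, html_content in fetch_pages(requests.get, base_url, 6):
#       print(page_number, len(html_content))
```

Passing `params` lets Requests build the `?page=N` query string itself, which produces the same URLs as the f-string in the loop above.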

Let's now parse the content we're after!

Step 5. Parse the Response

To parse the response and grab the product names and prices, select the elements you want using CSS selectors.

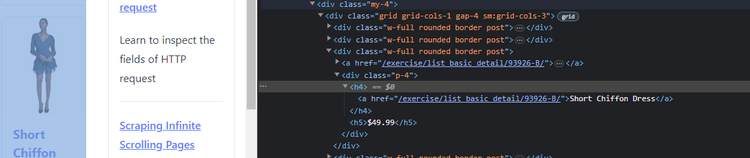

You can find them by inspecting the page elements in the Chrome DevTools. Focus on the Elements tab this time. By selecting products on the page to inspect, you can see that product names are located in div.p-4>h4>a and prices are in div.p-4>h5.

Create a BeautifulSoup object that takes the html_content variable as an argument and uses those selectors.

# Parse the HTML content using Beautiful Soup

soup = BeautifulSoup(html_content, 'html.parser')

# Extract product names and prices

products = soup.select('div.p-4 h4 > a')

prices = soup.select('div.p-4 h5')

Next, loop through products and prices to extract the text content of each element using the get_text() method.

for product, price in zip(products, prices):
    product_name = product.get_text(strip=True)
    product_price = price.get_text(strip=True)
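One caveat with pairing two separate lists: `zip()` matches items purely by position, so a single product without a price would shift every later price onto the wrong name. A minimal alternative sketch, assuming each `div.p-4` element wraps exactly one product as the selectors above suggest, selects the product card first and then reads the name and price inside it. The sample markup and the `extract_products` name are made up for illustration.

```python
from bs4 import BeautifulSoup

# Made-up markup that mimics the structure described above.
sample_html = """
<div class="p-4"><h4><a href="/a/">Short Dress</a></h4><h5>$24.99</h5></div>
<div class="p-4"><h4><a href="/b/">No Price Item</a></h4></div>
<div class="p-4"><h4><a href="/c/">Fitted Dress</a></h4><h5>$34.99</h5></div>
"""


def extract_products(html: str) -> list[dict]:
    """Return one dict per product card, keeping name and price together."""
    soup = BeautifulSoup(html, "html.parser")
    rows = []
    for card in soup.select("div.p-4"):
        name_tag = card.select_one("h4 > a")
        price_tag = card.select_one("h5")
        if name_tag is None:
            continue  # Not a product card
        rows.append({
            "name": name_tag.get_text(strip=True),
            "price": price_tag.get_text(strip=True) if price_tag else None,
        })
    return rows


for row in extract_products(sample_html):
    print(row)
```

With the `zip()` approach, the same sample would pair "No Price Item" with $34.99 and drop "Fitted Dress" entirely, without raising any error.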

Putting everything together and adding a printing instruction, this is your full AJAX web scraping code:

import requests
from bs4 import BeautifulSoup

base_url = 'https://scrapingclub.com/exercise/list_infinite_scroll/'
page_number = 1 # Start with the base URL
total_pages = 6

while True:
    # Construct ajax request URL
    url = f'{base_url}?page={page_number}'

    # Make GET request
    response = requests.get(url)

    # Retrieve the response content
    html_content = response.text

    # Parse the HTML content using Beautiful Soup
    soup = BeautifulSoup(html_content, 'html.parser')

    # Extract product names and prices
    products = soup.select('div.p-4 h4 > a')
    prices = soup.select('div.p-4 h5')

    for product, price in zip(products, prices):
        product_name = product.get_text(strip=True)
        product_price = price.get_text(strip=True)
        print(f'Product: {product_name} | Price: {product_price}')

    print('-' * 50)

    # Move to the next page (only once per iteration, after parsing)
    page_number += 1
    if page_number > total_pages:
        break # Stop the loop

Run it, and you should have all the data you targeted.

Product: Short Dress | Price: $24.99

Product: Patterned Slacks | Price: $29.99

Product: Short Chiffon Dress | Price: $49.99

Product: Off-the-shoulder Dress | Price: $59.99

Product: V-neck Top | Price: $24.99

Product: Short Chiffon Dress | Price: $49.99

Product: V-neck Top | Price: $24.99

Product: V-neck Top | Price: $24.99

Product: Short Lace Dress | Price: $59.99

Product: Fitted Dress | Price: $34.99

--------------------------------------------------

Product: V-neck Jumpsuit | Price: $69.99

Product: Chiffon Dress | Price: $54.99

Product: Skinny High Waist Jeans | Price: $39.99

Product: Super Skinny High Jeans | Price: $19.99

Product: Oversized Denim Jacket | Price: $19.99

#.....

# truncated for brevity's sake

#.....

Remark: This result is partial for brevity's sake.

Bingo! You've scraped your first AJAX website.

Depending on the complexity of your target website, you may also need to include the same headers as the XHR request.

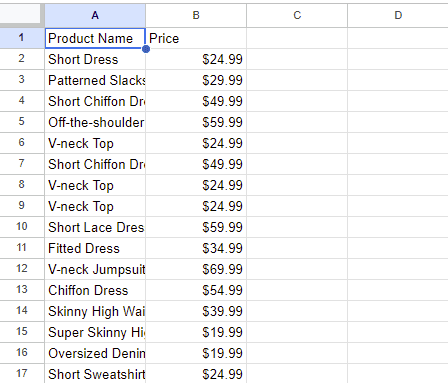
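As a rough sketch of what sending matching headers could look like, the helper below builds a header set resembling a browser-issued XHR request. Every value here is illustrative rather than taken from the target site: copy the real headers from the request shown in the DevTools Network tab. The names `build_xhr_headers` and `fetch_with_headers` are hypothetical.

```python
def build_xhr_headers(referer: str) -> dict[str, str]:
    """Return headers resembling a browser-issued XHR request.

    The values are placeholders; replace them with the ones your
    browser actually sends for the request you are replicating.
    """
    return {
        "User-Agent": (
            "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 "
            "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
        ),
        "Accept": "text/html, */*; q=0.01",
        "X-Requested-With": "XMLHttpRequest",
        "Referer": referer,
    }


def fetch_with_headers(url: str, referer: str) -> str:
    """Send a GET request that carries the XHR-style headers."""
    import requests  # Imported here so build_xhr_headers has no dependencies

    response = requests.get(url, headers=build_xhr_headers(referer), timeout=10)
    response.raise_for_status()
    return response.text


headers = build_xhr_headers("https://scrapingclub.com/exercise/list_infinite_scroll/")
print(headers["X-Requested-With"])
```

Not every site checks these headers, so it is reasonable to start without them and add only the ones that turn out to matter.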

Step 6. Export Data to CSV

If needed, you can also export the data extracted through AJAX web scraping to CSV.

For that, import the csv module, initialize an empty product_data list, and append each product's data to it.

import requests
from bs4 import BeautifulSoup
import csv

base_url = 'https://scrapingclub.com/exercise/list_infinite_scroll/'
page_number = 1 # Start with the base URL
total_pages = 6
product_data = []

while page_number <= total_pages:
    # Construct ajax request URL
    url = f'{base_url}?page={page_number}'

    # Make GET request
    response = requests.get(url)

    # Retrieve the response content
    html_content = response.text

    # Parse the HTML content using Beautiful Soup
    soup = BeautifulSoup(html_content, 'html.parser')

    # Extract product names and prices
    products = soup.select('div.p-4 h4 > a')
    prices = soup.select('div.p-4 h5')

    # Collect product information
    for product, price in zip(products, prices):
        product_name = product.get_text(strip=True)
        product_price = price.get_text(strip=True)

        # Add data to the product_data list
        product_data.append({'name': product_name, 'price': product_price})

    # Move to the next page
    page_number += 1

Then, create a function named write_to_csv that writes this data to a CSV file. It'll open the file, define the field names, and use the csv.DictWriter class to write the data to the CSV.

def write_to_csv(product_data):
    # Open the CSV file for writing
    with open('products.csv', 'w', newline='', encoding='utf-8') as csvfile:
        # Define the field names for the CSV header
        fieldnames = ['Product Name', 'Price']

        # Create a DictWriter object
        writer = csv.DictWriter(csvfile, fieldnames=fieldnames)

A CSV file conventionally starts with a header row containing the field names, but csv.DictWriter doesn't write one on its own. So, still within the function, write the header row explicitly using the writer.writeheader() method.

Next, loop through the product_data list and write a new row for each dictionary using the writer.writerow() method.

        #....
        #...

        # Write CSV header
        writer.writeheader()

        # Loop through the product data list
        for product in product_data:
            # Write a new row with product name and price
            writer.writerow({'Product Name': product['name'], 'Price': product['price']})
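The header behavior can be checked in isolation. The sketch below writes two sample rows (taken from the output shown earlier) to an in-memory buffer instead of a file, which is an illustrative substitution so the resulting text can be inspected directly.

```python
import csv
import io

rows = [
    {"name": "Short Dress", "price": "$24.99"},
    {"name": "Patterned Slacks", "price": "$29.99"},
]

# An in-memory text buffer stands in for the products.csv file.
buffer = io.StringIO(newline="")
writer = csv.DictWriter(buffer, fieldnames=["Product Name", "Price"])

writer.writeheader()  # Without this call, no header row is written
for row in rows:
    writer.writerow({"Product Name": row["name"], "Price": row["price"]})

csv_text = buffer.getvalue()
print(csv_text)
```

The keys passed to `writerow()` must match the `fieldnames` exactly; by default, `DictWriter` raises a `ValueError` for a key it doesn't recognize.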

Lastly, call the write_to_csv function to export the data to CSV.

Putting it all together, here's the final code:

import requests
from bs4 import BeautifulSoup
import csv


def write_to_csv(product_data):
    # Open the CSV file for writing
    with open('products.csv', 'w', newline='', encoding='utf-8') as csvfile:
        # Define the field names for the CSV header
        fieldnames = ['Product Name', 'Price']

        # Create a DictWriter object
        writer = csv.DictWriter(csvfile, fieldnames=fieldnames)

        # Write CSV header
        writer.writeheader()

        # Loop through the product data list
        for product in product_data:
            # Write a new row with product name and price
            writer.writerow({'Product Name': product['name'], 'Price': product['price']})


base_url = 'https://scrapingclub.com/exercise/list_infinite_scroll/'
page_number = 1 # Start with the base URL
total_pages = 6
product_data = []

while page_number <= total_pages:
    # Construct ajax request URL
    url = f'{base_url}?page={page_number}'

    # Make GET request
    response = requests.get(url)

    # Retrieve the response content
    html_content = response.text

    # Parse the HTML content using Beautiful Soup
    soup = BeautifulSoup(html_content, 'html.parser')

    # Extract product names and prices
    products = soup.select('div.p-4 h4 > a')
    prices = soup.select('div.p-4 h5')

    # Collect product information
    for product, price in zip(products, prices):
        product_name = product.get_text(strip=True)
        product_price = price.get_text(strip=True)

        # Add data to the product_data list
        product_data.append({'name': product_name, 'price': product_price})

    # Move to the next page
    page_number += 1

# Call the function to write data to CSV
write_to_csv(product_data)

This creates a CSV file named products.csv in your project folder.

Congrats, you've accomplished AJAX web scraping!

The Challenge of Getting Blocked Easily

Scraping numerous pages can easily lead to getting blocked. Websites often employ anti-scraping measures like rate-limiting and IP banning to regulate traffic.
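One common way to cope with rate limiting is to slow down and retry when the server answers with HTTP 429 (Too Many Requests). The sketch below is a minimal illustration under assumptions: the function name `polite_get`, the retry count, and the delays are all hypothetical, and `http_get` stands in for `requests.get` so the logic can be tried without network access.

```python
import time
from typing import Callable


def polite_get(
    http_get: Callable[..., object],
    url: str,
    max_attempts: int = 3,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
):
    """Retry a GET request with exponential backoff while the server answers 429."""
    response = None
    for attempt in range(max_attempts):
        response = http_get(url, timeout=10)
        if response.status_code != 429:
            return response
        if attempt < max_attempts - 1:
            # Wait base_delay, then twice as long after each further failure.
            sleep(base_delay * 2 ** attempt)
    # Still rate-limited after the last attempt: return that response as-is.
    return response


# Usage with the real HTTP client (this performs network requests):
#
#   import requests
#   response = polite_get(requests.get, 'https://scrapingclub.com/exercise/list_infinite_scroll/?page=2')
```

Backing off only helps with rate limits; it does nothing against an outright IP ban.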

A quick solution is to route your requests through a web scraping proxy. Better still, rotating between several proxies spreads your requests across multiple IP addresses, making them look like they're coming from different users. To go further, you might be interested in our tutorial on implementing proxies with Python Requests.
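Proxy rotation can be sketched as a round-robin over a list of proxy addresses. The addresses below are placeholders that will not work as written, and the helper names `proxy_rotation` and `fetch_via_proxies` are hypothetical; the `proxies` argument of `requests.get` is the part that comes from the Requests library.

```python
from itertools import cycle

# Placeholder proxy addresses; replace them with proxies you have access to.
PROXIES = [
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
]


def proxy_rotation(proxies):
    """Yield a Requests-style `proxies` mapping for each proxy, round-robin."""
    for proxy in cycle(proxies):
        yield {"http": proxy, "https": proxy}


def fetch_via_proxies(urls, proxies):
    """Fetch each URL through the next proxy in the rotation."""
    import requests  # Imported here so proxy_rotation has no dependencies

    rotation = proxy_rotation(proxies)
    return [
        requests.get(url, proxies=next(rotation), timeout=10).text
        for url in urls
    ]


rotation = proxy_rotation(PROXIES)
for _ in range(3):
    print(next(rotation)["http"])
```

Round-robin is the simplest policy; a real setup would likely also drop proxies that keep failing.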

Conclusion

While AJAX enhances user experience by enabling websites to render content dynamically, it also introduces complexities for web scraping. Dynamic content is not present in a website's static HTML. Therefore, you must identify the AJAX requests responsible for the data you're after and replicate them.

It's also important to be aware of the increasing risk of getting blocked, as web servers can flag your activity as suspicious and deny you access.

Frequent Questions

What Is AJAX Used for?

AJAX is used for developing dynamic websites where web pages can interact with servers and update content based on user input and state changes. Examples include a "load more" button and an infinite scroll page, where more content loads as you scroll to the bottom.

What Is the Difference between HTTP and AJAX?

The difference between HTTP and AJAX is that HTTP is a protocol for communication between a browser and a web server. In contrast, AJAX is not a protocol but a set of web development techniques that allow websites to dynamically update portions of a web page by making asynchronous requests to a server.
