Ever faced the frustration of slow and inefficient web scraping? You're not alone. Traditional web scrapers often process tasks synchronously (one after the other) and can feel like "waiting for paint to dry". But with asynchronous web scraping in Python, you can extract data from multiple sources concurrently, boosting speed and scalability.

In this guide, you'll learn how to harness the power of asyncio and AIOHTTP for fast and scalable web scraping. We'll show you how asynchronous web scraping outshines the synchronous approach. Let's dive in.

What Is Asynchronous Web Scraping?

In web scraping, where milliseconds matter, the synchronous approach can feel like a diligent librarian fetching one book at a time from the shelves. But what if you could simultaneously summon an army, each grabbing a book?

That's asynchronous web scraping in a nutshell: making requests concurrently and extracting data from multiple sources at once.

Asynchronous web scraping in Python allows your script to send multiple requests without waiting for each response before firing the next one, which improves speed and scalability. Some developers report speedups of more than 50 times when switching from synchronous to asynchronous scraping, although the actual gain depends on how many requests you make and how long each one spends waiting on the network.

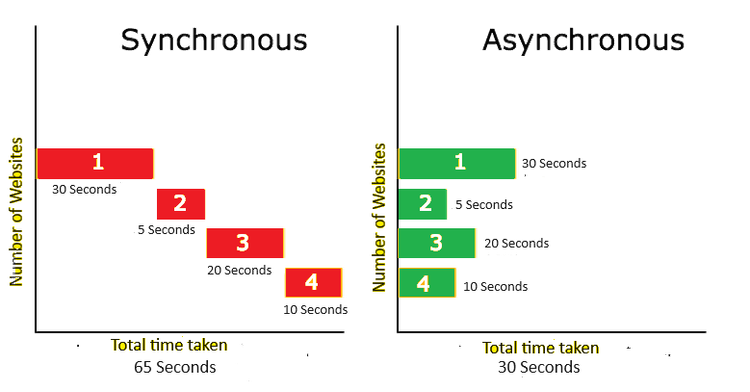

Let's look at a quick illustration to compare the two approaches further.

Here, we can easily visualize the difference between synchronous and asynchronous scripts. The graph on the left shows four requests scheduled synchronously: the script processes one request at a time and only moves on to the next when the current one is complete. On the right, all four requests are in flight at the same time, so the script finishes much sooner.

How Do You Do Asynchronous Web Scraping in Python?

Extracting data from multiple sources in Python typically involves looping through URLs and requesting each one with the Requests library. For asynchronous web scraping, you need an event loop instead: a scheduler that runs many tasks concurrently, switching to another task whenever the current one is waiting on I/O, such as a network response.

In Python, asyncio.run() creates an event loop for you, runs your top-level coroutine on it, and closes the loop when the coroutine finishes. AIOHTTP, in turn, lets you make asynchronous HTTP requests within that loop.
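To see the event loop at work before any networking is involved, here's a minimal sketch that uses asyncio.sleep() as a stand-in for a slow HTTP request. The worker() and demo() coroutines and their delays are hypothetical, chosen only for illustration. Notice that the coroutine started second finishes first: awaiting sleep() hands control back to the loop instead of blocking it.

```python
import asyncio

log: list[str] = []


async def worker(name: str, delay: float) -> None:
    # Record the start, then pause. Awaiting asyncio.sleep() returns control
    # to the event loop, which runs other tasks in the meantime.
    log.append(f"{name} start")
    await asyncio.sleep(delay)  # stand-in for waiting on a network response
    log.append(f"{name} end")


async def demo() -> None:
    # create_task() schedules both coroutines on the running event loop.
    slow = asyncio.create_task(worker("slow", 0.2))
    fast = asyncio.create_task(worker("fast", 0.1))
    await slow
    await fast


# asyncio.run() creates the event loop, runs demo(), then closes the loop.
asyncio.run(demo())
print(log)
```

Both workers start before either finishes, which is exactly the behavior AIOHTTP relies on when many requests are waiting on the network at once.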

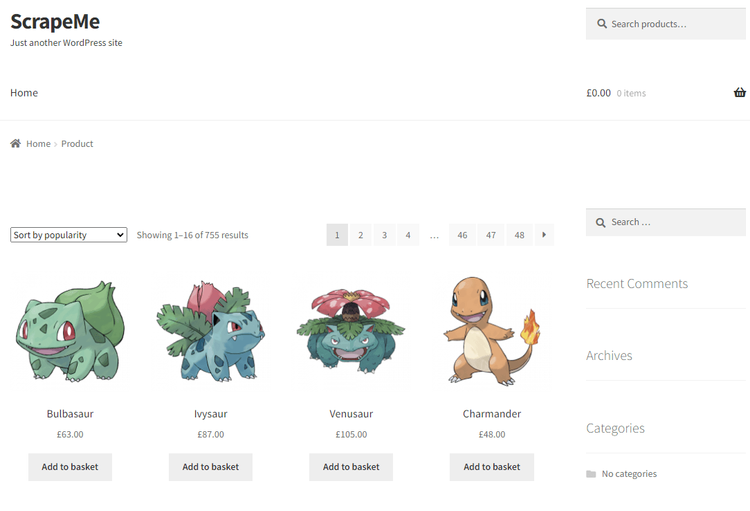

Let's see how asyncio and AIOHTTP work using ScrapeMe Live Shop as the target URL.

Step 1: Scrape Your First Page with AIOHTTP

To get started, install the AIOHTTP library using the following command.

```bash
pip install aiohttp
```

Next, import the necessary dependencies (AIOHTTP, asyncio, and time) and create an asynchronous main function.

```python
import aiohttp
import asyncio
import time

async def main():
    ...  # scraping logic goes here
```

Remark: asyncio and time are part of the Python standard library, so they don't require additional installations.

Within the main function, define the target URL (https://scrapeme.live/shop/) and record the time at the start so we can track the performance of this script.

Then include your scraping logic; for now, call a fetch function, which we'll define later. Finally, track the current time and calculate the time taken.

```python
async def main():
    url = "https://scrapeme.live/shop/"

    # Time Tracking: Start Time
    start_time = time.time()

    # Fetching content asynchronously
    content = await fetch(url)

    # Printing content
    print(content)

    # Time Tracking: End Time
    end_time = time.time()

    # Calculating and printing the time taken
    print(f"Time taken: {end_time - start_time} seconds")
```

Now, define the fetch function. Inside this function, create an asynchronous HTTP session using aiohttp.ClientSession and use the session to make a GET request to the target URL. Then, retrieve and return the text content.

```python
async def fetch(url):
    # Create HTTP session
    async with aiohttp.ClientSession() as session:
        # Make GET request using session
        async with session.get(url) as response:
            # Return text content
            return await response.text()
```

Lastly, put everything together and initiate the execution of the main() function using asyncio.run(main()). You should have the following complete code.

```python
import aiohttp
import asyncio
import time

async def main():
    url = "https://scrapeme.live/shop/"

    # Time Tracking: Start Time
    start_time = time.time()

    # Fetching content asynchronously
    content = await fetch(url)

    # Printing content
    print(content)

    # Time Tracking: End Time
    end_time = time.time()

    # Calculating and printing the time taken
    print(f"Time taken: {end_time - start_time} seconds")

async def fetch(url):
    # Create HTTP session
    async with aiohttp.ClientSession() as session:
        # Make GET request using session
        async with session.get(url) as response:
            # Return text content
            return await response.text()

# Run the main function
asyncio.run(main())
```

Run it, and your result will be similar to the one below.

```text
<!doctype html>
#..
<title>Products &...; ScrapeMe</title>
#..
Time taken: 1.26.. seconds
```

Congrats, you made your first asynchronous request.

Step 2: Scrape Multiple Pages Asynchronously

Having dipped our toes into asynchronous web scraping in Python, let's take it up a notch.

For multiple pages, you create a separate task for each URL you want to scrape and then group them using asyncio.gather(). Each task represents an asynchronous operation that retrieves data from a specific page. gather() schedules all of them to run concurrently and returns their results as a list, in the same order as the tasks you passed in.
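Before applying this to real pages, here's a sketch with no network access that makes the ordering guarantee concrete. fake_fetch() is a hypothetical stand-in for a page request that simply sleeps, and the task list is built with a list comprehension rather than an append loop (the effect is the same). The later URLs get shorter delays and finish first, yet gather() still returns results in the order the coroutines were passed in, and the total time stays close to the longest single delay rather than the sum of all three.

```python
import asyncio
import time


async def fake_fetch(url: str, delay: float) -> str:
    # Hypothetical stand-in for fetching a page: sleeps instead of doing HTTP.
    await asyncio.sleep(delay)
    return f"<html>{url}</html>"


async def scrape(urls: list[str]) -> list[str]:
    # Delays of 0.3 s, 0.2 s, and 0.1 s: the last URL finishes first...
    tasks = [fake_fetch(url, 0.3 - 0.1 * i) for i, url in enumerate(urls)]
    # ...but gather() returns results in the order of `tasks`.
    return await asyncio.gather(*tasks)


urls = ["page-1", "page-2", "page-3"]
start = time.perf_counter()
pages = asyncio.run(scrape(urls))
elapsed = time.perf_counter() - start
print(pages, f"{elapsed:.2f}s")
```

This ordering is what lets the tutorial's scripts safely pair each result with its URL using zip(urls, htmls).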

Let's see this in practice.

To create tasks, first define a function that takes a session and a URL as parameters and retrieves data from a page. This function should make a GET request using an AIOHTTP session and return the HTML content.

```python
async def fetch_page(session, url):
    # Make GET request using session
    async with session.get(url) as response:
        # Return HTML content
        return await response.text()
```

Next, define a main() function. Within the function, initialize a list of URLs, record the time at the start, create an AIOHTTP session, and initialize the task list.

```python
async def main():
    # Initialize a list of URLs
    urls = ["https://scrapeme.live/shop/", "https://scrapeme.live/shop/page/2/", "https://scrapeme.live/shop/page/3/"]

    # Time Tracking: Start Time
    start_time = time.time()

    # Create an AIOHTTP session
    async with aiohttp.ClientSession() as session:
        # Initialize tasks list
        tasks = []
```

Remark: for this example, we built the URL list from the first three pagination pages of the target shop. Check out our Python web crawler guide to learn more about URL discovery.

Then, loop through URLs to create a separate task for each and append it to the tasks list. Lastly, group the tasks using asyncio.gather() to execute them concurrently.

```python
    # ...
    async with aiohttp.ClientSession() as session:
        # ...
        # Loop through URLs and append tasks
        for url in urls:
            tasks.append(fetch_page(session, url))

        # Group and Execute tasks concurrently
        htmls = await asyncio.gather(*tasks)
```

What's left is recording the end time and processing the HTML responses. For now, let's just print them; we'll do more with these responses in the next section. Put everything together, and your complete code should look like this.

```python
import aiohttp
import asyncio
import time

async def fetch_page(session, url):
    # Make GET request using session
    async with session.get(url) as response:
        # Return HTML content
        return await response.text()

async def main():
    # Initialize a list of URLs
    urls = ["https://scrapeme.live/shop/", "https://scrapeme.live/shop/page/2/", "https://scrapeme.live/shop/page/3/"]

    # Time Tracking: Start Time
    start_time = time.time()

    # Create an AIOHTTP session
    async with aiohttp.ClientSession() as session:
        # Initialize tasks list
        tasks = []

        # Loop through URLs and append tasks
        for url in urls:
            tasks.append(fetch_page(session, url))

        # Group and Execute tasks concurrently
        htmls = await asyncio.gather(*tasks)

    # Time Tracking: End Time
    end_time = time.time()

    # Print or process the fetched HTML content
    for url, html in zip(urls, htmls):
        print(f"Content from {url}:\n{html}\n")

    # Calculate and print the time taken
    print(f"Time taken: {end_time - start_time} seconds")

# Run the main function
asyncio.run(main())
```

Run it, and you'll have a result like the one below.

```text
Content from https://scrapeme.live/shop/:
<!doctype html>
#..
<title>Products &..; ScrapeMe</title>
************************************************
Content from https://scrapeme.live/shop/page/2/:
<!doctype html>
#..
<title>Products &..; Page 2 &..; ScrapeMe</title>
************************************************
Content from https://scrapeme.live/shop/page/3/:
<!doctype html>
#..
<title>Products &..; Page 3 &..; ScrapeMe</title>
Time taken: 1.09.. seconds
```

Awesome!
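The script above assumes every request succeeds. By default, if any awaitable passed to asyncio.gather() raises an exception (a timeout or a connection error, for example), gather() propagates that first exception to the caller and you lose access to the pages that did succeed. Passing return_exceptions=True makes gather() place exceptions in the results list instead. In this sketch, flaky_fetch() is a hypothetical stand-in for fetch_page() that always fails on page 2, so the example runs without network access.

```python
import asyncio


async def flaky_fetch(url: str) -> str:
    # Hypothetical fetch that fails for one URL, standing in for a real
    # network error such as aiohttp.ClientError or asyncio.TimeoutError.
    await asyncio.sleep(0.01)
    if "page/2" in url:
        raise ConnectionError(f"failed to fetch {url}")
    return f"<html>{url}</html>"


async def scrape(urls: list[str]) -> tuple[dict[str, str], dict[str, Exception]]:
    # return_exceptions=True returns exceptions as items in the results
    # list instead of raising the first one, so successful pages are kept.
    results = await asyncio.gather(
        *(flaky_fetch(url) for url in urls), return_exceptions=True
    )
    pages: dict[str, str] = {}
    errors: dict[str, Exception] = {}
    for url, result in zip(urls, results):
        if isinstance(result, Exception):
            errors[url] = result
        else:
            pages[url] = result
    return pages, errors


urls = [
    "https://scrapeme.live/shop/",
    "https://scrapeme.live/shop/page/2/",
    "https://scrapeme.live/shop/page/3/",
]
pages, errors = asyncio.run(scrape(urls))
print(sorted(pages), list(errors))
```

You could then retry the URLs collected in errors or log them for later, while still processing the pages that loaded.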

Step 3: Parse the Data with BeautifulSoup

Now that you've retrieved the HTML content of each page, the next step is to extract the data of interest. For this example, we'll scrape each product's name, price, and image source on each page using BeautifulSoup.

To do that, first, ensure you have BeautifulSoup installed. You can do so using the following command.

```bash
pip install beautifulsoup4
```

Next, import BeautifulSoup and modify the fetch_page() function so that it parses the HTML with BeautifulSoup and returns the parsed document.

```python
from bs4 import BeautifulSoup

async def fetch_page(session, url):
    # Make GET request using session
    async with session.get(url) as response:
        # Retrieve HTML content
        html_content = await response.text()
        # Parse HTML content using BeautifulSoup
        soup = BeautifulSoup(html_content, 'html.parser')
        # Return parsed HTML
        return soup
```

Now, within the main() function, extract the relevant information, in this case, name, price, and image.

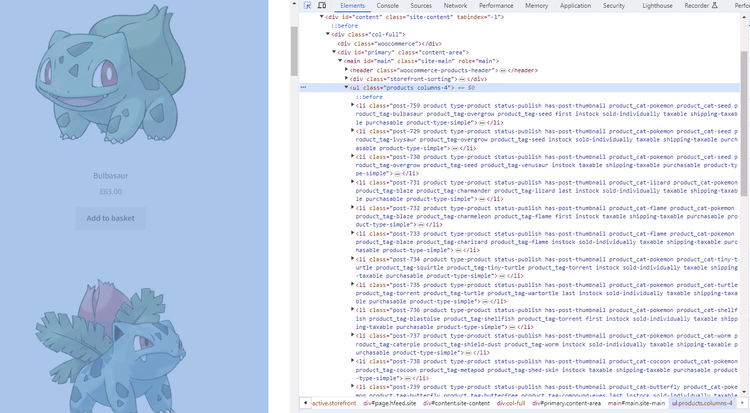

To do that, first inspect the page to identify the elements that contain the data you're interested in. You can use the developer tools of any browser; in Chrome, right-click on the page and select Inspect.

All the products are wrapped in a <ul> with class products. Inside the <ul> are <li> elements, each containing the product name (an <h2> with class woocommerce-loop-product__title), the price (a <span> with class amount), and the image (an <img> tag).

Knowing these elements, we can extract the desired information with BeautifulSoup: first, find the <ul> with class products and all the <li> elements inside it; then, iterate through each <li> to retrieve the name, price, and image.

Here's the new main() function.

```python
async def main():
    # ... (previous code remains unchanged)

    # Process the extracted information
    for url, soup in zip(urls, htmls):
        # Find products in <ul>
        product_list = soup.find('ul', class_='products')

        # Find all <li>s
        products = product_list.find_all('li')

        # Iterate through products and extract name, price, and image of each.
        for product in products:
            name = product.find('h2').text
            price = product.find('span', class_='amount').text
            image = product.find('img')['src']
            print(f"Product from {url}:\nName: {name}\nPrice: {price}\nImage: {image}\n")

    # ... (rest of the code remains unchanged)
```

Putting everything together, we have the following complete code.

```python
import aiohttp
import asyncio
import time
from bs4 import BeautifulSoup

async def fetch_page(session, url):
    # Make GET request using session
    async with session.get(url) as response:
        # Retrieve HTML content
        html_content = await response.text()
        # Parse HTML content using BeautifulSoup
        soup = BeautifulSoup(html_content, 'html.parser')
        # Return parsed HTML
        return soup

async def main():
    # Initialize a list of URLs
    urls = ["https://scrapeme.live/shop/", "https://scrapeme.live/shop/page/2/", "https://scrapeme.live/shop/page/3/"]

    # Time Tracking: Start Time
    start_time = time.time()

    # Create an AIOHTTP session
    async with aiohttp.ClientSession() as session:
        # Initialize tasks list
        tasks = []

        # Loop through URLs and append tasks
        for url in urls:
            tasks.append(fetch_page(session, url))

        # Group and Execute tasks concurrently
        htmls = await asyncio.gather(*tasks)

    # Time Tracking: End Time
    end_time = time.time()

    # Process the extracted information
    for url, soup in zip(urls, htmls):
        # Find products in <ul>
        product_list = soup.find('ul', class_='products')

        # Find all <li>s
        products = product_list.find_all('li')

        # Iterate through products and extract name, price, and image of each.
        for product in products:
            name = product.find('h2').text
            price = product.find('span', class_='amount').text
            image = product.find('img')['src']
            print(f"Product from {url}:\nName: {name}\nPrice: {price}\nImage: {image}\n")

    # Calculate and print the time taken
    print(f"Time taken: {end_time - start_time} seconds")

# Run the main function
asyncio.run(main())
```

Run this code, and your result should look like this.

```text
Product from https://scrapeme.live/shop/:
Name: Bulbasaur
Price: £63.00
Image: https://scrapeme.live/wp-content/uploads/2018/08/001-350x350.png
#.. truncated for brevity
*************************************************************
Product from https://scrapeme.live/shop/page/2/:
Name: Pidgeotto
Price: £84.00
Image: https://scrapeme.live/wp-content/uploads/2018/08/017-350x350.png
#.. truncated for brevity
*************************************************************
Product from https://scrapeme.live/shop/page/3/:
Name: Clefairy
Price: £160.00
Image: https://scrapeme.live/wp-content/uploads/2018/08/035-350x350.png
#.. truncated for brevity
*************************************************************
Time taken: 1.03.. seconds
```

Well done!
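The parsing code in this step assumes every lookup succeeds. If the <ul> is missing (for example, on an error page), or a product lacks a price or image, the loop fails with an AttributeError or a TypeError. One way to make it more robust is to move parsing into a regular synchronous function that checks each lookup before using it; parsing is CPU work, so it doesn't need to be async. In this sketch, parse_products() and SAMPLE_HTML are hypothetical: the sample markup is a trimmed-down imitation of the structure described above, not the page's real HTML.

```python
from bs4 import BeautifulSoup

# Hypothetical sample mirroring the structure described above; the second
# <li> deliberately lacks a price and an image.
SAMPLE_HTML = """
<ul class="products">
  <li>
    <img src="https://scrapeme.live/wp-content/uploads/2018/08/001-350x350.png">
    <h2 class="woocommerce-loop-product__title">Bulbasaur</h2>
    <span class="price"><span class="amount">£63.00</span></span>
  </li>
  <li>
    <h2 class="woocommerce-loop-product__title">Placeholder without price</h2>
  </li>
</ul>
"""


def parse_products(html: str) -> list[dict[str, str]]:
    soup = BeautifulSoup(html, "html.parser")
    product_list = soup.find("ul", class_="products")
    if product_list is None:
        # Layout changed or the server returned an error page.
        return []
    products = []
    for li in product_list.find_all("li"):
        name = li.find("h2", class_="woocommerce-loop-product__title")
        price = li.find("span", class_="amount")
        image = li.find("img")
        # Skip items with a missing field instead of raising an exception.
        if name is None or price is None or image is None:
            continue
        products.append({
            "name": name.get_text(strip=True),
            "price": price.get_text(strip=True),
            "image": image.get("src", ""),
        })
    return products


print(parse_products(SAMPLE_HTML))
```

With this approach, you could keep the Step 2 version of fetch_page() that returns raw HTML and call parse_products() on each string that gather() returns.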

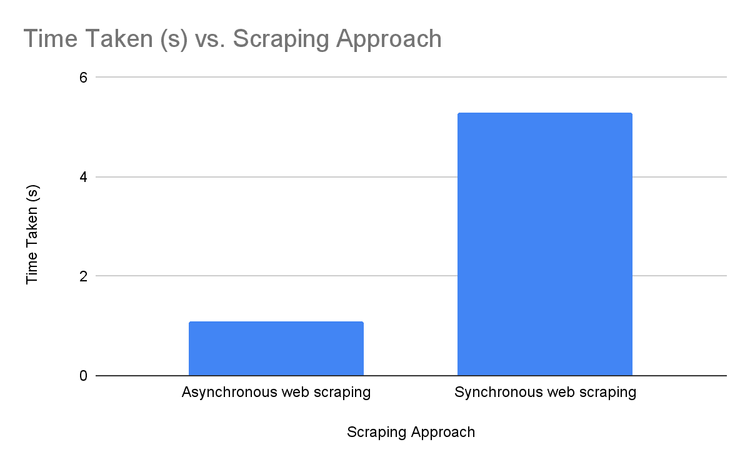

Benchmark: Comparing the Asynchronous vs. Synchronous Performance

We've seen asynchronous web scraping in Python in action, but how does it compare to the synchronous approach? To find out, let's compare the performance of both. Here's a basic synchronous script that scrapes the same three URLs with Requests.

```python
import requests
import time

urls = ["https://scrapeme.live/shop/", "https://scrapeme.live/shop/page/2/", "https://scrapeme.live/shop/page/3/"]

start_time = time.time()

for url in urls:
    response = requests.get(url)
    html_content = response.text

end_time = time.time()

print(f"Time taken: {end_time - start_time} seconds")

# Result
# Time taken: 5.29.. seconds
```

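From the result above, you can see that the difference is significant. The synchronous script took roughly 5 seconds to scrape the same URLs that our asynchronous script fetched in about a second, making the asynchronous version about five times faster in this run. Exact timings will vary with your network and the server's response times.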

To help you visualize the difference better, below is a graph showing the results of both approaches.

Advanced Concepts for Asynchronous Web Scraping

Let's explore some advanced concepts to enhance your asynchronous web scraping skills.

Concurrency

Asynchronous web scraping in Python lets you make many HTTP requests almost simultaneously (concurrently). That can become a problem: web servers often flag high-frequency requests as suspicious activity and may block them.

To avoid getting blocked, you need to control the rate at which you send requests so you don't overload the server or trigger its anti-bot defenses. This is known as throttling.

One way to throttle your web scraper is to introduce delays between requests. In a threaded scraper, that can mean calling time.sleep() in each thread; learn more in our guide on parallel scraping in Python with concurrency. In asyncio code, use await asyncio.sleep() instead, because time.sleep() would block the entire event loop.

In asyncio code, you don't need the threading module at all. Besides await asyncio.sleep(), asyncio provides a primitive designed specifically for limiting concurrency: Semaphore.

An asyncio.Semaphore is created with a fixed number of slots, say five. Each coroutine acquires the semaphore before sending its request and releases it afterward; once all five slots are taken, the next coroutine waits until one frees up. This guarantees that no more than a predetermined number of requests are in flight at the same time.
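A simple way to apply such delays in asyncio code is to space out when requests start. The Throttle class below is a hypothetical helper, not part of asyncio or AIOHTTP: it uses an asyncio.Lock so callers take turns, and asyncio.sleep() to wait until at least interval seconds have passed since the previous request started. As before, asyncio.sleep() also stands in for the actual HTTP call so the sketch runs offline.

```python
import asyncio


class Throttle:
    """Hypothetical helper: at most one request start per `interval` seconds."""

    def __init__(self, interval: float) -> None:
        self.interval = interval
        self._lock = asyncio.Lock()
        self._next_start = 0.0

    async def wait(self) -> None:
        # The lock makes callers queue up, so start times are spaced out.
        async with self._lock:
            now = asyncio.get_running_loop().time()
            if now < self._next_start:
                # Non-blocking pause: other coroutines keep running meanwhile.
                await asyncio.sleep(self._next_start - now)
            self._next_start = max(now, self._next_start) + self.interval


async def fake_fetch(throttle: Throttle, url: str, starts: list[float]) -> str:
    await throttle.wait()
    starts.append(asyncio.get_running_loop().time())
    await asyncio.sleep(0.05)  # stand-in for the real aiohttp request
    return url


async def demo() -> list[float]:
    throttle = Throttle(interval=0.1)
    starts: list[float] = []
    urls = [f"https://scrapeme.live/shop/page/{n}/" for n in range(2, 6)]
    await asyncio.gather(*(fake_fetch(throttle, u, starts) for u in urls))
    return starts


starts = asyncio.run(demo())
gaps = [b - a for a, b in zip(starts, starts[1:])]
print([round(g, 2) for g in gaps])
```

This controls how often requests start; it doesn't by itself cap how many are in flight when individual requests are slow.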
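Here's a minimal sketch of a Semaphore capping concurrency at three. To keep it runnable without network access, asyncio.sleep() stands in for the aiohttp request; the fetch_with_limit() helper and its stats counter, which records the peak number of simultaneous "requests", are hypothetical additions for illustration.

```python
import asyncio


async def fetch_with_limit(sem: asyncio.Semaphore, url: str, stats: dict[str, int]) -> str:
    # Only `limit` coroutines can be inside this block at once; the rest
    # wait at `async with sem` until a slot frees up.
    async with sem:
        stats["active"] += 1
        stats["peak"] = max(stats["peak"], stats["active"])
        await asyncio.sleep(0.05)  # stand-in for session.get(url) with aiohttp
        stats["active"] -= 1
        return url


async def scrape(urls: list[str], limit: int) -> tuple[list[str], int]:
    sem = asyncio.Semaphore(limit)
    stats = {"active": 0, "peak": 0}
    results = await asyncio.gather(*(fetch_with_limit(sem, u, stats) for u in urls))
    return results, stats["peak"]


urls = [f"https://scrapeme.live/shop/page/{n}/" for n in range(2, 12)]
results, peak = asyncio.run(scrape(urls, limit=3))
print(len(results), peak)
```

In the tutorial's scraper, you would wrap the `async with session.get(url) as response:` block inside fetch_page() in `async with sem:`. AIOHTTP also offers a connection-level cap through aiohttp.TCPConnector(limit=...), passed to ClientSession(connector=...).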

From Static Lists to Queues

Asynchronous web scraping in Python can become even more dynamic by moving from static lists to queues.

While static lists have a predetermined set of URLs or tasks, queues provide a flexible way to deal with dynamic environments where URLs are generated on the fly or the number of tasks is unknown.

A queue allows you to enqueue and dequeue tasks as they become available or are completed. This is particularly valuable when implementing features like request limiting or prioritization.
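Here's a minimal sketch of that pattern with asyncio.Queue: a fixed pool of worker coroutines pulls URLs off the queue, and any newly discovered URLs are pushed back onto it. FAKE_LINKS and fake_fetch_links() are hypothetical stand-ins for fetching a page and extracting its links, so the example runs without network access.

```python
import asyncio

# Hypothetical link graph standing in for pages that link to other pages.
FAKE_LINKS = {
    "/shop/": ["/shop/page/2/", "/shop/page/3/"],
    "/shop/page/2/": ["/shop/page/3/", "/shop/page/4/"],
    "/shop/page/3/": [],
    "/shop/page/4/": [],
}


async def fake_fetch_links(url: str) -> list[str]:
    await asyncio.sleep(0.01)  # stand-in for fetching and parsing the page
    return FAKE_LINKS.get(url, [])


async def worker(queue: asyncio.Queue, seen: set[str], visited: list[str]) -> None:
    while True:
        url = await queue.get()
        try:
            visited.append(url)
            for link in await fake_fetch_links(url):
                # Enqueue each newly discovered URL only once.
                if link not in seen:
                    seen.add(link)
                    queue.put_nowait(link)
        finally:
            queue.task_done()


async def crawl(start: str, num_workers: int = 3) -> list[str]:
    queue: asyncio.Queue = asyncio.Queue()
    seen = {start}
    visited: list[str] = []
    queue.put_nowait(start)
    workers = [asyncio.create_task(worker(queue, seen, visited)) for _ in range(num_workers)]
    await queue.join()  # returns once every queued URL has been processed
    for w in workers:
        w.cancel()  # workers loop forever, so stop them explicitly
    await asyncio.gather(*workers, return_exceptions=True)
    return visited


visited = asyncio.run(crawl("/shop/"))
print(sorted(visited))
```

queue.join() only returns after task_done() has been called for every item ever put on the queue, and child URLs are enqueued before their parent is marked done, so the crawl stops exactly when no new URLs turn up. For prioritization, asyncio.PriorityQueue offers the same interface and retrieves the lowest-priority-value entries first.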

Check out our guide on Python web crawling for a tutorial on switching to queues.

Making Python Requests Async-friendly

Although the Requests library is blocking and isn't designed for asyncio, you can still use it to send requests concurrently.

For this, you'll need Python's threading module or the concurrent.futures module.

With threading, you create a new thread for each URL you want to scrape and then start all the threads so they run concurrently, each one performing its request independently.

However, the Global Interpreter Lock (GIL) allows only one thread to execute Python bytecode at a time. Since a thread releases the GIL while it waits for a network response, threads still speed up I/O-bound requests, but CPU-heavy work, such as parsing large HTML documents, won't run in parallel across threads.
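Here's a sketch using concurrent.futures.ThreadPoolExecutor, which creates and manages the threads for you. blocking_fetch() is a hypothetical stand-in that sleeps instead of calling requests.get(), so the timing is predictable and no network access is needed.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def blocking_fetch(url: str) -> str:
    # Hypothetical stand-in for requests.get(url).text: time.sleep() blocks
    # the thread like a network call does and releases the GIL while waiting.
    time.sleep(0.2)
    return f"<html>{url}</html>"


urls = [
    "https://scrapeme.live/shop/",
    "https://scrapeme.live/shop/page/2/",
    "https://scrapeme.live/shop/page/3/",
]

start = time.perf_counter()
# Each URL runs in its own worker thread; map() yields results in input order.
with ThreadPoolExecutor(max_workers=len(urls)) as executor:
    pages = list(executor.map(blocking_fetch, urls))
elapsed = time.perf_counter() - start

# Total time is close to one delay (0.2 s), not the sum of all three (0.6 s).
print(len(pages), f"{elapsed:.2f}s")
```

To use it with Requests, replace the body of blocking_fetch() with something like `return requests.get(url, timeout=10).text`. Inside asyncio code, `await asyncio.to_thread(requests.get, url)` (Python 3.9+) runs the same blocking call in a thread without stalling the event loop.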

Conclusion

Asynchronous web scraping offers a fast, scalable, and efficient approach to fetching data from the web. However, high-frequency traffic can result in blocks and IP bans. So, remember to throttle your requests.

Yet, getting blocked remains a common web scraping challenge as websites continuously implement new techniques to prevent bot traffic. For tips on overcoming this challenge, refer to our guide on web scraping without getting blocked.

Frequent Questions

What Is the Use of AIOHTTP?

AIOHTTP is used to make asynchronous HTTP requests, particularly in scenarios where you need to perform multiple concurrent requests without blocking the execution of other tasks. This makes it suitable for web scraping, API requests, and any situation involving I/O-bound operations.

What Is the Difference between HTTPX and AIOHTTP?

The difference between HTTPX and AIOHTTP lies in their scope. HTTPX is a full-featured HTTP client that supports both synchronous and asynchronous requests. In contrast, AIOHTTP is async-only and, besides its client, also includes a web server framework. Also, HTTPX supports HTTP/2 (an optional feature installed with the httpx[http2] extra), whereas AIOHTTP's client supports only HTTP/1.1.

What Is the Meaning of Asyncio?

asyncio is a Python standard library module for writing asynchronous code using the async/await syntax. It serves as the foundation for many asynchronous frameworks, letting tasks run concurrently without blocking one another. It's built around an event loop, which manages the execution of asynchronous tasks.
