Are you looking for ways to scrape without getting blocked? Try Botasaurus, a new web scraping library with cool anti-bot bypass features.

In this article, you'll learn how to scrape websites with the Botasaurus library and see how it works to enhance data extraction efficiency.

What Can You Do With Botasaurus?

Botasaurus is a web scraping library built to bypass anti-bot systems. It features browser functionalities for interacting with dynamic web pages.

The library lets you authenticate proxies over SSL, install Chrome extensions, and route requests through Google to enhance anti-bot evasion. While alternatives such as Undetected ChromeDriver, Selenium Stealth, and Puppeteer Stealth are more popular, Botasaurus has a higher success rate at bypassing anti-bots like Cloudflare.

Botasaurus patches the Selenium WebDriver and the standard Requests library to add anti-detection functionality. Unlike Selenium, Botasaurus doesn't require a separate driver installation. It automatically installs the ChromeDriver the first time you run it, simplifying the initial setup for beginners.

Tutorial: How to Scrape With Botasaurus

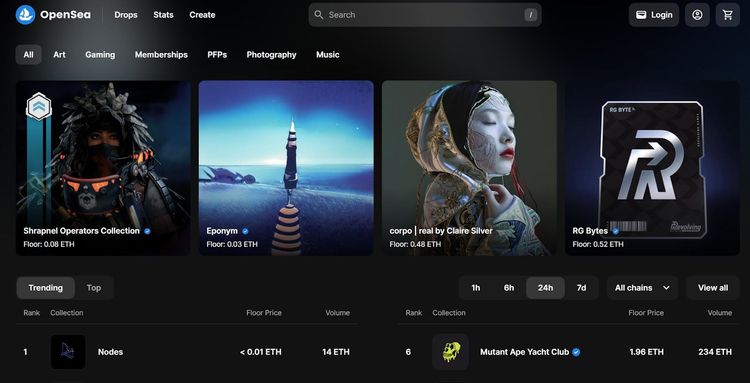

Botasaurus supports CSS selectors, has a built-in standard Requests library (HTTP client), and Selenium WebDriver automation capabilities. Let's see it in action by scraping OpenSea, an NFT website.

Here's the target web page layout:

Prerequisites

This tutorial uses Python 3.12.1 on a Windows operating system. Install the latest version from the Python website if you haven't already.

Once Python is up and running, install the Botasaurus library using pip:

pip install botasaurus

Now, create a new project folder with a scraper.py file and follow the instructions in the next section to extract the full-page HTML.

Get the Target Page Full HTML

The first step to scraping with Botasaurus is opening the target website. Although the library supports direct access using the WebDriver's get method, it also allows you to route requests through Google to mimic a legitimate user.

You'll use its Google routing functionality to open the target web page and extract its content.

To begin, define a scraper function with the browser decorator and visit the target website through Google. The driver parameter is typed as AntiDetectDriver to indicate that your scraper requires anti-bot bypass capability. The function also accepts a data argument, and whatever it returns is saved to an output JSON file:

# import all modules
from botasaurus import *

@browser
def scraper(driver: AntiDetectDriver, data: dict):
    # navigate to the target website
    driver.google_get("https://opensea.io")

Extract the text content from the page's HTML and print the result in your console. Return the extracted data to store it in a JSON file inside an output directory. Finally, execute the scraper function:

@browser
def scraper(driver: AntiDetectDriver, data: dict):
    # ...

    # retrieve the html element
    content = driver.text("html")

    # print the extracted content
    print(content)

    # save the data as a JSON file in output/scraper.json
    return {
        "content": content
    }

# execute the scraper function
scraper()

Combine both snippets to get the following complete code:

# import all modules
from botasaurus import *

@browser
def scraper(driver: AntiDetectDriver, data: dict):
    # navigate to the target website
    driver.google_get("https://opensea.io")

    # retrieve the html element
    content = driver.text("html")

    # print the extracted content
    print(content)

    # save the data as a JSON file in output/scraper.json
    return {
        "content": content
    }

# execute the scraper function
scraper()

Executing the code above for the first time installs the ChromeDriver and creates additional folders and files, including the output folder, to collect the extracted data.
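Once the run finishes, you may want to load the saved result back into Python. The sketch below assumes the file lands at output/scraper.json, as the comment in the scraper states, and that it holds the returned dictionary. Since the exact file layout may differ between versions, the helper also accepts a list of records. The name load_scraper_output is a hypothetical helper, not part of Botasaurus:

```python
import json
from pathlib import Path


def load_scraper_output(path="output/scraper.json"):
    """Load the JSON file written after a scraper run (the path is an assumption)."""
    raw = json.loads(Path(path).read_text(encoding="utf-8"))
    # Normalize to a list of records so callers only handle one shape.
    return raw if isinstance(raw, list) else [raw]


output_file = Path("output/scraper.json")
if output_file.exists():
    for record in load_scraper_output(output_file):
        if isinstance(record, dict):
            # Show only the first 200 characters of the scraped text.
            print(str(record.get("content", ""))[:200])
else:
    print("No output file yet; run scraper.py first.")
```

Normalizing to a list keeps the calling code the same whether the scraper returned one record or several.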

Add a Proxy to Botasaurus

Some websites limit the volume of requests an IP address can send to their server in a particular period. This limitation may block your scraper if you attempt to extract data from multiple pages.

Proxies prevent potential IP bans by sending your request through another IP address, so the server thinks you're requesting from another location.

Botasaurus has a very straightforward proxy configuration. Let's see how it works by requesting https://httpbin.io/ip, a website that returns your current IP address.

In this tutorial, let's use a free proxy from the Free Proxy List. Free proxies are short-lived, so the one used here may no longer work by the time you read this. If so, swap it for a fresh one. Choose HTTPS proxies since they work with secure (HTTPS) and unsecured (HTTP) websites.

Specify the proxy address inside the browser decorator. Define a scraper function, request the target website, and print its content to view the current IP address:

# import all modules
from botasaurus import *

# specify a proxy address inside the browser decorator
@browser(proxy="http://185.217.136.67:1337")
def scraper(driver: AntiDetectDriver, data: dict):
    # navigate to the target website
    driver.get("https://httpbin.io/ip")

    # retrieve the html element content
    ip_address = driver.text("body")

    # print the IP address
    print(ip_address)

# execute the scraper function
scraper()

Executing the code twice outputs the following IP addresses with different ports:

{
    "origin": "185.217.136.67:57998"
}

{
    "origin": "185.217.136.67:39944"
}
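If you'd rather confirm the result in code than by eye, here's a minimal sketch that compares the reported origin with the proxy host. It assumes the page body is the JSON shown above, with an IPv4 origin in the "ip:port" form. The function name origin_matches_proxy is made up for this illustration:

```python
import json
from urllib.parse import urlsplit


def origin_matches_proxy(response_body, proxy_url):
    """Return True if the IP in the "origin" field equals the proxy host."""
    origin = json.loads(response_body)["origin"]
    # The sample output has the form "ip:port"; keep only the IP part.
    origin_ip = origin.rsplit(":", 1)[0] if ":" in origin else origin
    return origin_ip == urlsplit(proxy_url).hostname


sample_body = '{"origin": "185.217.136.67:57998"}'
print(origin_matches_proxy(sample_body, "http://185.217.136.67:1337"))
```

A False result would suggest the request didn't go through the proxy you configured.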

You just configured a free proxy with Botasaurus. Great job!

Remember that free proxies only work for testing and aren't recommended for large-scale projects due to their unreliability. You'll need premium web scraping proxies for real-life scraping projects. These require you to add your authentication credentials.
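Authenticated proxies are commonly written as a single URL with the credentials embedded. The sketch below builds such a URL with the standard library. The username, password, host, and port are placeholders, build_proxy_url is a hypothetical helper, and it assumes your proxy provider and the browser decorator's proxy argument accept the user:password@host:port form:

```python
from urllib.parse import quote


def build_proxy_url(username, password, host, port, scheme="http"):
    """Build a scheme://user:pass@host:port proxy URL with escaped credentials."""
    # Percent-encode credentials so characters like "@" or ":" don't break the URL.
    user = quote(username, safe="")
    pwd = quote(password, safe="")
    return f"{scheme}://{user}:{pwd}@{host}:{port}"


# Placeholder values; replace them with the details from your proxy provider.
proxy_url = build_proxy_url("my_user", "p@ss:word", "proxy.example.com", 8080)
print(proxy_url)
```

You would then pass the resulting string as the proxy value, the same way the free proxy address was passed earlier.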

Deep Dive Into Botasaurus' Features

Botasaurus has other features that make it suitable for web scraping. Let's discuss them in this section.

Stealth and Evasion

- Dynamic User-Agent switching: Botasaurus supports User Agent autorotation to generate fake user agent strings. It lets you mimic a different browser environment per request and makes your scraper harder to detect.

- Stealth request mode: Whether you use Botasaurus' Requests-based mode or its patched Selenium WebDriver, you can opt into AntiDetectRequest, AntiDetectDriver, or explicit stealth flags to scrape unnoticed.

- SSL support for authenticated proxies: Botasaurus allows credential encryption during proxy authentication to protect sensitive information, including passwords and usernames, from leaking while communicating with the proxy server.

- Bypass Cloudflare with HTTP requests: In addition to the stealth arguments, Botasaurus offers a straightforward Google routing method for accessing Cloudflare-protected websites.
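To make the User-Agent rotation idea concrete, here's a small standalone sketch of the general technique. It isn't Botasaurus' internal implementation: the User-Agent strings are example values, and rotating_headers is a hypothetical helper that hands out a different User-Agent header each time you ask for one:

```python
import itertools

# Example User-Agent strings; any realistic, up-to-date values would do.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
]


def rotating_headers(user_agents):
    """Yield request headers forever, cycling through the given User-Agents."""
    for user_agent in itertools.cycle(user_agents):
        yield {"User-Agent": user_agent}


headers_source = rotating_headers(USER_AGENTS)
for _ in range(4):
    # The fourth header wraps around to the first User-Agent again.
    print(next(headers_source)["User-Agent"])
```

A round-robin cycle is the simplest choice here. Picking a random entry per request would work just as well.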

Efficiency and Speed

- Parallel scraping: Botasaurus allows you to scrape multiple web pages simultaneously with minimal configurations, significantly speeding up the data harvesting process. This feature makes it suitable for complex scraping tasks.

- Caching: Caching in Botasaurus lets you temporarily store previously scraped data in memory, reducing the total load time for subsequent requests.

- Sitemap: Botasaurus has a one-liner feature for discovering and extracting a website's sitemap so that you can navigate the URLs you want to scrape more efficiently.

- Block Resources: Botasaurus lets you block specific resources, including videos, images, and animations. This functionality lets you load text content faster by skipping memory-heavy assets during scraping.
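The parallel scraping and caching ideas above can be sketched with the standard library alone. This is a generic illustration of the two techniques, not Botasaurus' own API: fetch is a stand-in that fakes a page download, and scrape_all and CALLS are names invented for the example:

```python
from concurrent.futures import ThreadPoolExecutor
from functools import lru_cache

CALLS = []  # records which URLs were actually "fetched"


@lru_cache(maxsize=None)
def fetch(url):
    """Stand-in for a real page download; cached so each URL is fetched once."""
    CALLS.append(url)
    return f"<html>content of {url}</html>"


def scrape_all(urls, workers=4):
    """Fetch several URLs concurrently and return results in input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(fetch, urls))


urls = ["https://example.com/a", "https://example.com/b", "https://example.com/c"]
first = scrape_all(urls)
second = scrape_all(urls)  # served from the cache, no new fetches
print(len(first), len(second), len(CALLS))
```

The second call returns the same pages without touching the fake downloader again, which is the saving a cache gives you on repeated requests.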

Convenience and Flexibility

- Use any Chrome extension: With Botasaurus, you can install any Chrome extension for a particular browser instance. To do so, specify the extension's URL in the browser decorator.

- Debugging support: Botasaurus helps you debug errors mid-scraping by pausing the browser so you can see why a piece of functionality fails. For example, you can keep the browser instance open to find out why the driver doesn't respond to a scroll or click action.

Limitations of Botasaurus

Botasaurus' web scraping capabilities are powerful, but it has a few limitations that may cause you to be blocked during scraping. Let's examine them.

1. Lack of Advanced Browser Fingerprinting Management

Botasaurus doesn't fully address variations in browser environments while imitating different devices. Some information still leaks to anti-bots that analyze the user's environment, so they can detect and block your scraper during browser fingerprinting.

For instance, the browser version and platform may change if you dynamically switch the User Agent. However, Botasaurus doesn't update the User-Agent Client Hints (Sec-CH-UA) and platform headers to match, so the request headers end up inconsistent.
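Here's a simplified sketch of the kind of consistency check an anti-bot system could run on those headers. The platform detection is deliberately naive and only an assumption about how such a check might look. Both function names are hypothetical:

```python
def platform_from_user_agent(user_agent):
    """Guess the platform name a User-Agent string claims (simplified)."""
    if "Windows" in user_agent:
        return "Windows"
    if "Macintosh" in user_agent:
        return "macOS"
    # Android User-Agents also contain "Linux", so check Android first.
    if "Android" in user_agent:
        return "Android"
    if "Linux" in user_agent:
        return "Linux"
    return "Unknown"


def headers_are_consistent(headers):
    """Check that Sec-CH-UA-Platform agrees with the User-Agent platform."""
    claimed = platform_from_user_agent(headers.get("User-Agent", ""))
    # Client hint values are sent as quoted strings, e.g. "Windows".
    hinted = headers.get("Sec-CH-UA-Platform", "").strip('"')
    return claimed == hinted


# The User-Agent was switched to macOS, but the client hint still says Windows.
rotated = {
    "User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36",
    "Sec-CH-UA-Platform": '"Windows"',
}
print(headers_are_consistent(rotated))
```

A mismatch like this one is the kind of inconsistency that can give a scraper away.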

2. Ineffectiveness in Server Environments

Botasaurus is only fully efficient while working in a local development environment, since it can access the browser APIs locally. However, it fails to access the browser APIs directly in a remote setting, so it underperforms on remote servers like AWS.

This barrier makes Botasaurus unsuitable for server-based web scraping integrations or deployments.

3. Documentation and Under-the-Hood Operations Clarity

Botasaurus' documentation is detailed regarding the use of its features. However, it doesn't explain the underlying mechanisms behind its core functionalities, so it may be difficult for users without code analysis experience to understand how it works.

This documentation setback also discourages developers from contributing to improving Botasaurus' anti-bot bypass capabilities.

4. Dependency on External Sites for Evasion

Sometimes, you might need to route the Botasaurus request through an external website like Google to access protected websites.

That approach is less sustainable than built-in options. If the external website adjusts its security measures against bot access, your scraper is likely to get blocked.

Best Alternative to Botasaurus

Botasaurus' limitations can get you blocked if you scrape large data volumes and heavily protected websites. But don't get discouraged. You just need a smart way to solve this issue.

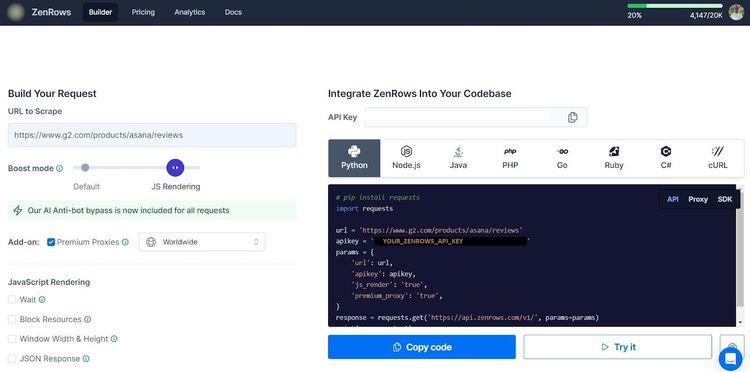

The best way to overcome Botasaurus' limitations and effectively bypass all anti-bots is to use a web scraping API like ZenRows. It fixes your request headers, auto-rotates premium proxies, and bypasses CAPTCHAs and other anti-bot mechanisms, so you can scrape any website without getting blocked.

ZenRows also features JavaScript instructions for headless browser functionality, allowing you to replace memory-intensive browser automation tools like Botasaurus and Selenium.

Let's use ZenRows to scrape a Cloudflare-protected website, G2 Review.

Sign up to open the Request Builder. Enter the target URL in the link box, set the Boost mode to JS Rendering, and activate Premium Proxies. Select the API request mode and choose Python as your programming language. Copy and paste the generated code into your script.

The generated code should look like this in your scraper script:

# pip install requests
import requests

params = {
    "url": "https://www.g2.com/products/asana/reviews",
    "apikey": "<YOUR_ZENROWS_API_KEY>",
    "js_render": "true",
    "premium_proxy": "true",
}

response = requests.get("https://api.zenrows.com/v1/", params=params)

print(response.text)

The code extracts the protected website's HTML, as shown:

<!DOCTYPE html>
<html>
<head>
    <meta charset="utf-8" />
    <link href="https://www.g2.com/images/favicon.ico" rel="shortcut icon" type="image/x-icon" />
    <title>Asana Reviews, Pros + Cons, and Top Rated Features</title>
</head>
<body>
    <!-- other content omitted for brevity -->
</body>

Congratulations! You've just used ZenRows to bypass Cloudflare Turnstile to extract content from a protected web page.
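If you're curious what the request looks like on the wire, the sketch below rebuilds the final URL from the same endpoint and parameters using only the standard library, without sending anything. The helper name build_request_url is hypothetical, and the API key is the same placeholder as above:

```python
from urllib.parse import urlencode

API_ENDPOINT = "https://api.zenrows.com/v1/"


def build_request_url(target_url, api_key, js_render=True, premium_proxy=True):
    """Return the full request URL with the target URL percent-encoded."""
    params = {
        "url": target_url,
        "apikey": api_key,
        # The generated code sends these flags as the strings "true"/"false".
        "js_render": str(js_render).lower(),
        "premium_proxy": str(premium_proxy).lower(),
    }
    return f"{API_ENDPOINT}?{urlencode(params)}"


print(build_request_url("https://www.g2.com/products/asana/reviews", "<YOUR_ZENROWS_API_KEY>"))
```

Notice that the target URL is percent-encoded inside the query string. Passing the params dictionary to requests.get handles that encoding for you.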

Conclusion

In this article, you've seen how to scrape a website with the Botasaurus library. Here's a recap of everything you've learned:

- What you can do with the Botasaurus library.

- Extracting a full-page HTML with Botasaurus via Google routing.

- Proxy configuration with Botasaurus.

- Botasaurus' key features and how they enhance web scraping.

- The limitations of Botasaurus.

As mentioned earlier, Botasaurus' limitations make it less effective against advanced anti-bot measures. We recommend using ZenRows, an all-in-one web scraping solution, to bypass any anti-bot system and scrape at scale without getting blocked. Try ZenRows for free!
