When a request fails, retry logic repeats it a few times within a given time frame. The idea is to make further attempts at the same failed request, hoping it will eventually succeed. C# retry logic, whether hand-written or built with the Polly library, is a key feature every .NET web scraper should have for handling errors!

In this guide, you'll see how to implement retry logic in both vanilla C# and with the Polly library through real-world code examples.

Let's dive in!

What to Know to Build the C# Polly Retry Logic

The C# Polly retry logic is about repeating a task for a specified number of attempts. That's useful when making error-prone online requests, especially for C# web scraping. Now, the questions you might be wondering are:

- Should I always retry a request or just in some cases?

- When should I retry it?

- How many attempts should I make?

- Should I wait some time between attempts?

- How much time should I wait?

Follow the section below to find answers to those questions and see some code examples.

Types of Failed Requests

No online service works forever and all the time. Like any other software, sites and API backends are prone to errors. In particular, two scenarios can lead to a failed request:

- A timeout: When the server does not respond in the expected time.

- An error: When the server returns an error response.

Learn how to address these two C# retry scenarios!

Timed Out

A request can time out for two reasons:

- The server is taking too long to respond and decides to interrupt the connection with the client.

- The HTTP client declares the request as timed out because the server didn't respond in the expected time.

The second is the most common cause, as HTTP clients usually impose a timeout to avoid waiting forever for a server response. For example, HttpClient has a default timeout of 100,000 milliseconds (100 seconds). To change it, assign a new value to the Timeout property as follows:

HttpClient httpClient = new HttpClient();
// set the internal timeout to 5 minutes
httpClient.Timeout = TimeSpan.FromMinutes(5);

HttpClient raises a TaskCanceledException when it hits a timeout.
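
Since TaskCanceledException is also thrown when your own code cancels a request, it can help to tell the two cases apart. The sketch below is one possible heuristic, not an official API: `IsTimeout` is a hypothetical helper name, and it relies on the assumption that you run on .NET 5 or later, where HttpClient wraps a TimeoutException inside the TaskCanceledException when its Timeout elapses.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical helper: guesses whether a TaskCanceledException comes from
// an HttpClient timeout rather than from a cancellation the caller requested.
static bool IsTimeout(TaskCanceledException ex, CancellationToken callerToken = default)
{
    // .NET 5+ marks timeouts with an inner TimeoutException
    if (ex.InnerException is TimeoutException)
    {
        return true;
    }

    // fallback for older runtimes: if the caller didn't cancel, assume a timeout
    return !callerToken.IsCancellationRequested;
}

// Possible usage inside a catch block:
// catch (TaskCanceledException ex) when (IsTimeout(ex, token)) { /* wait and retry */ }
```

With a check like this, you would retry only on real timeouts and let deliberate cancellations propagate.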

In most cases, the reason for a request timeout is server overload. The server machine is too busy with the current load and can't produce a response quickly. The best approach to avoid timeouts is to wait a few seconds before retrying the request.

async Task<HttpResponseMessage> RequestWithRetryOnTimeout(HttpClient client, string url, int delay = 30)
{
    try
    {
        // try to make the request
        return await client.GetAsync(url);
    }
    catch (TaskCanceledException)
    {
        // wait for a while after a timeout error
        // before repeating the request
        await Task.Delay(TimeSpan.FromSeconds(delay));
    }
    // repeat the request if it failed because of a timeout
    return await client.GetAsync(url);
}

If this isn't enough, increase the HTTP client's internal timeout value.

Returned an Error

The reasons why an HTTP request can fail are:

- Network errors: Connection failures and/or network slowdowns.

- Client errors: The client sends an unexpected request to the server (e.g., the requested page doesn't exist).

- Server errors: The server has a bug in its logic and cannot produce a correct response (e.g., fatal error responses).

When dealing with network errors, wait for the network to become reliable again. In case of a network error, HttpClient will throw an HttpRequestException:

try
{
    var response = await httpClient.GetAsync(url);
}
catch (HttpRequestException e)
{
    // no connectivity, ...
}

With client errors, repeating the request without changing anything would result in the same error, so fix the request before sending it again. As for server errors, it may be a temporary problem or a serious issue that only their developers can fix. In both of those cases, the solution is to try again at increasing intervals.

HttpClient doesn't raise an exception with client and server errors. You can understand the cause by looking at the response.StatusCode property, which returns the HTTP status code of the response. Find out more in the next section!

Error Status Codes

When making online requests, especially for web scraping, the HTTP status codes to consider are:

- 3xx redirection codes: They are not error codes; they specify that further action must be taken to complete the request. In web scraping, they indicate that you must follow redirects to reach the desired page.

- 4xx client error codes: Tell the client (e.g., the web scraper) that it made a mistake while forging the request. Proper handling is essential for effective data scraping.

Take a look at the table below to explore the most common HTTP status error codes in web scraping:

| Common Error | Explanation |
|---|---|
| 400 Bad Request | The request is malformed or contains invalid parameters. |
| 401 Unauthorized | Authentication credentials are missing or incorrect (e.g., you need to log in). |
| 403 Forbidden | The server understands the request but denies access to the resource. |
| 404 Not Found | The server couldn't find the requested resource. |
| 429 Too Many Requests | The client has made too many requests to the server in a short amount of time. Learn how to bypass rate limiting while scraping. |
| 500 Internal Server Error | The server couldn't generate the response because of an internal error. |
| 502 Bad Gateway | The server received an invalid response from the upstream server. |
| 503 Service Unavailable | The server isn't ready to handle the request. |
| 504 Gateway Timeout | The server didn't receive a timely response from the upstream server. |

Understanding and handling these errors in your scraping process ensures reliable data extraction. Check out the MDN page on HTTP response status codes for more information.
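
To make the table above actionable, you could group the status codes by whether a retry has a chance of helping. The snippet below is only a sketch: `IsWorthRetrying` is a hypothetical helper, and treating 429 and the listed 5xx codes as retryable (and everything else as not) is an assumption you may want to adjust for your target site.

```csharp
using System;
using System.Net;

// Hypothetical helper: returns true for status codes that usually signal
// a temporary condition (assumption: 429 plus the 5xx codes in the table).
static bool IsWorthRetrying(HttpStatusCode code) => code switch
{
    HttpStatusCode.TooManyRequests => true,     // 429
    HttpStatusCode.InternalServerError => true, // 500
    HttpStatusCode.BadGateway => true,          // 502
    HttpStatusCode.ServiceUnavailable => true,  // 503
    HttpStatusCode.GatewayTimeout => true,      // 504
    _ => false                                  // 400, 401, 403, 404, ...
};

Console.WriteLine(IsWorthRetrying(HttpStatusCode.ServiceUnavailable)); // True
Console.WriteLine(IsWorthRetrying(HttpStatusCode.NotFound));           // False
```

Client errors such as 400 or 404 are left out on purpose, since repeating the same request would likely produce the same response.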

Number of Retries

Retrying a request allows you to recover from failures like network issues or temporary server unavailability. Determining the right amount of retry attempts is essential to:

- Protect against prolonged outages.

- Avoid overwhelming the server with too many requests.

You can implement a simple retry mechanism in vanilla C# as below:

async Task<HttpResponseMessage> RequestWithRetry(HttpClient client, string url, int maxRetryAttempts = 5, int delay = 30)
{
    // repeat the request up to maxRetryAttempts times
    for (int attempt = 1; attempt <= maxRetryAttempts; attempt++)
    {
        try
        {
            // try to make the request
            return await client.GetAsync(url);
        }
        catch (HttpRequestException e)
        {
            // log the failure, then wait before the next attempt
            Console.WriteLine($"Attempt {attempt} failed: {e.Message}");
            await Task.Delay(TimeSpan.FromSeconds(delay));
        }
    }
    throw new Exception($"Request to '{url}' failed after {maxRetryAttempts} attempts!");
}

Bear in mind that some errors may demand different retry logic than others. For example, you should retry up to 8 times for 429 errors, but not more than 10 times in general.

A thoughtful approach to the number of retries improves the resilience of your scraping process. The idea is to find a balance between robust error recovery and efficiency.
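
Since HttpClient doesn't throw on error status codes, a loop that only catches exceptions never retries a 429 or 503 response. The sketch below shows one way to cover that case. `SendWithStatusRetryAsync` and its parameters are hypothetical names, and the rule "retry on 429 and 5xx" is an assumption rather than a universal policy. The request is passed in as a function so the helper works with any HttpClient call.

```csharp
using System;
using System.Net.Http;
using System.Threading.Tasks;

// Hypothetical helper: repeats the request while the response status is 429 or 5xx.
// "send" performs the request, e.g. () => client.GetAsync(url).
static async Task<HttpResponseMessage> SendWithStatusRetryAsync(
    Func<Task<HttpResponseMessage>> send,
    int maxRetryAttempts = 5,
    int delaySeconds = 30)
{
    for (int attempt = 1; ; attempt++)
    {
        HttpResponseMessage response = await send();
        int status = (int)response.StatusCode;
        bool retryable = status == 429 || status >= 500;

        // return on success, on non-retryable errors, or when out of attempts
        if (!retryable || attempt >= maxRetryAttempts)
        {
            return response;
        }

        // release the failed response, then wait before the next attempt
        response.Dispose();
        await Task.Delay(TimeSpan.FromSeconds(delaySeconds));
    }
}

// Possible usage:
// var response = await SendWithStatusRetryAsync(() => httpClient.GetAsync(url));
```

Note that this version returns the last response instead of throwing, so the caller can still inspect the final status code.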

Delay

Introducing a delay between retry attempts is critical to avoid the same error twice. Time spent without doing anything allows the server or network to recover. A delay strategy also helps avoid triggering rate-limiting measures.

Use Task.Delay() in vanilla C# to stop the execution for a specified time span:

await Task.Delay(TimeSpan.FromMinutes(2)); // waiting for 2 minutes

Finding the right time to wait is tricky, as it changes from scenario to scenario. Waiting too little leads to frequent errors, while waiting too long hurts performance.

To define the right delay, follow the indications of the RateLimit headers you can find in the server responses, if any.
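
One header that servers often send along with 429 or 503 responses is the standard Retry-After header, which HttpClient exposes through `response.Headers.RetryAfter`. The sketch below reads it and falls back to a default delay when it's missing. `GetRetryDelay` is a hypothetical helper, and whether your target server sends this header at all is an assumption to verify.

```csharp
using System;
using System.Net;
using System.Net.Http;
using System.Net.Http.Headers;

// Hypothetical helper: picks the wait time suggested by the Retry-After header,
// or the given fallback when the header is absent.
static TimeSpan GetRetryDelay(HttpResponseMessage response, TimeSpan fallback)
{
    var retryAfter = response.Headers.RetryAfter;

    // Retry-After expressed as a number of seconds
    if (retryAfter?.Delta is TimeSpan delta)
    {
        return delta;
    }

    // Retry-After expressed as an absolute date
    if (retryAfter?.Date is DateTimeOffset date)
    {
        TimeSpan wait = date - DateTimeOffset.UtcNow;
        return wait > TimeSpan.Zero ? wait : TimeSpan.Zero;
    }

    return fallback;
}

// simulate a throttled response to try the helper without a network call
var throttled = new HttpResponseMessage(HttpStatusCode.TooManyRequests);
throttled.Headers.RetryAfter = new RetryConditionHeaderValue(TimeSpan.FromSeconds(12));
Console.WriteLine(GetRetryDelay(throttled, TimeSpan.FromSeconds(30))); // 00:00:12
```

You could then pass the returned value to Task.Delay() before repeating the request.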

Backoff Strategy

The idea behind exponential backoff is to start with a short delay and gradually increase it between retries. This delay strategy gives time for transient problems to resolve themselves. An intuitive way to achieve that involves raising the base delay to the power of the retry counter.

Take a look at the following exponential backoff retry method in C#:

async Task<HttpResponseMessage> RequestWithBackoffRetry(HttpClient client, string url, int maxRetryAttempts = 5, int delay = 3)
{
    // repeat the request up to maxRetryAttempts times
    for (int attempt = 1; attempt <= maxRetryAttempts; attempt++)
    {
        try
        {
            // try to make the request
            return await client.GetAsync(url);
        }
        catch (HttpRequestException e)
        {
            // exponential backoff waiting retry logic
            await Task.Delay(TimeSpan.FromSeconds(Math.Pow(delay, attempt)));
        }
    }
    throw new Exception($"Request to '{url}' failed after {maxRetryAttempts} attempts!");
}

Consider the following example to better understand how the exponential backoff logic works. Suppose the request to the target page succeeds only on the fourth attempt. Here's how the delay would evolve:

- Attempt 1: Request fails. Wait for 3 seconds.

- Attempt 2: Retry attempt fails. Wait for 9 seconds.

- Attempt 3: Retry attempt fails again. Wait for 27 seconds.

- Attempt 4: Request succeeds.
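
The delays in the walkthrough above can be checked with a few lines of code. The sketch below computes the wait for each attempt with the same Math.Pow(delay, attempt) formula and, as an addition that is not part of the original method, caps it at a maximum so the wait doesn't grow without bound. `BackoffDelay` and the 300-second cap are hypothetical choices.

```csharp
using System;

// Hypothetical helper: baseSeconds^attempt, capped at maxSeconds (assumed cap).
static TimeSpan BackoffDelay(int baseSeconds, int attempt, int maxSeconds = 300)
{
    double seconds = Math.Pow(baseSeconds, attempt);
    return TimeSpan.FromSeconds(Math.Min(seconds, maxSeconds));
}

// print the delay schedule for a 3-second base
for (int n = 1; n <= 6; n++)
{
    Console.WriteLine($"Attempt {n}: wait {BackoffDelay(3, n).TotalSeconds} seconds");
}
// waits: 3, 9, 27, 81, 243, 300 (the sixth value, 729, hits the cap)
```

A cap like this keeps late retries from stalling the scraper for many minutes when the base or the number of attempts is large.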

As learned here, building C# retry logic isn't complex but does involve boilerplate code. Let's see how to avoid that with the .NET package Polly!

How to Retry C# Requests with Polly

Polly is a .NET resiliency library to build effective retry strategies with just a few lines of code. This tutorial section will show how to configure it to achieve both simple and complex retry behavior.

Dig into the C# Polly retry library!

1. Retry on Error with Polly

Assume you want to implement C# retry logic with up to 7 retries on 429 errors, with a 5-second delay before each.

It all boils down to initializing a retry policy object as below. The HandleResult() method specifies the retry condition, while WaitAndRetryAsync() sets the number of retries and the delay. After defining your Polly retry policy, call ExecuteAsync() to apply it:

using (var httpClient = new HttpClient())
{
    // define the retry policy with delay in Polly
    var policyWithDelay = Policy
        .HandleResult<HttpResponseMessage>(r => r.StatusCode == HttpStatusCode.TooManyRequests) // retry on 429 errors
        .WaitAndRetryAsync(
            7, // up to 7 retries
            retryAttempt => TimeSpan.FromSeconds(5) // 5-second delay before each retry
        );
    // make the request with the specified retry policy
    var response = await policyWithDelay.ExecuteAsync(() => httpClient.GetAsync("https://www.scrapingcourse.com/ecommerce/"));
}

If you're using Polly 8 instead, you can build the equivalent retry logic with the resilience pipeline API:

using (var httpClient = new HttpClient())
{
    // define the retry policy with delay in Polly
    var pipelineOptions = new RetryStrategyOptions<HttpResponseMessage>
    {
        ShouldHandle = new PredicateBuilder<HttpResponseMessage>()
            .HandleResult(r => r.StatusCode == HttpStatusCode.TooManyRequests), // retry on 429 errors
        MaxRetryAttempts = 7, // up to 7 attempts
        Delay = TimeSpan.FromSeconds(5) // 5-second delay
    };
    var pipeline = new ResiliencePipelineBuilder<HttpResponseMessage>()
        .AddRetry(pipelineOptions) // add retry logic with custom options
        .Build(); // build the resilience pipeline
    // make the request with the specified retry policy
    var response = await pipeline.ExecuteAsync(async token => await httpClient.GetAsync("https://www.scrapingcourse.com/ecommerce/"));
}

Awesome! This retry C# approach is easy to read and maintain!

2. Implement an Exponential Backoff Strategy with Polly in C#

An exponential backoff delay strategy means progressively increasing the time interval between retry attempts. To achieve that behavior, all you have to do is change the retry attempt logic as below:

var policyWithBackoffDelay = Policy
    .HandleResult<HttpResponseMessage>(r => r.StatusCode == HttpStatusCode.TooManyRequests) // retry on 429 errors
    .WaitAndRetryAsync(
        7, // up to 7 retries
        retryAttempt => TimeSpan.FromSeconds(Math.Pow(5, retryAttempt)) // 5-second-based backoff exponential waiting logic
    );
// apply the backoff policy (not the fixed-delay one) to the request
var response = await policyWithBackoffDelay.ExecuteAsync(() => httpClient.GetAsync("https://www.scrapingcourse.com/ecommerce/"));

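The delay now grows exponentially: 5 seconds before the first retry, 25 before the second, 125 before the third, and so on. With 7 retries, the last wait would be 78,125 seconds (almost 22 hours), so consider a smaller base for real-world use.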

If you're using Polly 8, you'll have access to the BackoffType setting. Set it to DelayBackoffType.Exponential to specify backoff delay behavior:

var pipelineOptions = new RetryStrategyOptions<HttpResponseMessage>
{
    ShouldHandle = new PredicateBuilder<HttpResponseMessage>()
        .HandleResult(r => r.StatusCode == HttpStatusCode.TooManyRequests), // retry on 429 errors
    MaxRetryAttempts = 7, // up to 7 attempts
    Delay = TimeSpan.FromSeconds(5), // 5-second delay
    BackoffType = DelayBackoffType.Exponential // enable exponential backoff logic
};
var pipeline = new ResiliencePipelineBuilder<HttpResponseMessage>()
    .AddRetry(pipelineOptions)
    .Build();
var response = await pipeline.ExecuteAsync(async token => await httpClient.GetAsync("https://www.scrapingcourse.com/ecommerce/"));

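The above snippet produces similar behavior, with one difference: Polly 8's exponential backoff doubles the base delay at each retry (5, 10, 20 seconds, and so on) instead of raising it to the power of the retry number.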

Fantastic! Implementing exponential backoff retry logic in C# has never been easier!
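
To get a feel for how fast these delays grow, you can print the schedules side by side. The sketch below compares the Math.Pow(5, retryAttempt) lambda from the Polly 7 snippet with a doubling schedule. The doubling formula reflects my understanding of how DelayBackoffType.Exponential behaves in Polly 8 when jitter is off, so treat it as an assumption; both function names are hypothetical.

```csharp
using System;

// Delay used by the Polly 7 lambda in this section: 5^retryAttempt seconds
static double PowerDelaySeconds(int retryAttempt) => Math.Pow(5, retryAttempt);

// Assumed Polly 8 exponential schedule without jitter: 5 * 2^(retryAttempt - 1) seconds
static double DoublingDelaySeconds(int retryAttempt) => 5 * Math.Pow(2, retryAttempt - 1);

for (int retry = 1; retry <= 7; retry++)
{
    Console.WriteLine($"Retry {retry}: {PowerDelaySeconds(retry)}s vs {DoublingDelaySeconds(retry)}s");
}
// power schedule:    5, 25, 125, 625, 3125, 15625, 78125
// doubling schedule: 5, 10, 20, 40, 80, 160, 320
```

The comparison suggests that a power-of-5 schedule is only practical for a handful of retries, while a doubling schedule stays within minutes.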

3. Learn the Polly Options

The retry policy in Polly 8 offers the following options:

| Property | Default value | Description |
|---|---|---|
| ShouldHandle | A Predicate object that handles all exceptions except OperationCanceledException. | A Predicate object that determines what results and exceptions should be handled by the retry strategy. |
| MaxRetryAttempts | 3 | The maximum number of retries to perform in addition to the original call. |
| Delay | 2 seconds | The delay to apply between retries. |
| BackoffType | DelayBackoffType.Constant | A DelayBackoffType value that represents the backoff type to use to generate the retry delay. |
| UseJitter | False | Whether to add random delays to retries. |
| DelayGenerator | null | It accepts a function that specifies custom delay strategies for retries. |
| OnRetry | null | It accepts a function that defines the action to execute when a retry occurs. |
| MaxDelay | null | It caps the calculated retry delay to a specified maximum duration. |
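
Several of the options above can be combined in a single configuration. The fragment below is a sketch that assumes a recent Polly 8 release (MaxDelay may not be available in the earliest 8.x versions). The chosen values, such as 5 retries and a 1-minute cap, are arbitrary examples.

```csharp
using System;
using System.Net;
using System.Net.Http;
using Polly;
using Polly.Retry;

var options = new RetryStrategyOptions<HttpResponseMessage>
{
    // retry on network errors and on 429 responses
    ShouldHandle = new PredicateBuilder<HttpResponseMessage>()
        .Handle<HttpRequestException>()
        .HandleResult(r => r.StatusCode == HttpStatusCode.TooManyRequests),
    MaxRetryAttempts = 5,                       // retries in addition to the original call
    Delay = TimeSpan.FromSeconds(2),            // base delay
    BackoffType = DelayBackoffType.Exponential, // grow the delay at each retry
    UseJitter = true,                           // randomize delays to avoid synchronized retries
    MaxDelay = TimeSpan.FromMinutes(1),         // never wait longer than this
    OnRetry = args =>
    {
        // log each retry; AttemptNumber and RetryDelay come from Polly's OnRetryArguments
        Console.WriteLine($"Retry attempt {args.AttemptNumber}, waiting {args.RetryDelay.TotalSeconds:F1}s");
        return default;
    }
};

var pipeline = new ResiliencePipelineBuilder<HttpResponseMessage>()
    .AddRetry(options)
    .Build();
```

The resulting pipeline can then be used with ExecuteAsync() exactly as in the earlier examples.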

For more information, check out the docs. Congrats! You're now a C# Polly retry expert!

Avoid Getting Blocked with Vanilla C#

The biggest challenge when making web requests in C# is getting blocked by anti-bots. These technologies can recognize your requests as coming from an automated script and block them. This means that your retry attempts will fail all the time.

That's a huge problem!

For example, try to extract the source HTML from a G2 review page:

class Program
{
    static async Task Main(string[] args)
    {
        using (var httpClient = new HttpClient())
        {
            var response = await httpClient.GetAsync("https://www.g2.com/products/notion/reviews");
            // extract the source HTML from the page
            string html = await response.Content.ReadAsStringAsync();
            Console.WriteLine($"Status code: {response.StatusCode}");
            Console.WriteLine(html);
        }
    }
}

Execute the above script, and it'll log:

Status code: Forbidden
<!DOCTYPE html>
<!--[if lt IE 7]> <html class="no-js ie6 oldie" lang="en-US"> <![endif]-->
<!--[if IE 7]> <html class="no-js ie7 oldie" lang="en-US"> <![endif]-->
<!--[if IE 8]> <html class="no-js ie8 oldie" lang="en-US"> <![endif]-->
<!--[if gt IE 8]><!--> <html class="no-js" lang="en-US"> <!--<![endif]-->
<head>
<title>Attention Required! | Cloudflare</title>
<!-- omitted for brevity -->

The G2 server responded to your request with a 403 Forbidden error page.

A web scraping proxy service is the go-to solution to avoid these problems on most sites. At the same time, proxies aren't enough for pages protected by WAF systems like Cloudflare.

So, how to avoid getting blocked?

With ZenRows! This powerful API provides an AI-based anti-bot toolkit that will save you from blocks and IP bans. It will change User-Agent and exit IP via residential proxies at each request for you.

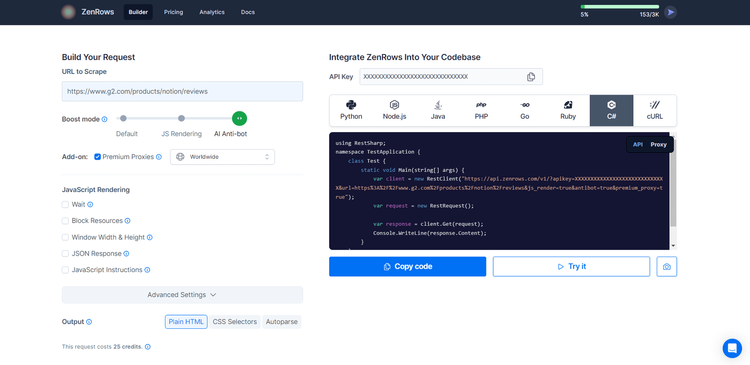

To move your first steps with ZenRows, sign up for free. You'll get redirected to the Request Builder page below:

Next, follow the steps below:

- Paste your target URL (https://www.g2.com/products/notion/reviews) in the "URL to Scrape" input.

- Select the "AI Anti-bot" option for maximum effectiveness.

- Enable the rotating IPs by clicking the "Premium Proxy" check. (Note that User-Agent rotation is included by default).

- Select "C#" on the right side of the screen and then "API". (Select "cURL" to get the raw API endpoint you can call with any HTTP client in your favorite programming language).

- Copy the generated link and paste it into your C# script:

// dotnet add package RestSharp
using RestSharp;

namespace TestApplication {
    class Test {
        static void Main(string[] args) {
            var client = new RestClient("https://api.zenrows.com/v1/?apikey=<YOUR_ZENROWS_API_KEY>&url=https%3A%2F%2Fwww.g2.com%2Fproducts%2Fnotion%2Freviews&js_render=true&antibot=true&premium_proxy=true");
            var request = new RestRequest();
            var response = client.Get(request);
            Console.WriteLine(response.Content);
        }
    }
}

Install RestSharp and launch the script. It'll print the source HTML of the G2 page:

<!DOCTYPE html>
<head>
<meta charset="utf-8" />
<link href="https://www.g2.com/assets/favicon-fdacc4208a68e8ae57a80bf869d155829f2400fa7dd128b9c9e60f07795c4915.ico" rel="shortcut icon" type="image/x-icon" />
<title>Notion Reviews 2023: Details, Pricing, & Features | G2</title>
<!-- omitted for brevity ... -->

Wow! You just integrated ZenRows into a C# web scraping script.

Now, what if your request fails? ZenRows will not charge for it and will automatically retry it for you.

Conclusion

In this C# retry tutorial, you saw the basics of retry logic applied to failed requests. You started with the fundamentals and explored advanced techniques like exponential backoff. You're now a C# Polly retry expert.

Now you know:

- When and why you should apply retry logic on failed requests in C#.

- How to implement basic C# Polly retry logic.

- How to use Polly to achieve exponential backoff behavior.

- What the Polly library has to offer, through some example snippets.

Retry logic is not enough when dealing with anti-bots, as those measures will keep blocking your requests no matter how many times you retry. Avoid that with ZenRows, a web scraping API with IP rotation, an unstoppable anti-scraping bypass toolkit, and built-in retry logic. Making successful requests has never been easier. Try ZenRows for free!

Frequent Questions

What Is Retry in C#?

Retry in C# refers to building a mechanism that automatically reattempts a task upon failure based on specified conditions. That improves the resilience of your code against temporary issues or errors. The best .NET library for implementing that behavior is Polly.

How to Retry a Task in C#?

To retry a task in C#, specify the conditions for retries and how many attempts to make. For example, you should retry the operation only on a specific exception and for a given number of times.
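
As a rough illustration of that idea, the sketch below wraps any asynchronous task in a loop that retries only when a caller-supplied condition matches the exception. `RetryTaskAsync` and its parameters are hypothetical names, and the helper retries immediately without any delay to keep the example short.

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical helper: runs "action" up to maxAttempts times, retrying only
// when shouldRetry returns true for the thrown exception.
static async Task<T> RetryTaskAsync<T>(
    Func<Task<T>> action,
    int maxAttempts,
    Func<Exception, bool> shouldRetry)
{
    for (int attempt = 1; ; attempt++)
    {
        try
        {
            return await action();
        }
        catch (Exception ex) when (attempt < maxAttempts && shouldRetry(ex))
        {
            // the exception matched the retry condition: loop and try again
        }
    }
}

// Possible usage: retry only on network errors, up to 3 attempts
// string html = await RetryTaskAsync(
//     () => httpClient.GetStringAsync(url), 3, ex => ex is System.Net.Http.HttpRequestException);
```

When the attempts run out or the exception doesn't match, the exception filter is false and the original exception propagates to the caller.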

How to Retry a Request in C#?

To retry a request in C#, embed it in a function that repeats it on network errors or specific HTTP status codes. Make sure to define the number of retry attempts and the delay between retries. Otherwise, use the C# Polly retry library to create effective retry policies with a few lines of code.

How to Add Polly in C#?

To add Polly to your C# project's dependencies, launch the command below:

dotnet add package Polly

Then, import the package in your code with:

using Polly;
using Polly.Retry;

To install Polly 7 instead, use this command:

dotnet add package Polly --version 7

Next, import it with:

using Polly;

How to Use Polly in C#?

To use Polly in C#, you must first install and import it into your C# script. You can then use it to define a C# retry policy and apply it to a task to handle transient errors. The program will run the task again when particular errors occur.

What Is the Retry Method in Polly?

The RetryAsync() method in Polly 7 defines a policy that retries a failed operation immediately for a given number of times, while WaitAndRetryAsync() also adds a delay between retries. Both let you specify retry conditions, such as specific exceptions or results. In Polly 8, the equivalent is the AddRetry() method of the resilience pipeline builder.
