Are you getting blocked while web scraping in Java? One key factor contributing to this issue is the User-Agent header. The website may block your requests if your Java HttpClient user agent identifies you as a bot.

In this guide, you'll learn how to set a custom User-Agent header in HttpClient to avoid detection. Let's dive in.

What Is the HttpClient User Agent?

HTTP headers convey essential information between the web client and the target server. For web scraping, the User-Agent header is one of the most important, as it reveals details about the client making the request.

A typical User-Agent (UA) string consists of various components, including the browser name, version, operating system, and sometimes additional details like device type. For instance, below is a Google Chrome UA string.

Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/92.0.4515.159 Safari/537.36

It tells the web server that the request comes from a Chrome browser with version 92.0.4515.159, running on Windows 10, among other details.
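To make those components concrete, here is a minimal sketch that pulls the platform details and the Chrome version out of the sample string with a regular expression. The `UserAgentParts` class and its `parse` method are hypothetical names used only for illustration, and the pattern assumes a Chrome-style UA.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper for illustration; it is not part of HttpClient.
class UserAgentParts {

    // capture the platform details inside the first parentheses and the Chrome version token
    private static final Pattern CHROME_UA =
            Pattern.compile("^Mozilla/5\\.0 \\(([^)]*)\\).*Chrome/([\\d.]+)");

    // returns {platform, chromeVersion}, or null if the string is not a Chrome-style UA
    static String[] parse(String userAgent) {
        Matcher m = CHROME_UA.matcher(userAgent);
        return m.find() ? new String[] { m.group(1), m.group(2) } : null;
    }

    public static void main(String[] args) {
        String ua = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
                + "(KHTML, like Gecko) Chrome/92.0.4515.159 Safari/537.36";
        String[] parts = parse(ua);
        System.out.println("Platform: " + parts[0]);
        System.out.println("Chrome version: " + parts[1]);
    }
}
```

A server can run a similar check in the other direction, which is why a string without these parts stands out.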

On the other hand, your Java HttpClient user agent informs the server that you're not requesting from an actual browser, as it typically looks like this.

Java-http-client/17.0.10

You can see yours by making a basic request to httpbin.io/user-agent.
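Before making that request, you can estimate what the default will look like. The sketch below assumes the built-in client derives its default value from the `java.version` system property; that is an implementation detail rather than a documented guarantee, so treat the output as a guess and confirm it against httpbin.io/user-agent. The `DefaultUserAgent` class name is hypothetical.

```java
// Hypothetical helper for illustration.
class DefaultUserAgent {

    // Assumption: the default UA is "Java-http-client/" followed by the running JDK version.
    static String expected() {
        return "Java-http-client/" + System.getProperty("java.version");
    }

    public static void main(String[] args) {
        System.out.println(expected());
    }
}
```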

From the examples of User-Agent strings above, it's clear how easily websites can differentiate between Java HttpClient requests and those from an actual browser. This is why setting a custom user agent is essential to avoid detection.

How to Set a Custom User Agent in HttpClient in Java

Follow the steps below to set up a custom Java HttpClient user agent.

1. Getting Started

Create your Java project to kickstart your journey to a custom HttpClient user agent. The HttpClient class (java.net.http.HttpClient) has been part of the standard Java Development Kit (JDK) since Java 11, so you don't need to install anything separately or include external dependencies.

Once you have everything set up, you're ready to write your code. Below is a basic script that makes a GET request to https://httpbin.io/user-agent and retrieves its text content.

package com.example;

// import the required classes
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.net.http.HttpResponse.BodyHandlers;

public class Main {
    public static void main(String[] args) {
        // create an instance of HttpClient
        HttpClient client = HttpClient.newHttpClient();

        // build request using the Request Builder
        HttpRequest request = HttpRequest.newBuilder()
                .uri(URI.create("https://httpbin.io/user-agent"))
                .build();

        // send request asynchronously and print response to the console
        client.sendAsync(request, BodyHandlers.ofString())
                .thenApply(HttpResponse::body)
                .thenAccept(System.out::println)
                .join();
    }
}

2. Customize UA

The HttpRequest.Builder class allows you to set HTTP headers using the header() method. This method takes two parameters: the header's name and value. In this case, you would pass "User-Agent" as the header name and your desired UA string as the header value, as in the code snippet below.

// create an HttpRequest instance
HttpRequest request = HttpRequest.newBuilder()
        .uri(URI.create("https://httpbin.io/user-agent"))
        // set custom user agent header
        .header("User-Agent", "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36")
        .build(); // build request

This code replaces the default user agent with a Google Chrome UA similar to the sample you saw earlier (here, Chrome 109).
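One detail worth knowing: `header()` adds a value to the header's list each time it is called, while `setHeader()` on the same builder overwrites any values set before. The sketch below builds two requests without sending them and inspects their headers; the class name and the `placeholder-agent/1.0` value are made up for illustration.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.util.List;

// Illustrative comparison; no network request is sent.
class HeaderVsSetHeader {

    static final String CHROME_UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
            + "(KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36";

    // header() appends: calling it twice leaves two User-Agent values on the request
    static List<String> withHeaderTwice() {
        HttpRequest request = HttpRequest.newBuilder()
                .uri(URI.create("https://httpbin.io/user-agent"))
                .header("User-Agent", "placeholder-agent/1.0")
                .header("User-Agent", CHROME_UA)
                .build();
        return request.headers().allValues("User-Agent");
    }

    // setHeader() overwrites: only the last value remains
    static List<String> withSetHeader() {
        HttpRequest request = HttpRequest.newBuilder()
                .uri(URI.create("https://httpbin.io/user-agent"))
                .header("User-Agent", "placeholder-agent/1.0")
                .setHeader("User-Agent", CHROME_UA)
                .build();
        return request.headers().allValues("User-Agent");
    }

    public static void main(String[] args) {
        System.out.println("header() twice: " + withHeaderTwice().size() + " value(s)");
        System.out.println("setHeader():    " + withSetHeader().size() + " value(s)");
    }
}
```

If you build requests from a shared builder or helper, `setHeader()` is the safer choice for making sure only one User-Agent value is sent.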

Now, set a custom User Agent in the HTTP request script in step 1, and you'll have the following complete code.

package com.example;

// import the required classes
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.net.http.HttpResponse.BodyHandlers;

public class Main {
    public static void main(String[] args) {
        // create an instance of HttpClient
        HttpClient client = HttpClient.newHttpClient();

        // build request using the Request Builder
        HttpRequest request = HttpRequest.newBuilder()
                .uri(URI.create("https://httpbin.io/user-agent"))
                // set the User-Agent header
                .header("User-Agent", "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36")
                .build();

        // send request asynchronously and print response to the console
        client.sendAsync(request, BodyHandlers.ofString())
                .thenApply(HttpResponse::body)
                .thenAccept(System.out::println)
                .join();
    }
}

Run the code, and your response should be the Google Chrome UA.

{
    "user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36"
}

Congrats, you've changed your Java HttpClient user agent to that of a Chrome browser.

However, if you send every request with the same user agent, websites can eventually identify your scraper and block it. Rotating user agents can help you avoid detection in some scenarios.

3. Use a Random User Agent with HttpClient

Websites may detect patterns in multiple requests from the same user agent and interpret them as automated traffic. You can overcome this issue by varying the User-Agent header per request.

To rotate User-Agent headers, maintain a list of different User-Agent strings and randomly select one for each HTTP request.

Here's how you can modify your previous code to achieve this.

Start by creating an array or list containing all the user agent strings you want to use. Ensure you import the required Java classes (List and Random). For this example, we've selected a few UAs from this list of web-scraping user agents.

package com.example;

// import the required classes
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.net.http.HttpResponse.BodyHandlers;
import java.util.List;
import java.util.Random;

public class Main {
    public static void main(String[] args) {
        // define a list of User-Agent strings
        List<String> userAgents = List.of(
            "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36",
            "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36",
            "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36"
            // add more User-Agent strings as needed
        );
    }
}

After that, randomly select a User-Agent string from the list. To do this, create a random number generator and use it to choose a UA string from the list.

public class Main {
    public static void main(String[] args) {
        //...

        // randomly select UA from the list
        Random random = new Random();
        String randomUserAgent = userAgents.get(random.nextInt(userAgents.size()));
    }
}

Lastly, set the selected User-Agent string as the value of the "User-Agent" header in the HTTP request and send the request.

public class Main {
    public static void main(String[] args) {
        //...

        // create an instance of HttpClient
        HttpClient client = HttpClient.newHttpClient();

        // build an HTTP request with a randomly selected User-Agent header
        HttpRequest request = HttpRequest.newBuilder()
                .uri(URI.create("https://httpbin.io/user-agent"))
                .header("User-Agent", randomUserAgent) // set a random User-Agent header
                .build();

        // send request asynchronously and print response to the console
        client.sendAsync(request, BodyHandlers.ofString())
                .thenApply(HttpResponse::body)
                .thenAccept(System.out::println)
                .join();
    }
}

Putting everything together, you'll have the following complete code.

package com.example;

// import the required classes
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.net.http.HttpResponse.BodyHandlers;
import java.util.List;
import java.util.Random;

public class Main {
    public static void main(String[] args) {
        // define a list of User-Agent strings
        List<String> userAgents = List.of(
            "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36",
            "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36",
            "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36"
            // add more User-Agent strings as needed
        );

        // randomly select UA from the list
        Random random = new Random();
        String randomUserAgent = userAgents.get(random.nextInt(userAgents.size()));

        // create an instance of HttpClient
        HttpClient client = HttpClient.newHttpClient();

        // build an HTTP request with a randomly selected User-Agent header
        HttpRequest request = HttpRequest.newBuilder()
                .uri(URI.create("https://httpbin.io/user-agent"))
                .header("User-Agent", randomUserAgent) // set a random User-Agent header
                .build();

        // send request asynchronously and print response to the console
        client.sendAsync(request, BodyHandlers.ofString())
                .thenApply(HttpResponse::body)
                .thenAccept(System.out::println)
                .join();
    }
}

Each time you run the script, it picks a UA at random from the list, so consecutive runs can use different UAs (and will occasionally repeat one). For example, here are our results for three requests:

{
    "user-agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36"
}

{
    "user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36"
}

{
    "user-agent": "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36"
}

Bingo! You've successfully rotated HttpClient user agents.
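The script above picks one UA per run. If a single run sends several requests, the selection has to happen each time a request is built. Here is a minimal sketch of that idea; the `PerRequestRotation` class and `buildRequest` method are hypothetical names, and the loop only builds requests and prints their headers rather than sending them.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.util.List;
import java.util.Random;

// Illustrative sketch: choose a UA every time a request is built, not once per run.
class PerRequestRotation {

    static final List<String> USER_AGENTS = List.of(
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36",
        "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36",
        "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36"
    );

    private static final Random RANDOM = new Random();

    // build a request for the given URL with a freshly selected User-Agent
    static HttpRequest buildRequest(String url) {
        String userAgent = USER_AGENTS.get(RANDOM.nextInt(USER_AGENTS.size()));
        return HttpRequest.newBuilder()
                .uri(URI.create(url))
                .header("User-Agent", userAgent)
                .build();
    }

    public static void main(String[] args) {
        // each iteration may carry a different UA; repeats are possible with random selection
        for (int i = 0; i < 5; i++) {
            HttpRequest request = buildRequest("https://httpbin.io/user-agent");
            System.out.println(request.headers().firstValue("User-Agent").orElseThrow());
        }
    }
}
```

To send the requests, pass each one to the same `client.sendAsync(...)` call used in the earlier scripts.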

You'll need to expand your list in real-world use cases, so paying attention to your UA construction is essential. A properly constructed UA can help ensure smooth communication between the client and the server, while an incorrectly formatted or suspicious UA might trigger anti-bot measures.

For example, if the User Agent suggests a specific browser version that doesn't exist or is outdated, websites can easily detect the discrepancy and block your scraper.
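One way to guard against stale entries is to filter the pool by browser version before using it. The sketch below is only an illustration: the `UserAgentFreshness` class, its methods, and the threshold of 100 are all assumptions, and it only understands Chrome-style strings.

```java
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;
import java.util.stream.Collectors;

// Hypothetical helper for illustration.
class UserAgentFreshness {

    private static final Pattern CHROME_MAJOR = Pattern.compile("Chrome/(\\d+)\\.");

    // returns the Chrome major version, or -1 if the string has no Chrome token
    static int chromeMajor(String userAgent) {
        Matcher m = CHROME_MAJOR.matcher(userAgent);
        return m.find() ? Integer.parseInt(m.group(1)) : -1;
    }

    // keep only UAs whose Chrome major version is at least minMajor (a threshold you choose)
    static List<String> keepRecent(List<String> userAgents, int minMajor) {
        return userAgents.stream()
                .filter(ua -> chromeMajor(ua) >= minMajor)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<String> pool = List.of(
            "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.163 Safari/537.36",
            "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36"
        );
        // with a threshold of 100, only the Chrome 109 entry is printed
        keepRecent(pool, 100).forEach(System.out::println);
    }
}
```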

Also, your UA string must match other HTTP headers. If the User-Agent string identifies the client as a particular browser and version, but other HTTP headers suggest different characteristics or behaviors, it could signal irregularities in the request.
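As a rough sketch of what "matching" can mean, the request below pairs a Chrome 109 on Windows UA with client-hint and language headers that tell the same story. The header values are illustrative assumptions, not a capture from a real browser, and the `ConsistentHeaders` class is a hypothetical name. The request is built but not sent.

```java
import java.net.URI;
import java.net.http.HttpRequest;

// Illustrative sketch: keep companion headers in line with the User-Agent.
class ConsistentHeaders {

    static HttpRequest chromeOnWindows(String url) {
        return HttpRequest.newBuilder()
                .uri(URI.create(url))
                .header("User-Agent", "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
                        + "(KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36")
                // assumed values: brand list and version should agree with the UA's Chrome/109
                .header("Sec-CH-UA", "\"Not_A Brand\";v=\"99\", \"Google Chrome\";v=\"109\", \"Chromium\";v=\"109\"")
                .header("Sec-CH-UA-Mobile", "?0")
                // platform should agree with the "Windows NT 10.0" part of the UA
                .header("Sec-CH-UA-Platform", "\"Windows\"")
                .header("Accept-Language", "en-US,en;q=0.9")
                .build();
    }

    public static void main(String[] args) {
        HttpRequest request = chromeOnWindows("https://httpbin.io/user-agent");
        // print every header name and its values
        request.headers().map().forEach((name, values) -> System.out.println(name + ": " + values));
    }
}
```

When you rotate UAs, rotate these companion headers together with them so each request stays internally consistent.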

Maintaining a diverse, well-formed, and up-to-date pool of User Agents can be challenging. The following section provides an easier solution.

Avoid Getting Blocked With HttpClient in Java

Websites continuously evolve their anti-bot techniques, so you can still get blocked even when rotating properly formed user agents.

A popular complementary technique is using web scraping residential proxies to hide your IP address and also distribute traffic across multiple IPs. While this can help you avoid IP-based blocking and a few other challenges, it's also not enough.
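For reference, routing HttpClient through a proxy is a small change to how the client is built. This is a minimal sketch: `proxy.example.com` and port `8080` are placeholders, not a real endpoint, the `ProxiedClient` class is a hypothetical name, and proxy authentication is not covered.

```java
import java.net.InetSocketAddress;
import java.net.ProxySelector;
import java.net.http.HttpClient;

// Illustrative sketch: build an HttpClient that routes requests through one proxy.
class ProxiedClient {

    static HttpClient build(String proxyHost, int proxyPort) {
        return HttpClient.newBuilder()
                // the address is left unresolved so that building the client performs no DNS lookup
                .proxy(ProxySelector.of(InetSocketAddress.createUnresolved(proxyHost, proxyPort)))
                .build();
    }

    public static void main(String[] args) {
        // placeholder host and port; replace them with your proxy's details
        HttpClient client = build("proxy.example.com", 8080);
        System.out.println("Proxy configured: " + client.proxy().isPresent());
    }
}
```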

Here's the previous user agent rotation script pointed at a protected web page (https://www.g2.com/products/visual-studio/reviews).

package com.example;

// import the required classes
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.net.http.HttpResponse.BodyHandlers;
import java.util.List;
import java.util.Random;

public class Main {
    public static void main(String[] args) {
        // define a list of User-Agent strings
        List<String> userAgents = List.of(
            "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.163 Safari/537.36",
            "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36",
            "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36"
            // add more User-Agent strings as needed
        );

        // randomly select UA from the list
        Random random = new Random();
        String randomUserAgent = userAgents.get(random.nextInt(userAgents.size()));

        // create an instance of HttpClient
        HttpClient client = HttpClient.newHttpClient();

        // build an HTTP request with a randomly selected User-Agent header
        HttpRequest request = HttpRequest.newBuilder()
                .uri(URI.create("https://www.g2.com/products/visual-studio/reviews"))
                .header("User-Agent", randomUserAgent) // set a random User-Agent header
                .build();

        // send request asynchronously and print response to the console
        client.sendAsync(request, BodyHandlers.ofString())
                .thenApply(HttpResponse::body)
                .thenAccept(System.out::println)
                .join();
    }
}

Below is the result:

<body>
    <!-- ... -->
    <div class="cf-wrapper cf-header cf-error-overview">
        <h1 data-translate="block_headline">Sorry, you have been blocked</h1>
        <h2 class="cf-subheadline"><span data-translate="unable_to_access">You are unable to access</span> g2.com</h2>
    </div><!-- /.header -->
    <!-- ... -->
</body>

This shows that changing user agents alone doesn't work against protected websites, which you'll run into in most real-world use cases.
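Since the script prints whatever comes back, a block page like this can pass for a successful scrape. A simple check on the response helps catch it. The sketch below is an assumption-laden illustration: the `BlockDetector` class is a hypothetical name, treating 403 and 429 as block signals is a guess about typical behavior, and the marker text is taken from the block page shown above.

```java
// Hypothetical helper for illustration.
class BlockDetector {

    // marker text copied from the block page in the result above
    private static final String BLOCK_MARKER = "Sorry, you have been blocked";

    // assumption: 403 and 429 commonly signal a refused or rate-limited request
    static boolean looksBlocked(int statusCode, String body) {
        return statusCode == 403 || statusCode == 429 || body.contains(BLOCK_MARKER);
    }

    public static void main(String[] args) {
        String sample = "<h1 data-translate=\"block_headline\">Sorry, you have been blocked</h1>";
        System.out.println("Blocked: " + looksBlocked(403, sample));
    }
}
```

With HttpClient, you would pass `response.statusCode()` and `response.body()` from an `HttpResponse<String>` to this check before parsing the page.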

Fortunately, you can complement Java HttpClient with ZenRows to handle these challenges automatically. ZenRows is a web scraping API that provides everything you need to scrape without getting blocked.

By handling user agent rotation, premium residential proxies, JavaScript rendering, CAPTCHA bypass, and more under the hood, ZenRows allows you to focus on extracting data rather than the technicalities of bypassing anti-bot techniques.

Let's try ZenRows against the same protected web page that blocked us earlier.

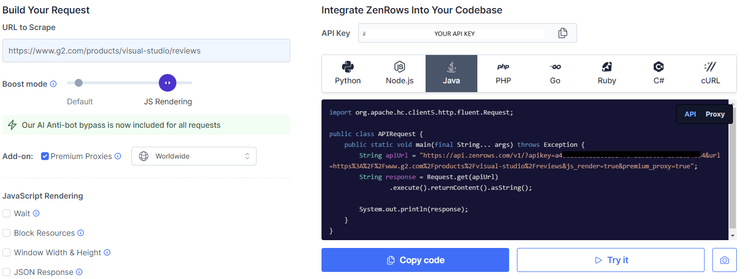

First, sign up for your free API key, and you'll be directed to the Request Builder page.

Paste your target URL (https://www.g2.com/products/visual-studio/reviews), check the box for Premium Proxies, and activate the JavaScript Rendering boost mode. Then, select Java as the language to get your request code generated on the right.

You'll see that Apache HttpClient Fluent API is suggested, but you can use Java HttpClient. You only need to send a request to the ZenRows API. For that, first copy the ZenRows API URL from the generated request.

Below is the ZenRows API URL, as seen in the screenshot.

https://api.zenrows.com/v1/?apikey=<YOUR_ZENROWS_API_KEY>&url=https%3A%2F%2Fwww.g2.com%2Fproducts%2Fvisual-studio%2Freviews&js_render=true&premium_proxy=true
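If you want to build that URL in code for other target pages, the target has to be percent-encoded before it goes into the `url` parameter. Here is a minimal sketch that reproduces the URL above; the `ZenRowsUrl` class and `build` method are hypothetical names, while the query parameters are the ones shown in the generated request.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

// Illustrative sketch: assemble the API URL with a percent-encoded target.
class ZenRowsUrl {

    static String build(String apiKey, String targetUrl) {
        return "https://api.zenrows.com/v1/?apikey=" + apiKey
                + "&url=" + URLEncoder.encode(targetUrl, StandardCharsets.UTF_8)
                + "&js_render=true&premium_proxy=true";
    }

    public static void main(String[] args) {
        // the API key is a placeholder; use your own
        System.out.println(build("<YOUR_ZENROWS_API_KEY>", "https://www.g2.com/products/visual-studio/reviews"));
    }
}
```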

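Make a request to it using Java HttpClient, replacing <YOUR_ZENROWS_API_KEY> with your actual key (URI.create() rejects the angle brackets in the placeholder). Your new script should look like this: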

package com.example;

// import the required classes
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.net.http.HttpResponse.BodyHandlers;

public class Main {
    public static void main(String[] args) {
        // create an instance of HttpClient
        HttpClient client = HttpClient.newHttpClient();

        // build request using the Request Builder
        HttpRequest request = HttpRequest.newBuilder()
                .uri(URI.create("https://api.zenrows.com/v1/?apikey=<YOUR_ZENROWS_API_KEY>&url=https%3A%2F%2Fwww.g2.com%2Fproducts%2Fvisual-studio%2Freviews&js_render=true&premium_proxy=true"))
                .build();

        // send request asynchronously and print response to the console
        client.sendAsync(request, BodyHandlers.ofString())
                .thenApply(HttpResponse::body)
                .thenAccept(System.out::println)
                .join();
    }
}

Run it, and you'll get the target page's HTML.

<!DOCTYPE html>
<head>
    <title>Visual Studio Reviews 2024: Details, Pricing, & Features | G2</title>
    <!--
    ...
    -->
</head>

Cool, right? ZenRows makes scraping any website easy.

Conclusion

The User Agent string is a critical component of the HTTP headers. Understanding and configuring the UA properly can help you mimic natural user behavior and ultimately avoid detection.

However, with websites constantly evolving their anti-bot techniques, you may require more than a properly crafted UA. Not to mention how challenging maintaining accurate and consistent UAs can be. To avoid the hassle of finding and configuring UAs, consider ZenRows.
