Web scraping is a technique for extracting data from websites, but it has become harder in recent years because many websites block bots.

Luckily, the low-code and no-code web scrapers below let you get data from any website regardless of your technical background:

| Solution | Best for | Price |
|---|---|---|
| ZenRows | Best overall | $49/month |
| Octoparse | Simple no-code web scraping | $75/month |
| ParseHub | Ecommerce scraping | $189/month |
| WebScraper.io | Scraping from a browser | $100/month |
| Data Miner | Low-code users | $49/month |
| Apify | Users with more technical knowledge | $49/month |
| Import.io | Data to analytics | $399/month |

Let's explore each of these tools in more detail.

1. ZenRows: Best No-Code Web Scraper

ZenRows is an intuitive web scraper that allows you to extract your desired data from any website without worrying about getting blocked. It offers more than 5,000 app integrations through Zapier, the most popular no-code integration platform, as well as Make, its low-code counterpart.

You can use both integrations without writing a single line of code. However, the Make integration offers greater customization options for more technical users.

How ZenRows Works

ZenRows' integration with Zapier and Make enables you to automate web scraping within a few clicks. We'll go through an example of monitoring your competitors' prices on various marketplaces.

More specifically, we'll scrape data from a product on Amazon and populate your spreadsheet with new prices weekly. That way, you can make strategic pricing decisions and stay ahead of the curve.

To follow along, first create a Zap, which is Zapier's word for "automation." To do that, sign up to create a free Zapier account. Then, in your Dashboard, click the Create Zap button in the top-left corner.

A Zap consists of a trigger (an event that starts your Zap) and one or more actions. In our case, the trigger is a time scheduler that runs every week, while the actions are scraping the website and then adding the data to Google Sheets.
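For readers who think in code, the trigger-plus-actions idea can be pictured as a small pipeline. This is only a conceptual sketch, not how Zapier is implemented: the `Zap` class, the `scrape` and `to_row` functions, and the sample data are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class Zap:
    """Toy model of an automation: one trigger label plus ordered actions."""

    trigger: str
    actions: list[Callable[[Any], Any]] = field(default_factory=list)

    def run(self, payload: Any = None) -> Any:
        # Each action receives the output of the previous action.
        for action in self.actions:
            payload = action(payload)
        return payload


def scrape(_: Any) -> dict:
    # Placeholder for the scraping step; the returned data is made up.
    return {"title": "Example product", "price": "19.99"}


def to_row(data: dict) -> list[str]:
    # Placeholder for the spreadsheet step: turn scraped data into a row.
    return [data["title"], data["price"]]


weekly_zap = Zap(trigger="every week", actions=[scrape, to_row])
result = weekly_zap.run()
print(result)
```

The point of the sketch is the ordering: the trigger decides when the chain starts, and each action works on what the previous one produced.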

To set up your trigger, click on the Trigger box and select Schedule by Zapier to schedule when the automation runs.

Next, from the event dropdown menu, choose how frequently you want this automation to occur (every week).

After that, choose the Day of the Week and Time of Day. Then, click Continue to confirm.
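If you are curious what a weekly schedule boils down to, the sketch below computes the next run time from a day of the week and a time of day. It is an illustration under simple assumptions (no time zones, and a hypothetical function name `next_weekly_run`), not a description of how Schedule by Zapier works internally.

```python
from datetime import datetime, time, timedelta


def next_weekly_run(now: datetime, weekday: int, at: time) -> datetime:
    """Return the first moment strictly after `now` on `weekday` at `at`.

    `weekday` follows datetime.weekday(): Monday is 0 and Sunday is 6.
    """
    days_ahead = (weekday - now.weekday()) % 7
    candidate = datetime.combine(now.date() + timedelta(days=days_ahead), at)
    if candidate <= now:
        # Today's slot has already passed, so wait a full week.
        candidate += timedelta(days=7)
    return candidate


# Example: it is Wednesday, January 3, 2024 at 10:00 and the schedule is Monday 09:00.
run_at = next_weekly_run(datetime(2024, 1, 3, 10, 0), weekday=0, at=time(9, 0))
print(run_at)
```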

Click the Action box to select the event you want to perform. Choose the ZenRows app and then the action Scraping a URL with Autoparse (the autoparse mode gives you the data you need directly).

After that, you must connect your ZenRows account. To do that, click Continue to navigate to the Account tab, and then click the Sign in button.

This opens a new Zapier window that requests your API key. The API key is like a special token or code that allows Zapier to communicate with ZenRows. Sign up on ZenRows and you'll get to the Request Builder page, where you'll see your free API key near the top-right of the screen. Next, copy your API key and paste it into Zapier.

Back in Zapier, click Continue to navigate to the Actions tab, where you'll define your action. Here, you only need to enter your target URL: https://www.amazon.com/dp/B01LD5GO7I/.

Next, test the action to confirm it works. This will automatically scrape Amazon and return the data to Zapier.
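Behind the scenes, an action like this amounts to an HTTP request that carries your API key and the target URL. The sketch below only builds such a request URL and does not send it. The endpoint and the parameter names (`apikey`, `url`, `autoparse`) are assumptions modeled on ZenRows' public API, so check the ZenRows documentation before relying on them; `build_autoparse_request` is a hypothetical helper.

```python
from urllib.parse import parse_qs, urlencode, urlsplit

# Assumed endpoint; verify it against the ZenRows documentation.
API_ENDPOINT = "https://api.zenrows.com/v1/"


def build_autoparse_request(api_key: str, target_url: str) -> str:
    """Build a request URL that asks for the target page with autoparse on."""
    # Parameter names are assumptions, not confirmed by this article.
    params = {"apikey": api_key, "url": target_url, "autoparse": "true"}
    # urlencode percent-encodes the target URL so it survives as one parameter.
    return f"{API_ENDPOINT}?{urlencode(params)}"


request_url = build_autoparse_request(
    "YOUR_API_KEY", "https://www.amazon.com/dp/B01LD5GO7I/"
)

# Decode the query string again to confirm the target URL round-trips intact.
decoded = parse_qs(urlsplit(request_url).query)
print(decoded["url"][0])
```

With Zapier you never see this URL, because the integration assembles and sends the request for you.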

Great! You're getting the data.

Now, let's add a second step: adding the scraped data to a spreadsheet. For that, click the + icon to add a new action, choose the Google Sheets app, and connect your Google account.

Now, select the event Create Spreadsheet Row.

After that, navigate to the Action tab.

Before continuing, you must set up the spreadsheet you want to populate. Just create it and add the headers for the data you want to analyze (you can use any names). Here's our setup for this example:

Next on Zapier, select the spreadsheet and map the headers to the corresponding data by clicking the dialogue box and selecting from the data provided by the first action (Scraping a URL with Autoparse).

To fill the date column with the date your Zap runs, add {{zap_meta_human_now}} in the dialogue box.

Now, you can test it. By doing so, Zapier will actually perform the action, which is populating your spreadsheet with the data you selected.
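Conceptually, the Create Spreadsheet Row step appends one dated row per run. The sketch below mimics that with a local CSV file standing in for Google Sheets. The column names, the `append_price_row` helper, and the keys of the scraped dictionary are all hypothetical; the real field names depend on what the scraping step returns.

```python
import csv
from datetime import date
from pathlib import Path

# Hypothetical column names; use whatever headers you put in your sheet.
HEADERS = ["date", "title", "price"]


def append_price_row(csv_path: Path, scraped: dict, run_date: date) -> None:
    """Append one row to the CSV file, writing the header on first use."""
    is_new_file = not csv_path.exists()
    with csv_path.open("a", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=HEADERS)
        if is_new_file:
            writer.writeheader()
        writer.writerow(
            {
                "date": run_date.isoformat(),
                # Missing fields become empty cells instead of raising errors.
                "title": scraped.get("title", ""),
                "price": scraped.get("price", ""),
            }
        )
```

Calling `append_price_row` once per weekly run with the scraped data and the run date grows the file by one row each time, which is the same shape of history the Zap builds in your spreadsheet.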

Congrats! Now, you can activate your Zap, and it will perform the action steps every week at the time you choose.

If you wish, you can populate the same spreadsheet with other products from other marketplaces by creating another Zap. Or you might create your own advanced Zap.

Testimonials on ZenRows

"I noticed the insane amount of time ZenRows has saved me". — Giuseppe C.

"For me, the best side is the reliability". — Jose Ilberto F.

"It handles getting data well, even behind sophisticated anti-bot solutions" — Cosmin I.

2. Octoparse

Octoparse is a web scraping software for easy data extraction. It offers an intuitive interface with a click-to-build approach for creating web scrapers. Moreover, it has hundreds of ready-made code templates for popular websites, which allows you to retrieve data without writing a single line of code.

Octoparse is intuitive enough for beginners, but it also boasts advanced features for more complex scraping scenarios, such as websites that require form filling or infinite scrolling. Additionally, it uses AI-powered algorithms to automatically select data from a target website.

How Octoparse Works

Octoparse takes the target URL and automatically analyzes the web page's structure to identify elements, such as text, links, and images, based on the website niche. You can then select and export your desired data using its point-and-click interface.

Octoparse also allows you to streamline your scraping process using templates. Those are designed to automatically retrieve data related to the website. For example, a real estate template automatically extracts details about properties, such as location, prices, descriptions, etc.

Testimonials on Octoparse

"The IP rotation feature prevents our requests from getting blocked". — Aris G.

"Advanced features are a bit complicated". — Adriano S.

"The UI [User Interface] is smooth and (...). Also, the exported data is accurate and saves much of my time". — Jassim.

3. ParseHub

ParseHub is a no-code web scraper available as a desktop app. It offers a straightforward three-step workflow process (enter target URL > click to select data > download results) that makes it easy for anyone to extract data from websites.

It also allows you to clean your data, which eliminates the need for further processing. However, its free plan comes with limited features. And, depending on your use case, you might need a subscription plan.

How ParseHub Works

ParseHub loads the website you want to scrape and allows you to select the desired data. If the page requires user interaction to access it, you can also do so visually. Once you run your scraper, you can manually retrieve the data from the app or upload it to Google Sheets.

Testimonials on ParseHub

"What I like best about ParseHub is the support; very fast to help". — Mateusz M.

"ParseHub can do a lot, such as creating templates, scheduling, scraping recurringly, scraping dynamic elements, and much more." — Diego Asturias.

"It (...) takes a lot of time to scrape a few pages. Also, the paid plan is high." — Mohammad B.

4. WebScraper.io

WebScraper.io offers a free and easy-to-use Chrome and Firefox browser extension to extract data from websites. Its point-and-click interface makes it easy to configure a scraper and export the results to file formats such as CSV.

WebScraper.io also allows you to completely automate data extraction using its cloud-based browser scraper. This feature enables you to schedule scraping tasks, automate data exports to external storage services like Dropbox, and manage projects via its API.

How WebScraper.io Works

To use WebScraper.io, navigate to the target website in the browser where the extension is installed and open the developer tools to locate the Web Scraper tab.

Then, you can quickly create a sitemap and add the desired selectors to create your web scraper. Those selectors define how WebScraper.io navigates the site and extracts the data.
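To make the sitemap idea concrete, here is a rough sketch of what a sitemap with two selectors might look like as data. The field names (`_id`, `startUrl`, `selectors`, `parentSelectors`, and the selector types) are modeled on the JSON the extension exports and should be treated as assumptions; compare them with an export from your own installation. The site URL and CSS selectors are placeholders.

```python
import json

# Hypothetical sitemap: one start URL and two text selectors under the root.
sitemap = {
    "_id": "example-products",
    "startUrl": ["https://example.com/products"],
    "selectors": [
        {
            "id": "product-title",
            "type": "SelectorText",
            "parentSelectors": ["_root"],
            "selector": "h2.title",  # placeholder CSS selector
            "multiple": True,
        },
        {
            "id": "product-price",
            "type": "SelectorText",
            "parentSelectors": ["_root"],
            "selector": "span.price",  # placeholder CSS selector
            "multiple": True,
        },
    ],
}

# Serialize to JSON, the text form in which sitemaps are usually shared.
sitemap_json = json.dumps(sitemap, indent=2)
print(sitemap_json)
```

In the extension you build this structure by pointing and clicking, so you rarely need to write it by hand.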

Testimonials on WebScraper.io

"It's easy to use, fast, and allows exporting to Excel and CSV files." — Hemal B.

"Even without deep programming knowledge, I could still set up and execute tasks." — Julian H.

"I experienced some problems with more complex websites, or websites that differ significantly in structure. This added time to my overall process." — Monica D.

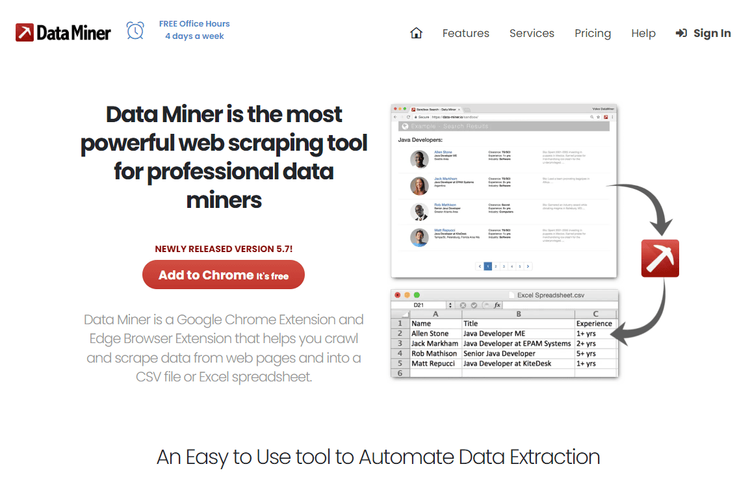

5. Data Miner

Like WebScraper.io, Data Miner is a Chrome and Edge browser extension for building web scrapers. It offers an intuitive user interface and workflow that lets you configure and execute scrapers in a few clicks.

With over 60,000 built-in rules, Data Miner accounts for numerous web scraping use cases, including complex websites that require user interactions or JavaScript rendering. You can also create your own custom extraction rules to get only the data you need. However, its free plan has limited features, so you might need a subscription for niche web scraping tasks.

How Data Miner Works

Data Miner takes your target URL (you must navigate to the website in your browser) and offers a list of pre-built scraping configurations (Recipes) matching the data you want to scrape. Then, you can customize those to specify the data elements you want to extract.

It also offers multiple scraping options for different use cases. For example, its New Scrape feature lets you retrieve data from a single webpage. Its Scrape and Append feature allows you to choose multiple pages and append results to make a large file. Lastly, New Page Automation enables Data Miner to scrape through multiple pages automatically.
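The difference between scraping one page and scraping through many pages can be sketched as a loop that keeps appending rows until there is no next page. This is a generic illustration, not Data Miner's implementation: `scrape_and_append`, `fetch_page`, and the fake site data are hypothetical.

```python
from typing import Callable, Optional

Row = dict
# A page yields its rows plus the next page's URL (None on the last page).
PageResult = tuple[list[Row], Optional[str]]


def scrape_and_append(
    fetch_page: Callable[[str], PageResult],
    start_url: str,
    max_pages: int = 50,
) -> list[Row]:
    """Follow next-page links from start_url and collect every row."""
    rows: list[Row] = []
    url: Optional[str] = start_url
    visited = 0
    # max_pages guards against sites whose pagination never ends.
    while url is not None and visited < max_pages:
        page_rows, url = fetch_page(url)
        rows.extend(page_rows)
        visited += 1
    return rows


# Made-up two-page site used in place of real network requests.
FAKE_SITE = {
    "page-1": ([{"name": "A"}, {"name": "B"}], "page-2"),
    "page-2": ([{"name": "C"}], None),
}

all_rows = scrape_and_append(FAKE_SITE.__getitem__, "page-1")
print(all_rows)
```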

Testimonials on Data Miner

"Easy to handle, and I like that the extractions can be very fast." — Angel U.

"The tutorials and interface make it super easy to get used to the tool and start right away." — David B.

"If you need to scrape in high volume, you may need to pay a lot. You can paginate, but you can only scrape one URL at a time." — Sanders M.

6. Apify

Apify is a platform with tons of web automation features that also caters to a non-technical audience. It has hundreds of ready-made templates to scrape popular websites without writing code.

How Apify Works

According to the Apify documentation, its Web Scraper actor requires two actions to extract data from popular pages, like Facebook, Instagram, or Google Maps: entering the target URL and selecting the page elements you want to extract. However, you might need to adjust the template and use additional paid tools to retrieve your desired data.

Testimonials on Apify

"Apify (...) reduces the complexity typically associated with building web scraping and automation tools." — Abdurrachman M.

"One of the best features of Apify is their free scrapers. They provide a variety of ready-made solutions for common web scraping tasks." — Dmitry

"Some actors are a little too expensive." — Emre K.

7. Import.io

Import.io is a versatile no-code web scraper that helps you retrieve and transform information from websites with ease. Its point-and-click interface, easy scheduling, and automated reporting make it a great option. Also, Import.io can extract data in different web scraping scenarios, including those that require filling out forms to access data.

This tool allows you to transform data into spreadsheets, comparison tables, change reports, and other usable formats. That makes it a perfect choice for businesses.

How Import.io Works

Import.io offers an Extractor function that takes the target URL and works behind the scenes to grab data from the webpage. The extractor loads the web page and allows you to train it to extract your desired data. That involves selecting specific page elements or discarding unwanted data.

Once the extractor is fully trained, you can run it to view your outputs, which can be exported in multiple formats.

Testimonials on Import.io

"A very easy scraping tool for those who have no basic knowledge of code and programming." — Vincent F.

"As a business with ambitious growth expectations, it was imperative that we found a partner that could provide web data at scale (...). Import.io is that partner." — John T. Shea.

"It's extremely expensive compared to its competitors." — Floris G.

Why Build a No-code Web Scraping Tool

The internet is the largest and most active data source on Earth, and capturing this data enables you to gain insights that can serve as a competitive edge. No-code web scraping tools bring this possibility to everyone interested in data, regardless of their technical background. You can get your web scraping project up and running with only a few clicks.

Moreover, there are numerous no-code web scraping use cases that can generate income, like a price monitoring tool. You can track real-time competitor prices using no-code web scraping tools. Also, by scraping industry pricing data, you can gain retail insights to inform your pricing strategies. Another popular use case is lead generation: scraping target business and persona information and storing it in a CSV file.

These use cases highlight the importance of no-code web scrapers in creating data-driven solutions for various industries. To explore additional project ideas, check out our article on amazing web scraping project ideas.

Conclusion

Manually retrieving data from websites can get complex, even for experienced developers. But no-code web scrapers like those mentioned above empower everyone to harness web scraping functionalities.

Those tools are great for extracting data. However, only a few account for the challenge of getting blocked by anti-bot measures, and leading the pack is ZenRows.

Frequently Asked Questions

How Do I Scrape a Website Without Code?

You can scrape a website without code using a low-code or no-code web scraping tool. To get started, choose a web scraper that meets your project needs. Also, with modern websites implementing anti-scraping measures, ensure your choice enables you to avoid bot detection.

What Is a No-code Scraper?

A no-code scraper is a data extraction tool or platform that allows you to retrieve data from websites without writing any code. Those tools aim to ensure inclusivity and make web scraping accessible in all industries.

Which Is the Best No-code Web Scraping Tool?

The best no-code tool largely depends on your project needs. There are several tools available, each with its own strengths and features. But generally, one that's easy to use, easy to understand, and enables you to scrape without getting blocked is the best one.

Does Web Scraping Need Coding?

Web scraping traditionally requires writing code to extract data. However, with the rise of low-code and no-code web scrapers, it's possible to scrape websites without coding. They provide user-friendly interfaces that allow users to drag and drop or click to implement instructions, eliminating the need for code.
