Web scraping is a powerful technique that opens up endless data-related possibilities, and here you'll find a list of web scraping project ideas to help you elevate your skills or build a business.

Whether you're a developer or a data scientist, this guide has you covered. We have a spoiler for you: the top 10 most exciting web scraping ideas you'll see in this guide:

- Build the ultimate video game database.

- Get a recipe idea from the ingredients you have.

- Create a sports statistic tracker to attract millions of fans.

- Build a news aggregator.

- Track IT salaries to advise people on when to quit their job.

- Find new clients for your business at the right time.

- Create a tech job portal.

- Predict the next trending stock.

- Get images to train your machine learning model.

- Improve the world with an aggregator of volunteer opportunities.

Let's get scraping!

Is a Web Scraper a Good Project?

No matter the industry, a web scraper is a project always worth your time and effort. Why? Because web scraping unlocks a wealth of online data, and data is one of the most valuable assets there is.

Data scraping applications are endless, from content aggregation to online price monitoring, from machine learning to search engines. Learn more about the many use cases in our article on what web scraping is about.

Get some online data and unleash your entrepreneurship to create a business out of it. Anyone could have built million-dollar services like CyberLeads or AnswerThePublic. All you need to replicate those successful projects is a web scraper, and for that, it's useful to get inspiration from web scraping business ideas.

Getting Started with Web Scraping Project Ideas in Python and NodeJS

If you don't know what technology to pick, remember that Python is the go-to language for scraping. That's because of its simple syntax and vast ecosystem of libraries. Find out more in our in-depth tutorial on web scraping with Python.

Another great option is JavaScript, the most popular language for web development. Explore our Node.js web scraping guide to learn more about it. And depending on your background, you might find R for web scraping is your best option.

These are some of the most popular choices for your web scraping project ideas. Based on your needs, though, you may go for other solutions, because some programming languages are better suited to high-performance or data processing tasks. To make it easy, you can check out our comparison guide about the best languages for web scraping.

Must-Have Tools for Your Scraping Projects Using Python and NodeJS

The must-have tools for data extraction depend on whether your target page is dynamic or not. A dynamic website is one that doesn't have all its content directly in its static HTML, and this sort of page is more popular every day.

To scrape static pages, you need to download the HTML document from the server and parse it. These tasks require an HTTP client and a parsing library, respectively.

A dynamic page relies on JavaScript to get data via API and requires a headless browser to get scraped. Thus, web scraping libraries boil down to HTTP clients, parsing libraries, and headless browsers.
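To make the static case concrete, here's a minimal sketch of the parsing step. It assumes the HTML was already downloaded by an HTTP client, and it uses only Python's standard library so it runs anywhere. The `LinkCollector` class, the `extract_links` helper, and the sample markup are hypothetical names made up for this illustration.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect the text and href of every <a href="..."> element."""

    def __init__(self):
        super().__init__()
        self.links = []            # list of (text, href) tuples
        self._current_href = None  # href of the link being read, if any
        self._current_text = []    # text fragments inside that link

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._current_href = dict(attrs).get("href")
            self._current_text = []

    def handle_data(self, data):
        # Only keep text while we are inside a link that has an href.
        if self._current_href is not None:
            self._current_text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._current_href is not None:
            text = "".join(self._current_text).strip()
            self.links.append((text, self._current_href))
            self._current_href = None


def extract_links(html):
    """Parse an HTML string and return its links as (text, href) tuples."""
    parser = LinkCollector()
    parser.feed(html)
    parser.close()
    return parser.links


# Hypothetical static page; a real scraper would get this string from an HTTP client.
SAMPLE_HTML = """
<html><body>
  <a href="/news/1">First headline</a>
  <a href="/news/2">Second <b>headline</b></a>
</body></html>
"""

print(extract_links(SAMPLE_HTML))
```

In practice, a dedicated parsing library gives you CSS selectors and more forgiving handling of broken markup, which is why most projects reach for one instead of the built-in parser.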

In Python, the most popular scraping packages are:

- HTTP client: Requests.

- Parsing library: Beautiful Soup.

- Headless browser: Selenium.

Instead, in Node.js they are:

Regardless of which approach you take, there's a common challenge: getting blocked. You may run into CAPTCHAs, IP bans, rate limiting, and other anti-bot systems. You can read our blog on web scraping without getting blocked.

The easy solution? ZenRows, a web scraping API with a complete toolkit to bypass anti-scraping measures. It also comes with premium proxies and has built-in headless browser capabilities.

Best Web Scraping Project Ideas

Let's now explore different compelling web scraping ideas!

1. Build a News Aggregator Like Squid as a Simple Web Scraping Project Idea

There's an overwhelming amount of information available online. The idea behind this web scraping project is to scrape news from various websites and present it in a single place with filters and categories!

This approach to news aggregation has proven successful with SQUID, an Android app with millions of downloads and more than 15k positive reviews. The Apple version of the app isn't popular, which means that there's still space in the market for similar tools.

✅ Steps to success:

- Identify and select reliable news sources to scrape data from.

- Build a web scraper that keeps extracting news articles, headlines, and metadata from those sites or their RSS systems.

- Implement a fresh interface for the news aggregator, allowing customization and personalization, such as choosing the news categories the user is most interested in.

- Devise an algorithm to rank news articles based on user preferences.

- Keep adding new news sources.

🛠️ Must-use tool: JAXB, one of the most powerful and efficient XML libraries to deal with RSS feed files, which are in XML format. Very handy for any feed-based web scraping ideas.
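Since RSS feeds are plain XML, the extraction step can be sketched in a few lines. JAXB is a Java library, so to stay in Python, the example below assumes the standard library's `xml.etree.ElementTree` instead. The feed content, the `parse_rss` and `by_category` helpers, and the field names are hypothetical and only illustrate the idea.

```python
import xml.etree.ElementTree as ET

# Hypothetical RSS 2.0 feed; a real aggregator would download this from a news site.
SAMPLE_FEED = """<?xml version="1.0"?>
<rss version="2.0">
  <channel>
    <title>Example News</title>
    <item>
      <title>Headline one</title>
      <link>https://example.com/one</link>
      <category>Tech</category>
    </item>
    <item>
      <title>Headline two</title>
      <link>https://example.com/two</link>
      <category>Sports</category>
    </item>
  </channel>
</rss>"""


def parse_rss(xml_text):
    """Return one dict per <item> with its title, link, and category."""
    root = ET.fromstring(xml_text)
    articles = []
    for item in root.iter("item"):
        articles.append({
            "title": item.findtext("title", default="").strip(),
            "link": item.findtext("link", default="").strip(),
            "category": item.findtext("category", default="").strip(),
        })
    return articles


def by_category(articles, category):
    """Keep only the articles in the category the user picked."""
    wanted = category.lower()
    return [a for a in articles if a["category"].lower() == wanted]


articles = parse_rss(SAMPLE_FEED)
print(by_category(articles, "tech"))
```

Running `parse_rss` on every source and merging the results gives you the single list that the filters and ranking algorithm would then work on.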

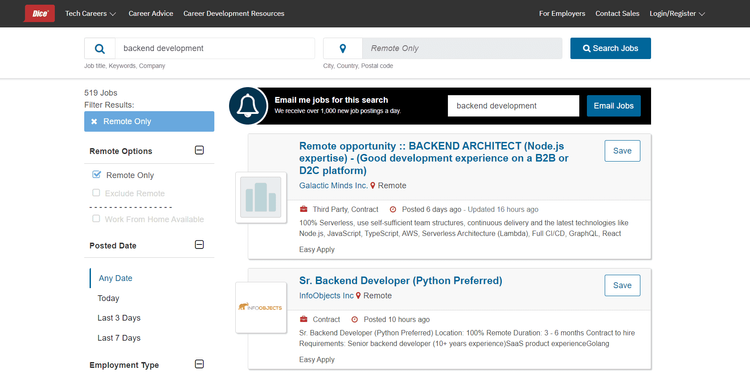

2. Create a Tech Job Portal By Scraping Indeed and Glassdoor

Developers are always looking for interesting job opportunities. The problem with Indeed and Glassdoor is that they aren't designed to meet the needs of a tech audience, but you could scrape job openings and build a streamlined tech job search platform. Save time for job seekers!

That's exactly how Dice has become the go-to resource for job seekers in the US tech industry. This site boasts millions of users and annual sales of more than $100 million. Similarly, AngelList's Career platform attracts thousands of people who want to work in startups.

✅ Steps to success:

- Identify and select reliable job listing sources, like Indeed and Glassdoor.

- Build a web scraper to extract tech job openings only and store them in a database.

- Design and develop a site for job search with advanced filtering options.

- Allow users to sign up to save their favorite jobs, set preferences, and receive job alerts.

🛠️ Must-use tool: Indeed Scraper, a solution to scrape job offers without getting blocked. It returns the data as JSON, which you can then export to any format or database.

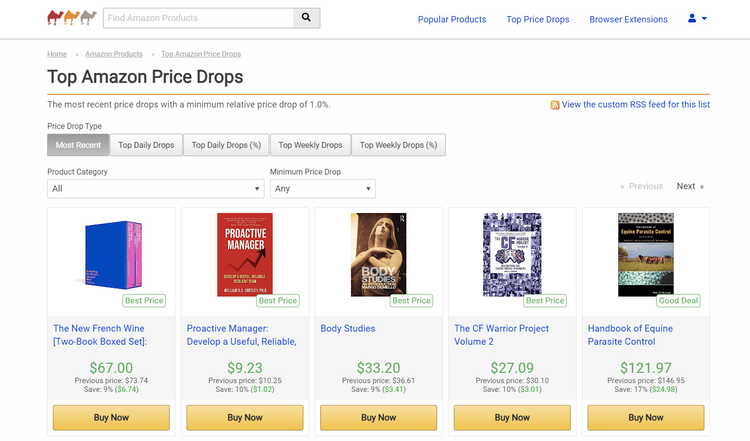

3. Alert Price Drops for Your Hunted Products on Amazon

Amazon product prices constantly change, and visiting the site many times a day to avoid missing a price drop on products on your wishlist would take plenty of time. Instead, track price fluctuations through scraping and get notified when there's a discount. This helps you make informed purchasing decisions and save money.

CamelCamelCamel is an existing platform that follows a similar approach. And although the site has millions of users, it feels quite old. Thus, there's an opportunity to build the next market-leading application!

✅ Steps to success:

- Build an Amazon web scraper and store the product data in a database.

- Integrate the scraper with users' Amazon wishlist products by using Amazon APIs.

- Set up a scheduler to launch your scraper several times a day.

- Enable a notification functionality to alert users when prices drop below their set thresholds.

🛠️ Must-use tool: Amazon Scraper, an easy tool to get products and listings without restrictions or limits.
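The notification step boils down to comparing the latest scraped price with each user's threshold. Here's a minimal sketch of that check, assuming the scraper has already produced a mapping of product IDs to prices. The `Watch` class, the `find_alerts` function, and the sample IDs are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Watch:
    """A product a user is tracking, with the price they are waiting for."""
    user: str
    product_id: str
    threshold: float


def find_alerts(watches, latest_prices):
    """Return (user, product_id, price) for every price below its threshold."""
    alerts = []
    for watch in watches:
        price = latest_prices.get(watch.product_id)
        # Skip products the scraper could not price in this run.
        if price is not None and price < watch.threshold:
            alerts.append((watch.user, watch.product_id, price))
    return alerts


# Hypothetical data: wishlists on one side, the latest scraper run on the other.
watches = [
    Watch("ana", "B001", 50.0),
    Watch("ben", "B002", 20.0),
    Watch("cy", "B003", 10.0),
]
latest_prices = {"B001": 45.99, "B002": 25.00}

print(find_alerts(watches, latest_prices))
```

A scheduler would call `find_alerts` after each scraping run and hand the result to whatever sends the emails or push notifications.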

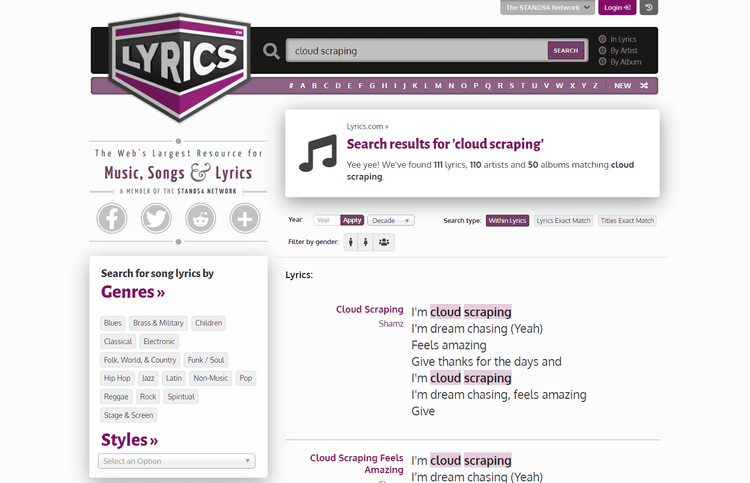

4. Discover Music as with Shazam but Based on Lyrics

How many times do we hear a song, we love it, the lyrics stick in our heads, but we don't know its title? Well, you can solve that problem with web scraping! Collect song lyrics from several sites and build a platform to find music based on words and phrases.

This web scraping idea is backed by some real-world sites, like Lyrics.com. That database of song lyrics has become a popular resource for music enthusiasts. Yet, its UI hasn't received an update in years, and there's room for a new, fresher, cooler app!

✅ Steps to success:

- Identify and select reliable music websites to scrape lyrics from, such as Lyrics.com and AZLyrics.

- Build a scraping script to get song data and lyrics from those sites.

- Process, clean, and aggregate the scraped data to avoid repetitions and store it in a database.

- Develop a search engine interface for users to enter some words and retrieve matching songs.

🛠️ Must-use tool: Premium scraping proxies to avoid the IP banning protections that lyrics sites usually adopt.
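One simple way to power the search step is an inverted index that maps each word to the songs containing it. The sketch below assumes the lyrics are already scraped and cleaned. The song titles, the placeholder lyrics, and the function names are made up for the example.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase the text and split it into words."""
    return re.findall(r"[a-z0-9']+", text.lower())


def build_index(songs):
    """Map every word to the set of song titles whose lyrics contain it."""
    index = defaultdict(set)
    for title, lyrics in songs.items():
        for word in tokenize(lyrics):
            index[word].add(title)
    return index


def search(index, query):
    """Return the titles whose lyrics contain every word in the query."""
    words = tokenize(query)
    if not words:
        return []
    matches = set.intersection(*(index.get(word, set()) for word in words))
    return sorted(matches)


# Placeholder lyrics, not taken from any real song.
songs = {
    "Song A": "walking through the rain tonight",
    "Song B": "dancing in the rain all night",
    "Song C": "sunshine on the road",
}
index = build_index(songs)

print(search(index, "the rain"))
```

This matches words in any order. Supporting exact phrases or typos would need a proper full-text search engine on top of the database.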

5. Build the Ultimate Video Game Database as a Fun Web Scraping Project Idea

Millions of users enjoy video games daily. Many of them are PC gamers and are always looking for info about old and new games. It's no coincidence that SteamDB, a third-party site based on Steam data, is one of the most loved sites by gamers.

The idea here is to scrape stores like Steam and Epic Games Store and sites like Can You RUN It. By integrating this information into a single platform, you can help gamers:

- Know where a video game is available for download.

- Find out stats about the most played video games at the moment.

- Get the latest news on updates and patches.

- Discover the minimum and recommended hardware requirements to run a game.

✅ Steps to success:

- Scrape game titles, descriptions, system requirements, release dates, and player statistics from Steam, Origin, Epic Games Store, UPlay, and similar digital stores.

- Integrate that data into a single database.

- Make the scraping a continuous process to track game releases, updates, and stats in real-time.

- Build a site to browse and search for games based on various criteria.

🛠️ Must-use tool: Most gaming stores rely on Cloudflare for anti-bot protection, so you need to bypass Cloudflare.

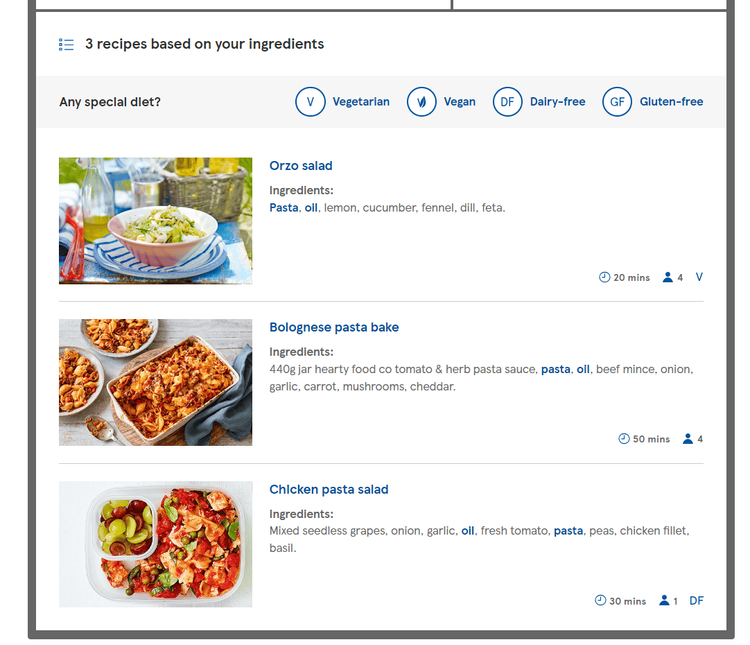

6. Get a Recipe Idea to Get the Best Out of the Ingredients You Have. Amazing Web Scraping Project Ideas Here.

Most people have a lot of ingredients in their kitchen but don't know what to do with them. At the same time, the world has been facing a food crisis for years, and it's time to avoid waste. Scrape recipes online and build a system to give users cooking ideas involving the ingredients they already have.

Sites like The Recipe Finder Tool provide a recipe recommendation engine, and it's one of the many cool data scraping project ideas. At the same time, none of them is popular, and the reason is bad marketing. Yet, building a successful recommendation recipe site based on ingredient availability is possible.

✅ Steps to success:

- Scrape recipes from the most popular cooking websites in the world.

- Clean and select the data to keep only the best recipes according to user reviews on those sites.

- Build a recommendation engine that allows users to insert one or more ingredients and returns a list of possible recipes.

🛠️ Must-use tool: An efficient HTML parser like Beautiful Soup to extract data from many pages quickly. That's often necessary for web scraping ideas like this one.
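The recommendation engine can start very simple: score each recipe by the share of its ingredients the user already has. Here's a minimal sketch under that assumption. The `rank_recipes` function and the sample recipes are hypothetical, and a real engine would also weigh user reviews.

```python
def rank_recipes(recipes, available):
    """Rank recipes by the share of their ingredients the user already has.

    Returns (name, coverage, missing_ingredients) tuples, best match first.
    """
    pantry = {item.lower() for item in available}
    ranked = []
    for name, ingredients in recipes.items():
        needed = {item.lower() for item in ingredients}
        if not needed:
            continue  # skip recipes scraped without an ingredient list
        missing = needed - pantry
        coverage = 1 - len(missing) / len(needed)
        ranked.append((name, round(coverage, 2), sorted(missing)))
    # Highest coverage first; ties are broken alphabetically.
    ranked.sort(key=lambda entry: (-entry[1], entry[0]))
    return ranked


# Hypothetical scraped recipes.
recipes = {
    "omelette": ["eggs", "butter", "cheese"],
    "pancakes": ["eggs", "flour", "milk", "butter"],
    "salad": ["lettuce", "tomato"],
}

for entry in rank_recipes(recipes, ["Eggs", "Butter", "Flour"]):
    print(entry)
```

Returning the missing ingredients alongside the score lets the interface tell users what they'd still need to buy.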

7. Assemble an Internet Time Machine as the Most Basic Scraping Project

What will be left of the current web in 100 years? The online world evolves so fast that the history of the internet is at risk. By crawling raw HTML pages, you can create a record of what the internet looked like at different points in time. That's one of the most basic web scraping project ideas, as it doesn't involve data extraction logic.

This idea isn't new, though. The Wayback Machine is an archive with several versions over time of the most popular sites. That's useful for anyone who wants to see how the web has changed over the years. You may want to build something similar to track competitors or an area of knowledge. This is one of many web scraping undergraduate project ideas.

✅ Steps to success:

- Identify the websites that you want to scrape.

- Write a web crawler to visit all site pages and download them.

- Store the extracted HTML document in a database.

🛠️ Must-use tool: Scrapy, one of the most popular crawling libraries in the world.
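For the storage step, each downloaded page only needs a URL, a timestamp, and the raw HTML. Below is a minimal sketch assuming SQLite as the database. The table layout and the `open_archive`, `save_snapshot`, and `history` helpers are hypothetical choices, not a prescribed schema.

```python
import sqlite3
from datetime import datetime, timezone


def open_archive(path=":memory:"):
    """Open (or create) the snapshot database."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS snapshots (
               url        TEXT NOT NULL,
               fetched_at TEXT NOT NULL,
               html       TEXT NOT NULL
           )"""
    )
    return conn


def save_snapshot(conn, url, html, fetched_at=None):
    """Store one version of a page, stamped with the time it was fetched."""
    fetched_at = fetched_at or datetime.now(timezone.utc).isoformat()
    conn.execute(
        "INSERT INTO snapshots (url, fetched_at, html) VALUES (?, ?, ?)",
        (url, fetched_at, html),
    )
    conn.commit()


def history(conn, url):
    """Return every stored version of a page, oldest first."""
    rows = conn.execute(
        "SELECT fetched_at, html FROM snapshots WHERE url = ? ORDER BY fetched_at",
        (url,),
    )
    return rows.fetchall()


conn = open_archive()
save_snapshot(conn, "https://example.com", "<h1>Old design</h1>", "2023-01-01T00:00:00+00:00")
save_snapshot(conn, "https://example.com", "<h1>New design</h1>", "2024-01-01T00:00:00+00:00")
print(history(conn, "https://example.com"))
```

ISO 8601 timestamps in the same time zone sort correctly as plain text, which is why ordering by `fetched_at` returns the versions chronologically.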

8. E-Commerce Price Comparison Tool as one of the Most Popular Web Scraping Project Ideas for Beginners

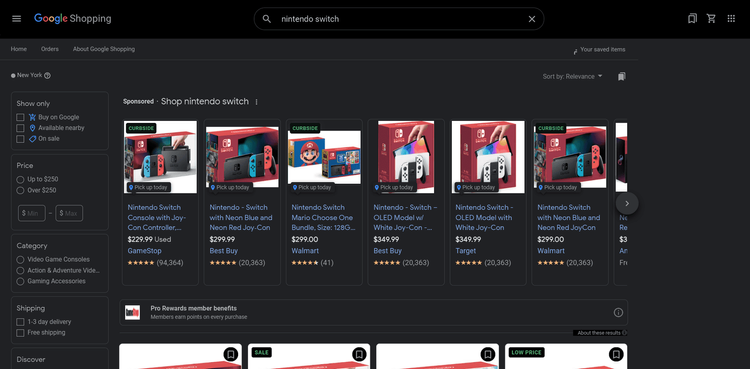

The same product is usually available on many e-commerce sites at different prices. By tracking that data, you can always know which platform is the best to buy an item from. Save money when shopping online, especially when it comes to buying expensive items.

That's precisely what Google Shopping aims to do. Yet, the platform has never become popular, and there's room for improvement. Start scraping e-commerce sites to build an alternative!

✅ Steps to success:

- Identify the e-commerce websites that you want to scrape, such as Alibaba, Amazon, Walmart, and eBay.

- Write specific web spiders that can visit the sites and extract product prices from them.

- Aggregate the scraped data into the same database.

- Create an online price comparison tool to search and compare the deals.

🛠️ Must-use tool: Alibaba Scraper and Walmart Scraper to get product prices from the most convenient e-commerce platforms on the planet. Make your web scraping ideas happen!

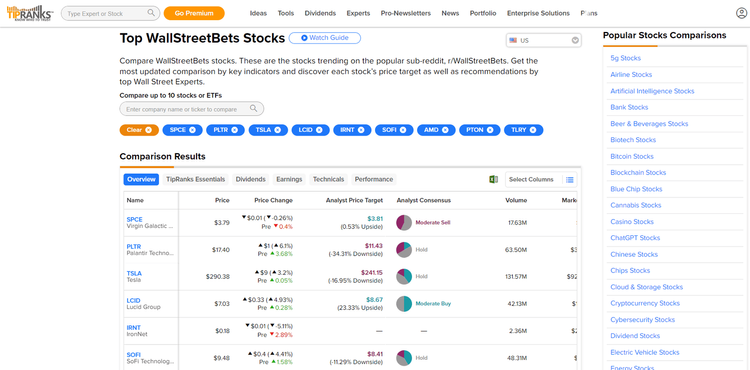

9. Predict the Next Trending Stock

Many online projects track the WallStreetBets subreddit for indications of upcoming trending stocks. One of them is TipRanks, which performs sentiment analysis to figure out whether users there are buying or selling a specific stock.

The main concern is the source of the data. Is WallStreetBets a good place to find stock opinions? There are other more reliable sites and subreddits to monitor, so gather data from them to identify the next stocks to buy.

✅ Steps to success:

- Write a web scraper to track discussions about stocks from popular social platforms like Reddit.

- Develop a machine learning model that can predict the next trending stock.

- Feed the extracted data to the model.

🛠️ Must-use tool: Python, the most popular programming language in the AI and data science world.
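Before training any model, a useful first feature is simply how often each ticker gets mentioned. Here's a minimal sketch of that counting step. It assumes tickers are written in the `$GME` style, which is a simplification, and the sample posts and the `count_tickers` function are made up.

```python
import re
from collections import Counter

# Assumption: tickers appear as a dollar sign followed by 1 to 5 capital letters.
TICKER_PATTERN = re.compile(r"\$([A-Z]{1,5})\b")


def count_tickers(posts):
    """Count in how many posts each ticker is mentioned."""
    counts = Counter()
    for post in posts:
        # A set counts each ticker once per post, so one spammy post can't dominate.
        counts.update(set(TICKER_PATTERN.findall(post)))
    return counts


# Hypothetical scraped posts.
posts = [
    "Buying $GME and $AMC today",
    "$GME to the moon, $GME!",
    "Selling $AMC, holding $TSLA",
    "$GME earnings next week",
]

print(count_tickers(posts).most_common(2))
```

Tracking these counts over time, rather than as a single total, is what would let a model spot a ticker that's starting to trend.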

10. Spot a Real Estate Opportunity

The best way to make a deal in real estate is to keep monitoring the market, and scraping real estate listings and property data from sites like Zillow is the easiest way to do so. Use that data to get insights to make informed decisions about buying or selling.

For example, you could use this project to track the prices of homes in a specific neighborhood over time. That information will help you or your users identify homes that are undervalued, overpriced, or likely to appreciate in value.

✅ Steps to success:

- Create a web scraper to extract real estate data from Zillow.

- Store the extracted data in a database.

- Process and analyze the data to identify market trends and opportunities.

- Present the data in a real estate platform.

🛠️ Must-use tool: Zillow Scraper, which makes getting real estate data easier than ever.

11. Create a Flight Comparison Tool to Always Get the Best Deal

Not all airlines are the same, and we all want to travel comfortably, especially on long trips. Price isn't the only factor to consider, but sites like Skyscanner mainly focus on that.

Scrape airline sites and retrieve the data needed to build a tool to compare flights and services offered by airlines. That's useful for finding the best deals while ensuring users are getting the best value for their money.

✅ Steps to success:

- For this web scraping idea, collect the list of all major airlines.

- Write a web scraper for each airline site.

- Store the extracted data in a database and standardize it toward the same format to facilitate comparison.

- Build a user interface that allows users to compare flights.

🛠️ Must-use tool: Selenium, a great headless browser to get dynamic price data from airline sites.

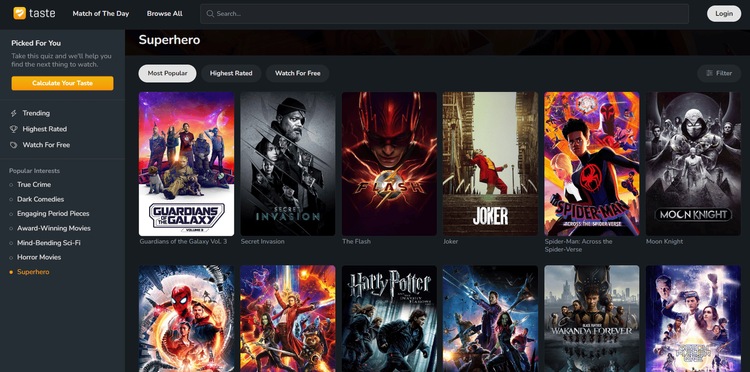

12. Build a Recommendation Tool to Watch Movies and TV Shows You Might Like. People Love What Web Scraping Project Ideas Can Create.

Imagine having a system that retrieves the best movie recommendations based on what you like. You could watch only movies and TV shows that you're likely to love! Building such a platform is possible, especially with the right data.

To do it, you need to collect movie reviews and descriptions from IMDb and Rotten Tomatoes. Taste.io did something similar and has thousands of downloads.

✅ Steps to success:

- Collect data from films and television programs.

- Build a recommendation system through data science.

- Deploy the online platform.

- Let users take a movie taste test to tune the algorithm.

- Give users a chance to say whether they like the recommendations to train the model behind the system.

🛠️ Must-use tool: A powerful proxy rotator to retrieve data from sites like IMDb and Rotten Tomatoes without getting Access Denied errors.

13. Track IT Salaries to Advise People on When to Quit Their Job

Support developers in making smart career decisions with this web scraping project idea: gather information on salaries of data science and IT professionals from a variety of sources and create a tool to compare salaries by experience, position, and industry.

That'll help people in IT understand when it's time to find a new job or negotiate a raise. Some private companies provide that service, such as PayScale, but a popular public platform is lacking. Time to build it!

✅ Steps to success:

- Scrape salaries and job positions from Indeed, ZipRecruiter, GlassDoor, and similar sites.

- Aggregate the data to associate salary ranges with job roles.

- Develop a salary comparison tool with data exploration capabilities and many filtering options.

🛠️ Must-use tool: Proxies with geolocation to access local job platforms and see offers in different countries.
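The aggregation step can be sketched as grouping scraped salaries by a normalized job title and summarizing each group. The example below assumes salaries were already converted to the same currency and period. The `salary_ranges` function and the sample postings are hypothetical.

```python
from collections import defaultdict
from statistics import median


def salary_ranges(postings):
    """Group (role, salary) pairs by role and summarize each group."""
    by_role = defaultdict(list)
    for role, salary in postings:
        # Normalize titles so "Data Scientist" and "data scientist " land together.
        by_role[role.strip().lower()].append(salary)
    return {
        role: {
            "min": min(values),
            "median": median(values),
            "max": max(values),
            "count": len(values),
        }
        for role, values in by_role.items()
    }


# Hypothetical scraped postings with yearly salaries in one currency.
postings = [
    ("Data Scientist", 120_000),
    ("data scientist ", 100_000),
    ("Data Scientist", 140_000),
    ("Backend Developer", 90_000),
    ("Backend Developer", 110_000),
]

for role, stats in salary_ranges(postings).items():
    print(role, stats)
```

The median is used instead of the average because a handful of very high or very low postings would otherwise skew the range people compare themselves against.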

14. Crawl YouTube to Create a Database of Influencers

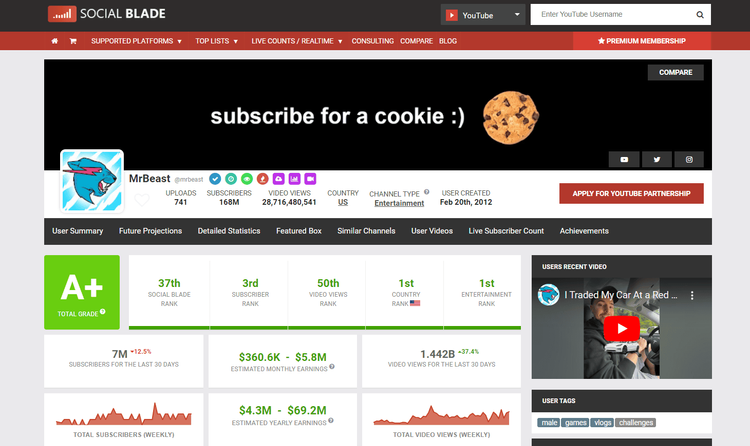

Scrape YouTube and start populating a database of influencers, including their contact details, social media profiles, and audience demographics. The most popular social media analysis site is SocialBlade, with tens of millions of monthly visits, which proves this has a real market.

However, this site mainly focuses on telling how much influencers make. Businesses looking for partnerships are interested in other information to find good fits to promote their services to their target audience.

✅ Steps to success:

- Build a YouTube spider that can crawl channels and automatically scrape data from them.

- Store all the data in a centralized database.

- Process the scraped data to infer the target audience of each YouTuber based on video titles and interactions.

- Present the database of influencers in an explorable database system.

🛠️ Must-use tool: The easy-to-use YouTube Scraper to get data from YouTube videos and channels without getting stopped.

15. Scrape Long-Tail Keywords Based on a Search Term for SEO

To deliver SEO hits, you need to know what users tend to search. There are several platforms to get information about search volumes out there, but some are more innovative than others. They scrape long-tail keywords from a search term to get a better understanding of what people look for.

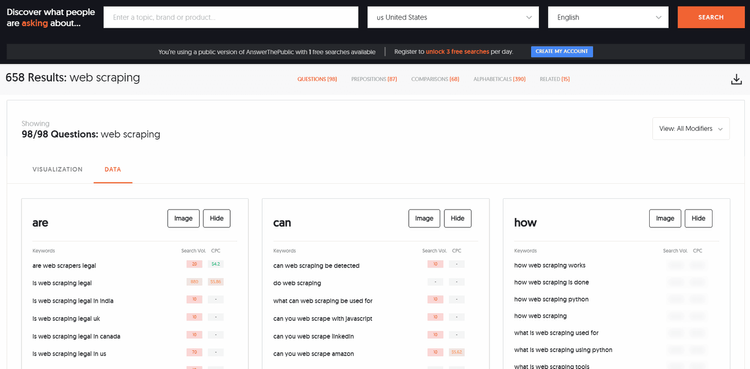

An example is AnswerThePublic, a fast-growing site that gives users relevant insights to write content that leads to an increase in traffic.

✅ Steps to success:

- Identify the search term that you want to scrape long-tail keywords for.

- Scrape Google's auto-complete generated search terms to get a list of long-tail keywords.

- Store the list of keywords in a database.

- Create a service for SEO optimization based on that data.

🛠️ Must-use tool: Google Search Scraper to get SERPs, organic, and paid search results with a single API call.

16. Find New Clients for Your Business by Monitoring CrunchBase

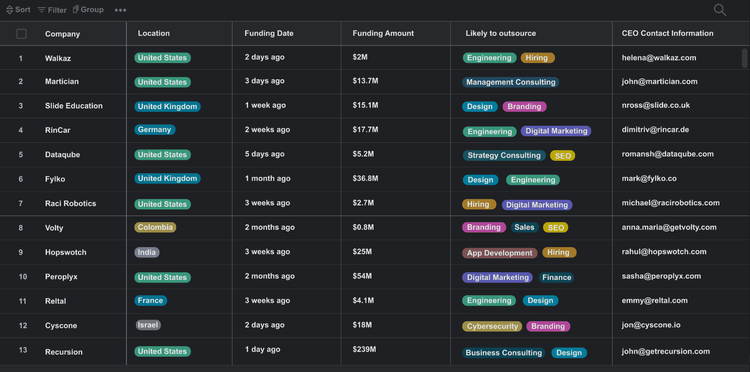

Scraping CrunchBase is a good way to discover businesses that recently raised money because they're likely to be looking for companies to outsource some of their workload to. Thus, you can use that information to help companies already in the market find new clients.

For example, CyberLeads has built a million-dollar company by doing something similar. It retrieves data from CrunchBase, integrates it into an Airtable database, and sells insights to paying users.

✅ Steps to success:

- Scrape CrunchBase to get information about startups, such as their funding history, target market, and contacts.

- Store that data in a privately accessible database.

- Notify users when a new startup in their industry has just raised money.

- Give users the ability to reach out to companies to offer their services.

🛠️ Must-use tool: Crunchbase Scraper to get listings, companies, investors, and people related to the startup world.

17. Discover What Users Think About Brands and Products: A Web Scraping Project Idea Rising in Popularity

Most products and services fail because they don't meet users' needs or expectations. Avoid that by scraping data from social media platforms to find out what people think about brands. That also applies to politics, as we all know, due to the Cambridge Analytica scandal. Can data change the fate of an election? It would seem so, at least in part!

This is a popular data scraping project because it provides value to any business. The goal is to track user engagement and sentiment related to your products. The information is then used to improve product development, devise new marketing strategies, and track the effectiveness of current campaigns.

✅ Steps to success:

- Scrape public social media platforms like Reddit and Threads to retrieve user comments and opinions.

- Feed the scraped data to a natural language processing technology for sentiment analysis.

- Instruct the model to identify trends and produce insights.

🛠️ Must-use tool: tidytext, one of the most popular packages to perform text mining and analysis tasks. It's an R library, so check out our guide about web scraping in R.
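To show what the sentiment analysis step does at its simplest, here's a lexicon-based sketch in Python, kept in the same language as the other examples in this article. The tiny word lists and the `sentiment_score` and `label` functions are assumptions for illustration. A real project would rely on an established lexicon or a trained model.

```python
import re

# Tiny hypothetical lexicons; real ones contain thousands of scored words.
POSITIVE = {"love", "great", "excellent", "good", "fast"}
NEGATIVE = {"hate", "bad", "terrible", "slow", "broken"}


def sentiment_score(text):
    """Return the number of positive words minus the number of negative words."""
    words = re.findall(r"[a-z']+", text.lower())
    return sum((word in POSITIVE) - (word in NEGATIVE) for word in words)


def label(text):
    """Turn the numeric score into a coarse label."""
    score = sentiment_score(text)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


# Hypothetical scraped comments about a product.
comments = [
    "I love this phone, great battery",
    "Terrible support and slow shipping",
    "It arrived on Tuesday",
]

for comment in comments:
    print(label(comment), "|", comment)
```

Word counting like this misses negation and sarcasm, so treat it as a baseline to compare better models against.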

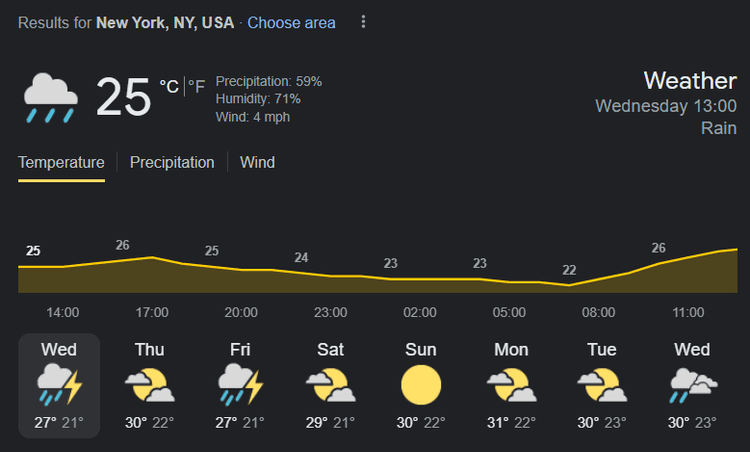

18. Scrape Weather Data To Build a Forecast App with Data Science

Weather apps are among the most downloaded apps in app stores. That's because everyone wants to stay up to date about the weather conditions to plan their day, and weather forecasting is now more accurate than ever before, thanks to data science.

One of the most popular sources when it comes to the weather is the Google widget.

Behind the scenes, it uses machine learning to predict weather conditions and climate risks. The ECMWF (European Centre for Medium-Range Weather Forecasts) also uses machine learning models for forecasts.

Now it's your turn to build the next-generation weather forecast app based on data science. You first need to collect as much weather data as possible. When the forecast model is ready, you can then build a weather forecast app around it.

✅ Steps to success:

- Extract past weather data from public and government-owned platforms.

- Train a machine learning model for prediction with that data.

- Get current weather data from the OpenWeather API.

- Pass it to the model to get some forecasts.

🛠️ Must-use tool: Golang, because of its incredible performance and ability to deal with large data.

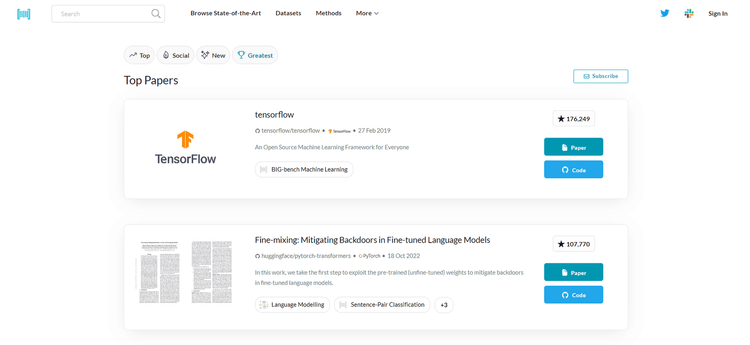

19. Build a Platform to See the Most Popular AI or Data Science Research Papers in Real Time

AI and data science are such interesting topics that many want to stay up-to-date on the latest research in the field. One of the possible web scraping ideas is to get papers from online journals about those topics.

After collecting a good amount, you can create a platform that shows the most popular papers in real-time. You'd build a web app like Papers With Code but related to AI and data science.

✅ Steps to success:

- Get research paper PDFs on AI and data science from sites like ResearchGate and IEEE.

- Store them in cloud storage and save their references in a database.

- Present the papers in real time on a web platform.

- Offer users an upvote system, filters, and a search feature.

🛠️ Must-use tool: A CAPTCHA solver, as most paper sites ask you to prove you aren't a bot before the download.

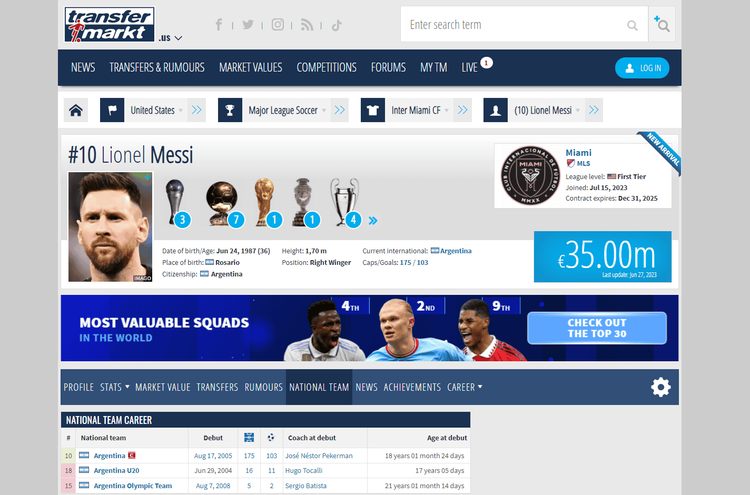

20. Build a Sports Statistic Tracker to Attract Millions of Fans

There are billions of sports fans in the world, and many of them spend tons of hours on sports sites. They look for news, but especially for real-time stats and historical results. For example, Transfermarkt has more than 25 million visits per month!

The definitive sports statistic tracker can rely on data as a killer feature. For that, you need to scrape sports data to build such a platform. Retrieve player stats, team performance, and match results from several sources.

✅ Steps to success:

- Scrape historical and current sports stats from popular specialized sites and Wikipedia.

- Integrate all data so that there's only one reliable data point for each element.

- Build a mobile app with a great UX or a web application to present this data in SEO-oriented pages.

- Allow users to select their favorite teams and players to make it easier to track their stats.

🛠️ Must-use tool: A solution with advanced anti-scraping capabilities, such as ZenRows, as most sports sites protect their data with advanced technologies.

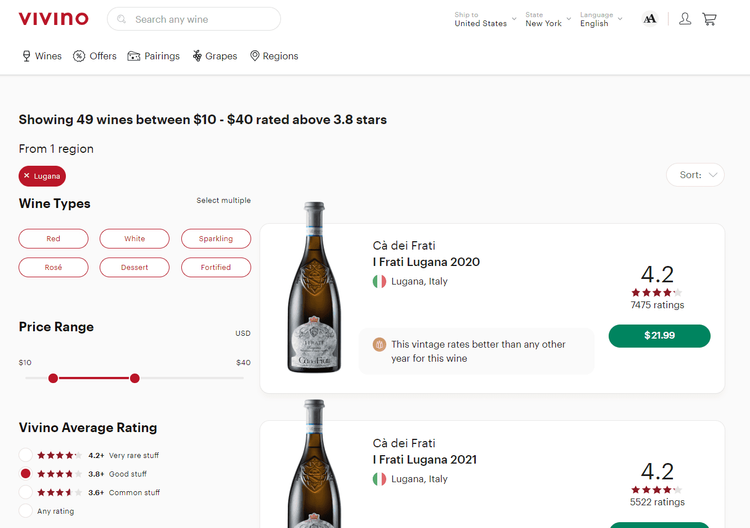

21. Create a Next-Generation Wine Selection Assistant

Wine attracts people from all over the world, but not everyone is an expert, and choosing the right one is difficult. A data-based wine selection assistant might be helpful! All you have to do is scrape wine reviews, ratings, and characteristics and create an intuitive interface to filter by parameters.

A wine app like Vivino, with more than 60 million downloads, recommends wines based on user preferences for an occasion or meal.

✅ Steps to success:

- Scrape wine names, characteristics, and vintages from producer sites and public wine databases.

- Get user reviews and opinions from review sites and social networks for each wine.

- Aggregate all data into a single database.

- Create a system that accepts the desired characteristics sought by the user or food ingredients and returns a list of good wines.

🛠️ Must-use tool: A fully featured HTML parser like Cheerio to extract data from several sites. It's a prerequisite for data scraping ideas like this one.

22. Collect Data from Governments to Monitor the Health and Growth of the World's Population

Track the health and growth of the world's population by scraping government sites. Look for information about births, deaths, and specific diseases. Provide insights about the present and projected future of the world.

Worldometer has been doing that for years and was one of the most visited sites during the latest global pandemic. After all, what's better than data about the world we live in?

✅ Steps to success:

- Search government sites for official data on a country's medical situation.

- Extract that data.

- Repeat the operation for each country that provides public information about the health of its population.

- Aggregate the data.

- Make it easier to explore and understand in an online platform.

🛠️ Must-use tool: A proxy rotator to avoid getting blocked.

23. Scrape Free Images from Popular Providers to Train Your Machine Learning Model

The web is full of copyright-free images you can use as you want. Retrieve tons of them from several providers like Unsplash to train your machine learning models with visual recognition capabilities.

✅ Steps to success:

- Crawl sites like Unsplash, Pexels and Iconfinder to discover as many images as possible.

- Scrape their metadata, descriptions, and tags and download the images.

- Train your machine learning models on those images based on your data science goals.

🛠️ Must-use tool: Celery, an open-source asynchronous task queuing system to create a distributed scraping process that downloads images.

24. Create a Resource Hub for Language Learners

Language learning is a subject with wide interest. One example is Duolingo, an app with half a billion downloads.

The main challenge when learning a new language is finding reliable resources, so imagine having a hub where users can easily search and download what they're looking for. How many would such a platform attract?

Scrape vocabulary lists, words of the day, audio files, and online dictionaries. Turn that idea into reality!

✅ Steps to success:

- Identify reliable sites that host language learning media files, such as college pages with programs for foreign students.

- Scrape those resources and categorize them in a database by language and level.

- Create a web app where users can search and filter resources based on specific criteria.

🛠️ Must-use tool: Playwright because of its feature to get optimized CSS selectors by clicking elements in a browser, which makes it easier to scrape sites in languages you don't know.

25. Create a Travel Itinerary Generator

Tourists want to try to make the most of the limited time they have in a city. That's why they tend to seek advice on places to visit or experiences to live. They might end up reading some blog posts, but what about a mobile app?

By getting popular attractions from Google Maps and TripAdvisor, you can create an itinerary generator tool like TripHobo. This could be a mobile app with some data preloaded so that it doesn't require an Internet connection.

✅ Steps to success:

- Scrape data on attractions of the most visited cities in the world from Google Maps or TripAdvisor.

- Add them to a database, together with aggregated user evaluations about them.

- Ask users to enter their preferences in terms of what they want to see and how much they're willing to pay.

- Generate a few itinerary options, prioritizing what other people loved about a particular destination.

🛠️ Must-use tool: Google Maps Scraper, the definitive tool to extract landmarks and must-visit places.

26. Improve the World with a Volunteer Opportunities Aggregator

We all want to make the world a better place, and an approach to doing that is to use data to help people find meaningful volunteer work. The idea is to collect volunteer opportunities and create a centralized platform.

Something similar exists and is called VolunteerMatch, but it focuses on the US. Why not create something more global? The world needs you!

✅ Steps to success:

- Scrape volunteer opportunities from local platforms, such as municipality and NGO sites.

- Process the data so that you can store it in a centralized database.

- Develop an easy and accessible interface to enable users to search and filter valuable volunteer work.

🛠️ Must-use tool: A configurable crawler with timeouts like Scrapy to avoid overloading the volunteering sites with requests.
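In Scrapy, that kind of politeness is mostly a matter of project settings. The fragment below is a sketch of a `settings.py` for a hypothetical project. The setting names are standard Scrapy settings, but the values are illustrative assumptions you'd tune for each site.

```python
# settings.py fragment for a hypothetical Scrapy project.
# The values below are illustrative, not recommendations for any specific site.

ROBOTSTXT_OBEY = True                # respect each site's robots.txt rules

DOWNLOAD_DELAY = 2.0                 # seconds to wait between requests to the same site
CONCURRENT_REQUESTS_PER_DOMAIN = 1   # never hit one site with parallel requests
DOWNLOAD_TIMEOUT = 30                # give up on a response after 30 seconds

AUTOTHROTTLE_ENABLED = True          # adapt the delay to how fast the server responds
AUTOTHROTTLE_START_DELAY = 2.0       # initial delay used by AutoThrottle
AUTOTHROTTLE_MAX_DELAY = 30.0        # upper bound when the server is slow

RETRY_TIMES = 2                      # retry failed requests at most twice
```

Small municipality and NGO sites often run on modest hosting, so a slow and steady crawl is both the considerate option and the one least likely to get you blocked.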

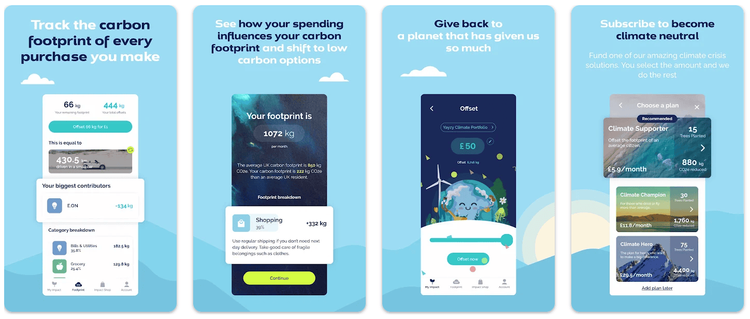

27. Build a Footprint Portal to Let People Know What Companies Are Green and Which Aren't

Another way to help the world is to prefer products from green companies. Since many companies know that, they pass themselves off as green when they are not. How to address this problem? With something objective: data!

Start by collecting carbon footprint reports on the best-known companies. Use scraping to extract only the key points and create a database of companies that truly care about their footprint. That information enables users to make sustainable choices about where to shop and eat.

Yayzy is an example of a more generic app that helps consumers reduce their footprint.

✅ Steps to success:

- Identify the companies that you want to include in the footprint portal.

- Gather data about the companies' carbon footprints from their websites, annual reports, and sources like WWF.

- Store the data in a database.

- Expose the data to a site where users can search and filter.

🛠️ Must-use tool: WWF's Carbon Footprint Calculator, a tool to get the footprint generated by a business office.

Web Scraping Data Science Project

In data science projects, you won't always find ready-made datasets to get started. Oftentimes, you need to roll up your sleeves and retrieve the data you need with a custom script.

Here's why data scraping is so valuable in the data science industry: it enables researchers to gather vast amounts of data and get valuable insights from it.

A web scraping data project is helpful in many industries, including:

- Decision-making: Collect insights from social media and use them to make data-driven decisions.

- Finance: Use data to do quantitative analysis, risk assessment, and stock picking. Define data-driven investment strategies.

- Market Research: Retrieve data on consumer behavior and product reviews to do sentiment analysis. Track your competitors to understand what direction the market is taking.

Conclusion

In this article, you discovered many web scraping project ideas you can use and real examples of companies that perform data extraction. All you need are the right tools, and here you looked at some of the most popular ones.

You now know:

- Whether it's worth building a web scraper.

- What tools and programming languages to use.

- 27 ideas for building a successful scraper in dozens of industries.

However, no matter how good your idea is, anti-scraping measures will always be your main challenge. Avoid them with ZenRows, a web scraping API with IP rotation, CAPTCHA solving, headless browser capabilities, and an advanced built-in bypass to avoid getting blocked. Scraping any site can be easier. Try ZenRows for free!
