Are you web scraping with Playwright in JavaScript and want to extract content from multiple pages? Playwright makes it easy with its automation capabilities.

In this article, you'll learn how to use Playwright to extract data from paginated websites, including those using JavaScript for infinite scrolling.

You'll use JavaScript in this tutorial, but the same methods work with Playwright's other official language bindings: Python, Java, and .NET (C#).

Scrape With a Navigation Bar

The navigation bar is the simplest and most common form of pagination. You can scrape a website with a navigation bar by following the next page link on the bar or changing the page number in its URL.

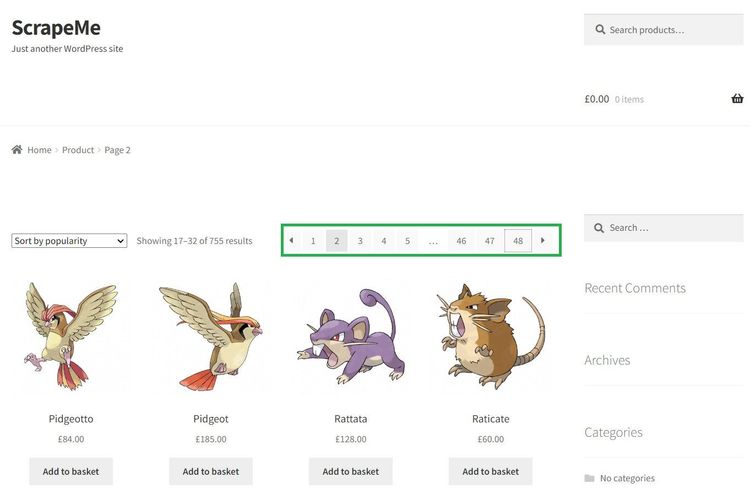

In this section, you'll scrape product information from ScrapeMe, a website with a navigation bar, to see how both methods work. See how it features the navigation bar below:

You'll scrape all 48 pages in the following sections.

Use the Next Page Link

The next page link method uses Playwright's click() method to click the next arrow in the navigation bar, scraping each page before moving on to the next one. You'll collect product names and prices from the target website.

Extract the entire page's HTML with the following code:

const { chromium } = require("playwright");

(async () => {

// start the browser instance

const browser = await chromium.launch({ headless: false, timeout: 60000 });

const context = await browser.newContext();

const page = await context.newPage();

// launch the target website

await page.goto("https://scrapeme.live/shop/", { timeout: 1000000 });

// get the HTML content of the page

const html = await page.content();

console.log(html);

// close the browser

await browser.close();

})();

It extracts the website's HTML, as shown:

<!DOCTYPE html>

<html lang="en-GB">

<head>

<!-- ... -->

<title>Products – ScrapeMe</title>

</head>

<body>

<!-- ... -->

</body>

</html>

Now, scale that up to scrape specific product information from all pages, including names and prices.

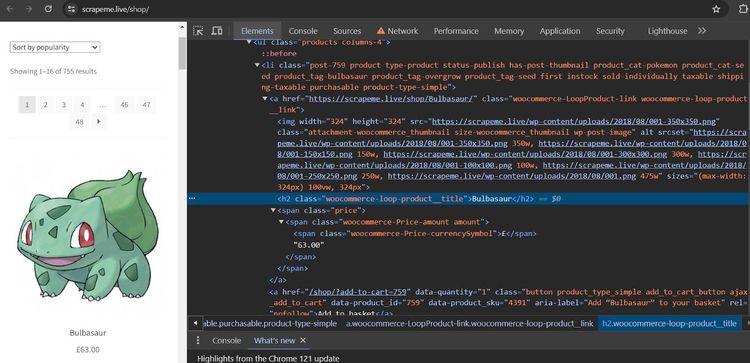

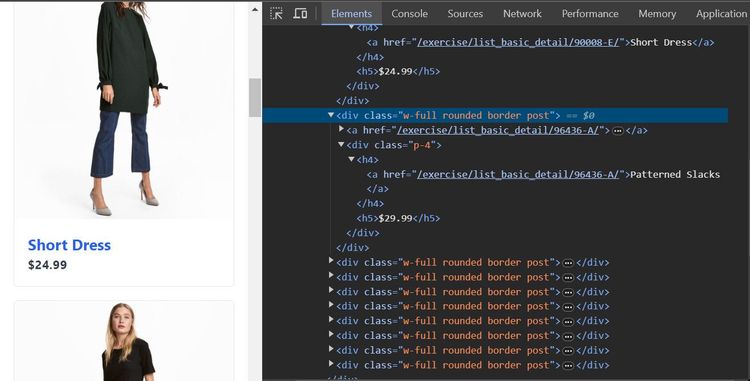

Launch the target website in your browser, right-click any product, and click Inspect to view the product elements. Here's the HTML layout on Chrome:

Each product is inside a container. You'll loop through each container to scrape the required data.

To begin, create an asynchronous function that runs immediately. Inside it, launch a Chromium browser, create a new page, and visit the target website:

// import the required library

const { chromium } = require("playwright");

(async () => {

const browser = await chromium.launch({ timeout: 60000 });

const context = await browser.newContext();

const page = await context.newPage();

await page.goto("https://scrapeme.live/shop/", { timeout: 1000000 });

// close the browser

await browser.close();

})();

Next, define a scraper function that loops through each product container to scrape specific information (product names and prices):

(async () => {

// ...

// define the scraper function

async function scraper() {

// find all product containers on the page

const productContainers = await page.$$(".woocommerce-LoopProduct-link");

for (const container of productContainers) {

// extract product name and price from each container

const productName = await container.$eval(".woocommerce-loop-product__title", element => element.innerText);

const price = await container.$eval(".price", element => element.innerText);

console.log("Name:", productName);

console.log("Price:", price);

}

}

// close the browser

await browser.close();

})();
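Each $eval call in the scraper function above is a separate round trip between Node.js and the browser, and it throws if a container lacks the expected element. As an alternative, here's a minimal sketch that collects every name and price on the page in a single page.$$eval() call. The function name scrapeProducts is hypothetical, and it assumes the same ScrapeMe selectors used above. It returns an array instead of logging, and it uses null for missing fields instead of throwing.

```javascript
// Hypothetical helper: extract all products on the current page in one
// browser round trip. Assumes the ScrapeMe selectors used in this tutorial.
async function scrapeProducts(page) {
  return page.$$eval(".woocommerce-LoopProduct-link", (links) =>
    // This callback runs inside the browser, so it can only use DOM APIs
    links.map((link) => ({
      name: link.querySelector(".woocommerce-loop-product__title")?.innerText.trim() ?? null,
      price: link.querySelector(".price")?.innerText.trim() ?? null,
    }))
  );
}
```

Inside the while loop, you could call const products = await scrapeProducts(page); and then log or store the array.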

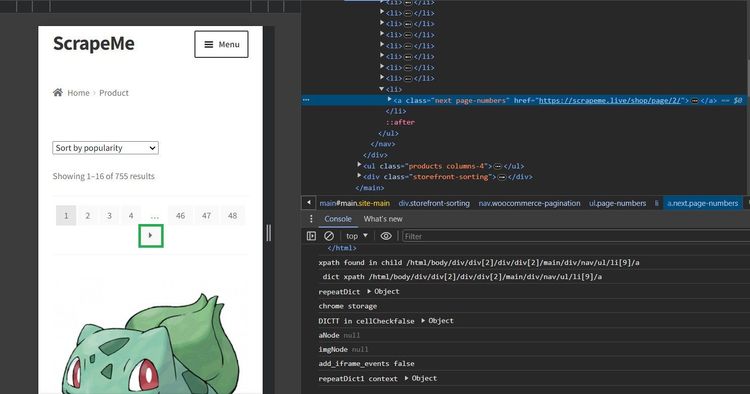

The above function doesn't go past the first page. You'll modify the code to click the next page arrow. See its element below:

The next code uses a while loop to click the next page arrow continuously until the element isn't in the DOM anymore:

(async () => {

// ...

while (true) {

try {

// execute the scraper function

await scraper();

// find and click the next page link

const nextPageLink = await page.$(".next.page-numbers");

if (nextPageLink) {

await nextPageLink.click({ timeout: 100000 });

await page.waitForTimeout(30000);

} else {

console.log("No more pages available");

break;

}

} catch (e) {

console.log("An error occurred:", e);

break;

}

}

// close the browser

await browser.close();

})();
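The while (true) loop above has no upper bound, so a site that always renders a next link would keep it running forever. Here's a minimal sketch of the same follow-the-next-link pattern with a page cap. The names followNextLinks, scrapePage, goToNextPage, and maxPages are hypothetical. The scraping and navigation steps are passed in as functions so the loop logic can be tested without a browser.

```javascript
// Hypothetical helper: scrape the current page, then follow the "next" link
// until it disappears or the maxPages safety cap is reached.
async function followNextLinks({ scrapePage, goToNextPage, maxPages = 100 }) {
  const results = [];
  for (let pageNumber = 1; pageNumber <= maxPages; pageNumber++) {
    // scrapePage() is expected to resolve to an array of items
    results.push(...(await scrapePage()));
    // goToNextPage() resolves to false when there is no next link left
    const moved = await goToNextPage();
    if (!moved) break;
  }
  return results;
}
```

With Playwright, scrapePage could wrap the scraper function above so that it returns its data instead of logging it. goToNextPage could look up .next.page-numbers and return false when the link is missing. Otherwise, it could read the link's href, click the link, and wait with page.waitForURL(href) before returning true (assuming the href is an absolute URL).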

See the final code below:

// import the required library

const { chromium } = require("playwright");

(async () => {

const browser = await chromium.launch({ timeout: 60000 });

const context = await browser.newContext();

const page = await context.newPage();

await page.goto("https://scrapeme.live/shop/", { timeout: 1000000 });

// define the scraper function

async function scraper() {

// find all product containers on the page

const productContainers = await page.$$(".woocommerce-LoopProduct-link");

for (const container of productContainers) {

// extract product name and price from each container

const productName = await container.$eval(".woocommerce-loop-product__title", element => element.innerText);

const price = await container.$eval(".price", element => element.innerText);

console.log("Name:", productName);

console.log("Price:", price);

}

}

while (true) {

try {

// execute the scraper function

await scraper();

// find and click the next page link

const nextPageLink = await page.$(".next.page-numbers");

if (nextPageLink) {

await nextPageLink.click({ timeout: 100000 });

await page.waitForTimeout(30000);

} else {

console.log("No more pages available");

break;

}

} catch (e) {

console.log("An error occurred:", e);

break;

}

}

// close the browser

await browser.close();

})();

This outputs the specified product data from all pages:

Name: Bulbasaur

Price: £63.00

Name: Ivysaur

Price: £87.00

#... 747 items omitted for brevity

Name: Stakataka

Price: £190.00

Name: Blacephalon

Price: £149.00

You just scraped a paginated website by following the next page link on its navigation bar. Brilliant! Let's wrap this section up with the other method.

Change the Page Number in the URL

The page number method implements pagination by increasing the page number continuously in the URL and scraping the pages as you go.

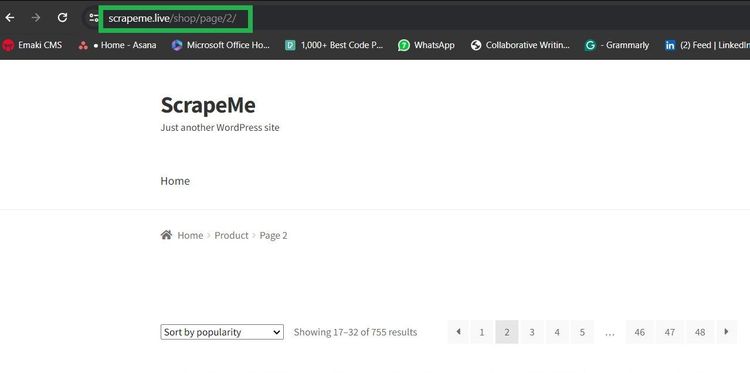

Observe how the target website formats its URL for the second page:

The URL format for page 2 is /page/2. This numbering continues until the last page. You'll simulate this in your code to navigate the paginated website and scrape from each page.
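Because the page number sits in a predictable spot, a small helper can generate the page URLs. Here's a minimal sketch. The names buildPageUrl and buildPageUrls are hypothetical, and the code assumes, as on ScrapeMe, that page 1 is the base listing URL and that later pages follow /page/<n>/.

```javascript
// Hypothetical helper: build the URL of a given page in a listing that
// paginates with /page/<n>/ (the WordPress/WooCommerce convention).
function buildPageUrl(baseUrl, pageNumber) {
  const base = baseUrl.endsWith("/") ? baseUrl : `${baseUrl}/`;
  // Page 1 is the listing itself, so it needs no /page/1/ segment
  return pageNumber <= 1 ? base : `${base}page/${pageNumber}/`;
}

// Build every URL from page 1 to lastPage, inclusive
function buildPageUrls(baseUrl, lastPage) {
  return Array.from({ length: lastPage }, (_, i) => buildPageUrl(baseUrl, i + 1));
}

// Logs the URLs for pages 1 to 3
console.log(buildPageUrls("https://scrapeme.live/shop", 3));
```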

First, spin up a browser instance and define a scraper function that loops through the product containers to extract product names and prices:

// import the required library

const { chromium } = require("playwright");

(async () => {

const browser = await chromium.launch({ timeout: 60000 });

const context = await browser.newContext();

const page = await context.newPage();

// define a scraper function

async function scraper(page) {

// find all product containers on the page

const productContainers = await page.$$(".woocommerce-LoopProduct-link");

for (const container of productContainers) {

// extract product name and price from each container

const productName = await container.$eval(".woocommerce-loop-product__title", element => element.innerText);

const price = await container.$eval(".price", element => element.innerText);

console.log("Name:", productName);

console.log("Price:", price);

}

}

// close the browser

await browser.close();

})();

Next, use a for loop to increment the page number in the URL until it reaches the last page, and run the scraper function after each navigation:

(async () => {

// ...

const lastPage = 48;

for (let page_count = 1; page_count <= lastPage; page_count++) {

await page.goto(`https://scrapeme.live/shop/page/${page_count}`, { timeout: 1000000 });

await scraper(page);

}

// close the browser

await browser.close();

})();
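The loop above relies on a hard-coded lastPage value, which becomes wrong if the site adds or removes pages. Here's a minimal sketch that reads the value from the navigation bar instead. findLastPage is a hypothetical helper that takes the text of every pagination item and returns the largest page number. On ScrapeMe, you might collect those labels with page.$$eval("a.page-numbers, span.page-numbers", (els) => els.map((el) => el.textContent)). This assumes those selectors match the pagination items. The tag prefixes are there to skip the list wrapper, which is assumed to carry the page-numbers class as well.

```javascript
// Hypothetical helper: return the highest page number among pagination labels.
// Non-numeric labels such as "…" or "→" are ignored.
function findLastPage(labels) {
  const pageNumbers = labels
    .map((label) => label.trim())
    .filter((label) => /^\d+$/.test(label))
    .map(Number);
  // Fall back to 1 when there's no pagination (a single page of results)
  return pageNumbers.length > 0 ? Math.max(...pageNumbers) : 1;
}

console.log(findLastPage(["1", "2", "3", "4", "…", "46", "47", "48", "→"])); // 48
```

You'd call it once after loading the first page, then use the result as the loop's upper bound.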

Now, put everything together:

// import the required library

const { chromium } = require("playwright");

(async () => {

const browser = await chromium.launch({ timeout: 60000 });

const context = await browser.newContext();

const page = await context.newPage();

// define a scraper function

async function scraper(page) {

// find all product containers on the page

const productContainers = await page.$$(".woocommerce-LoopProduct-link");

for (const container of productContainers) {

// extract product name and price from each container

const productName = await container.$eval(".woocommerce-loop-product__title", element => element.innerText);

const price = await container.$eval(".price", element => element.innerText);

console.log("Name:", productName);

console.log("Price:", price);

}

}

const lastPage = 48;

for (let page_count = 1; page_count <= lastPage; page_count++) {

await page.goto(`https://scrapeme.live/shop/page/${page_count}`, { timeout: 1000000 });

await scraper(page);

}

// close the browser

await browser.close();

})();

As expected, the code visits each page iteratively and extracts the required information from all 48 pages:

Name: Bulbasaur

Price: £63.00

Name: Ivysaur

Price: £87.00

#... 747 items omitted for brevity

Name: Stakataka

Price: £190.00

Name: Blacephalon

Price: £149.00

You now know the two methods of scraping a paginated website with a navigation bar. That's cool!

What if the website loads content dynamically with JavaScript? You'll see how to handle that in the next section.

Scrape With JavaScript-Based Pagination

Some websites load more content as you scroll instead of using the visible navigation bar. This might involve automatic infinite scrolling or require a user to click a button to load more content.

Playwright has no problem scraping such dynamic websites because it drives a real browser that executes JavaScript. You'll see how to handle both scenarios while scraping.

Infinite Scroll to Load More Content

Infinite scroll requires scrolling down a web page continuously to show more content. It’s common with social sites and e-commerce websites.

You'll scrape product information from ScrapingClub, a website that uses infinite scrolling. See how it loads content in the demo below:

First, extract the website's HTML content without scrolling:

const { chromium } = require("playwright");

(async () => {

// start the browser instance

const browser = await chromium.launch({ timeout: 60000 });

const context = await browser.newContext();

const page = await context.newPage();

// launch the target website

await page.goto(

"https://scrapingclub.com/exercise/list_infinite_scroll/",

{ timeout: 1000000 }

);

// get the HTML content of the page

const html = await page.content();

console.log(html);

// close the browser

await browser.close();

})();

That only gets the first 10 products:

<!-- ... -->

<div class="p-4">

<h4>

<a href="/exercise/list_basic_detail/90008-E/">Short Dress</a>

</h4>

<h5>$24.99</h5>

</div>

<!-- 8 products omitted for brevity -->

<div class="p-4">

<h4>

<a href="/exercise/list_basic_detail/94766-A/">Fitted Dress</a>

</h4>

<h5>$34.99</h5>

</div>

<!-- ... -->

Inspect the page, and you'll see that each product is in a div tag with the post class:

Now extend the previous code with scrolling. To begin, define a scraper function that extracts each product's name and price from its container. You'll call this function after simulating the infinite scroll:

// import the required library

const { chromium } = require("playwright");

async function scraper(page) {

// extract the product container

const products = await page.$$(".post");

// loop through the product container to extract names and prices

for (const product of products) {

const name = await product.$eval("h4 a", element => element.innerText);

const price = await product.$eval("h5", element => element.innerText);

console.log(`Name: ${name}`);

console.log(`Price: ${price}`);

}

}

Next, start a new browser instance and visit the target web page:

(async () => {

// start a browser instance

const browser = await chromium.launch({ timeout: 60000 });

const context = await browser.newContext();

const page = await context.newPage();

// open the target page

await page.goto(

"https://scrapingclub.com/exercise/list_infinite_scroll/",

{timeout: 60000}

);

// close the browser

await browser.close();

})();

Insert the scrolling logic inside a while loop that continuously scrolls to the bottom of the page and waits for content to load before repeating the scroll.

The loop stops once the page height no longer changes after a scroll, which indicates that no more content is loading. It then runs the scraper function to extract all the loaded content:

(async () => {

// ...

let lastHeight = 0;

while (true) {

// scroll down to bottom

await page.evaluate("window.scrollTo(0, document.body.scrollHeight);");

// wait for the page to load

await page.waitForTimeout(2000);

// get the new height and compare with last height

const newHeight = await page.evaluate("document.body.scrollHeight");

if (newHeight === lastHeight) {

// extract data once all content has loaded

await scraper(page);

break;

}

lastHeight = newHeight;

}

// close the browser

await browser.close();

})();
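On a feed that never runs out of content, the height check alone would keep the while (true) loop above scrolling indefinitely. Here's a minimal sketch of the same height comparison with a cap on the number of scroll rounds. scrollUntilStable and its parameter names are hypothetical. The browser calls are passed in as functions so the logic can be tested without Playwright.

```javascript
// Hypothetical helper: keep scrolling until the page height stops changing
// or maxRounds is reached. Reports how many rounds ran and whether the end
// of the content was reached.
async function scrollUntilStable({ scrollToBottom, waitForContent, getHeight, maxRounds = 50 }) {
  let lastHeight = await getHeight();
  for (let round = 1; round <= maxRounds; round++) {
    await scrollToBottom();
    await waitForContent();
    const newHeight = await getHeight();
    // An unchanged height means the last scroll loaded nothing new
    if (newHeight === lastHeight) return { rounds: round, reachedEnd: true };
    lastHeight = newHeight;
  }
  return { rounds: maxRounds, reachedEnd: false };
}
```

With Playwright, you could pass scrollToBottom: () => page.evaluate("window.scrollTo(0, document.body.scrollHeight)"), waitForContent: () => page.waitForTimeout(2000), and getHeight: () => page.evaluate("document.body.scrollHeight"). Once the returned promise resolves, you'd call scraper(page).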

Your final code should look like this:

// import the required library

const { chromium } = require("playwright");

async function scraper(page) {

// extract the product container

const products = await page.$$(".post");

// loop through the product container to extract names and prices

for (const product of products) {

const name = await product.$eval("h4 a", element => element.innerText);

const price = await product.$eval("h5", element => element.innerText);

console.log(`Name: ${name}`);

console.log(`Price: ${price}`);

}

}

(async () => {

// start a browser instance

const browser = await chromium.launch({ timeout: 60000 });

const context = await browser.newContext();

const page = await context.newPage();

// open the target page

await page.goto(

"https://scrapingclub.com/exercise/list_infinite_scroll/",

{ timeout: 60000 }

);

let lastHeight = 0;

while (true) {

// scroll down to bottom

await page.evaluate("window.scrollTo(0, document.body.scrollHeight);");

// wait for the page to load

await page.waitForTimeout(2000);

// get the new height and compare with last height

const newHeight = await page.evaluate("document.body.scrollHeight");

if (newHeight === lastHeight) {

// extract data once all content has loaded

await scraper(page);

break;

}

lastHeight = newHeight;

}

// close the browser

await browser.close();

})();

The code scrolls through the page and extracts all its content:

Name: Short Dress

Price: $24.99

# ... 58 products omitted for brevity

Name: Blazer

Price: $49.99

Your code works, and you now know how to scrape a website that loads content dynamically with infinite scroll. Next, you'll learn how to handle the "Load more" button.

Click on a Button to Load More Content

Some websites display a "Load more" button that you must click to load more content as you scroll down.

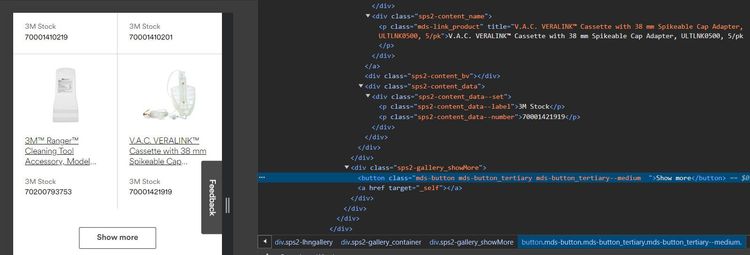

Here, you'll simulate that action and scrape product data using 3D Medicals as a demo website. See how the website loads more content:

Let's inspect the website's elements before extracting its content:

Each product is inside a div tag with the sps2-content class, and the "Show more" control is a button.

Now define the scraping logic in a scraper function that loops through the product containers and extracts each product's name and ID:

// import the required library

const { chromium } = require("playwright");

// define the scraper function

async function scraper(page) {

const productContainers = await page.$$(".sps2-content");

for (const product of productContainers) {

const name = await product.$eval(".sps2-content_name", element => element.innerText);

const itemId = await product.$eval(".sps2-content_data--number", element => element.innerText);

console.log(`Name: ${name}`);

console.log(`Product ID: ${itemId}`);

}

}

Set up the browser instance inside an anonymous function, visit the target website, and wait for the product containers to load. The code runs the browser in headed mode (headless: false) because of how this particular target website behaves. The default headless mode might work for other websites.

(async () => {

// launch the browser instance

const browser = await chromium.launch({ headless: false, timeout: 60000 });

const context = await browser.newContext({ viewport: { width: 1920, height: 1080 } });

const page = await context.newPage();

// visit the target website

await page.goto("https://www.3m.com.au/3M/en_AU/p/c/medical/", { timeout: 1000000 });

// wait for the product containers

await page.waitForSelector(".sps2-content");

// close the browser

await browser.close();

})();

Define a function that scrolls to the bottom of the page to make the "Load more" button visible:

(async () => {

// ...

// define a scroll function

async function scroll_to_bottom() {

await page.evaluate("window.scrollTo(0, document.body.scrollHeight);");

await page.waitForTimeout(5000);

}

await browser.close();

})();

Finally, execute the scroll function five times in a for loop and click the "Load more" button after each scroll. Then, call the scraper function once all the content has loaded. You can increase the scroll count if you like:

(async () => {

// ...

const scrollCount = 5;

for (let i = 0; i < scrollCount; i++) {

await scroll_to_bottom();

try {

await page.click(".mds-button_tertiary--medium");

} catch (error) {

break;

}

}

// give the last batch of products time to load

await page.waitForTimeout(5000);

try {

await scraper(page);

} catch (error) {

console.log(`An error occurred: ${error}`);

}

await browser.close();

})();
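In the loop above, a missing button only ends the loop after page.click() times out. Here's a minimal sketch that checks for the button first, stops as soon as the button is gone, and caps the number of clicks. clickLoadMore and its parameter names are hypothetical. With Playwright, isButtonVisible could be () => page.locator(".mds-button_tertiary--medium").isVisible(), which returns immediately instead of waiting for the element.

```javascript
// Hypothetical helper: click a "load more" control until it disappears
// or maxClicks is reached. Returns how many times it clicked.
async function clickLoadMore({ isButtonVisible, clickButton, waitForContent, maxClicks = 20 }) {
  let clicks = 0;
  while (clicks < maxClicks && (await isButtonVisible())) {
    await clickButton();
    // Give the newly requested products time to render before checking again
    await waitForContent();
    clicks++;
  }
  return clicks;
}
```

Because waitForContent runs after every click, including the last one, the final batch has time to load before you call scraper(page).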

See the final code below:

// import the required library

const { chromium } = require("playwright");

// define the scraper function

async function scraper(page) {

const productContainers = await page.$$(".sps2-content");

for (const product of productContainers) {

const name = await product.$eval(".sps2-content_name", element => element.innerText);

const itemId = await product.$eval(".sps2-content_data--number", element => element.innerText);

console.log(`Name: ${name}`);

console.log(`Product ID: ${itemId}`);

}

}

(async () => {

const browser = await chromium.launch({ headless: false, timeout: 60000 });

const context = await browser.newContext({ viewport: { width: 1920, height: 1080 } });

const page = await context.newPage();

// visit the target website

await page.goto("https://www.3m.com.au/3M/en_AU/p/c/medical/", { timeout: 1000000 });

// wait for the product containers

await page.waitForSelector(".sps2-content");

// define a scroll function

async function scroll_to_bottom() {

await page.evaluate("window.scrollTo(0, document.body.scrollHeight);");

await page.waitForTimeout(5000);

}

const scrollCount = 5;

for (let i = 0; i < scrollCount; i++) {

await scroll_to_bottom();

try {

await page.click(".mds-button_tertiary--medium");

} catch (error) {

break;

}

}

// give the last batch of products time to load

await page.waitForTimeout(5000);

try {

await scraper(page);

} catch (error) {

console.log(`An error occurred: ${error}`);

}

await browser.close();

})();

This extracts the product names and IDs from 5 consecutive scrolls:

Name: ACTIV.A.C.™ Sterile Canister with Gel, M8275059/5, 5/cs

Product ID: 70001413270

# ... other products omitted for brevity

Name: 3M™ Tegaderm™ Silicone Foam Dressing, 90632, Non-Bordered, 15 cm x 15 cm, 10/ct, 4ct/Case

Product ID: 70201176958

You just scraped dynamic data by clicking a button to load more content. That's awesome! However, many websites use anti-bot measures, so you also need to know how to avoid getting blocked while scraping.

Avoid Getting Blocked With Playwright

Scraping many pages with Playwright can get you blocked, and on well-protected websites, even a single request may be enough to flag you as a bot. You'll need to bypass these protections to scrape the data you want.

For instance, try accessing a protected page, such as Capterra's Notion reviews page, with the following code:

const { chromium } = require("playwright");

(async () => {

// start the browser instance

const browser = await chromium.launch({ timeout: 60000 });

const context = await browser.newContext();

const page = await context.newPage();

// launch the target website

await page.goto(

"https://www.capterra.com/p/186596/Notion/reviews/",

{ timeout: 1000000 }

);

// get the HTML content of the page

const html = await page.content();

console.log(html); // Print the HTML content

// close the browser

await browser.close();

})();

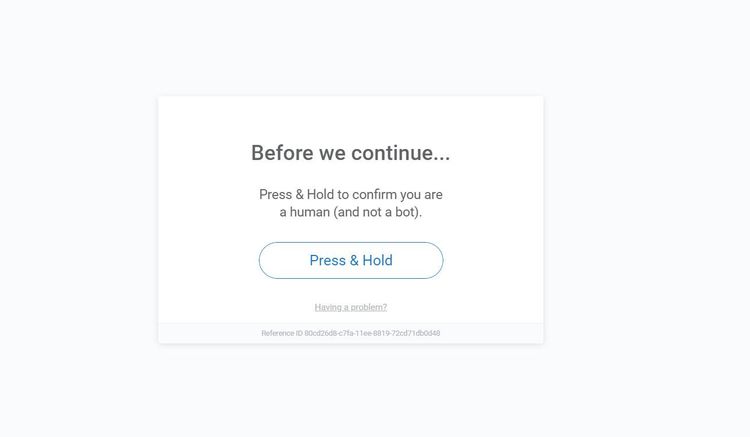

It returns an HTML page titled "Access to this page has been denied" with a CAPTCHA image, indicating that the target website has blocked the request:

<!DOCTYPE html>

<html lang="en">

<head>

<title>Access to this page has been denied</title>

</head>

<body>

<!-- ... -->

<div class="px-captcha-error">

<img class="px-captcha-error" src="data:image/png;base64,iVBORw0KG..." />

</div>

<!-- ... -->

</body>

</html>
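A blocked request still loads a page, so your scraper can check the returned HTML for these markers and detect the block instead of parsing the challenge page. Here's a minimal sketch. isBlockedPage is a hypothetical helper, and the markers it checks are only the two visible in the output above: the "Access to this page has been denied" title and the px-captcha-error class. Other anti-bot systems will need their own checks.

```javascript
// Hypothetical helper: heuristically detect the block page shown above.
// Only checks the two markers from that output; other sites differ.
function isBlockedPage(html) {
  const title = html.match(/<title>([^<]*)<\/title>/i)?.[1]?.trim() ?? "";
  return title === "Access to this page has been denied" || html.includes("px-captcha-error");
}

const blockedHtml = `<html><head><title>Access to this page has been denied</title></head>
<body><div class="px-captcha-error"></div></body></html>`;

console.log(isBlockedPage(blockedHtml)); // true
console.log(isBlockedPage("<html><head><title>Products</title></head></html>")); // false
```

In the Capterra example, you could call it on the html variable and stop, or retry through a proxy, when it returns true.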

Here's the blocking CAPTCHA you see when you open the page in headed mode (headless: false):

Configuring proxies in Playwright masks your IP address, and rotating the Playwright user agent helps your requests look like they come from different real users. However, these measures alone often aren't enough to evade advanced anti-bot systems.
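As a minimal sketch of that rotation, the function below picks a proxy and a user agent for each new browser session in round-robin order. The proxy servers, credentials, and user agent strings are placeholders, not working values, and sessionOptions is a hypothetical name. The option shapes themselves come from Playwright: proxy is an option of chromium.launch(), and userAgent is an option of browser.newContext().

```javascript
// Placeholder pools: replace with your own proxy endpoints and user agents.
const PROXIES = [
  { server: "http://proxy-1.example.com:8080", username: "user", password: "pass" },
  { server: "http://proxy-2.example.com:8080", username: "user", password: "pass" },
];

const USER_AGENTS = [
  "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
  "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
  "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
];

// Hypothetical helper: options for the n-th session (n = 0, 1, 2, ...).
// The pools have different lengths, so the proxy/user agent pairs vary too.
function sessionOptions(n) {
  return {
    launchOptions: { proxy: PROXIES[n % PROXIES.length] },
    contextOptions: { userAgent: USER_AGENTS[n % USER_AGENTS.length] },
  };
}
```

For each session, you'd pass launchOptions to chromium.launch() and contextOptions to browser.newContext(). Ideally, the user agents should match the browser version you actually run, because a mismatch is itself a detectable signal.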

A reliable way to bypass anti-bot systems is to use a web scraping API like ZenRows. It provides premium proxies, helps you optimize your request headers, and lets you scrape any website without getting blocked.

You can easily integrate ZenRows with your Playwright scraper to evade blocks. Let's modify the previous code with ZenRows to see how it works.

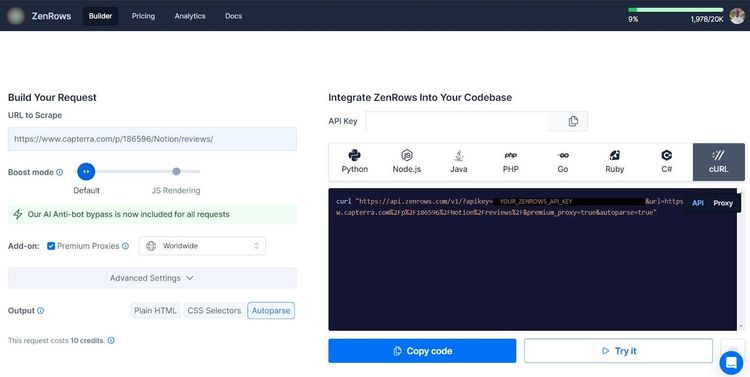

Sign up on ZenRows, and you'll get to the request builder. Next, click "Premium Proxies", select "Autoparse", and choose "cURL" as your request type.

Copy the API URL from the generated cURL command and use it in your code like so:

const { chromium } = require("playwright");

(async () => {

// start the browser instance

const browser = await chromium.launch({ timeout: 60000 });

const context = await browser.newContext();

const page = await context.newPage();

const url = "https://www.capterra.com/p/186596/Notion/reviews/";

// reformat the generated cURL

const formatted_url = (

`https://api.zenrows.com/v1/?` +

`apikey=<YOUR_ZENROWS_API_KEY>&` +

`url=${encodeURIComponent(url)}&` +

`premium_proxy=true&` +

`autoparse=true`

);

// load the target website through the ZenRows API

const response = await page.goto(

formatted_url,

{ timeout: 1000000 }

);

// autoparse returns JSON, so read the raw response body instead of the rendered HTML

const result = await response.text();

console.log(result);

// close the browser

await browser.close();

})();

The code bypasses the CAPTCHA and scrapes the website, as shown:

[

{

"reviewBody": "Notion is the one stop shop that will transform the way you work. ",

"reviewRating": {

"@type": "Rating",

"bestRating": 5,

"ratingValue": 5,

"worstRating": 1

}

},

# other data omitted for brevity

{

"reviewBody": " A Game-Changer in Digital Experience - My Notion Experience",

"reviewRating": {

"@type": "Rating",

"bestRating": 5,

"ratingValue": 5,

"worstRating": 1

}

}

]
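Building the API URL by string concatenation works, but URLSearchParams can handle the encoding of every parameter for you. Here's a minimal sketch. buildZenRowsUrl is a hypothetical helper, and the parameter names (apikey, url, premium_proxy, autoparse) are the ones from the ZenRows code above.

```javascript
// Hypothetical helper: build a ZenRows API URL for a target page.
// URLSearchParams percent-encodes each value, including the target URL.
function buildZenRowsUrl(apiKey, targetUrl, options = {}) {
  const params = new URLSearchParams({ apikey: apiKey, url: targetUrl });
  for (const [name, value] of Object.entries(options)) {
    params.set(name, String(value));
  }
  return `https://api.zenrows.com/v1/?${params}`;
}

const formattedUrl = buildZenRowsUrl(
  "<YOUR_ZENROWS_API_KEY>",
  "https://www.capterra.com/p/186596/Notion/reviews/",
  { premium_proxy: true, autoparse: true }
);
console.log(formattedUrl);
```

You could then pass formattedUrl to page.goto() in place of the hand-built string.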

Congratulations! You just scraped a protected website by integrating ZenRows with Playwright.

You can even replace Playwright with ZenRows entirely, since it offers headless browser features such as JavaScript instructions alongside its anti-bot bypass capabilities.

Conclusion

In this article, you've seen different techniques for scraping all kinds of paginated websites with Playwright in JavaScript. Here’s a recap of what you’ve learned:

- How to extract content from multiple pages by following the next page link.

- How to change the page number in the URL and continuously collect data from each page.

- How to implement infinite scrolling to scrape JavaScript-loaded content.

- How to scrape dynamic data hidden behind a "Load more" button.

Feel free to practice all you've learned. Remember that many websites will use anti-bot systems to block your scraper. Bypass all blocks with ZenRows and scrape any website without limitations. Try ZenRows for free!
