Does your Playwright scraping project require extracting data from a page with infinite scrolling? We've got your back!

In this tutorial, you'll learn how to solve the challenge of continuously scrolling a website to scrape all its data using Playwright in Python.

How to Scrape Infinite Scrolling Content With Playwright

Web pages that use infinite scrolling automatically load more content as the user scrolls down a page. Some also require the user to click a "load more" button at a specific scroll height to view more content.

You'll learn how to deal with each scenario in this section.

Method 1: Scroll to the Bottom

Scrolling to the bottom of a page works for websites that automatically load more content as you scroll vertically.

To see how this method works, you'll scrape product names, prices, and image URLs from the ScrapingCourse Infinite Scrolling playground.

In this example, you'll scroll the page and extract data continuously until there is no more content.

First, import the required library and define a scraper function that accepts a page as an argument. Open a try block, extract the product containers, and create an empty list to collect the extracted data:

# import the required libraries
from playwright.sync_api import sync_playwright

# define a scraper function
def scraper(page):
    try:
        # extract the product containers
        products = page.query_selector_all(".flex.flex-col.items-center.rounded-lg")

        # create an empty list to write the extracted data
        data_list = []

Iterate through the product containers using a for loop and collect each product's information in a dictionary. Then, append the extracted data to the data list, print the extracted data, and close the try block:

# define a scraper function
def scraper(page):
    try:
        # ...

        # loop through the product containers to extract names and prices
        for product in products:
            # create a dictionary to collect each product container
            data = {}

            # extract the product name by pointing to the first span element
            name_element = product.query_selector(".self-start.text-left.w-full > span:first-child")
            if name_element:
                data["name"] = name_element.inner_text()

            # extract the price
            price_element = product.query_selector(".text-slate-600")
            if price_element:
                data["price"] = price_element.inner_text()

            # extract the image source
            image_source = product.query_selector("img")
            if image_source:
                data["image"] = image_source.get_attribute("src")

            # append the extracted data
            data_list.append(data)

        # output the result data
        print(data_list)

    except Exception as e:
        print(f"Error occurred: {e}")
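The three extraction steps repeat the same pattern: query a selector, then read a value only if the element exists. One possible way to factor that out is sketched below. The helper names (`text_or_none`, `attribute_or_none`, `extract_product`) are hypothetical and not part of Playwright; they only assume the element exposes the `query_selector`, `inner_text`, and `get_attribute` methods already used in the scraper. Unlike the scraper above, this sketch stores `None` for a missing field instead of omitting the key.

```python
# Hypothetical helpers; the selectors are the ones used in this tutorial.
NAME_SELECTOR = ".self-start.text-left.w-full > span:first-child"
PRICE_SELECTOR = ".text-slate-600"


def text_or_none(container, selector):
    """Return the inner text of the first match inside container, or None."""
    element = container.query_selector(selector)
    return element.inner_text() if element else None


def attribute_or_none(container, selector, name):
    """Return an attribute of the first match inside container, or None."""
    element = container.query_selector(selector)
    return element.get_attribute(name) if element else None


def extract_product(product):
    """Collect the name, price, and image URL of one product container."""
    return {
        "name": text_or_none(product, NAME_SELECTOR),
        "price": text_or_none(product, PRICE_SELECTOR),
        "image": attribute_or_none(product, "img", "src"),
    }
```

Inside the scraper, the loop body could then shrink to `data_list.append(extract_product(product))`.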

The next step is to implement the scrolling effect.

Start Playwright in synchronous mode, launch a browser instance, and open the target web page. Then, set an initial scroll height:

with sync_playwright() as p:
    # start a browser instance
    browser = p.chromium.launch(headless=False)
    page = browser.new_page()

    # open the target page
    page.goto("https://scrapingcourse.com/infinite-scrolling")

    # set the initial height
    last_height = 0

Open a while loop and scroll to the bottom of the page with JavaScript evaluation. Wait for the content to load and get the value of the new height:

with sync_playwright() as p:
    # ...

    while True:
        # scroll to bottom
        page.evaluate("window.scrollTo(0, document.body.scrollHeight);")

        # wait for the page to load
        page.wait_for_timeout(5000)

        # get the new height value
        new_height = page.evaluate("document.body.scrollHeight")

Compare the new scroll height with the previous one. If the height has stopped changing, all the content has loaded, so execute the scraper function and break the loop. Otherwise, update the previous height to the new scroll height and keep scrolling. Finally, close the browser:

with sync_playwright() as p:
    # ...

    while True:
        # ...

        # break the loop if there are no more heights to scroll
        if new_height == last_height:
            # extract data once all content has loaded
            scraper(page)
            break

        # update the initial height to the new height
        last_height = new_height

    # close the browser
    browser.close()

Combine all the snippets. Your final code should look like this:

# import the required libraries
from playwright.sync_api import sync_playwright

# define a scraper function
def scraper(page):
    try:
        # extract the product containers
        products = page.query_selector_all(".flex.flex-col.items-center.rounded-lg")

        # create an empty list to write the extracted data
        data_list = []

        # loop through the product containers to extract names and prices
        for product in products:
            # create a dictionary to collect each product container
            data = {}

            # extract the product name by pointing to the first span element
            name_element = product.query_selector(".self-start.text-left.w-full > span:first-child")
            if name_element:
                data["name"] = name_element.inner_text()

            # extract the price
            price_element = product.query_selector(".text-slate-600")
            if price_element:
                data["price"] = price_element.inner_text()

            # extract the image source
            image_source = product.query_selector("img")
            if image_source:
                data["image"] = image_source.get_attribute("src")

            # append the extracted data
            data_list.append(data)

        # output the result data
        print(data_list)

    except Exception as e:
        print(f"Error occurred: {e}")

with sync_playwright() as p:
    # start a browser instance
    browser = p.chromium.launch(headless=False)
    page = browser.new_page()

    # open the target page
    page.goto("https://scrapingcourse.com/infinite-scrolling")

    # set the initial height
    last_height = 0

    while True:
        # scroll to bottom
        page.evaluate("window.scrollTo(0, document.body.scrollHeight);")

        # wait for the page to load
        page.wait_for_timeout(5000)

        # get the new height value
        new_height = page.evaluate("document.body.scrollHeight")

        # break the loop if there are no more heights to scroll
        if new_height == last_height:
            # extract data once all content has loaded
            scraper(page)
            break

        # update the initial height to the new height
        last_height = new_height

    # close the browser
    browser.close()

The code scrolls the page and outputs the names, prices, and image URLs from all products:

[
    {
        'name': 'Chaz Kangeroo Hoodie',
        'price': '$52',
        'image': 'https://scrapingcourse.com/ecommerce/wp-content/uploads/2024/03/mh01-gray_main.jpg'
    },
    # ... other products omitted for brevity
    {
        'name': 'Breathe-Easy Tank',
        'price': '$34',
        'image': 'https://scrapingcourse.com/ecommerce/wp-content/uploads/2024/03/wt09-white_main.jpg'
    }
]

You’ve just scraped dynamic content by scrolling continuously through a web page using Playwright in Python. Good job!
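The `while True` loop above relies on the page height eventually settling. As a hedged variation, the sketch below wraps the same scroll, wait, and compare steps in a function with an upper bound on the number of rounds, so a page that never stops growing cannot hang the scraper. The function name `scroll_until_stable` and the parameters `pause_ms` and `max_rounds` are assumptions for illustration; the function only calls `page.evaluate` and `page.wait_for_timeout`, as the tutorial code does.

```python
def scroll_until_stable(page, pause_ms=5000, max_rounds=50):
    """Scroll to the bottom until the page height stops growing.

    Returns the number of scrolls that loaded new content.
    Stops after max_rounds scrolls even if the page keeps growing.
    """
    last_height = page.evaluate("document.body.scrollHeight")
    loaded_rounds = 0

    for _ in range(max_rounds):
        # scroll to the bottom and give the page time to load more items
        page.evaluate("window.scrollTo(0, document.body.scrollHeight);")
        page.wait_for_timeout(pause_ms)

        # stop once the height no longer changes
        new_height = page.evaluate("document.body.scrollHeight")
        if new_height == last_height:
            break

        last_height = new_height
        loaded_rounds += 1

    return loaded_rounds
```

With this helper, the main block could call `scroll_until_stable(page)` followed by `scraper(page)` in place of the manual loop.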

In the next section, you'll see how to handle a web page with a "load more" button.

Method 2: Click on a Button to Load More Content

Websites with the "load more" button require continuous clicking to load more content as you scroll vertically.

In this example, let's scrape product information from the ScrapingCourse Load More playground.

The target web page loads more content each time you click the button.

First, define a scraper function that gets the product containers and loops through them to extract the target data:

# import the required libraries
from playwright.sync_api import sync_playwright

def scraper(page):
    try:
        # extract the product containers
        products = page.query_selector_all(".flex.flex-col.items-center.rounded-lg")

        # create an empty list to write extracted data
        data_list = []

        # loop through the product containers to extract names and prices
        for product in products:
            # create a dictionary to collect each product container
            data = {}

            # extract the product name by pointing to the first span element
            name_element = product.query_selector(".self-start.text-left.w-full > span:first-child")
            if name_element:
                data["name"] = name_element.inner_text()

            # extract price
            price_element = product.query_selector(".text-slate-600")
            if price_element:
                data["price"] = price_element.inner_text()

            # extract the image source
            image_source = product.query_selector("img")
            if image_source:
                data["image"] = image_source.get_attribute("src")

            # append the extracted product information to a list
            data_list.append(data)

        # output the result data
        print(data_list)

    except Exception as e:
        print(f"Error occurred: {e}")

Start a browser instance and navigate to the target page.

with sync_playwright() as p:
    # start a browser instance
    browser = p.chromium.launch()
    page = browser.new_page()

    # visit the target website
    page.goto("https://scrapingcourse.com/button-click", timeout=100000)

Scroll to the bottom of the page and continuously click the "load more" button in a while loop. Check if the button is in the DOM, and break the loop if not. Wait for the page to load after clicking the button. Then, execute the scraper function to extract product information once all the products are visible.

with sync_playwright() as p:
    # ...

    # keep scrolling and clicking the load more button
    while True:
        # select the load more button and break the loop if not in the DOM anymore
        load_more_button = page.query_selector("#load-more-btn")
        if not load_more_button or not load_more_button.is_visible():
            # exit the loop if the button is not available or not visible
            break

        # scroll to the bottom of the page
        page.evaluate("window.scrollTo(0, document.body.scrollHeight);")

        # click the load more button after scrolling to the bottom
        page.click("#load-more-btn")

        # wait for the products to load
        page.wait_for_timeout(5000)

    # extract data once all content has loaded
    scraper(page)

    # close the browser
    browser.close()

You should have the following complete code after merging all snippets:

# import the required libraries
from playwright.sync_api import sync_playwright

def scraper(page):
    try:
        # extract the product containers
        products = page.query_selector_all(".flex.flex-col.items-center.rounded-lg")

        # create an empty list to write extracted data
        data_list = []

        # loop through the product containers to extract names and prices
        for product in products:
            # create a dictionary to collect each product container
            data = {}

            # extract the product name by pointing to the first span element
            name_element = product.query_selector(".self-start.text-left.w-full > span:first-child")
            if name_element:
                data["name"] = name_element.inner_text()

            # extract price
            price_element = product.query_selector(".text-slate-600")
            if price_element:
                data["price"] = price_element.inner_text()

            # extract the image source
            image_source = product.query_selector("img")
            if image_source:
                data["image"] = image_source.get_attribute("src")

            # append the extracted product information to a list
            data_list.append(data)

        # output the result data
        print(data_list)

    except Exception as e:
        print(f"Error occurred: {e}")

with sync_playwright() as p:
    # start a browser instance
    browser = p.chromium.launch()
    page = browser.new_page()

    # visit the target website
    page.goto("https://scrapingcourse.com/button-click", timeout=100000)

    # keep scrolling and clicking the load more button
    while True:
        # select the load more button and break the loop if not in the DOM anymore
        load_more_button = page.query_selector("#load-more-btn")
        if not load_more_button or not load_more_button.is_visible():
            # exit the loop if the button is not available or not visible
            break

        # scroll to the bottom of the page
        page.evaluate("window.scrollTo(0, document.body.scrollHeight);")

        # click the load more button after scrolling to the bottom
        page.click("#load-more-btn")

        # wait for the products to load
        page.wait_for_timeout(5000)

    # extract data once all content has loaded
    scraper(page)

    # close the browser
    browser.close()


The code above clicks the "load more" button continuously and extracts the names, prices, and image URLs of all products:

[
    {
        'name': 'Chaz Kangeroo Hoodie',
        'price': '$52',
        'image': 'https://scrapingcourse.com/ecommerce/wp-content/uploads/2024/03/mh01-gray_main.jpg'
    },
    # ... other products omitted for brevity
    {
        'name': 'Breathe-Easy Tank',
        'price': '$34',
        'image': 'https://scrapingcourse.com/ecommerce/wp-content/uploads/2024/03/wt09-white_main.jpg'
    }
]
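The click loop in the code above ends only when the button disappears or becomes hidden. As a rough variation, the sketch below adds two safeguards: a cap on the number of clicks and a check that each click actually added items. The function name `click_until_gone` and its parameters are hypothetical. It uses only `query_selector`, `query_selector_all`, `is_visible`, `click`, and `wait_for_timeout`, and it skips the explicit scroll on the assumption that Playwright's `click` scrolls the element into view before clicking.

```python
def click_until_gone(page, button_selector, item_selector, pause_ms=5000, max_clicks=100):
    """Click a "load more" button until it disappears or stops adding items.

    Returns the number of clicks performed.
    """
    clicks = 0

    while clicks < max_clicks:
        # stop when the button is missing or hidden
        button = page.query_selector(button_selector)
        if not button or not button.is_visible():
            break

        # count the items, click, and wait for new items to load
        items_before = len(page.query_selector_all(item_selector))
        button.click()
        clicks += 1
        page.wait_for_timeout(pause_ms)

        # stop when the click added nothing (the page has stalled)
        items_after = len(page.query_selector_all(item_selector))
        if items_after == items_before:
            break

    return clicks
```

For the page in this tutorial, a call might look like `click_until_gone(page, "#load-more-btn", ".flex.flex-col.items-center.rounded-lg")`, followed by `scraper(page)`.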

Congratulations! Your scraper now extracts content hidden behind a "load more" button. However, anti-bots can block you from getting the data you want. How can you deal with them?

How to Avoid Getting Blocked When Scraping With Playwright?

The solution above works if you want to scrape one page. But if you’re using Playwright to scrape at scale or interact with infinite scroll pages, you put yourself at risk of getting blocked by anti-bot systems. You need to bypass them to scrape without limitations.

For example, Playwright will likely get blocked while scraping a protected page like G2 Reviews. Try it out with the following code:

# import the required library
from playwright.sync_api import sync_playwright

# initialize Playwright in synchronous mode
with sync_playwright() as p:
    # start a browser instance
    browser = p.chromium.launch()
    page = browser.new_page()

    # navigate to the target web page
    page.goto(
        "https://www.g2.com/products/asana/reviews",
        timeout=3000
    )

    # print the page source
    print(page.content())

    # close the browser
    browser.close()

The code outputs the following HTML, showing that Playwright got blocked by Cloudflare Turnstile:

<!DOCTYPE html>
<html lang="en">
<head>
    <meta charset="UTF-8">
    <meta name="viewport" content="width=device-width, initial-scale=1.0">
    <!-- ... -->
    <title>Attention Required! | Cloudflare</title>
</head>
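A scraper can check for this kind of block page before trying to parse the response. The sketch below is a simple heuristic, not a reliable detector: it reads the `<title>` of the HTML returned by `page.content()` and compares it against a short list of phrases. The function names and the marker list are assumptions for illustration; only "Attention Required" comes from the output above, and real block pages vary by site and anti-bot vendor.

```python
import re

# Assumed title fragments that often indicate a block or challenge page.
BLOCK_MARKERS = ("attention required", "just a moment", "access denied")


def page_title(html):
    """Return the text of the first <title> tag, or an empty string."""
    match = re.search(r"<title[^>]*>(.*?)</title>", html, re.IGNORECASE | re.DOTALL)
    return match.group(1).strip() if match else ""


def looks_blocked(html):
    """Return True if the page title contains a known block marker."""
    title = page_title(html).lower()
    return any(marker in title for marker in BLOCK_MARKERS)
```

In a scraper, `looks_blocked(page.content())` could decide whether to retry, switch configuration, or skip the page.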

How to avoid detection? You can try:

- Configuring Playwright proxies to mask your IP address.

- Customizing the user agent for Playwright to mimic a real browser.

Check out the links above for detailed guides on how to do it!
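As a rough illustration of both options, the sketch below builds the keyword arguments that Playwright accepts for a proxy (the `proxy` option of `launch`) and a custom user agent (the `user_agent` option of `new_context`). The function name `build_browser_options` and its parameters are hypothetical, and the proxy address and user agent string you pass in are your own values.

```python
def build_browser_options(proxy_server=None, proxy_username=None,
                          proxy_password=None, user_agent=None):
    """Return (launch_kwargs, context_kwargs) for a Playwright browser."""
    launch_kwargs = {}
    context_kwargs = {}

    if proxy_server:
        # Playwright expects a dict with "server" and optional credentials
        proxy = {"server": proxy_server}
        if proxy_username and proxy_password:
            proxy["username"] = proxy_username
            proxy["password"] = proxy_password
        launch_kwargs["proxy"] = proxy

    if user_agent:
        context_kwargs["user_agent"] = user_agent

    return launch_kwargs, context_kwargs


# Usage with Playwright (not executed here):
# launch_kwargs, context_kwargs = build_browser_options(
#     proxy_server="<YOUR_PROXY_SERVER>", user_agent="<YOUR_USER_AGENT>"
# )
# browser = p.chromium.launch(**launch_kwargs)
# context = browser.new_context(**context_kwargs)
# page = context.new_page()
```

Keeping the options in plain dictionaries makes it easy to switch proxies or user agents between runs without touching the scraping logic.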

However, complex anti-bot systems like Cloudflare and Akamai combine JavaScript challenges with unpredictable machine-learning algorithms to detect bot-like activities. So, you'll need more than proxy and user agent configuration to bypass advanced blocks.

The best way to avoid anti-bot detection with Playwright is to use a web scraping API, such as ZenRows. It auto-rotates premium proxies, fixes your request headers, and bypasses CAPTCHAs and any other anti-bot system, regardless of complexity.

You can also integrate ZenRows with Playwright and scrape any website at scale without getting blocked.

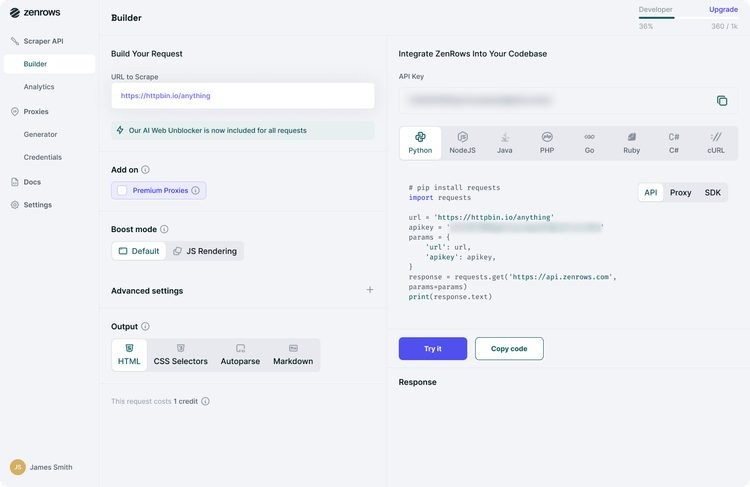

To try it for free, sign up to open the Request Builder. Paste the protected page URL in the link box, set the Boost mode to JS Rendering, and activate Premium Proxies. Select cURL and choose the API request mode:

Modify the generated cURL in your code like so:

# import the required libraries
from playwright.sync_api import sync_playwright
from urllib.parse import urlencode

with sync_playwright() as p:
    # start a browser instance
    browser = p.chromium.launch()
    page = browser.new_page()

    # modify the generated cURL and encode the target URL
    # (single quotes inside the f-string keep it valid on Python versions before 3.12)
    formatted_url = (
        "https://api.zenrows.com/v1/?"
        "apikey=<YOUR_ZENROWS_API_KEY>&"
        f"{urlencode({'url': 'https://www.g2.com/products/asana/reviews'})}&"
        "js_render=true&"
        "premium_proxy=true"
    )

    # open the target website
    page.goto(formatted_url)

    # print the page content
    print(page.content())

    # close the browser
    browser.close()

The code accesses the protected website and outputs its HTML, as shown:

<!DOCTYPE html>
<html>
<head>
    <meta charset="utf-8" />
    <link href="https://www.g2.com/images/favicon.ico" rel="shortcut icon" type="image/x-icon" />
    <title>Asana Reviews, Pros + Cons, and Top Rated Features</title>
</head>
<body>
    <!-- other content omitted for brevity -->
</body>

You've just bypassed anti-bot protection by integrating ZenRows into your Playwright scraper. Congratulations!
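The request URL in the snippet above is assembled from string fragments. One alternative, sketched below, is to pass every parameter through `urlencode` so the whole query string is escaped in one place. The function name `build_zenrows_url` is hypothetical; the endpoint and the `apikey`, `url`, `js_render`, and `premium_proxy` parameters are the ones shown in the code above.

```python
from urllib.parse import urlencode


def build_zenrows_url(api_key, target_url, js_render=True, premium_proxy=True):
    """Build the API request URL with all query parameters encoded."""
    params = {"apikey": api_key, "url": target_url}

    if js_render:
        params["js_render"] = "true"
    if premium_proxy:
        params["premium_proxy"] = "true"

    return "https://api.zenrows.com/v1/?" + urlencode(params)
```

The result can be passed straight to `page.goto()`, for example `page.goto(build_zenrows_url("<YOUR_ZENROWS_API_KEY>", "https://www.g2.com/products/asana/reviews"))`.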

ZenRows also features JavaScript instructions for scraping dynamic content. This means you can replace Playwright with ZenRows and scrape without worrying about Playwright's complexities!

Conclusion

In this article, you've learned how to extract data from pages with infinite scrolling and a "load more" button, the two common hurdles in web scraping at scale.

Apart from challenging UI elements, you'll encounter various anti-bot systems that prevent you from accessing your target data while web scraping. The surefire solution to bypass them is a web scraping API such as ZenRows. Thanks to premium proxies, you’ll avoid detection mechanisms and scrape any website without getting blocked. Try ZenRows for free!