Scraping a website involves implementing mechanisms to avoid being detected as a bot. In this tutorial, we'll change the User Agent in Playwright and learn the best practices.

What Is the Playwright User Agent?

The Playwright User Agent is a string that identifies you to the websites you visit when using the headless browser. It's sent in the User-Agent HTTP request header and shares information about your operating system and browser.

A typical User Agent (UA) looks like this:

Mozilla/5.0 (Macintosh; Intel Mac OS X 10.15; rv:109.0) Gecko/20100101 Firefox/109.0

From it, we can tell that, for example, the user is accessing the website with Firefox version 109 on a Mac running Mac OS X 10.15.
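To make that reading concrete, here is a minimal sketch that pulls the same details out of a UA string. The `describeUserAgent` helper and its regular expressions are hypothetical and only cover a few common desktop browsers, so treat it as an illustration rather than a complete parser.

```javascript
// Hypothetical helper: extracts the platform details and the browser
// name/version from a User Agent string. Real-world UAs vary a lot,
// so this only handles a few common desktop patterns.
function describeUserAgent(ua) {
  // The first parenthesized group holds the platform details
  const platformMatch = ua.match(/\(([^)]+)\)/);

  // Check the specific tokens first: Chrome UAs also contain "Safari"
  const browserMatch =
    ua.match(/(Firefox|HeadlessChrome|Chrome)\/([\d.]+)/) ||
    ua.match(/(Safari)\/([\d.]+)/);

  return {
    platform: platformMatch ? platformMatch[1] : null,
    browser: browserMatch ? browserMatch[1] : null,
    version: browserMatch ? browserMatch[2] : null,
  };
}

const info = describeUserAgent(
  'Mozilla/5.0 (Macintosh; Intel Mac OS X 10.15; rv:109.0) Gecko/20100101 Firefox/109.0'
);
console.log(info);
```

For the Firefox string above, the helper reports Firefox as the browser, 109.0 as the version, and the Macintosh details as the platform.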

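However, the default User Agent of Playwright's headless Chromium is different and looks something like the one below. Note the HeadlessChrome token, followed by the browser version:

Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) HeadlessChrome/115.0.5790.75 Safari/537.36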

Clearly, it's easy to spot that you're not a regular user. Thus, it's critical to change the default Playwright User Agent to avoid detection.
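To see why, consider how little code a website needs for such a check. This is a minimal sketch, assuming the site simply looks for automation-related tokens. The token list and the `looksAutomated` function are hypothetical, and real anti-bot systems inspect far more than the UA.

```javascript
// Hypothetical server-side check: flags User Agents that carry tokens
// commonly associated with automation tools. The token list is an
// assumption made for this example.
const AUTOMATION_TOKENS = ['HeadlessChrome', 'Playwright'];

function looksAutomated(userAgent) {
  return AUTOMATION_TOKENS.some((token) => userAgent.includes(token));
}

// A headless Chromium UA is flagged, a regular Firefox UA is not
console.log(
  looksAutomated(
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) HeadlessChrome/115.0.5790.75 Safari/537.36'
  )
);
console.log(
  looksAutomated(
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10.15; rv:109.0) Gecko/20100101 Firefox/109.0'
  )
);
```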

How to Set a Custom User Agent in Playwright

Let's learn how to set a custom User Agent (UA) in Playwright so that your scraper can fly under the radar!

1. Getting Started

To get started, create a project folder on your device. Then, create a JavaScript file (e.g. scraping.js) in the folder.

Next, install Playwright in your project by using npm (Node.js package manager).

npm install playwright

Then, import Playwright.

const { chromium } = require('playwright');

To scrape a webpage with Playwright, your initial code would look like this:

const { chromium } = require('playwright');

(async () => {
  // Launch the Chromium browser
  const browser = await chromium.launch();
  const context = await browser.newContext();

  // Create a new page in the browser context and navigate to target URL
  const page = await context.newPage();
  await page.goto('https://httpbin.io/user-agent');

  // Get the entire page content
  const pageContent = await page.content();
  console.log(pageContent);

  // Close the browser
  await browser.close();
})();

We used Httpbin.io as a target URL to get our UA in the response.

Run it, and you'll get a similar output to the one below. It displays your default User Agent in Playwright.

<html><head><meta name="color-scheme" content="light dark"></head><body><pre style="word-wrap: break-word; white-space: pre-wrap;">{
  "user-agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) HeadlessChrome/115.0.5790.75 Safari/537.36"
}
</pre></body></html>
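Because the JSON arrives wrapped in the browser's HTML viewer markup, you may prefer to extract just the UA value. Here is a minimal sketch, assuming the response keeps the `<pre>` wrapper shown above. The `extractUserAgent` helper is hypothetical and not part of Playwright.

```javascript
// Hypothetical helper: pulls the "user-agent" value out of the HTML that
// wraps the JSON response. It assumes the JSON sits inside a single <pre>
// element and contains no HTML-escaped characters.
function extractUserAgent(html) {
  // Capture everything between <pre ...> and </pre>
  const match = html.match(/<pre[^>]*>([\s\S]*?)<\/pre>/);
  if (!match) return null;

  try {
    return JSON.parse(match[1])['user-agent'] ?? null;
  } catch {
    return null; // the <pre> body was not valid JSON
  }
}

// Sample input copied from the output above
const sampleHtml = `<html><head><meta name="color-scheme" content="light dark"></head><body><pre style="word-wrap: break-word; white-space: pre-wrap;">{
  "user-agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) HeadlessChrome/115.0.5790.75 Safari/537.36"
}
</pre></body></html>`;

console.log(extractUserAgent(sampleHtml));
```

In the scraping script, you would pass it the string returned by `page.content()` instead of the sample.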

If you want to review your fundamentals in this headless browser, take a look at our Playwright tutorial.

2. Customize UA

To change your Playwright User Agent, specify a custom one when creating the browser context. We'll use the real one we displayed earlier.

Mozilla/5.0 (Macintosh; Intel Mac OS X 10.15; rv:109.0) Gecko/20100101 Firefox/109.0

Add it in the userAgent parameter:

const { chromium } = require('playwright');

(async () => {
  // Launch the Chromium browser
  const browser = await chromium.launch();
  const context = await browser.newContext({
    userAgent: 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10.15; rv:109.0) Gecko/20100101 Firefox/109.0',
  });

  // Create a new page in the browser context and navigate to target URL
  const page = await context.newPage();
  await page.goto('https://httpbin.io/user-agent');

  // Get the entire page content
  const pageContent = await page.content();
  console.log(pageContent);

  // Close the browser
  await browser.close();
})();

Run the script with the terminal command node scraping.js. You should see the following:

<html><head><meta name="color-scheme" content="light dark"></head><body><pre style="word-wrap: break-word; white-space: pre-wrap;">{
  "user-agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10.15; rv:109.0) Gecko/20100101 Firefox/109.0"
}
</pre></body></html>

That's it! You've successfully set a custom Playwright User Agent.

However, using a single UA and making many requests will make it easy for anti-bot systems to detect you as a bot. Therefore, you need to randomize it to pretend your requests are coming from different users.

3. Use a Random User Agent in Playwright

By rotating your Playwright User Agent randomly, you can mimic user behavior, making it more challenging for websites to identify and block your automated activities.

To rotate the User Agent, first create a list of UAs. We'll take some examples from our list of User Agents for web scraping. Here's how to add them:

const { chromium } = require('playwright');

// An array of user agent strings for different versions of Chrome on Windows and Mac
const userAgentStrings = [
  'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',
  'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',
  'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',
  'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/110.0.0.0 Safari/537.36',
];

Next, use the Math.random() function to select a random UA from your list each time a browser context is created. It returns a floating-point number from 0 (inclusive) up to, but not including, 1. Multiplying it by the length of the User Agent list and passing the result to Math.floor() gives a random index between 0 and the last position in the list, which selects a UA.

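(async () => {
  // Launch the Chromium browser
  const browser = await chromium.launch();

  // Pick a random User Agent from the list for this browser context
  const context = await browser.newContext({
    userAgent: userAgentStrings[Math.floor(Math.random() * userAgentStrings.length)],
  });

  // ... the rest of the script stays the same
})();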

Bringing it all together:

const { chromium } = require('playwright');

// An array of user agent strings for different versions of Chrome on Windows and Mac
const userAgentStrings = [
  'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',
  'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',
  'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',
  'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/110.0.0.0 Safari/537.36',
];

(async () => {
  // Launch the Chromium browser
  const browser = await chromium.launch();
  const context = await browser.newContext({
    userAgent: userAgentStrings[Math.floor(Math.random() * userAgentStrings.length)],
  });

  // Create a new page in the browser context and navigate to target URL
  const page = await context.newPage();
  await page.goto('https://httpbin.io/user-agent');

  // Get the entire page content
  const pageContent = await page.content();
  console.log(pageContent);

  // Close the browser
  await browser.close();
})();

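With that, each time the script runs, a User Agent is picked at random from the list. Run the command node scraping.js a few times in your terminal, and you should see the UA change between runs (with only four entries, the same one will occasionally come up twice in a row):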

<html><head><meta name="color-scheme" content="light dark"></head><body><pre style="word-wrap: break-word; white-space: pre-wrap;">{
  "user-agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36"
}
</pre></body></html>

=====

<html><head><meta name="color-scheme" content="light dark"></head><body><pre style="word-wrap: break-word; white-space: pre-wrap;">{
  "user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36"
}
</pre></body></html>

Excellent! You've now successfully rotated your User Agent.
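The script above picks one UA per run. If a single run visits many pages, you may want a fresh UA for each browser context instead. One possible approach, sketched here with a hypothetical `createRotator` helper, is to shuffle the list once and then cycle through it, so no UA repeats until all of them have been used.

```javascript
// Hypothetical helper: returns a function that hands out the items in a
// shuffled order and starts over once every item has been used.
// The `random` parameter exists only so the behavior can be tested.
function createRotator(items, random = Math.random) {
  if (items.length === 0) {
    throw new Error('Cannot rotate over an empty list');
  }

  // Fisher-Yates shuffle on a copy, so the caller's array is untouched
  const pool = [...items];
  for (let i = pool.length - 1; i > 0; i--) {
    const j = Math.floor(random() * (i + 1));
    [pool[i], pool[j]] = [pool[j], pool[i]];
  }

  let cursor = 0;
  return function next() {
    const item = pool[cursor];
    cursor = (cursor + 1) % pool.length; // wrap around at the end
    return item;
  };
}

// Placeholder values stand in for real User Agent strings
const nextUserAgent = createRotator(['UA-1', 'UA-2', 'UA-3']);
console.log(nextUserAgent(), nextUserAgent(), nextUserAgent());
```

In a Playwright script, you could then call `browser.newContext({ userAgent: nextUserAgent() })` whenever you open a new context.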

As you build a bigger list (you'll need one for real-world scraping), you'll find it's quite easy to create inconsistent UAs. For example, if your UA claims Google Chrome v83 on Windows while the other headers in the HTTP request are those of a Mac, your target website may detect the discrepancy and block your requests. Avoiding this sounds easy, but such mismatches aren't always evident when you're assembling your UAs.
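A quick sanity check can catch this kind of mismatch before you send a request. The sketch below assumes that the other header in question is the `Sec-CH-UA-Platform` client hint and that only desktop platforms are involved. Both helper functions are hypothetical.

```javascript
// Hypothetical helpers: compare the platform implied by the User Agent with
// the one announced in the Sec-CH-UA-Platform header. Desktop UAs only;
// Android UAs also contain "Linux" and would need extra handling.
function platformFromUserAgent(userAgent) {
  if (userAgent.includes('Windows NT')) return 'Windows';
  if (userAgent.includes('Mac OS X')) return 'macOS';
  if (userAgent.includes('Linux')) return 'Linux';
  return null;
}

function headersAreConsistent(headers) {
  const uaPlatform = platformFromUserAgent(headers['user-agent'] || '');
  // Client hint values are quoted strings, e.g. "macOS"
  const hinted = (headers['sec-ch-ua-platform'] || '').replace(/"/g, '');

  // With no hint or an unrecognized UA, there is nothing to contradict
  if (!hinted || !uaPlatform) return true;
  return hinted === uaPlatform;
}

// A Windows UA paired with a macOS platform hint is inconsistent
console.log(
  headersAreConsistent({
    'user-agent':
      'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',
    'sec-ch-ua-platform': '"macOS"',
  })
);
```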

Also, it's important to keep your User Agent list up to date to avoid being flagged as a bot. However, this can be a tiring process that's difficult to keep up with.
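Part of that upkeep can be scripted. As a minimal sketch, the hypothetical `dropOutdated` helper below removes Chrome UAs older than a major version you choose. The cutoff itself is an assumption you'd still have to update by hand.

```javascript
// Hypothetical helpers: filter out Chrome User Agents whose major version
// is below a chosen cutoff. UAs without a Chrome token are kept as they
// are, since this sketch only knows how to date Chrome.
function chromeMajorVersion(userAgent) {
  const match = userAgent.match(/Chrome\/(\d+)\./);
  return match ? Number(match[1]) : null;
}

function dropOutdated(userAgents, minChromeMajor) {
  return userAgents.filter((userAgent) => {
    const major = chromeMajorVersion(userAgent);
    return major === null || major >= minChromeMajor;
  });
}

const candidates = [
  'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',
  'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',
  'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/110.0.0.0 Safari/537.36',
];

// Keeps the Chrome 109 and 110 entries and drops Chrome 108
console.log(dropOutdated(candidates, 109));
```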

But is there any easier and automated way to do all of that?

The Easy Solution to Change User Agents in Playwright and Avoid Getting Blocked

Changing your Playwright User Agent alone is not enough to bypass anti-bot challenges like rate limiting, cookie analysis, and CAPTCHAs. In these cases, using residential proxies is a more effective solution to prevent getting blocked. Yet, the UAs still play an important role.

An easy solution to deal with all those difficulties is to use a web scraping API like ZenRows. It deals with those challenges for you, including rotating your User Agent at scale so that you can focus on getting the data you need.

You can integrate its proxies with Playwright, but we recommend trying ZenRows without Playwright, because the API on its own gives you the full anti-bot bypass functionality as well as the capabilities you'd otherwise use Playwright for.

To get started with ZenRows, sign up to obtain your free API key, and you'll get to the Request Builder page.

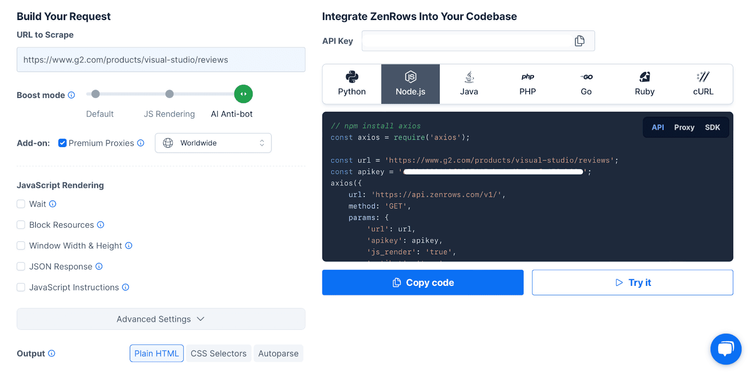

Input the URL of the website you want to scrape (for example, the heavily protected G2), select the "Anti-bot" boost mode (it also includes JS rendering), and add the "Premium Proxies" add-on. Lastly, select Node.js as a language, and you'll get your scraper code.

You'll need an HTTP request library. We recommend Axios for Node.js, but you can use any.

npm install axios

Copy the scraper code from ZenRows and paste it into your project file.

// Import the Axios library
const axios = require('axios');

// Define the URL and API key
const url = 'https://www.g2.com/products/visual-studio/reviews';
const apikey = 'YOUR_API_KEY';

// Make an HTTP request using Axios
axios({
  url: 'https://api.zenrows.com/v1/',
  method: 'GET',
  params: {
    'url': url,
    'apikey': apikey,
    'js_render': 'true',
    'antibot': 'true',
    'premium_proxy': 'true',
  },
})
  .then(response => console.log(response.data)) // Print the API response data
  .catch(error => console.log(error)); // Handle any errors

Run the code, and you should get a result similar to this one:

// The following HTML code has been reduced for clarity.

<!DOCTYPE html><head><meta charset="utf-8" /><link href="https://www.g2.com/assets/favicon-fdacc4208a68e8ae57a80bf869d155829f2400fa7dd128b9c9e60f07795c4915.ico" rel="shortcut icon" type="image/x-icon" /><title>Visual Studio Reviews 2023: Details, Pricing, & Features | G2</title><meta content="78D210F3223F3CF585EB2436D17C6943" name="msvalidate.01" /><meta content="width=device-width, initial-scale=1" name="viewport" /><meta content="GNU Terry Pratchett" http-equiv="X-Clacks-Overhead" /><meta content="ie=edge" http-equiv="x-ua-compatible" /><meta content="en-us" http-equiv="content-language" /><meta content="website" property="og:type" /><meta content="G2" property="og:site_name" /><meta content="@G2dotcom" name="twitter:site" /><meta content="The G2 on Visual Studio" property="og:title" /><meta content="https://www.g2.com/products/visual-studio/reviews" property="og:url" /><meta content="Filter 3382 reviews by the users' company size, role or industry to find out how Visual Studio works for a business like yours." property="og:description" />

Awesome! See how easy that was?
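If you're curious what that request looks like on the wire, the `params` object from the Axios call ends up as a query string on the API endpoint. Here is a rough sketch using Node's built-in `URLSearchParams`. The `buildRequestUrl` helper is hypothetical, and Axios's own serializer may encode a few characters differently.

```javascript
// Hypothetical helper: builds the full request URL from the same parameters
// that the Axios call passes in `params`. URLSearchParams percent-encodes
// the target URL so it survives as a single query value.
function buildRequestUrl(targetUrl, apiKey) {
  const params = new URLSearchParams({
    url: targetUrl,
    apikey: apiKey,
    js_render: 'true',
    antibot: 'true',
    premium_proxy: 'true',
  });
  return `https://api.zenrows.com/v1/?${params.toString()}`;
}

console.log(
  buildRequestUrl('https://www.g2.com/products/visual-studio/reviews', 'YOUR_API_KEY')
);
```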

Conclusion

Scraping under the radar is frequently necessary to retrieve the data you want from a web page without getting blocked. Customizing and randomizing your Playwright User Agent is one of the best practices you can implement.

Beyond that, there are more advanced anti-bot measures you need to be aware of, like rate limiting, CAPTCHAs, etc. And sadly, changing your User Agent alone isn't a good enough solution. Yet, a web scraping API like ZenRows provides an easy route to retrieve data from any website. Give it a try for happy scraping!
