Do you want to extract content from multiple pages using Selenium with Python? It’s easy to do with Selenium’s automation capabilities.

In this article, you'll learn how to scrape different paginated websites with Selenium, including those using JavaScript for infinite scrolling.

This tutorial uses Python 3, but you can apply the same methods in other languages with Selenium bindings, such as Java, Golang, PHP, and C#.

When You Have a Navigation Page Bar

Navigation bars are the most common form of website pagination. You can scrape them by following the next page link or by changing the page number in the URL.

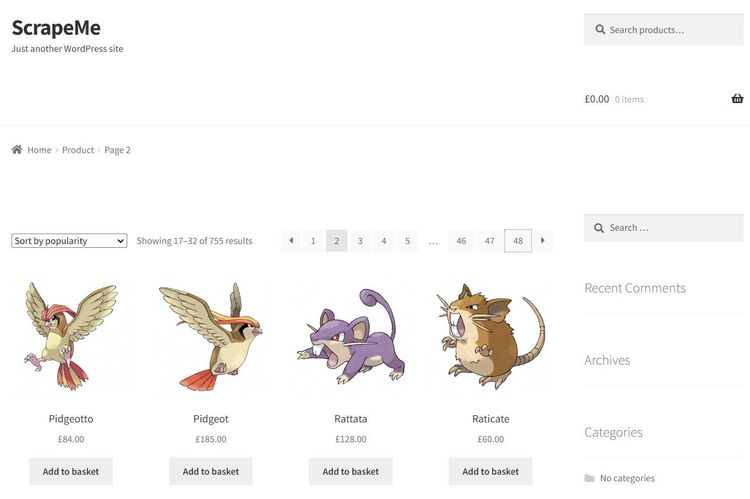

You'll scrape product information from ScrapeMe, a paginated website with navigation bars, to see how both methods work. See its layout below:

That website has 48 pages, and you'll scrape them all. You'll see how to do that in the next sections.

Use the Next Page Link

The next page link method clicks the next button on the navigation bar to move to the following page. You'll extract product names and prices from the target website to see how it works.

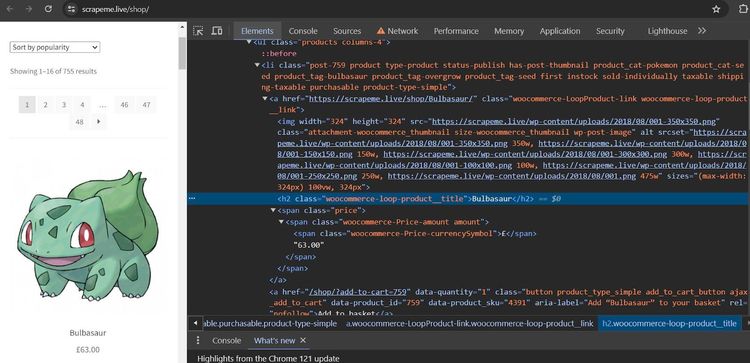

First, inspect the page to view each product's HTML layout:

Next, scrape the first page with the following code. The code launches the Selenium WebDriver in headless mode and visits the target website. It then iterates through the product containers to extract the required information:

```python
# import the required libraries
from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.chrome.options import Options

chrome_options = Options()
chrome_options.add_argument("--headless")
driver = webdriver.Chrome(options=chrome_options)

# visit the target website
driver.get("https://scrapeme.live/shop/")

# extract the product containers
product_container = driver.find_elements(By.CLASS_NAME, "woocommerce-LoopProduct-link")

# extract the product names and prices
for product in product_container:
    name = product.find_element(By.CLASS_NAME, "woocommerce-loop-product__title")
    price = product.find_element(By.CLASS_NAME, "price")
    print(name.text)
    print(price.text)

driver.quit()
```

The code outputs all the products on the first page as expected:

```
Bulbasaur
£63.00
# ... other products omitted for brevity
Pidgey
£159.00
```

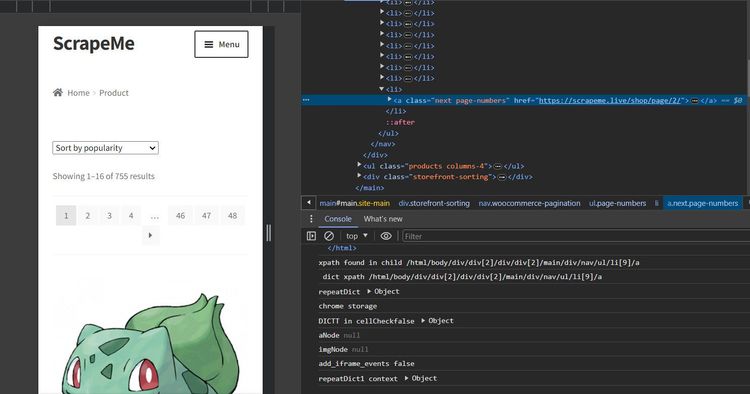

That works! But you want to follow all pages and scrape the entire website. Before doing so, right-click the next page button and select Inspect to expose its HTML attributes:

It's time to modify the previous code to scrape all pages. Start a new driver instance and visit the target web page, as shown:

```python
# import the required libraries
from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.chrome.options import Options
from selenium.common.exceptions import NoSuchElementException

chrome_options = Options()
chrome_options.add_argument("--headless")
driver = webdriver.Chrome(options=chrome_options)

# visit the target website
driver.get("https://scrapeme.live/shop/")
```

Define the scraping logic inside a dedicated function. This loops through the product containers to extract the required data:

```python
def scraper():
    # get the product containers
    product_container = driver.find_elements(By.CLASS_NAME, "woocommerce-LoopProduct-link")

    # extract the product names and prices
    for product in product_container:
        name = product.find_element(By.CLASS_NAME, "woocommerce-loop-product__title")
        price = product.find_element(By.CLASS_NAME, "price")
        print(f"Name: {name.text}")
        print(f"Price: {price.text}")
```
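If you'd rather collect the results than print them, the scraper can return a list of dictionaries instead. The sketch below is a hypothetical variant: `scrape_products` isn't part of the original code. It accepts any Selenium WebDriver and passes the plain string `"class name"`, which is the value of `By.CLASS_NAME`, so it needs no extra imports.

```python
def scrape_products(driver):
    """Return the name and price of every product on the current page.

    Hypothetical helper: `driver` is any Selenium WebDriver instance.
    "class name" is the string value of selenium's By.CLASS_NAME.
    """
    rows = []
    containers = driver.find_elements("class name", "woocommerce-LoopProduct-link")
    for product in containers:
        name = product.find_element("class name", "woocommerce-loop-product__title")
        price = product.find_element("class name", "price")
        # store each product as a dictionary instead of printing it
        rows.append({"name": name.text, "price": price.text})
    return rows
```

For example, `products = scrape_products(driver)` gives you a list you can count, filter, or save later.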

Next, run the scraper and click the next page button repeatedly inside a while loop. The last page has no next page button, so find_element raises NoSuchElementException there, and the except block breaks out of the loop:

```python
# ...

while True:
    # execute the scraper function
    scraper()

    try:
        # find and click the next page link
        # (both classes sit on one <a> tag, so a CSS selector is the right locator)
        next_page_link = driver.find_element(By.CSS_SELECTOR, "a.next.page-numbers")
        next_page_link.click()
    except NoSuchElementException:
        print("No more pages available")
        break

driver.quit()
```
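As a variation on the loop above, you can avoid using an exception to end the loop. The find_elements method returns an empty list when nothing matches, so a missing link becomes a simple check. The sketch below also adds a max_pages cap so that a site whose next link never disappears can't loop forever. The name crawl_next_links and the cap of 100 are illustrative assumptions, not part of the original tutorial.

```python
def crawl_next_links(driver, scrape_page, next_selector="a.next.page-numbers", max_pages=100):
    """Scrape the current page, then follow the next page link until it disappears.

    Hypothetical helper: `scrape_page` is any zero-argument callable, such as the
    scraper() function above. "css selector" is the value of By.CSS_SELECTOR.
    Returns the number of pages scraped.
    """
    pages_scraped = 0
    while pages_scraped < max_pages:
        scrape_page()
        pages_scraped += 1
        # find_elements returns an empty list instead of raising when nothing matches
        next_links = driver.find_elements("css selector", next_selector)
        if not next_links:
            break
        next_links[0].click()
    return pages_scraped
```

You'd call it as `crawl_next_links(driver, scraper)`. If you set an implicit wait, the final find_elements call waits for the full timeout before it returns an empty list.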

Put everything together, and your final code should look like this:

```python
from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.chrome.options import Options
from selenium.common.exceptions import NoSuchElementException

chrome_options = Options()
chrome_options.add_argument("--headless")
driver = webdriver.Chrome(options=chrome_options)

# visit the target website
driver.get("https://scrapeme.live/shop/")

def scraper():
    # get the product containers
    product_container = driver.find_elements(By.CLASS_NAME, "woocommerce-LoopProduct-link")

    # extract the product names and prices
    for product in product_container:
        name = product.find_element(By.CLASS_NAME, "woocommerce-loop-product__title")
        price = product.find_element(By.CLASS_NAME, "price")
        print(f"Name: {name.text}")
        print(f"Price: {price.text}")

while True:
    # execute the scraper function
    scraper()

    try:
        # find and click the next page link
        next_page_link = driver.find_element(By.CSS_SELECTOR, "a.next.page-numbers")
        next_page_link.click()
    except NoSuchElementException:
        print("No more pages available")
        break

driver.quit()
```

The code follows each page and extracts its content, as shown:

```
Name: Bulbasaur
Price: £63.00
Name: Ivysaur
Price: £87.00
# ... 751 items omitted for brevity
Name: Stakataka
Price: £190.00
Name: Blacephalon
Price: £149.00
```

You've now scraped all 755 items from a paginated website by following its next page link. That's great! You'll learn the other method in the next section.

Change the Page Number in the URL

Changing the page number in the URL is a more manual method: you increment the page number in the URL until you reach the last page.

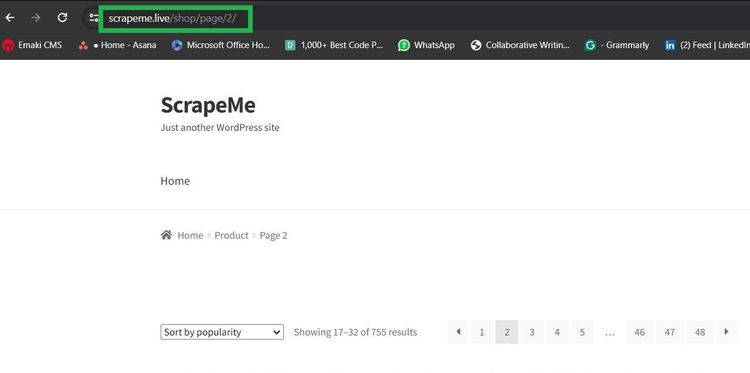

To use this method, you must understand how the website formats the page number in its URL during page navigation. You'll stick to ScrapeMe as the demo website for this section.

Observe how it formats the second page in the URL box:

The URL follows the format https://scrapeme.live/shop/page/<page-number>. You'll use that pattern to navigate the website and scrape all its products.
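To check the pattern quickly, this small helper generates every page URL from a template. Its name, page_urls, is hypothetical and doesn't appear in the original code. Note that range() excludes its end value, so the upper bound has to be last_page + 1.

```python
def page_urls(last_page, template="https://scrapeme.live/shop/page/{page}"):
    """Build one URL per page, from page 1 up to and including last_page."""
    # range() stops before its end value, hence last_page + 1
    return [template.format(page=number) for number in range(1, last_page + 1)]
```

With Selenium, you would loop over `page_urls(48)`, calling `driver.get(url)` and then the scraper for each entry.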

First, start the browser in headless mode and define a scraper function that extracts the desired product information from each container:

```python
from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.chrome.options import Options

chrome_options = Options()
chrome_options.add_argument("--headless")
driver = webdriver.Chrome(options=chrome_options)

def scraper():
    product_container = driver.find_elements(By.CLASS_NAME, "woocommerce-LoopProduct-link")
    for product in product_container:
        name = product.find_element(By.CLASS_NAME, "woocommerce-loop-product__title")
        price = product.find_element(By.CLASS_NAME, "price")
        print(f"Name: {name.text}")
        print(f"Price: {price.text}")
```

Next, loop over the page numbers from 1 to the last page (48). In each iteration, insert the page number into the URL, open that page, and run the scraper function:

```python
# ...

# ScrapeMe has 48 pages
last_page = 48

for page_number in range(1, last_page + 1):
    # insert the page number into the URL
    driver.get(f"https://scrapeme.live/shop/page/{page_number}")

    # execute the scraper function
    scraper()

driver.quit()
```
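Hard-coding the last page works for ScrapeMe, but the page count can change as a store adds or removes products. One alternative is to keep incrementing the page number until the scraper finds nothing. The sketch below assumes that a page past the end shows no product containers. The helper name and the max_pages safety cap are illustrative.

```python
def scrape_until_empty(load_page, scrape_page, max_pages=1000):
    """Visit page 1, 2, 3, ... until a page yields no products.

    Hypothetical helper: load_page(n) opens page n, and scrape_page() returns
    the list of products found on the currently open page.
    """
    results = []
    for page_number in range(1, max_pages + 1):
        load_page(page_number)
        products = scrape_page()
        # an empty page is assumed to mean we've gone past the last page
        if not products:
            break
        results.extend(products)
    return results
```

With Selenium, load_page could be `lambda n: driver.get(f"https://scrapeme.live/shop/page/{n}")`, and scrape_page could be a version of scraper() that returns the products instead of printing them.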

Here's the final code:

```python
from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.chrome.options import Options

chrome_options = Options()
chrome_options.add_argument("--headless")
driver = webdriver.Chrome(options=chrome_options)

def scraper():
    product_container = driver.find_elements(By.CLASS_NAME, "woocommerce-LoopProduct-link")
    for product in product_container:
        name = product.find_element(By.CLASS_NAME, "woocommerce-loop-product__title")
        price = product.find_element(By.CLASS_NAME, "price")
        print(f"Name: {name.text}")
        print(f"Price: {price.text}")

# ScrapeMe has 48 pages
last_page = 48

for page_number in range(1, last_page + 1):
    # insert the page number into the URL
    driver.get(f"https://scrapeme.live/shop/page/{page_number}")

    # execute the scraper function
    scraper()

driver.quit()
```

The script extracts the specified product information from all pages:

```
Name: Bulbasaur
Price: £63.00
Name: Ivysaur
Price: £87.00
# ... 751 items omitted for brevity
Name: Stakataka
Price: £190.00
Name: Blacephalon
Price: £149.00
```

Splendid! You just added two methods of scraping a navigation bar to your portfolio. That's not all. Some websites use JavaScript pagination, and you must learn to handle them.

When JavaScript-Based Pagination Is Required

Websites with JavaScript-based pagination load more content dynamically as you interact with the page. They do this either with infinite scrolling, which loads content automatically as you scroll down, or with a "Load More" button that shows more content when clicked.

Selenium handles such websites well because it drives a real browser that fully executes JavaScript. The following sections show both methods of scraping JavaScript pagination.

Infinite Scroll to Load More Content

Infinite scroll means a website loads more content automatically as you scroll down the page. It's common on social media and e-commerce sites.

For this, you'll scrape product names and prices from ScrapingClub, a web page that uses infinite scrolling to show more content. See the demonstration below:

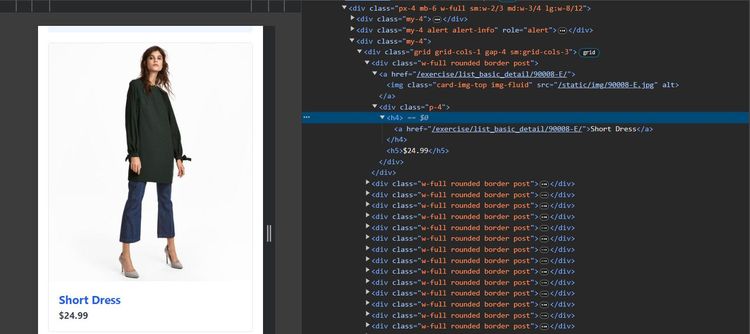

Right-click any product in your browser and select Inspect to see its HTML layout. Each product sits inside a div tag that contains its name and price.

First, use the following code to extract the page HTML without scrolling:

```python
# import the required libraries
from selenium import webdriver
from selenium.webdriver.chrome.options import Options

# initialize the Chrome browser in headless mode
chrome_options = Options()
chrome_options.add_argument("--headless")
driver = webdriver.Chrome(options=chrome_options)

# open the target page (driver.get waits for the initial page load)
driver.get("https://scrapingclub.com/exercise/list_infinite_scroll/")

# set an implicit wait: later element lookups retry for up to 10 seconds
driver.implicitly_wait(10)

# print the page HTML content
print(driver.page_source)

# close the browser
driver.quit()
```

The HTML output shows that the code only gets the first 10 products:

```html
<!-- ... -->
<div class="p-4">
  <h4>
    <a href="/exercise/list_basic_detail/90008-E/">Short Dress</a>
  </h4>
  <h5>$24.99</h5>
</div>
<!-- 8 products omitted for brevity -->
<div class="p-4">
  <h4>
    <a href="/exercise/list_basic_detail/94766-A/">Fitted Dress</a>
  </h4>
  <h5>$34.99</h5>
</div>
<!-- ... -->
```

Now, let's add scrolling to load more content. This time, you'll extract each product's name and price.

To begin, set up the browser in headless mode with the options flag:

```python
# import the required libraries
from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.chrome.options import Options
import time

# initialize the Chrome browser
chrome_options = Options()
chrome_options.add_argument("--headless")
driver = webdriver.Chrome(options=chrome_options)
```

Next, define a scraper function that extracts the name and price from each product container using their selectors. Extend the code like so:

```python
# function to extract data from the page
def scraper():
    # extract the product containers
    products = driver.find_elements(By.CLASS_NAME, "post")

    # loop through the product containers to extract names and prices
    for product in products:
        name = product.find_element(By.CSS_SELECTOR, "h4 a").text
        price = product.find_element(By.CSS_SELECTOR, "h5").text
        print(f"Name: {name}")
        print(f"Price: {price}")
```

Finally, open the web page and scroll in a while loop, comparing the page's current scroll height with the previous one. When the height stops changing, all the content has loaded, so the code runs the scraper function and exits the loop with a break statement:

```python
# ...

# open the target page
driver.get("https://scrapingclub.com/exercise/list_infinite_scroll/")

last_height = driver.execute_script("return document.body.scrollHeight")

while True:
    # scroll down to the bottom
    driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")

    # wait for the page to load
    time.sleep(2)

    # get the new height and compare it with the last height
    new_height = driver.execute_script("return document.body.scrollHeight")
    if new_height == last_height:
        # extract data once all content has loaded
        scraper()
        break
    last_height = new_height

# close the browser
driver.quit()
```
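The while True loop above stops only when the page height stops changing, so it would never exit on a feed that keeps loading content. The sketch below wraps the same height comparison in a function with a max_rounds cap and returns the number of scrolls it performed. The function name and the cap are illustrative assumptions. The function only calls driver.execute_script, so you can pass it the driver from the code above, and the default pause mirrors the original time.sleep(2).

```python
import time

def scroll_until_stable(driver, pause=2.0, max_rounds=50):
    """Scroll to the bottom until the page height stops growing.

    Hypothetical helper. Returns the number of scroll rounds performed.
    """
    last_height = driver.execute_script("return document.body.scrollHeight")
    rounds = 0
    while rounds < max_rounds:
        driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")
        rounds += 1
        # give the page time to fetch and render the next batch
        time.sleep(pause)
        new_height = driver.execute_script("return document.body.scrollHeight")
        if new_height == last_height:
            break
        last_height = new_height
    return rounds
```

After `scroll_until_stable(driver)` returns, call `scraper()` to extract everything that has loaded.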

Here's your final code:

```python
# import the required libraries
from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.chrome.options import Options
import time

# initialize the Chrome browser
chrome_options = Options()
chrome_options.add_argument("--headless")
driver = webdriver.Chrome(options=chrome_options)

# function to extract data from the page
def scraper():
    # extract the product containers
    products = driver.find_elements(By.CLASS_NAME, "post")

    # loop through the product containers to extract names and prices
    for product in products:
        name = product.find_element(By.CSS_SELECTOR, "h4 a").text
        price = product.find_element(By.CSS_SELECTOR, "h5").text
        print(f"Name: {name}")
        print(f"Price: {price}")

# open the target page
driver.get("https://scrapingclub.com/exercise/list_infinite_scroll/")

last_height = driver.execute_script("return document.body.scrollHeight")

while True:
    # scroll down to the bottom
    driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")

    # wait for the page to load
    time.sleep(2)

    # get the new height and compare it with the last height
    new_height = driver.execute_script("return document.body.scrollHeight")
    if new_height == last_height:
        # extract data once all content has loaded
        scraper()
        break
    last_height = new_height

# close the browser
driver.quit()
```

This code scrolls the page and extracts all its content:

```
Name: Short Dress
Price: $24.99
# ... 58 products omitted for brevity
Name: Blazer
Price: $49.99
```

Congratulations! You just scraped infinitely loaded content with Selenium and Python. What if the website uses a "Load More" button?

Click on a Button to Load More Content

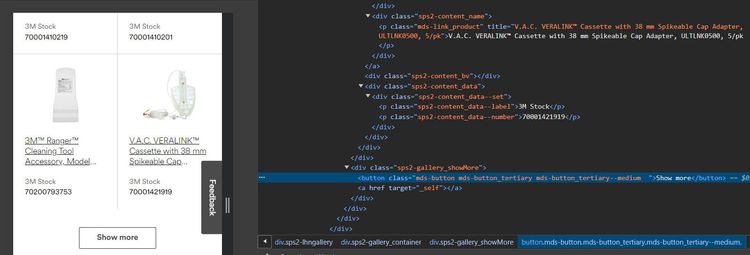

A "Load More" pagination requires clicking a button to show more content dynamically. You'll scrape product names and IDs from 3M's medical products page to see how it works.

See how the website loads content below:

Inspect the element to see its HTML layout:

Each product sits in a div tag, and the "Show more" control is a dedicated button element. You'll load all the content by clicking the "Show more" button with Selenium.

Start a browser and load the target page, as shown below:

```python
# import the required libraries
from selenium import webdriver
from selenium.webdriver.common.by import By
import time

driver = webdriver.Chrome()
driver.maximize_window()

# visit the target website
driver.get("https://www.3m.com.au/3M/en_AU/p/c/medical/")

# element lookups retry for up to 10 seconds
driver.implicitly_wait(10)
```

Next, define a scraper function that extracts the desired information from each product container:

```python
def scraper():
    product_container = driver.find_elements(By.CLASS_NAME, "sps2-content")
    for product in product_container:
        name = product.find_element(By.CLASS_NAME, "sps2-content_name")
        item_id = product.find_element(By.CLASS_NAME, "sps2-content_data--number")
        print(f"Name: {name.text}")
        print(f"Product ID: {item_id.text}")
```

Finally, use a for loop to scroll to the bottom of the page and click the "Load more" button five times. Then extract the content once all the clicks are done. The sleep call gives newly loaded content time to render before the driver clicks the button again.

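```python
# ...

scroll_count = 5

for _ in range(scroll_count):
    # scroll to the bottom of the page
    driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")

    # give the new content time to load
    time.sleep(5)

    # find_elements returns an empty list if the button is gone
    load_more = driver.find_elements(By.CLASS_NAME, "mds-button_tertiary--medium")
    if not load_more:
        break
    load_more[0].click()

# extract the content once all clicks are done
scraper()

driver.quit()
```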
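The loop above clicks at most five times. If you don't know how many batches the page holds, you can keep clicking until the button disappears. The sketch below assumes the site removes the button from the page once everything has loaded, rather than just disabling or hiding it; check that in your browser first. The function name and the max_clicks cap are illustrative.

```python
import time

def click_load_more(driver, css_selector, max_clicks=20, pause=5.0):
    """Click a "Load more" button until it disappears or max_clicks is reached.

    Hypothetical helper. Returns the number of clicks performed.
    "css selector" is the value of selenium's By.CSS_SELECTOR.
    """
    clicks = 0
    while clicks < max_clicks:
        # scroll down so the button is in view, then let new content render
        driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")
        time.sleep(pause)
        buttons = driver.find_elements("css selector", css_selector)
        if not buttons:
            break
        buttons[0].click()
        clicks += 1
    return clicks
```

For the 3M page, that would be `click_load_more(driver, ".mds-button_tertiary--medium")` followed by `scraper()`. With the 10-second implicit wait set above, the final empty lookup takes up to 10 seconds.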

Combine the pieces, and your final code should look like this:

```python
# import the required libraries
from selenium import webdriver
from selenium.webdriver.common.by import By
import time

driver = webdriver.Chrome()
driver.maximize_window()

# visit the target website
driver.get("https://www.3m.com.au/3M/en_AU/p/c/medical/")

# element lookups retry for up to 10 seconds
driver.implicitly_wait(10)

def scraper():
    product_container = driver.find_elements(By.CLASS_NAME, "sps2-content")
    for product in product_container:
        name = product.find_element(By.CLASS_NAME, "sps2-content_name")
        item_id = product.find_element(By.CLASS_NAME, "sps2-content_data--number")
        print(f"Name: {name.text}")
        print(f"Product ID: {item_id.text}")

scroll_count = 5

for _ in range(scroll_count):
    # scroll to the bottom of the page
    driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")

    # give the new content time to load
    time.sleep(5)

    # find_elements returns an empty list if the button is gone
    load_more = driver.find_elements(By.CLASS_NAME, "mds-button_tertiary--medium")
    if not load_more:
        break
    load_more[0].click()

# execute the scraper function once all content has loaded
scraper()

driver.quit()
```

This clicks the "Load more" button and extracts page content, as shown:

```
Name: ACTIV.A.C.™ Sterile Canister with Gel, M8275059/5, 5/cs
Product ID: 70001413270
# ... other products omitted for brevity
Name: 3M™ Tegaderm™ Silicone Foam Dressing, 90632, Non-Bordered, 15 cm x 15 cm, 10/ct, 4ct/Case
Product ID: 70201176958
```

Nice! You now know how to scrape content hidden behind a "Load more" button. However, handling anti-bot measures is also essential while web scraping.

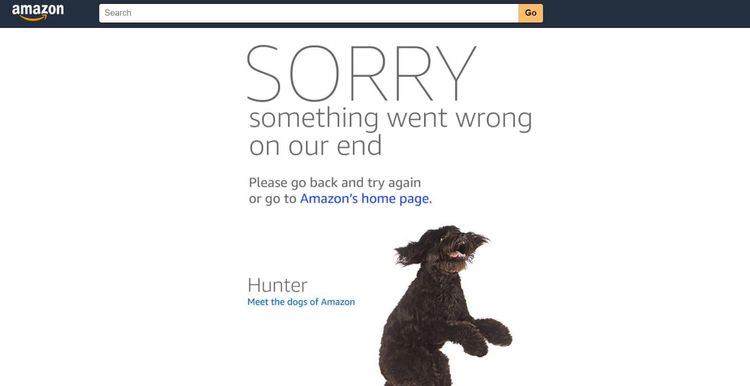

Getting Blocked When Scraping Multiple Pages With Selenium

You're likely to get blocked while scraping multiple pages with Selenium, and you can get blocked even when scraping a single page. To scrape everything you need, you have to get past these blocks.

For example, Selenium might get blocked when accessing an Amazon search results page. Try to open it with the following code:

```python
# import the required libraries
from selenium import webdriver
from selenium.webdriver.chrome.options import Options

chrome_options = Options()
chrome_options.add_argument("--headless")
driver = webdriver.Chrome(options=chrome_options)

# open the target page (driver.get waits for the initial page load)
driver.get("https://www.amazon.com/s?hidden-keywords=bluetooth+headphones")

# set an implicit wait for later element lookups (adjust as needed)
driver.implicitly_wait(10)

# print the page HTML content
print(driver.page_source)

# close the browser
driver.quit()
```

Amazon blocks the request with a 503 error, as shown.

```html
<body>
  <a href="/ref=cs_503_logo">
    <img id="b" src="https://images-na.ssl-images-amazon.com/images/G/01/error/logo._TTD_.png" alt="Amazon.com">
  </a>
  <form id="a" accept-charset="utf-8" action="/s" method="GET" role="search">
    <div id="c">
      <input id="e" name="field-keywords" placeholder="Search">
      <input name="ref" type="hidden" value="cs_503_search">
      <input id="f" type="submit" value="Go">
    </div>
  </form>
</body>
```

This is Amazon's typical blocking page. It's deceptive because Amazon makes it look like a server error, when it's actually a way to block unwanted requests.
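Because the block page doesn't look like an obvious error, it helps to check for it explicitly before parsing. This sketch looks for the cs_503 reference strings that appear in the HTML above. Treat them as page-specific assumptions rather than a reliable signature, since Amazon can change its markup at any time. The function name is hypothetical.

```python
# Markers copied from the Amazon 503 page shown above; Amazon may change them.
BLOCK_MARKERS = ("cs_503_logo", "cs_503_search")

def looks_blocked(page_source, markers=BLOCK_MARKERS):
    """Return True if the HTML contains any known block-page marker."""
    return any(marker in page_source for marker in markers)
```

In the script above, you could run `if looks_blocked(driver.page_source): print("Blocked")` before extracting anything.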

You can reduce anti-bot detection in Selenium by masking your IP address with premium proxies. Another way is to mimic a real browser by rotating the User-Agent header that Selenium sends. However, these steps are usually not enough to bypass detection.
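As a rough sketch of those two techniques, the code below builds Chrome command-line flags for each browser session and cycles through a list of User-Agent strings. The strings, the chrome_args name, and the proxy address in the usage note are assumptions for illustration. Replace the strings with current, real browser User-Agents.

```python
import itertools

# Example User-Agent strings (assumptions: replace with current, real ones)
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
]

def chrome_args(user_agent, proxy=None):
    """Build Chrome command-line flags for one browser session."""
    args = ["--headless", f"--user-agent={user_agent}"]
    if proxy:
        # Chrome's --proxy-server flag takes a scheme://host:port address without credentials
        args.append(f"--proxy-server={proxy}")
    return args

# cycle through the User-Agent list, one entry per new browser session
user_agent_cycle = itertools.cycle(USER_AGENTS)
```

To apply the flags, create a new Options object for each session and call `chrome_options.add_argument(arg)` for every entry in `chrome_args(next(user_agent_cycle), "http://<proxy-host>:<port>")` before passing it to `webdriver.Chrome`. Authenticated proxies need a different setup, because Chrome's flag doesn't accept a username and password.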

The most effective way to bypass anti-bot detection is to pair your web scraper with a web scraping API like ZenRows. ZenRows fixes your headers, configures premium proxies, and evades blocks behind the scenes so you can scrape websites undetected.

For instance, integrating ZenRows lets you avoid the 503 error that Amazon returned earlier.

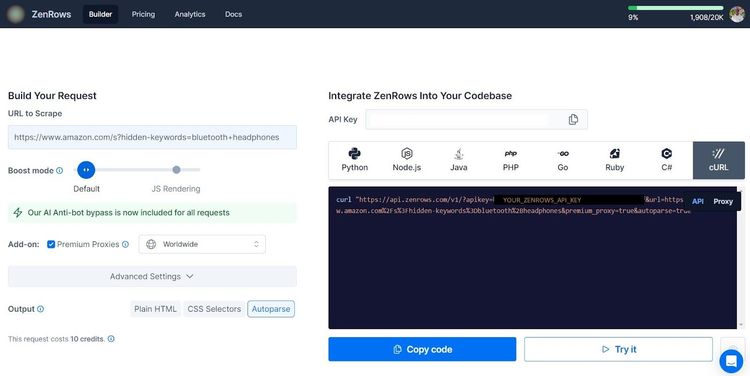

Sign up, and you'll land on the Request Builder. There, click "Premium Proxies", select "Autoparse", and choose "cURL" as your request type.

Now, adapt the generated cURL request for your Selenium web scraper. Pay attention to the new URL format:

```python
# import the required libraries
from selenium import webdriver
from selenium.webdriver.chrome.options import Options
from urllib.parse import urlencode

chrome_options = Options()
chrome_options.add_argument("--headless")
driver = webdriver.Chrome(options=chrome_options)

url = "https://www.amazon.com/s?hidden-keywords=bluetooth+headphones"

# rebuild the generated cURL request as a ZenRows API URL
target_url = (
    "https://api.zenrows.com/v1/?"
    "apikey=<YOUR_ZENROWS_API_KEY>&"
    f"{urlencode({'url': url})}&"
    "premium_proxy=true&"
    "autoparse=true"
)

# open the target page through ZenRows
driver.get(target_url)

# set an implicit wait for later element lookups (adjust as needed)
driver.implicitly_wait(10)

# print the page content
print(driver.page_source)

# close the browser
driver.quit()
```
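If you build the query string from a dictionary, urlencode escapes every value, not just the target URL. The sketch below uses only the parameters shown above (apikey, url, premium_proxy, and autoparse). The helper name zenrows_url is hypothetical.

```python
from urllib.parse import urlencode

def zenrows_url(target_url, api_key, **options):
    """Build a ZenRows API URL; extra keyword arguments become query parameters."""
    params = {"apikey": api_key, "url": target_url, **options}
    # urlencode percent-escapes every key and value
    return "https://api.zenrows.com/v1/?" + urlencode(params)
```

For the Amazon example, that's `zenrows_url(url, "<YOUR_ZENROWS_API_KEY>", premium_proxy="true", autoparse="true")`.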

Selenium with ZenRows scrapes the target page like so:

```
[
  {
    "title": "Sony WH-CH520 Wireless Headphones Bluetooth On-Ear Headset with Microphone, Black New",
    "asin": "B0BS1PRC4L",
    "avg_rating": "4.5 out of 5 stars",
    "review_count": "7,836"
  },
  # ... other products omitted for brevity
  {
    "title": "Skullcandy Sesh Evo In-Ear Wireless Earbuds - Mint (Discontinued by Manufacturer)",
    "asin": "B0857JPMND",
    "avg_rating": "4.1 out of 5 stars",
    "review_count": "35,809"
  }
]
```

That works! You just bypassed an anti-bot system in Selenium using ZenRows.
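The autoparsed fields arrive as strings such as "4.5 out of 5 stars" and "7,836". This sketch converts them into numbers, assuming every record has the four fields shown above. Depending on the browser, the JSON returned through driver.page_source may be wrapped in HTML, so you may need to extract the raw text before parsing it. The parse_products name is hypothetical.

```python
import json

def parse_products(json_text):
    """Convert autoparsed product records into typed Python values."""
    products = []
    for item in json.loads(json_text):
        products.append({
            "title": item["title"],
            "asin": item["asin"],
            # "4.5 out of 5 stars" -> 4.5
            "rating": float(item["avg_rating"].split()[0]),
            # "7,836" -> 7836
            "reviews": int(item["review_count"].replace(",", "")),
        })
    return products
```

Typed values make it easy to sort the results, for example by review count.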

ZenRows can also replace Selenium since it has rich web scraping functionalities, including anti-bot bypass and JavaScript instructions for dynamic content extraction.

Conclusion

In this article, you've learned four methods of scraping different kinds of paginated websites with Selenium and Python. Now you know:

- How to extract content from a paginated website using the next page link method.

- How to scrape a paginated website by changing the page number in the URL.

- The scrolling technique for scraping content from a website with infinite scrolling.

- How to scrape data hidden behind a "Load more" button.

Remember that you'll often encounter anti-bots while scraping with automated browsers like Selenium. Avoid them with ZenRows, an all-in-one web scraping solution. Try ZenRows for free!
