Are you facing IP bans when scraping with Selenium? We've all been there. Many websites block IPs that make too many requests. But today, you'll learn how to overcome this obstacle using a Selenium proxy in NodeJS.

This step-by-step guide walks you through integrating proxies with Selenium in a NodeJS environment.

How to Use a Proxy in Selenium NodeJS?

Using a proxy in Selenium NodeJS involves setting up the WebDriver to route your requests through a defined proxy server. While there are different ways to achieve this depending on your project requirements, they all revolve around configuring your proxy settings and passing them to the WebDriver instance.

Let's put it into practice.

First, here's a basic Selenium scraper to which you can add proxy configurations.

const { Builder, By } = require('selenium-webdriver');
const firefox = require('selenium-webdriver/firefox');

async function scraper() {
  // set the browser options
  const options = new firefox.Options().addArguments('--headless');

  // initialize the webdriver
  const driver = new Builder()
    .forBrowser('firefox')
    .setFirefoxOptions(options)
    .build();

  try {
    // navigate to target website
    await driver.get('https://httpbin.io/ip');

    // get the page text content
    const pageText = await driver.findElement(By.css('body')).getText();
    console.log(pageText);
  } catch (error) {
    console.error('An error occurred:', error);
  } finally {
    await driver.quit();
  }
}

scraper();

The code snippet above navigates to HTTPBin, a test website that returns the client's IP address, and prints the page content. Since no proxy is configured yet, it returns your machine's IP address, as in the example below.

{
  "origin": "105.XX.YY.ZZZ:60804"
}
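Since HTTPBin returns a JSON object, you can check the result in code instead of reading it by eye. The sketch below uses two hypothetical helpers (extractOriginIp and isUsingProxy are not part of Selenium) that pull the IP out of the origin field and drop the port. It assumes the page text contains the origin field as shown above; because Firefox's JSON viewer may render the key without quotes, the pattern accepts both forms.

```javascript
// Hypothetical helpers for checking which IP address HTTPBin saw.
// Assumption: the page text contains an origin field such as "167.99.174.59:53443".

// Return the IP from the origin field without the port, or null if it is missing.
function extractOriginIp(pageText) {
  // accept both "origin": "..." and origin: "..." (quoted or unquoted key)
  const match = pageText.match(/"?origin"?\s*:\s*"([^"]+)"/);
  if (!match) return null;
  const origin = match[1].trim();
  // httpbin.io appends the client's source port; strip it for IPv4 addresses
  const ipv4 = origin.match(/^(\d{1,3}(?:\.\d{1,3}){3})(?::\d+)?$/);
  return ipv4 ? ipv4[1] : origin;
}

// True when the reported IP matches the host part of a "host:port" proxy string.
// This only holds for simple forward proxies whose exit IP equals their host.
function isUsingProxy(pageText, proxyString) {
  return extractOriginIp(pageText) === proxyString.split(':')[0];
}
```

For example, once your scraper prints pageText through a proxy, isUsingProxy(pageText, proxyString) tells you whether the target saw the proxy's IP. Gateway-style premium proxies usually exit through a different IP than the host you connect to, so there you'd compare against your machine's IP instead.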

If you'd like to polish your scraping skills, check out this guide on web scraping in JavaScript.

Step 1: Use a Proxy in an HTTP Request

Start by importing the proxy module from the Selenium WebDriver package. This enables access to the manual() method for manual proxy configuration.

Then, open an async function and define your proxy details. You can grab a free proxy from Free Proxy List.

// import the required libraries
const proxy = require('selenium-webdriver/proxy');

async function scraper() {
  // define your proxy details
  const proxyString = '167.99.174.59:80';
}
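Because proxy.manual() takes bare host:port strings, a small slip such as a stray http:// prefix or a missing port tends to surface later as a confusing WebDriver error. A minimal sketch of an up-front check follows; parseProxyString is a hypothetical helper, not part of selenium-webdriver.

```javascript
// Parse and validate a "host:port" proxy string before handing it to proxy.manual().
// Hypothetical helper: selenium-webdriver does not validate its input this way.
function parseProxyString(proxyString) {
  const trimmed = proxyString.trim();
  // proxy.manual() expects host:port without a scheme such as "http://"
  if (/^[a-z][a-z0-9+.-]*:\/\//i.test(trimmed)) {
    throw new Error(`Expected host:port without a scheme, got "${proxyString}"`);
  }
  // split on the last colon so bracketed IPv6 hosts like "[::1]:8080" still work
  const sep = trimmed.lastIndexOf(':');
  if (sep <= 0) throw new Error(`Missing port in "${proxyString}"`);
  const host = trimmed.slice(0, sep);
  const port = Number(trimmed.slice(sep + 1));
  if (!Number.isInteger(port) || port < 1 || port > 65535) {
    throw new Error(`Invalid port in "${proxyString}"`);
  }
  return { host, port };
}
```

With the parsed values, you could then pass `${host}:${port}` as both the http and https entries of proxy.manual().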

Next, create a Firefox options object and add the --headless argument. Then, build a new WebDriver instance and pass your proxy settings to the builder's setProxy() method, defining them with proxy.manual().

async function scraper() {
  //...

  // set the browser options
  const options = new firefox.Options().addArguments('--headless');

  // initialize the webdriver
  const driver = new Builder()
    .forBrowser('firefox')
    // add the proxy to the webdriver
    .setProxy(proxy.manual({
      http: proxyString,
      https: proxyString,
    }))
    .setFirefoxOptions(options)
    .build();
}

That's it. You've configured your first Selenium proxy in NodeJS.

Remark: For Chrome, you don't need proxy.manual(). You can achieve the same result by passing your proxy as the --proxy-server command-line argument in your Chrome Options object and initializing a WebDriver instance with those options. Here's an example.

const chrome = require('selenium-webdriver/chrome');

async function scraper() {
  //...

  // pass the proxy to Chrome as a command-line switch
  const options = new chrome.Options().addArguments('--headless', '--proxy-server=http://167.99.174.59:80');

  const driver = new Builder()
    .forBrowser('chrome')
    .setChromeOptions(options)
    .build();
}
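If you read proxies from a list, it can help to build that switch from the same host:port strings used with Firefox. The sketch below is a hypothetical helper; the scheme defaults to http as in the example above. Chrome's --proxy-server switch doesn't support a username and password, so the helper rejects credentials rather than passing them through silently.

```javascript
// Build Chrome's --proxy-server switch from a "host:port" string (a scheme prefix is tolerated).
// Hypothetical helper; the scheme defaults to http, matching the example above.
function chromeProxyArg(proxyString, scheme = 'http') {
  // drop any existing scheme such as "http://"
  const hostPort = proxyString.trim().replace(/^[a-z][a-z0-9+.-]*:\/\//i, '');
  if (hostPort.includes('@')) {
    // credentials aren't supported in this switch; use a local forwarder such as proxy-chain instead
    throw new Error('--proxy-server does not accept a username and password');
  }
  return `--proxy-server=${scheme}://${hostPort}`;
}
```

Usage: new chrome.Options().addArguments('--headless', chromeProxyArg(proxyString)).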

If you add your proxy configuration to the basic script created earlier, your complete code for Firefox should look like this.

// import the required libraries
const { Builder, By } = require('selenium-webdriver');
const firefox = require('selenium-webdriver/firefox');
const proxy = require('selenium-webdriver/proxy');

async function scraper() {
  // define your proxy details
  const proxyString = `167.99.174.59:80`;

  // set the browser options
  const options = new firefox.Options().addArguments('--headless');

  // initialize the webdriver
  const driver = new Builder()
    .forBrowser('firefox')
    // add the proxy to the webdriver
    .setProxy(proxy.manual({
      http: proxyString,
      https: proxyString,
    }))
    .setFirefoxOptions(options)
    .build();

  try {
    // navigate to target website
    await driver.get('https://httpbin.io/ip');
    await driver.sleep(8000);

    // get the page text content
    const pageText = await driver.findElement(By.tagName('body')).getText();
    console.log(pageText);
  } catch (error) {
    console.error('An error occurred:', error);
  } finally {
    await driver.quit();
  }
}

scraper();

For Chrome, import selenium-webdriver/chrome and replace the Firefox options and proxy settings with the Chrome configuration above.

Run it, and it'll return the proxy's IP address.

{
  "origin": "167.99.174.59:53443"
}

Congrats! Everything works.

The example above used a free proxy, but real-world scraping usually calls for premium proxies, and some of them require additional configuration. Let's see how to handle that in Selenium NodeJS.

Proxy Authentication with Selenium NodeJS

Premium proxy providers often use credentials like username and password to control access to their proxy servers. Including these credentials in your proxy settings often requires additional configuration.

Let's see how.

Selenium's proxy settings have no fields for a username and password, so you can't pass an authenticated proxy to proxy.manual() directly. However, you can integrate other libraries or APIs that support this functionality.

For this tutorial, we'll use proxy-chain. This NodeJS library allows you to create a local proxy server that can forward requests to an upstream proxy server, even if it requires authentication.

With this library, you only need to "anonymize" the authenticated proxy and then pass the anonymized proxy to your WebDriver instance.

To get started, install proxy-chain using the following command.

npm i proxy-chain

Next, import the library and define your proxy credentials.

// import required library
const proxyChain = require('proxy-chain');

async function scraper() {
  // define your proxy details (replace with your provider's credentials and host)
  const proxyUsername = 'username';
  const proxyPassword = 'password';
  const ipHost = '<PROXY_HOST>';
  const port = '80';
  const proxyUrl = `http://${proxyUsername}:${proxyPassword}@${ipHost}:${port}`;
}
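If your provider's password contains characters such as @, : or /, the template string above produces a URL that parses incorrectly. A safer sketch percent-encodes the credentials first; buildProxyUrl is a hypothetical helper. proxy-chain parses the upstream URL and, as far as we know, decodes percent-encoded credentials before sending them upstream, but verify this against the version you install.

```javascript
// Build an authenticated proxy URL, percent-encoding the username and password so
// characters such as "@", ":" or "/" in them don't break URL parsing.
function buildProxyUrl({ username, password, host, port, scheme = 'http' }) {
  const user = encodeURIComponent(username);
  const pass = encodeURIComponent(password);
  return `${scheme}://${user}:${pass}@${host}:${port}`;
}
```

Usage: const proxyUrl = buildProxyUrl({ username: proxyUsername, password: proxyPassword, host: ipHost, port });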

After that, anonymize proxyUrl using proxyChain.anonymizeProxy().

The anonymizeProxy() method takes your proxy URL, including the username and password, and starts a local proxy server that requires no authentication and forwards your traffic to the upstream proxy with your credentials. It resolves to the URL of that local server.

However, that URL includes a scheme (for example, http://127.0.0.1:45678), while Selenium's proxy.manual() expects just the host and port. So, you must extract them before passing them to the WebDriver.

async function scraper() {
  //...

  const anonymizedProxy = await proxyChain.anonymizeProxy(proxyUrl);

  // parse the anonymized proxy URL
  const parsedUrl = new URL(anonymizedProxy);

  // extract the host and port
  const proxyHost = parsedUrl.hostname;
  const proxyPort = parsedUrl.port;

  // construct the new proxy string
  const newProxyString = `${proxyHost}:${proxyPort}`;
}
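The snippet above reads parsedUrl.port directly. That works for proxy-chain's local server, which listens on a random high port, but URL.port is an empty string whenever the port is the scheme's default. So a URL such as http://167.99.174.59:80 (which anonymizeProxy() may hand back unchanged when it contains no credentials) would yield "167.99.174.59:". A minimal sketch with a fallback, using the hypothetical helper name toHostPort:

```javascript
// Convert a proxy URL such as "http://127.0.0.1:45678" into the bare "host:port"
// string that proxy.manual() expects.
function toHostPort(proxyUrl) {
  const parsed = new URL(proxyUrl);
  // URL.port is an empty string when the port is the scheme's default (80 for http, 443 for https)
  const defaultPorts = { 'http:': '80', 'https:': '443' };
  const port = parsed.port || defaultPorts[parsed.protocol];
  if (!port) throw new Error(`Cannot determine the port of "${proxyUrl}"`);
  // for IPv6 addresses, hostname keeps the surrounding brackets, e.g. "[::1]"
  return `${parsed.hostname}:${port}`;
}
```

Usage: const newProxyString = toHostPort(anonymizedProxy);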

Once you have your new proxy string, pass it to your WebDriver instance.

async function scraper() {
  //...

  // set the browser options
  const options = new firefox.Options().addArguments('--headless');

  // initialize the webdriver
  const driver = new Builder()
    .forBrowser('firefox')
    // add the proxy to the webdriver
    .setProxy(proxy.manual({
      http: newProxyString,
      https: newProxyString,
    }))
    .setFirefoxOptions(options)
    .build();
}

To verify that it works, integrate it into the basic script from earlier. Your complete code should look like this.

// import the required libraries
const { Builder, By } = require('selenium-webdriver');
const firefox = require('selenium-webdriver/firefox');
const proxyChain = require('proxy-chain');
const proxy = require('selenium-webdriver/proxy');

async function scraper() {
  // define your proxy details (replace with your provider's credentials and host)
  const proxyUsername = 'username';
  const proxyPassword = 'password';
  const ipHost = '<PROXY_HOST>';
  const port = '80';
  const proxyUrl = `http://${proxyUsername}:${proxyPassword}@${ipHost}:${port}`;

  // anonymize proxyUrl: starts a local proxy that forwards to the authenticated one
  const anonymizedProxy = await proxyChain.anonymizeProxy(proxyUrl);

  // parse anonymized proxy URL
  const parsedUrl = new URL(anonymizedProxy);

  // extract the host and port
  const proxyHost = parsedUrl.hostname;
  const proxyPort = parsedUrl.port;

  // construct the new proxy string
  const newProxyString = `${proxyHost}:${proxyPort}`;

  // set the browser options
  const options = new firefox.Options().addArguments('--headless');

  // initialize the webdriver
  const driver = new Builder()
    .forBrowser('firefox')
    // add the proxy to the webdriver
    .setProxy(proxy.manual({
      http: newProxyString,
      https: newProxyString,
    }))
    .setFirefoxOptions(options)
    .build();

  try {
    // navigate to target website
    await driver.get('https://httpbin.io/ip');
    await driver.sleep(8000);

    // get the page text content
    const pageText = await driver.findElement(By.css('body')).getText();
    console.log(pageText);
  } catch (error) {
    console.error('An error occurred:', error);
  } finally {
    await driver.quit();

    // clean up: close the local proxy (identified by the URL anonymizeProxy() returned)
    // and forcibly close all pending connections
    await proxyChain.closeAnonymizedProxy(anonymizedProxy, true);
  }
}

scraper();
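Note that closeAnonymizedProxy() looks up the local server by the exact URL that anonymizeProxy() returned, so it must receive that URL rather than the host:port string; otherwise the server stays open and can keep your script from exiting. One way to make that hard to get wrong is to wrap the whole lifecycle. The sketch below assumes that the injected anonymize and close functions behave like proxy-chain's anonymizeProxy() and closeAnonymizedProxy(); withAnonymizedProxy is a hypothetical helper, and injecting the functions lets you test it without a network.

```javascript
// Run a task through an anonymized (credential-free) local proxy and always shut it down.
// Assumption: `anonymize` and `close` behave like proxy-chain's anonymizeProxy() and
// closeAnonymizedProxy(); they are passed in so the pattern can be tested without a network.
async function withAnonymizedProxy(upstreamUrl, task, { anonymize, close }) {
  // e.g. resolves to "http://127.0.0.1:45678"
  const anonymizedUrl = await anonymize(upstreamUrl);
  try {
    const { protocol, hostname, port } = new URL(anonymizedUrl);
    // fall back to the scheme's default port, since URL.port is empty for defaults
    const hostPort = `${hostname}:${port || (protocol === 'https:' ? '443' : '80')}`;
    // the task gets the bare "host:port" string that proxy.manual() expects
    return await task(hostPort);
  } finally {
    // close with the exact URL returned by anonymize(), forcibly closing open connections
    await close(anonymizedUrl, true);
  }
}
```

With proxy-chain, you'd call withAnonymizedProxy(proxyUrl, async (hostPort) => { /* build the driver with proxy.manual({ http: hostPort, https: hostPort }), scrape, then quit */ }, { anonymize: proxyChain.anonymizeProxy, close: proxyChain.closeAnonymizedProxy }).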

Step 2: Rotate Proxies in Selenium for JavaScript

In most cases, configuring a single proxy isn't enough. To avoid getting blocked, it's essential to rotate between multiple proxies.

Websites often treat a high volume of requests from a single IP as suspicious activity and block that IP. By switching proxies, you distribute traffic across multiple IPs, so your requests appear to come from different users, increasing your chances of avoiding detection.

To rotate Selenium proxies in NodeJS, start by defining your proxy pool. You can grab a few proxies from Free Proxy List for testing.

// import required libraries
const { Builder, By } = require('selenium-webdriver');
const firefox = require('selenium-webdriver/firefox');
const proxy = require('selenium-webdriver/proxy');

// list of proxies
const proxyPool = [
  '136.244.99.51:8888',
  '45.11.95.166:6012',
  '110.34.3.229:3128',
  // add more proxies as needed
];

Next, select a proxy randomly from your proxy pool. Then, set browser options and assign the selected proxy to the WebDriver.

async function scraper() {
  // select a proxy from the pool (named selectedProxy so it doesn't shadow the proxy module)
  const selectedProxy = proxyPool[Math.floor(Math.random() * proxyPool.length)];

  // set browser options
  const options = new firefox.Options().addArguments('--headless');

  // initialize the webdriver
  const driver = new Builder()
    .forBrowser('firefox')
    // add the proxy to the webdriver
    .setProxy(proxy.manual({
      http: selectedProxy,
      https: selectedProxy,
    }))
    .setFirefoxOptions(options)
    .build();
}
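Random selection can pick the same proxy twice in a row. If you pick proxies repeatedly within one process, a variant that skips the last-used proxy avoids that. The sketch below is a hypothetical helper; the random source is injectable so you can test it or swap in a seeded generator.

```javascript
// Pick a random proxy from the pool, skipping the one used last time when possible.
// `random` defaults to Math.random but can be swapped for a seeded or fake generator.
function pickProxy(pool, lastUsed = null, random = Math.random) {
  if (pool.length === 0) throw new Error('Proxy pool is empty');
  const others = pool.filter((p) => p !== lastUsed);
  // fall back to the whole pool if every entry equals lastUsed (e.g. a one-proxy pool)
  const candidates = others.length > 0 ? others : pool;
  return candidates[Math.floor(random() * candidates.length)];
}
```

Usage: const selectedProxy = pickProxy(proxyPool, previousProxy); where previousProxy is whatever you used for the prior request. Across separate runs of the script, you'd need to persist that value yourself.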

All that's left is to make the request as in the basic script. Your full code should look like this.

// import required libraries
const { Builder, By } = require('selenium-webdriver');
const firefox = require('selenium-webdriver/firefox');
const proxy = require('selenium-webdriver/proxy');

// list of proxies
const proxyPool = [
  '136.244.99.51:8888',
  '45.11.95.166:6012',
  '110.34.3.229:3128',
  // add more proxies as needed
];

async function scraper() {
  // select a proxy from the pool
  const selectedProxy = proxyPool[Math.floor(Math.random() * proxyPool.length)];

  // set browser options
  const options = new firefox.Options().addArguments('--headless');

  // initialize the webdriver
  const driver = new Builder()
    .forBrowser('firefox')
    // add the proxy to the webdriver
    .setProxy(proxy.manual({
      http: selectedProxy,
      https: selectedProxy,
    }))
    .setFirefoxOptions(options)
    .build();

  try {
    // navigate to target website
    await driver.get('https://httpbin.io/ip');
    await driver.sleep(8000);

    // get the page text content
    const pageText = await driver.findElement(By.css('body')).getText();
    console.log(pageText);
  } catch (error) {
    console.error('An error occurred:', error);
  } finally {
    await driver.quit();
  }
}

scraper();

Run this code multiple times, and the IP address should change between runs. Since each run picks a proxy at random, the same one can occasionally come up twice in a row. Here are the results for two requests.

{
  "origin": "136.244.99.51:8888"
}

//...

{
  "origin": "110.34.3.229:3128"
}

Congrats! You've built your first Selenium proxy rotator in NodeJS.
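Each run of the script above starts fresh and picks at random, so nothing guarantees an even spread. When you scrape several pages within one process, a round-robin rotator distributes requests evenly across the pool, which is the goal of rotation in the first place. createRoundRobin is a hypothetical helper, shown as a minimal sketch.

```javascript
// Round-robin proxy rotation: each call returns the next proxy in the pool and
// wraps around at the end, so requests are spread evenly across all proxies.
function createRoundRobin(pool) {
  if (pool.length === 0) throw new Error('Proxy pool is empty');
  let index = 0;
  return function nextProxy() {
    const current = pool[index];
    index = (index + 1) % pool.length;
    return current;
  };
}
```

Usage: create it once with const nextProxy = createRoundRobin(proxyPool); and call nextProxy() each time you build a new WebDriver.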

Now, let's test your proxy rotator in a real-world scenario against a protected website: a G2 product review page.

For that, replace the test target URL with https://www.g2.com/products/salesforce-salesforce-sales-cloud/reviews. Run your code, and the request gets blocked, returning an access-denied page like the one below (shown as HTML).

<!DOCTYPE html>
<!-- ... -->
</head>
<body>
<div ...>
<h1 data-translate="block_headline">Sorry, you have been blocked</h1>
<h2 class="cf-subheadline">
<span data-translate="unable_to_access">You are unable to access</span> g2.com/...
</h2>
<!-- ... -->

The result above reaffirms that free proxies are unreliable and only suitable for testing.
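Retrying won't get free proxies past a site like G2, but while you're testing, it does let a rotator skip dead or blocked proxies instead of failing on the first one. The sketch below assumes a hypothetical scrapeWith(proxyString) function, for example the scraper above refactored to accept a proxy and return the page text. The block markers come from the block page shown above and are only a heuristic.

```javascript
// Text fragments from the block page above; a heuristic, not a reliable block detector.
const BLOCK_MARKERS = ['Sorry, you have been blocked', 'You are unable to access'];

function looksBlocked(pageText) {
  return BLOCK_MARKERS.some((marker) => pageText.includes(marker));
}

// Try each proxy in turn until one returns a page that doesn't look blocked.
// `scrapeWith(proxyString)` is a hypothetical async function that returns page text.
async function scrapeWithRetries(pool, scrapeWith, maxAttempts = pool.length) {
  const errors = [];
  for (let attempt = 0; attempt < Math.min(maxAttempts, pool.length); attempt++) {
    const proxyString = pool[attempt];
    try {
      const text = await scrapeWith(proxyString);
      if (!looksBlocked(text)) return { proxyString, text };
      errors.push(`${proxyString}: blocked`);
    } catch (error) {
      // dead proxies often fail with timeouts or connection errors
      errors.push(`${proxyString}: ${error.message}`);
    }
  }
  throw new Error(`All attempts failed:\n${errors.join('\n')}`);
}
```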

Step 3: Get a Residential Proxy to Avoid Getting Blocked

As shown above, free proxies are impractical for real-life scenarios, whereas premium proxies provide greater reliability and evasion capabilities.

Check out our list of the best web scraping proxies that integrate with Selenium in NodeJS.

While Selenium lets you emulate browser interactions, anti-bot systems can still block you, even with proxies.

This happens because Selenium exposes automation indicators, such as the navigator.webdriver property, that anti-bot scripts can easily detect. Although tools like Undetected ChromeDriver can hide some of these properties, they often prove ineffective against advanced anti-bot protection measures.
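You can see this flag for yourself. The WebDriver specification has browsers report navigator.webdriver as true while they're under automation, and a script running in the page can read it. The sketch below assumes driver is a selenium-webdriver WebDriver that has already loaded a page; webdriverFlagExposed is a hypothetical helper built on driver.executeScript().

```javascript
// Check whether the page can see the navigator.webdriver automation flag.
// Assumption: `driver` is a selenium-webdriver WebDriver that has already loaded a page;
// executeScript() runs the snippet inside that page and resolves with its return value.
async function webdriverFlagExposed(driver) {
  const flag = await driver.executeScript('return navigator.webdriver === true;');
  return flag === true;
}
```

Calling it right after driver.get('https://httpbin.io/ip') in the scraper above should return true for a standard Selenium-driven browser, which is exactly what anti-bot scripts look for.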

Additionally, Selenium is resource-intensive and difficult to scale, especially for large-scale web scraping.

Luckily, ZenRows offers the ultimate solution. It's a web scraping API that provides the complete toolkit to scrape without getting blocked, including auto-rotating premium proxies and user agents, anti-CAPTCHAs, geolocation, etc.

Additionally, ZenRows offers headless browser functionality, like Selenium, but without the additional infrastructure overhead. It's also much easier to scale and cost-effective, as you only pay for successful requests.

Let's test ZenRows against the same protected website that blocked Selenium.

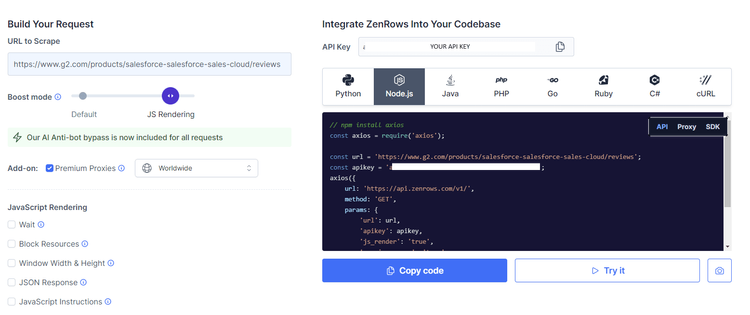

To get started, sign up for free, and you'll be directed to the Request Builder page.

Paste your target URL (https://www.g2.com/products/salesforce-salesforce-sales-cloud/reviews), select the anti-bot boost mode, and check the box for Premium Proxies to rotate residential proxies automatically. Then, select NodeJS as the language to generate your request code on the right.

Although ZenRows suggests Axios, you can use any NodeJS HTTP client you choose.
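For instance, here's a minimal sketch that sends the same request with Node's built-in fetch (available from Node 18) instead of axios. It uses only the four parameters that appear in the generated code below; buildZenRowsUrl and fetchWithZenRows are hypothetical helper names.

```javascript
// Build the ZenRows API request URL with the same parameters as the generated axios code.
function buildZenRowsUrl(targetUrl, apiKey) {
  const params = new URLSearchParams({
    url: targetUrl,
    apikey: apiKey,
    js_render: 'true',
    premium_proxy: 'true',
  });
  return `https://api.zenrows.com/v1/?${params}`;
}

// Fetch the page through ZenRows using Node's built-in fetch (Node 18+).
async function fetchWithZenRows(targetUrl, apiKey) {
  const response = await fetch(buildZenRowsUrl(targetUrl, apiKey));
  if (!response.ok) throw new Error(`ZenRows request failed with status ${response.status}`);
  return response.text();
}
```

URLSearchParams takes care of encoding the target URL, which is what axios does for you with its params option.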

Copy the generated code to your favorite editor. Your new script should look like this.

// npm install axios
const axios = require('axios');

const url = 'https://www.g2.com/products/salesforce-salesforce-sales-cloud/reviews';

axios({
  url: 'https://api.zenrows.com/v1/',
  method: 'GET',
  params: {
    'url': url,
    'apikey': '<YOUR_ZENROWS_API_KEY>',
    'js_render': 'true',
    'premium_proxy': 'true',
  },
})
  .then(response => console.log(response.data))
  .catch(error => console.log(error));

Run it, and you'll get the page's HTML content.

<!DOCTYPE html>
<!-- ... -->
<title id="icon-label-55be01c8a779375d16cd458302375f4b">G2 - Business Software Reviews</title>
<!-- ... -->
<h1 ...id="main">Where you go for software.</h1>

Awesome, right? ZenRows makes it easy to bypass anti-bot protection.

Conclusion

Proxies are valuable tools for avoiding IP bans and rate limiting. Here's a quick recap of how to configure a Selenium proxy in NodeJS:

- Define your proxy details and, if required, credentials.
- Pass them to the WebDriver instance.
- Rotate between proxies to increase your chances of avoiding detection.

While these steps may be enough for testing, they don't work against advanced anti-bot systems. So, try ZenRows now to save yourself time and effort and ensure you reach your scraping goals.

Did you find the content helpful? Spread the word and share it on Twitter or LinkedIn.