Would you like to learn how to take screenshots while scraping with JavaScript?

In this article, you'll learn how to do it using seven different tools:

1. Puppeteer.
2. ZenRows.
3. Playwright.
4. Selenium.
5. HTML2Canvas.
6. Electron.
7. Screenshot API.

Before going into detail, let's compare all the solutions:

| | Puppeteer | ZenRows | Playwright | Selenium | HTML2Canvas | Electron | Screenshot API |
|---|---|---|---|---|---|---|---|
| Main purpose | Automation testing | Bypassing CAPTCHAs and other anti-bot systems at scale | Automation testing | Automation testing | Mainly for screenshotting a web page within the browser environment | Creating desktop applications with HTML, CSS, and JavaScript | Screenshotting web pages |
| Pros | Easy to use and good for screenshotting any part of a web page (including full-page capture) | Bypasses any anti-bot system, easy to use, supports full-page screenshots, works with any programming language | Beginner-friendly, supports many languages, good for full-page screenshots | Cross-platform with support for many languages and browsers | Dedicated to taking screenshots | Rapid execution of screenshot actions | Saves time and effort |
| Cons | Limited to JavaScript | Requires an API key | Downloading multiple browsers takes up disk space | WebDriver setup is complex and requires extra steps for full-page screenshots | Operates within a browser context and isn't a web scraping tool | Has a steep learning curve and requires an application instance for screenshots; not a web scraping tool | Costly |
| Ideal use cases | Browser automation, dynamic web scraping, taking screenshots | Avoiding blocks while scraping, scraping dynamic content, grabbing screenshots | Browser automation, dynamic content scraping | Browser automation, web scraping | Taking in-page screenshots | Desktop applications | Taking screenshots |
| Ease of use | Easy | Easy | Easy | Difficult | Difficult | Difficult | Easy |
| Popularity | High | Moderate | Moderate | High | High | High | High |

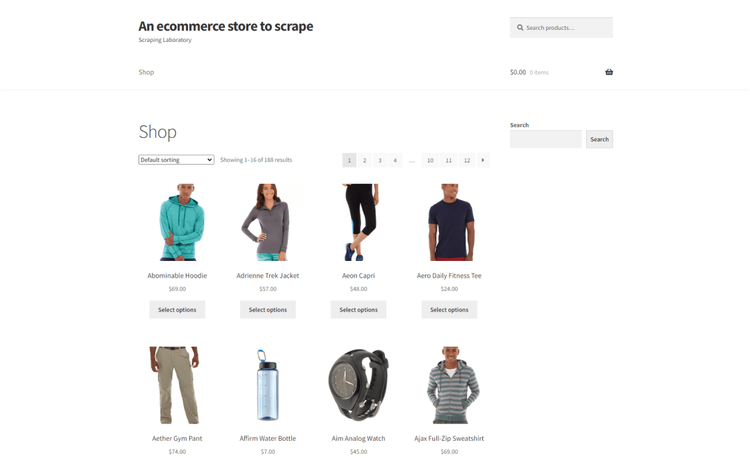

Below, you'll find a description of each tool and code snippets you can run to test it yourself. For learning purposes, you'll take screenshots of ScrapingCourse.com.

1. Puppeteer

Puppeteer, a Node.js library that provides a high-level API to control Chrome/Chromium over the DevTools Protocol, is straightforward and beginner-friendly. It lets you screenshot a web page with a single line of code.

Puppeteer lets you take full-page screenshots or capture specific elements and the visible part of the page. Still, Puppeteer's major weaknesses are its limitation to JavaScript and its limited cross-browser support.

Here's the code to get a full-page screenshot with Puppeteer. Test it yourself:

```javascript
// import the required library
const puppeteer = require("puppeteer");

(async () => {
  // start a browser instance in headless mode and open a new page
  const browser = await puppeteer.launch({ headless: true });
  const page = await browser.newPage();

  // visit the product page
  await page.goto("https://scrapingcourse.com/ecommerce/");

  // wait 10 seconds so dynamic elements have time to load
  await new Promise((resolve) => setTimeout(resolve, 10000));

  // take a screenshot of the full page
  await page.screenshot({ path: "puppeteer-screenshot.png", fullPage: true });

  // close the browser
  await browser.close();
})();
```
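Puppeteer can also crop a screenshot to a single element, as mentioned above. The sketch below is a minimal example, assuming `page` is the Puppeteer `Page` from the previous snippet; the helper name `screenshotElement` and the `.products` selector in the usage comment are hypothetical, so swap in a selector that exists on your target page.

```javascript
// capture one element instead of the whole page
// `page` is assumed to be a Puppeteer Page, e.g. from browser.newPage()
async function screenshotElement(page, selector, path) {
  // resolves with an ElementHandle once the selector matches an element;
  // it rejects with a TimeoutError if nothing matches in time, so the
  // null check below is only a defensive guard
  const element = await page.waitForSelector(selector);
  if (!element) {
    throw new Error(`Element not found: ${selector}`);
  }
  // ElementHandle.screenshot() crops the image to the element's bounding box
  return element.screenshot({ path });
}

// usage inside the async function above, before browser.close():
// await screenshotElement(page, ".products", "products.png");
```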

Still, using Puppeteer alone won't help you avoid blocks when screenshotting protected pages such as G2. Let's look at a solution that will help you bypass any anti-bot system automatically.

2. ZenRows

ZenRows is an all-in-one web scraping solution. It supports JavaScript rendering and JavaScript instructions for extracting dynamic content, plus a simple screenshot parameter for grabbing full-page screenshots.

Unlike most free solutions, ZenRows lets you bypass any anti-bot detection and capture any web page without getting blocked.

To take a screenshot with ZenRows, sign up and grab your API key. The code below takes a full-page screenshot with ZenRows, using Axios as the HTTP client:

```javascript
// import the required modules
const axios = require("axios");
const fs = require("fs");

axios({
  url: "https://api.zenrows.com/v1/",
  method: "GET",
  // specify your request parameters
  params: {
    url: "https://scrapingcourse.com/ecommerce/",
    apikey: "<YOUR_ZENROWS_API_KEY>",
    js_render: "true",
    premium_proxy: "true",
    return_screenshot: "true",
  },
  // set the response type to stream so the image can be piped to a file
  responseType: "stream",
})
  .then(function (response) {
    // write the image file to your project directory
    const writer = fs.createWriteStream("screenshot-from-zenrows.png");
    response.data.pipe(writer);

    writer.on("finish", () => {
      console.log("Image saved successfully.");
    });
    writer.on("error", (error) => {
      console.error("Error writing image:", error);
    });
  })
  .catch(function (error) {
    console.error("Error occurred:", error);
  });
```

You now know how to take a full-page screenshot with ZenRows.
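If you'd rather not add Axios as a dependency, the same request can be sent with the `fetch` function built into Node.js 18 and later. This is a sketch that reuses only the parameters from the example above; the helper names `buildZenRowsUrl` and `saveZenRowsScreenshot` are illustrative and not part of any ZenRows SDK.

```javascript
const fs = require("fs");

// build the ZenRows request URL from the same parameters as the Axios example
function buildZenRowsUrl(targetUrl, apiKey) {
  const params = new URLSearchParams({
    url: targetUrl,
    apikey: apiKey,
    js_render: "true",
    premium_proxy: "true",
    return_screenshot: "true",
  });
  return `https://api.zenrows.com/v1/?${params}`;
}

// download the screenshot with Node's built-in fetch (Node.js 18+)
async function saveZenRowsScreenshot(targetUrl, apiKey, filePath) {
  const response = await fetch(buildZenRowsUrl(targetUrl, apiKey));
  if (!response.ok) {
    throw new Error(`ZenRows request failed with HTTP ${response.status}`);
  }
  // the response body is the image itself, so write the raw bytes to disk
  const image = Buffer.from(await response.arrayBuffer());
  await fs.promises.writeFile(filePath, image);
  return image.length;
}

// usage:
// saveZenRowsScreenshot("https://scrapingcourse.com/ecommerce/", "<YOUR_ZENROWS_API_KEY>", "screenshot-from-zenrows.png")
//   .then((bytes) => console.log(`Saved ${bytes} bytes`))
//   .catch(console.error);
```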

3. Playwright

Playwright is Microsoft's open-source browser automation library. Its screenshot feature is easy to implement and syntactically resembles Puppeteer. Playwright also supports multiple browser engines (Chromium, which covers Chrome and Edge, as well as Firefox and WebKit) and works in other languages, including Java, Python, and C#.

One major limitation of Playwright is that it downloads multiple browsers by default unless you install only the ones you need (for example, with `npx playwright install chromium`), which takes up a lot of disk space.

Here's how to take a screenshot with Playwright in JavaScript:

```javascript
// import the required library
const { chromium } = require("playwright");

(async () => {
  // start a browser instance
  const browser = await chromium.launch();
  const page = await browser.newPage();

  // navigate to the desired URL
  await page.goto("https://scrapingcourse.com/ecommerce/");

  // take a screenshot of the full page
  await page.screenshot({ path: "playwright-screenshot.png", fullPage: true });

  // close the browser
  await browser.close();
})();
```

It's this simple!
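Because Playwright drives several engines through the same API, one loop can screenshot a page in each of them. The sketch below assumes you pass in the Playwright module itself (`require("playwright")`, which exposes `chromium`, `firefox`, and `webkit`) and that every engine you list is installed; the helper name `screenshotInEngines` and the output file names are illustrative.

```javascript
// take the same full-page screenshot in several browser engines
// `browserTypes` is assumed to be the Playwright module, i.e. require("playwright")
async function screenshotInEngines(browserTypes, url, engines = ["chromium", "firefox", "webkit"]) {
  const savedFiles = [];
  for (const engine of engines) {
    const browser = await browserTypes[engine].launch();
    try {
      const page = await browser.newPage();
      await page.goto(url);
      const path = `playwright-${engine}-screenshot.png`;
      await page.screenshot({ path, fullPage: true });
      savedFiles.push(path);
    } finally {
      // close each browser even if navigation or the screenshot fails
      await browser.close();
    }
  }
  return savedFiles;
}

// usage (each engine must be installed, e.g. with `npx playwright install`):
// screenshotInEngines(require("playwright"), "https://scrapingcourse.com/ecommerce/")
//   .then((files) => console.log(files));
```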

4. Selenium

Selenium is one of the most popular browser automation libraries, with strong dynamic web scraping capabilities. It has a built-in method for screenshotting content within the viewport. However, full-page screenshots require extra steps, such as measuring the page and resizing the browser window to match, and this approach generally works reliably only in headless mode, making Selenium less beginner-friendly.

Although Selenium supports many browsers and programming languages, its WebDriver setup is technically challenging.

Here's the code to take a full-page screenshot with Selenium:

```javascript
// import the required libraries
const fs = require("fs");
const { Builder, By } = require("selenium-webdriver");
const chrome = require("selenium-webdriver/chrome");

// define an async function to encapsulate the Selenium code
async function scraper() {
  // create a new WebDriver instance using Chrome in headless mode
  const driver = await new Builder()
    .forBrowser("chrome")
    .setChromeOptions(new chrome.Options().addArguments("--headless"))
    .build();

  try {
    // open the target website
    await driver.get("https://scrapingcourse.com/ecommerce/");

    // read a scroll dimension ("Width" or "Height") of the root element
    async function getScrollDimension(axis) {
      return driver.executeScript(`return document.body.parentNode.scroll${axis}`);
    }

    // get the page scroll dimensions
    const width = await getScrollDimension("Width");
    const height = await getScrollDimension("Height");

    // resize the browser window to fit the whole page
    await driver.manage().window().setRect({ width, height });

    // screenshot the body element, which now spans the full page
    const fullBodyElement = await driver.findElement(By.css("body"));
    const data = await fullBodyElement.takeScreenshot();
    fs.writeFileSync("selenium-full-page-screenshot.png", data, "base64");
  } finally {
    // quit the browser
    await driver.quit();
  }
}

// call the scraper function and report any errors
scraper().catch(console.error);
```
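`takeScreenshot()` resolves to a base64-encoded PNG string, which the example above writes straight to disk. A slightly more defensive sketch decodes the string first and checks the standard 8-byte PNG signature before saving, so a failed capture doesn't silently leave a corrupt file. The helper name `saveBase64Png` is illustrative.

```javascript
const fs = require("fs");

// the 8-byte signature that every PNG file starts with
const PNG_SIGNATURE = Buffer.from([0x89, 0x50, 0x4e, 0x47, 0x0d, 0x0a, 0x1a, 0x0a]);

// decode the base64 string returned by Selenium's takeScreenshot()
// and write it to disk only if it really is a PNG image
function saveBase64Png(base64Data, filePath) {
  const image = Buffer.from(base64Data, "base64");
  if (!image.subarray(0, PNG_SIGNATURE.length).equals(PNG_SIGNATURE)) {
    throw new Error("Screenshot data is not a PNG image");
  }
  fs.writeFileSync(filePath, image);
  return image.length;
}

// usage inside scraper(), replacing the writeFileSync() call:
// saveBase64Png(await fullBodyElement.takeScreenshot(), "selenium-full-page-screenshot.png");
```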

5. HTML2Canvas

html2canvas is a dedicated JavaScript screenshot library. It runs inside the page itself, rebuilding an image from the DOM and CSS rather than capturing real pixels, which makes it unsuitable for taking screenshots during web scraping.

html2canvas renders the screenshot into a canvas element, which you can then display directly in the DOM.

The sample code below assumes you can access a web page's DOM directly. Inside a dedicated function, it uses html2canvas to capture the body element and append the result to a div with the ID `screenshot`. You can attach this function to an event listener in your website's DOM:

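```javascript
// screenshot the page body and append the result to an output element
// assumes html2canvas is loaded on the page and an element with id="screenshot" exists
function takeshot() {
  // select the body element so the whole body content is captured
  const target = document.body;

  // render the element into a canvas, then append the canvas to the output div
  html2canvas(target).then(function (canvas) {
    document.getElementById("screenshot").appendChild(canvas);
  });
}
```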
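To save the capture as a file instead of displaying it, you can serialize the canvas with `toDataURL()` and trigger a download through a temporary link. This is a browser-side sketch under the same assumptions as `takeshot()` (html2canvas is already loaded on the page); the `downloadCanvas` name and the default file name are illustrative.

```javascript
// save an html2canvas result as a PNG download instead of appending it to the page
// runs in the browser; `doc` defaults to the current page's document
function downloadCanvas(canvas, filename = "html2canvas-screenshot.png", doc = globalThis.document) {
  const link = doc.createElement("a");
  // toDataURL() encodes the canvas pixels as a base64 PNG data URL
  link.href = canvas.toDataURL("image/png");
  // the download attribute tells the browser to save the target as a file
  link.download = filename;
  // attach, click, and detach: some browsers have ignored clicks on detached links
  doc.body.appendChild(link);
  link.click();
  link.remove();
  return link;
}

// usage:
// html2canvas(document.body).then((canvas) => downloadCanvas(canvas));
```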

6. Electron

Electron is a JavaScript framework for creating desktop applications. Each application window embeds a Chromium-based browser that can load web pages, and Electron provides a screenshot method for capturing the window's contents.

You can take a screenshot of any web page by loading it inside an Electron application and using the page capture method. However, Electron's major drawbacks are its steep learning curve and complex setup.

To use Electron for screenshots, you need an Electron project: install the `electron` package with npm, set `main.js` as the entry point in your package.json, and start the app with `npx electron .` from the project folder. Electron's documentation explains the setup in detail.

Once the project is set up, paste the following code into your main.js file to grab a screenshot of the target page:

```javascript
// import the required libraries
const { app, BrowserWindow } = require("electron");
const fs = require("fs");
const path = require("path");

function createWindow() {
  // create a new desktop window
  const win = new BrowserWindow({
    width: 800,
    height: 1500,
  });

  // get the window's web contents
  const contents = win.webContents;

  // wait for the page to finish loading, then take a screenshot
  contents.on("did-finish-load", async () => {
    // capture the target page's screenshot
    const image = await contents.capturePage();

    // specify the custom file path and make sure its folder exists
    const filePath = "pictures/screenshot.png";
    fs.mkdirSync(path.dirname(filePath), { recursive: true });

    // write the screenshot into the file path
    fs.writeFile(filePath, image.toPNG(), (err) => {
      if (err) {
        console.error("Error saving screenshot:", err);
      } else {
        console.log("Screenshot saved to:", filePath);
      }
    });
  });

  // load the target page into the desktop application
  win.loadURL("https://scrapingcourse.com/ecommerce/");
}

// create a window only when the app is ready
app.whenReady().then(createWindow);

// quit the app once all windows are closed (except on macOS)
app.on("window-all-closed", () => {
  if (process.platform !== "darwin") {
    app.quit();
  }
});

// on macOS, re-create a window when the app is activated and none are open
app.on("activate", () => {
  if (BrowserWindow.getAllWindows().length === 0) {
    createWindow();
  }
});
```

The code above opens the target web page via an Electron desktop application, grabs its screenshot, and saves it in the specified directory.
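`capturePage()` only records what fits inside the window, so the 800x1500 window above crops longer pages. One approach, mirroring the Selenium example, is to read the page's scroll height and enlarge the window before capturing. This is a sketch, not a guaranteed full-page method: some operating systems cap a visible window at the screen size, and the settle delay is an assumed value you may need to tune. The helper name `captureFullPage` is illustrative.

```javascript
// resize the window to the page's full height before capturing, so
// capturePage() is not limited to the initial window height
// `win` is assumed to be an Electron BrowserWindow whose page has finished loading
async function captureFullPage(win, settleMs = 500) {
  const contents = win.webContents;
  // executeJavaScript() resolves with the value of the evaluated expression
  const fullHeight = await contents.executeJavaScript("document.documentElement.scrollHeight");
  const [width] = win.getContentSize();
  win.setContentSize(width, fullHeight);
  // give the renderer time to re-layout at the new size (assumed delay)
  await new Promise((resolve) => setTimeout(resolve, settleMs));
  return contents.capturePage();
}

// usage inside the did-finish-load handler, replacing contents.capturePage():
// const image = await captureFullPage(win);
```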

7. Screenshot API

A screenshot API provides an endpoint you can integrate into your application or web scraper. Screenshot APIs are easier to use than headless browsers, and they work with any programming language. However, they're usually costly.

URL2PNG, Stillio, and Urlbox are great examples of screenshot APIs suitable for capturing web pages.
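Most screenshot APIs follow the same pattern: you send the target URL and options as query parameters and receive image bytes in the response. The sketch below shows that pattern with Node's built-in `fetch`; the endpoint `https://screenshot-api.example.com/v1/capture`, its parameter names, and the helper name `fetchScreenshot` are hypothetical, so check your provider's documentation for the real ones.

```javascript
// generic sketch for a screenshot API that returns image bytes over HTTP GET
// the endpoint and parameter names passed in are hypothetical placeholders
async function fetchScreenshot(endpoint, params, fetchImpl = globalThis.fetch) {
  const url = `${endpoint}?${new URLSearchParams(params)}`;
  const response = await fetchImpl(url);
  if (!response.ok) {
    throw new Error(`Screenshot API returned HTTP ${response.status}`);
  }
  // reject error pages or JSON bodies that aren't images
  const contentType = response.headers.get("content-type") || "";
  if (!contentType.startsWith("image/")) {
    throw new Error(`Expected an image, got "${contentType}"`);
  }
  return Buffer.from(await response.arrayBuffer());
}

// usage (hypothetical endpoint and parameters):
// fetchScreenshot("https://screenshot-api.example.com/v1/capture", {
//   url: "https://scrapingcourse.com/ecommerce/",
//   full_page: "true",
// }).then((image) => require("fs").writeFileSync("api-screenshot.png", image));
```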

Conclusion

In this article, you've learned how to take screenshots in JavaScript using seven different tools.

Puppeteer, Playwright, and Selenium are the best choices when taking screenshots of unprotected websites. When screenshotting at scale, you're better off with a web scraping API such as ZenRows, which will let you bypass any anti-bot mechanism. Try ZenRows for free!
