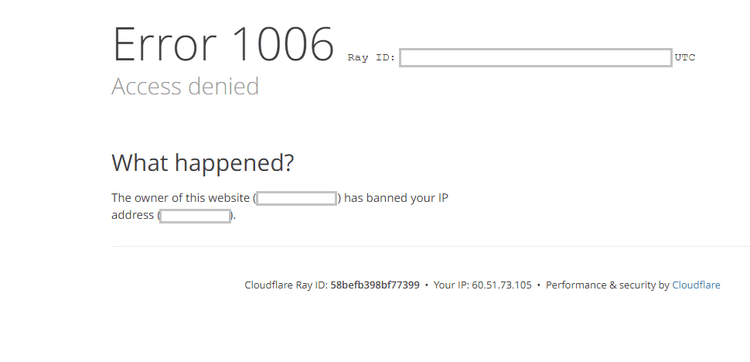

What Are Cloudflare Errors 1006, 1007, and 1008: Access Denied

Cloudflare Errors 1006, 1007, and 1008 mean that traffic from your IP address has been blocked: the site owner has banned your IP through Cloudflare. That often happens when you scrape a website with tools that are easy to identify as bots. Cloudflare treats your requests as unwanted automated traffic and denies you access.
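If you scrape programmatically, it helps to recognize this block page so your code can react (for example, by switching IPs) instead of parsing an error page as data. Below is a heuristic sketch, not an official detection method. It assumes the block page is served with HTTP 403 and contains the word "Error" followed by the code, possibly with HTML tags in between. The helper name `cloudflare_ban_code` is hypothetical.

```python
import re
from typing import Optional

# Heuristic (assumption): the ban page shows "Error" followed by 1006, 1007, or 1008,
# possibly split across tags such as <span>Error</span><span>1006</span>.
_CF_BAN_PATTERN = re.compile(r"Error(?:\s|<[^>]*>)*(100[678])\b")


def cloudflare_ban_code(status: int, body: str) -> Optional[int]:
    """Return 1006, 1007, or 1008 if the response looks like a Cloudflare IP ban, else None."""
    # Assumption: these block pages are served with HTTP 403.
    if status != 403:
        return None
    match = _CF_BAN_PATTERN.search(body)
    return int(match.group(1)) if match else None
```

For instance, `cloudflare_ban_code(403, html)` returns `1006` when `html` contains the 1006 block page, and `None` for a normal page.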

Let's see how to fix these errors!

How to Fix Cloudflare Errors 1006, 1007, and 1008

You can fix Cloudflare Errors 1006, 1007, and 1008 by mimicking human behavior. Here are three ways to fly under the radar:

1. Get Premium Proxies

Proxies are essential web scraping tools because they act as intermediaries between you and your target web server: the proxy receives your requests and forwards them from its own IP address, so the site sees the proxy's IP instead of your banned one.
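As a minimal sketch using only Python's standard library, here's how a scraper can route its requests through a proxy with `urllib.request.ProxyHandler`. The proxy address and the helper names `build_proxied_opener` and `fetch` are hypothetical placeholders; substitute the host, port, and credentials your provider gives you.

```python
import urllib.request

# Hypothetical proxy endpoint: replace with your provider's host, port, and credentials.
PROXY_URL = "http://user:pass@proxy.example.com:8080"


def build_proxied_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Return an opener that sends both HTTP and HTTPS traffic through proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


def fetch(url: str, proxy_url: str = PROXY_URL, timeout: float = 15.0) -> str:
    """Download url via the proxy, so the target server sees the proxy's IP, not yours."""
    opener = build_proxied_opener(proxy_url)
    with opener.open(url, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")
```

Usage: `html = fetch("https://example.com")`. Credentials embedded in the proxy URL are sent by `urllib` as basic proxy authentication.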

However, not all proxies are equal. Free proxies are unreliable and easy to detect, so it's worth using a premium provider: premium proxies offer stable connections and are far less likely to be flagged as bot traffic.

We recommend residential proxies, which route your requests through IP addresses that internet service providers assign to real devices. That way, your traffic looks like a regular user's, and you're less likely to trigger errors 1006, 1007, and 1008 from Cloudflare.
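A ban on one IP matters less when each request can leave through a different one. The sketch below rotates through a pool of residential proxies in round-robin order and moves to the next IP whenever a request is answered with HTTP 403. The endpoints and the names `ProxyRotator` and `fetch_with_rotation` are hypothetical, and treating 403 as the ban signal is an assumption.

```python
import itertools
import urllib.error
import urllib.request

# Hypothetical residential proxy endpoints: replace them with the ones your provider issues.
RESIDENTIAL_PROXIES = [
    "http://user:pass@res-1.proxy.example.com:8000",
    "http://user:pass@res-2.proxy.example.com:8000",
    "http://user:pass@res-3.proxy.example.com:8000",
]


class ProxyRotator:
    """Hands out proxies in round-robin order so consecutive requests use different IPs."""

    def __init__(self, proxies: list[str]) -> None:
        if not proxies:
            raise ValueError("at least one proxy is required")
        self._cycle = itertools.cycle(proxies)

    def next_proxy(self) -> str:
        return next(self._cycle)


def fetch_with_rotation(url: str, rotator: ProxyRotator, attempts: int = 3) -> str:
    """Fetch url, switching to the next proxy whenever a request is answered with HTTP 403."""
    last_error = None
    for _ in range(attempts):
        proxy = rotator.next_proxy()
        opener = urllib.request.build_opener(
            urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        )
        try:
            with opener.open(url, timeout=15) as response:
                return response.read().decode("utf-8", errors="replace")
        except urllib.error.HTTPError as err:
            # Assumption: the ban page comes back as HTTP 403, so any other
            # HTTP error is re-raised instead of being retried on a new IP.
            if err.code != 403:
                raise
            last_error = err
    raise RuntimeError(f"all {attempts} attempts were blocked") from last_error
```

Usage: `html = fetch_with_rotation("https://example.com", ProxyRotator(RESIDENTIAL_PROXIES))`. Many residential providers also expose a single rotating gateway that changes the exit IP for you; in that case, the pool shrinks to one entry.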

Check out our guide on web scraping proxy services to learn about the best proxy types and providers.

2. Rotate User Agents

HTTP headers play a crucial role in client-server communication. They're sent alongside requests to give the web server additional context, such as the content type, cookies, and the User Agent.

Among all the headers, the User Agent (UA) string matters most for web scraping because it tells the web server which client is making the request. Websites use this information to detect and block automated traffic, so a non-browser UA, such as the default one an HTTP library sends, quickly identifies you as a bot.
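To see how a default UA gives a scraper away, here's a quick check with Python's standard `urllib`, which labels every request with a `Python-urllib/<version>` User Agent unless you override it. The Chrome UA string is only an example.

```python
import urllib.request

# Every opener built by urllib starts with a default ("User-agent", "Python-urllib/<version>") header.
default_opener = urllib.request.build_opener()
print(dict(default_opener.addheaders)["User-agent"])  # Python-urllib/ followed by your Python version

# Example Chrome-on-Windows UA string. A header set on the Request takes precedence
# over the opener's default when the request is sent.
BROWSER_UA = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36"
)
request = urllib.request.Request("https://example.com", headers={"User-Agent": BROWSER_UA})
# urllib stores header names with str.capitalize(), hence "User-agent".
print(request.get_header("User-agent"))  # the browser UA above
```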

You can avoid these Cloudflare errors by rotating real browser User Agents so your requests appear to come from different users or devices. Just make sure the UA strings are well formed; otherwise, your bot will be easy to detect.
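Here's a minimal rotation sketch with Python's standard library: each call to the hypothetical `build_request` helper picks a UA at random from a small list of well-formed desktop browser strings. The list and the extra `Accept` and `Accept-Language` values are illustrative assumptions; in practice, you'd keep a larger pool and refresh the browser versions regularly.

```python
import random
import urllib.request
from typing import Optional

# Illustrative, well-formed desktop UA strings (Chrome/Windows, Safari/macOS, Firefox/Linux).
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/17.4 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:125.0) Gecko/20100101 Firefox/125.0",
]


def build_request(url: str, rng: Optional[random.Random] = None) -> urllib.request.Request:
    """Create a Request carrying a randomly chosen browser User Agent."""
    chooser = rng or random  # pass a seeded Random for reproducible choices
    headers = {
        "User-Agent": chooser.choice(USER_AGENTS),
        # Generic browser-style companions; a UA with no other headers is itself a signal.
        "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
        "Accept-Language": "en-US,en;q=0.9",
    }
    return urllib.request.Request(url, headers=headers)
```

Usage: `urllib.request.urlopen(build_request("https://example.com"))` sends each request with a freshly picked UA.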

In our top list of user agents for web scraping, you'll learn more about well-formed UAs.

3. Use a Web Scraping API

The previous methods aren't entirely reliable, as they often fail against advanced anti-bot measures. For a more effective solution, consider a web scraping API like ZenRows.

ZenRows is an all-in-one solution designed to help developers scrape without dealing with anti-bot measures like Cloudflare directly. Its large rotating proxy pool helps you avoid errors 1006, 1007, and 1008. Sign up to try it and get 1,000 free API credits.
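As a rough sketch, calling a scraping API like this usually means sending your target URL and API key as query parameters. The endpoint and the `apikey`, `url`, `premium_proxy`, and `js_render` parameter names below follow ZenRows' documentation as we understand it, so treat them as assumptions and confirm them against the current API reference. The helpers `zenrows_url` and `scrape` are hypothetical.

```python
import urllib.parse
import urllib.request

# Assumed endpoint; verify against ZenRows' current API reference.
ZENROWS_ENDPOINT = "https://api.zenrows.com/v1/"


def zenrows_url(api_key: str, target_url: str, premium_proxy: bool = True, js_render: bool = False) -> str:
    """Build the API request URL; urlencode percent-encodes the target URL."""
    params = {"apikey": api_key, "url": target_url}
    if premium_proxy:
        params["premium_proxy"] = "true"  # assumed flag for residential proxies
    if js_render:
        params["js_render"] = "true"  # assumed flag for headless-browser rendering
    return ZENROWS_ENDPOINT + "?" + urllib.parse.urlencode(params)


def scrape(api_key: str, target_url: str) -> str:
    """Fetch target_url through the API and return the response body as text."""
    with urllib.request.urlopen(zenrows_url(api_key, target_url), timeout=60) as response:
        return response.read().decode("utf-8", errors="replace")
```

Usage: `html = scrape("YOUR_API_KEY", "https://example.com")`. The API handles proxy rotation on its side, so your code never manages IPs directly.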
