When scraping with NodeJS, a default or bad User Agent is one of the most frequent reasons why you get blocked. So here, you'll learn how to set a custom one and randomize it using Axios in NodeJS to ensure the success of your project.

What Is the User-Agent in NodeJS?

The User-Agent header is a string sent with an HTTP request, identifying the client making the request. In NodeJS, you can use an HTTP client library such as Axios, or a browser automation tool such as Selenium, to make requests.

Install Axios with the following command:

npm install axios

To see your current UA, send a request to https://httpbin.org/headers and log the User-Agent header using Axios. You can copy the sample scraper below and save it in a file named index.js, for example.

const axios = require('axios');

axios.get('https://httpbin.org/headers')
  .then(response => {
    const headers = response.data.headers;
    console.log(headers['User-Agent']);
  })
  .catch(error => {
    console.log(error);
  });

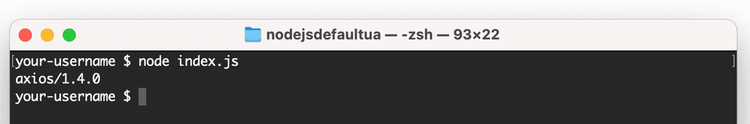

To run it, use the node command followed by your file name, for example node index.js.

As you can see, the default User Agent that Axios sends from NodeJS is axios/1.4.0 (it may differ slightly depending on the library version you use). This default UA string is easily identifiable by website security measures and will likely get you blocked.

Let's learn how to change it!

How to Use a Custom User-Agent in NodeJS

To set a custom User Agent in NodeJS, follow this process:

The first step is to get a real sample, and you can find some reliable ones in our list of UAs for web scraping. Grab a User Agent and put it on an object:

const headers = {
  'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/113.0.0.0 Safari/537.36'
};

You'll use this object as the second parameter for the Axios GET call:

axios.get('https://httpbin.org/headers', { headers })

This is the complete code that sends a request to https://httpbin.org/headers with a custom User-Agent header and logs the User-Agent header received in the response:

const axios = require('axios');

const headers = {
  'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/113.0.0.0 Safari/537.36'
};

axios.get('https://httpbin.org/headers', { headers })
  .then(response => {
    const headers = response.data.headers;
    console.log(headers['User-Agent']);
  })
  .catch(error => {
    console.log(error);
  });

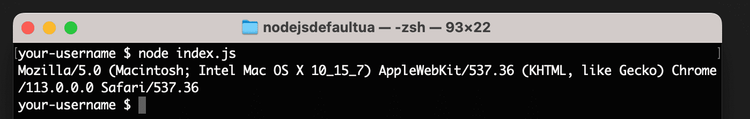

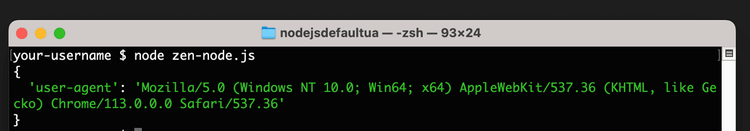

After running the code, you should see your custom User Agent string in the output.

Cool! You've just set a NodeJS fake User Agent.
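If several requests should share the same custom User Agent, one option is to build the request config once and reuse it. The sketch below assumes a hypothetical helper named buildRequestConfig (it is not part of Axios) and an arbitrary 10-second default timeout. It only produces a plain object with the headers and timeout options that Axios accepts.

```javascript
// Hypothetical helper (not part of Axios): builds a config object that
// can be passed to axios.get(url, config) or axios.create(config).
function buildRequestConfig(userAgent, timeoutMs = 10000) {
  if (typeof userAgent !== 'string' || userAgent.trim() === '') {
    throw new TypeError('userAgent must be a non-empty string');
  }
  return {
    headers: { 'User-Agent': userAgent.trim() },
    timeout: timeoutMs, // Axios request timeout in milliseconds (assumed value)
  };
}

const config = buildRequestConfig(
  'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/113.0.0.0 Safari/537.36'
);
console.log(config);

// With Axios installed, the same object could be reused like this:
// const axios = require('axios');
// const client = axios.create(config);
// client.get('https://httpbin.org/headers')
//   .then(response => console.log(response.data.headers['User-Agent']));
```

Creating one client with axios.create keeps the header in a single place, so changing the User Agent later means editing one line.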

However, a single custom User-Agent header isn't enough to avoid getting blocked: too many consecutive requests with the same one are a sign that you're a bot. That's why you should rotate UA headers and use a different one for each request.

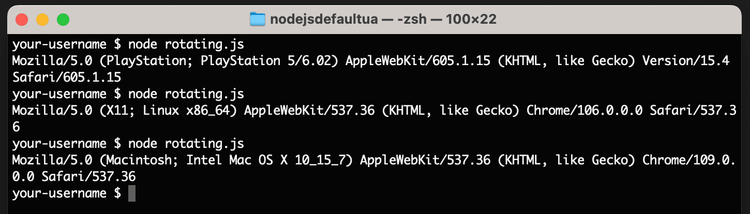

Here's an example that sends a request to https://httpbin.org/headers with a randomly selected User Agent header. This code defines an array of User-Agents, chooses one randomly, and then uses a self-invoking async function to request the target website with the selected string.

const axios = require('axios');

const userAgents = [
  'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',
  'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',
  'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',
  'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',
  'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',
  'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15',
  'Mozilla/5.0 (Macintosh; Intel Mac OS X 13_1) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15'
];

(async function fetchWithRandomUserAgent() {
  const randomIndex = Math.floor(Math.random() * userAgents.length);
  const randomUserAgent = userAgents[randomIndex];

  const options = {
    headers: {
      'User-Agent': randomUserAgent
    }
  };

  try {
    const response = await axios.get('https://httpbin.org/headers', options);
    console.log(response.data);
  } catch (error) {
    console.error(error);
  }
})();

The script logs the response data to the console, and the User-Agent header it contains is the randomly selected one, so it worked!
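When a scraper sends many requests in a row, a purely random pick can return the same string twice in succession. The sketch below shows one possible way to avoid that. It assumes a hypothetical helper called pickUserAgent and a shortened pool taken from the list above. The loop only simulates requests by logging the chosen string.

```javascript
// Shortened pool of User-Agent strings taken from the example above
const userAgentPool = [
  'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',
  'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',
  'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36'
];

// Hypothetical helper: picks a random entry from the pool and, when
// possible, avoids returning the entry used for the previous request.
// The random parameter exists only so the choice can be made predictable.
function pickUserAgent(pool, lastUsed = null, random = Math.random) {
  if (!Array.isArray(pool) || pool.length === 0) {
    throw new Error('pool must be a non-empty array');
  }
  const others = pool.filter(userAgent => userAgent !== lastUsed);
  // Fall back to the full pool if every entry equals lastUsed
  const candidates = others.length > 0 ? others : pool;
  return candidates[Math.floor(random() * candidates.length)];
}

// Simulate five consecutive requests, each with a different UA than the last
let previous = null;
for (let i = 0; i < 5; i++) {
  previous = pickUserAgent(userAgentPool, previous);
  console.log(`Request ${i + 1}: ${previous}`);
  // With Axios installed, the request itself could look like:
  // await axios.get('https://httpbin.org/headers', { headers: { 'User-Agent': previous } });
}
```

Excluding the previous pick is a small design choice: it keeps the selection random while guaranteeing that two back-to-back requests never share a User Agent, as long as the pool has at least two distinct entries.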

How to Change the User-Agent in NodeJS at Scale

When web scraping, a properly constructed User Agent is crucial to avoid detection and blocking. A User Agent that mimics a typical user makes it less likely that your requests will be flagged as suspicious or automated, which helps your scraper keep a low profile and run uninterrupted.

Let's check one example of an incomplete and suspicious User Agent:

Mozilla/5.0 (X11; Linux) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15

This User Agent has incomplete information for the Operating System part since it just says it uses the X11 window system running on Linux but doesn't specify what version of Linux or the architecture of the system. Moreover, it claims to be a Safari browser, which is not supported on Linux. Any anti-bot system could easily classify this uncommon User Agent as suspicious.
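The two problems described above can be turned into simple automated checks before a string enters your rotation list. The sketch below is only a heuristic: findUserAgentIssues is a hypothetical helper, and its rules are assumptions that cover the cases named in this section, not a description of how any particular anti-bot system works.

```javascript
// Hypothetical heuristic checks; real anti-bot systems are far more
// sophisticated, and these rules are assumptions for illustration.
function findUserAgentIssues(userAgent) {
  const issues = [];

  // Desktop Linux (Android UAs also contain "Linux", so exclude them)
  const isLinux = /\bLinux\b/.test(userAgent) && !/Android/.test(userAgent);

  // Safari identifies itself with "Version/x.y ... Safari/" and no "Chrome/" token
  const isSafari =
    /Version\/[\d.]+ .*Safari\//.test(userAgent) && !/Chrome\//.test(userAgent);

  if (isLinux && isSafari) {
    issues.push('claims to be Safari, which is not supported on Linux');
  }
  if (isLinux && !/Linux (x86_64|i686|aarch64|armv\w+)/.test(userAgent)) {
    issues.push('Linux platform lacks a CPU architecture such as x86_64');
  }
  if (!/^Mozilla\/5\.0 \(/.test(userAgent)) {
    issues.push('missing the usual "Mozilla/5.0 (" browser prefix');
  }
  return issues;
}

// The incomplete User Agent from the example above
const sample =
  'Mozilla/5.0 (X11; Linux) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15';
console.log(findUserAgentIssues(sample));
```

For the sample string, the helper reports both the Safari-on-Linux mismatch and the missing architecture, while a complete Chrome on Linux string from the rotation list passes with no issues.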

Additionally, you need to make sure that your UAs stay updated.

Then, besides the User-Agent, you need to pay attention to the other headers a real browser sends, so that the whole request looks consistent.
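As a rough illustration, a fuller header set can be assembled in one place and passed to Axios together with the User Agent. In the sketch below, buildBrowserHeaders is a hypothetical helper and every header value is an assumption loosely modeled on a desktop browser. Check what your own browser sends in its developer tools before relying on them.

```javascript
// Hypothetical helper: the header values are illustrative assumptions,
// not an authoritative copy of what any specific browser version sends.
function buildBrowserHeaders(userAgent, overrides = {}) {
  return {
    'User-Agent': userAgent,
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
    'Accept-Language': 'en-US,en;q=0.9',
    'Upgrade-Insecure-Requests': '1',
    ...overrides, // caller-supplied headers take precedence over the defaults
  };
}

const browserHeaders = buildBrowserHeaders(
  'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',
  { Referer: 'https://www.google.com/' } // assumed example value
);
console.log(browserHeaders);

// With Axios installed, the object can be passed like in the earlier examples:
// axios.get('https://httpbin.org/headers', { headers: browserHeaders });
```

Keeping the defaults in one function makes it easier to update them together with your User Agent list, so the headers and the claimed browser stay consistent.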

And yet, header configuration isn't the only obstacle you'll run into when web scraping. There are other big challenges you might have to face. To learn more about them, check our guide on the anti-scraping techniques you need to know.

Is there an easy solution?

A web scraping API like ZenRows auto-rotates the User Agent for your NodeJS requests, sets the complete header set, and deals with any anti-bot measures.

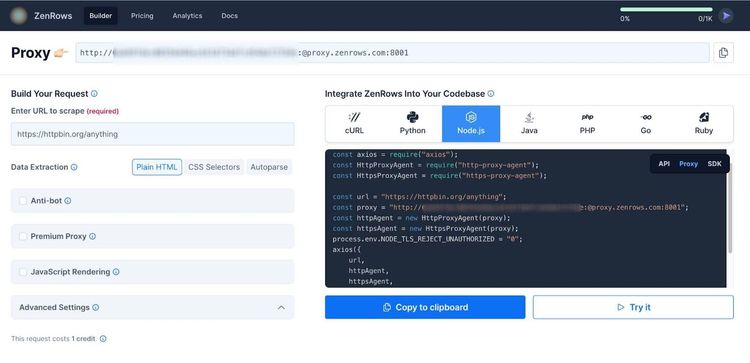

Sign up to get your free API key and try it out. You'll get to the Request Builder page:

With the code on the right, you can start making requests to any webpage you want with User Agent auto-rotation. To verify it works, we used https://httpbin.io/user-agent as a target URL.

As you can see, it's super easy to make requests with ZenRows to get real and working UAs. Additionally, you can use other anti-bot features it has available. We usually recommend activating premium proxies, JavaScript rendering and Anti-bot.

Conclusion

Using different and well-formed User Agents in NodeJS for each request helps prevent you from being blocked by your target website. Additionally, you'll need to deal with other techniques to scrape at scale successfully, for which we recommend checking out our guide on the best methods for web scraping without getting blocked.

If you'd like to implement an easy solution that deals with all anti-bot measures, then you can sign up for free to ZenRows and try it out yourself.
