A major issue when scraping with a tool like Puppeteer is getting blocked by your target website. If you've encountered this, it's likely that your User Agent flagged you as a bot.

In this article, you'll learn how to change the Puppeteer User Agent to avoid detection and access the data you need.

What Is the Puppeteer User Agent?

To understand the Puppeteer User Agent, start with HTTP request headers: key-value pairs sent with every request that give the server additional information about it. They help the server understand and process the request appropriately.

One of the key elements of an HTTP request header is the User Agent (UA), which provides information that helps the server identify clients and tailor its response.

Below is a sample of a real Google Chrome UA:

Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/111.0.0.0 Safari/537.36

There, Mozilla/5.0 is a product token that tells the server that the client is Mozilla-compatible. (Windows NT 10.0; Win64; x64) means that the client runs on a 64-bit version of Windows 10, and AppleWebKit/537.36 (KHTML, like Gecko) is the rendering engine with version number 537.36 that powers the client. Lastly, Chrome/111.0.0.0 and Safari/537.36 indicate that a Chrome browser is used and is compatible with Safari.
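To make that breakdown concrete, here is a minimal sketch that splits a UA string into its product tokens and their parenthesized comments. The `parseUserAgent` helper and its regular expression are assumptions made for illustration, not part of Puppeteer or any standard library, and real-world UA strings have more edge cases than this handles.

```javascript
// Hypothetical helper: split a User-Agent string into "Name/Version" tokens,
// each with the parenthesized comment that follows it (if any).
function parseUserAgent(ua) {
  // Product name, a slash, a version, then an optional "(comment)"
  const tokenPattern = /([^\s\/()]+)\/([^\s()]+)(?:\s*\(([^)]*)\))?/g;
  const tokens = [];
  for (const match of ua.matchAll(tokenPattern)) {
    tokens.push({
      product: match[1],
      version: match[2],
      comment: match[3] ?? null,
    });
  }
  return tokens;
}

const sampleUA =
  'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/111.0.0.0 Safari/537.36';

// Logs one object per product token: Mozilla, AppleWebKit, Chrome, Safari
console.log(parseUserAgent(sampleUA));
```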

Now, let's see what your default Puppeteer User Agent looks like:

Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) HeadlessChrome/116.0.5845.96 Safari/537.36

You can tell it doesn't look like a real one, so your requests are easily identifiable as automated.
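The giveaway is the HeadlessChrome token. As a rough illustration of how little it takes to spot it, the sketch below shows a check a server could run on incoming requests. The `looksAutomated` function is hypothetical, and real anti-bot systems combine many more signals than this single test.

```javascript
// Hypothetical server-side check: flag UAs that carry the HeadlessChrome token.
function looksAutomated(ua) {
  return /HeadlessChrome\//.test(ua);
}

const defaultPuppeteerUA =
  'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) HeadlessChrome/116.0.5845.96 Safari/537.36';
const realChromeUA =
  'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/111.0.0.0 Safari/537.36';

console.log(looksAutomated(defaultPuppeteerUA));
console.log(looksAutomated(realChromeUA));
```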

How Do I Set a Custom User Agent in Puppeteer?

Let's customize your User Agent to reduce the chance of getting blocked in Puppeteer. You'll begin by setting up Puppeteer on your local machine, then set a custom UA using a dedicated method, and finally randomize the UA.

Step 1. Getting Started

Let's set up a new Puppeteer project.

Start by initializing a new project:

npm init esnext

Then, install Puppeteer if it's not installed yet:

npm install puppeteer

Here's a minimal script that makes a request to HTTPBin to log the User Agent of the requesting client:

import puppeteer from 'puppeteer';

(async () => {
  // Launch the browser and open a new blank page
  const browser = await puppeteer.launch({
    headless: 'new',
  });
  const page = await browser.newPage();

  // Navigate the page to target website
  await page.goto('https://httpbin.io/user-agent');

  // Get the text content of the page's body
  const content = await page.evaluate(() => document.body.textContent);

  // Log the text content
  console.log('Content: ', content);

  // Close the browser
  await browser.close();
})();

Run the script, and you'll get an output like this:

{
  "user-agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/116.0.0.0 Safari/537.36"
}

This is your default UA.

You can have a look at our guide on web scraping with Puppeteer to review the fundamentals if needed.

Now, let's set a custom User Agent in Puppeteer.

Step 2. Customize UA

To customize your default UA, use the page.setUserAgent() method, which takes in the new User Agent that you want to set as its first argument. Here's how:

// ...

const page = await browser.newPage();

// Custom user agent
const customUA = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/111.0.0.0 Safari/537.36';

// Set custom user agent
await page.setUserAgent(customUA);

// Navigate the page to target website
await page.goto('https://httpbin.io/user-agent');

// ...

Note that page.setUserAgent() must be called before page.goto(). Otherwise, the navigation request is sent with the default User Agent.

Your full Puppeteer script should look like this now:

import puppeteer from 'puppeteer';

(async () => {
  // Launch the browser and open a new blank page
  const browser = await puppeteer.launch({
    headless: 'new',
  });
  const page = await browser.newPage();

  // Custom user agent
  const customUA = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/111.0.0.0 Safari/537.36';

  // Set custom user agent
  await page.setUserAgent(customUA);

  // Navigate the page to target website
  await page.goto('https://httpbin.io/user-agent');

  // Get the text content of the page's body
  const content = await page.evaluate(() => document.body.textContent);

  // Log the text content
  console.log(content);

  // Close the browser
  await browser.close();
})();

Run it, and you will get this output:

{
  "user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/111.0.0.0 Safari/537.36"
}

Awesome! The UA in the output is the same as the one you set in the code.

However, using a single custom UA is not enough to avoid getting blocked because you can still be easily identified when using the same UA many times. Therefore, you need to randomize your UA per request. Let's see how in the next section.

Step 3. Use a Random User Agent in Puppeteer

Clients that use the same UA to make too many requests may be denied access. Therefore, it's advisable to use a random User Agent with Puppeteer to avoid getting blocked.

To rotate your UA randomly, first collect a pool of UAs to pick from. You can grab a few from our User Agent list for web scraping.

Now, let's create a separate function just below the Puppeteer import that returns a randomly chosen UA whenever it's called:

// ...

const generateRandomUA = () => {
  // Array of random user agents
  const userAgents = [
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',
    'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 13_1) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15'
  ];

  // Get a random index based on the length of the user agents array
  const randomUAIndex = Math.floor(Math.random() * userAgents.length);

  // Return a random user agent using the index above
  return userAgents[randomUAIndex];
};

// ...
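Purely random selection can return the same UA twice in a row. If that matters for your use case, one possible variant is sketched below: it walks through a shuffled copy of the pool and reshuffles once every UA has been used. The `createUARotator` name and the short placeholder pool are assumptions made for this example, and the sketch assumes the pool contains no duplicate entries.

```javascript
// Hypothetical alternative to random picking: cycle through a shuffled pool
// so that no UA is returned twice in a row (for pools of two or more UAs).
function createUARotator(userAgents) {
  if (userAgents.length === 0) {
    throw new Error('The UA pool must contain at least one entry');
  }

  // Fisher-Yates shuffle on a copy, leaving the caller's array untouched
  const shuffle = (items) => {
    const copy = [...items];
    for (let i = copy.length - 1; i > 0; i--) {
      const j = Math.floor(Math.random() * (i + 1));
      [copy[i], copy[j]] = [copy[j], copy[i]];
    }
    return copy;
  };

  let queue = [];
  let last = null;

  return () => {
    if (queue.length === 0) {
      queue = shuffle(userAgents);
      // Don't start a new cycle with the UA that ended the previous one
      if (queue.length > 1 && queue[0] === last) {
        const end = queue.length - 1;
        [queue[0], queue[end]] = [queue[end], queue[0]];
      }
    }
    last = queue.shift();
    return last;
  };
}

// Usage with a short placeholder pool
const nextUA = createUARotator([
  'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',
  'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',
]);
console.log(nextUA());
console.log(nextUA());
```

The value returned by `nextUA()` can be passed to page.setUserAgent() in the same way as the result of generateRandomUA().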

Adding the function generateRandomUA() to the main Puppeteer code, you'll have:

// ...

// Open a new blank page
const page = await browser.newPage();

// Custom user agent from generateRandomUA() function
const customUA = generateRandomUA();

// Set custom user agent
await page.setUserAgent(customUA);

// ...

This is your full code:

import puppeteer from 'puppeteer';

const generateRandomUA = () => {
  // Array of random user agents
  const userAgents = [
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',
    'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 13_1) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15'
  ];

  // Get a random index based on the length of the user agents array
  const randomUAIndex = Math.floor(Math.random() * userAgents.length);

  // Return a random user agent using the index above
  return userAgents[randomUAIndex];
};

(async () => {
  // Launch the browser
  const browser = await puppeteer.launch({
    headless: 'new',
  });

  // Open a new blank page
  const page = await browser.newPage();

  // Custom user agent from generateRandomUA() function
  const customUA = generateRandomUA();

  // Set custom user agent
  await page.setUserAgent(customUA);

  // Navigate the page to target website
  await page.goto('https://httpbin.io/user-agent');

  // Get the text content of the page's body
  const content = await page.evaluate(() => document.body.textContent);

  // Log the text content
  console.log(content);

  // Close the page
  await page.close();

  // Close the browser
  await browser.close();
})();

Run it twice, and you'll get a randomly selected Puppeteer User Agent logged each time. For example, we got this:

// Output 1
{
  "user-agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15"
}

// Output 2
{
  "user-agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36"
}

Great! Your User Agent in Puppeteer is now picked at random for each request, which improves your chances of scraping without getting blocked.
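The script above makes a single request per run. To rotate the UA across several requests within one run, a loop along the following lines could work. This is a sketch under stated assumptions: `scrapeWithRotation` is a hypothetical helper, `browser` is expected to be the object returned by puppeteer.launch(), and `pickUA` is any function that returns a UA string, such as generateRandomUA. It relies only on the page methods already used in this article.

```javascript
// Hypothetical helper: visit each URL in its own page with a freshly picked UA.
// `browser` is assumed to come from puppeteer.launch(); `pickUA` returns a UA string.
async function scrapeWithRotation(browser, urls, pickUA) {
  const results = [];

  for (const [i, url] of urls.entries()) {
    const page = await browser.newPage();
    try {
      // Set the UA before navigating so the request carries it
      const ua = pickUA();
      await page.setUserAgent(ua);
      await page.goto(url);

      // Get the text content of the page's body
      const content = await page.evaluate(() => document.body.textContent);
      console.log(`Request ${i + 1}: `, content);
      results.push({ url, ua, content });
    } finally {
      // Close the page even if navigation or evaluation fails
      await page.close();
    }
  }

  return results;
}

// Example call, inside the async function that launched the browser:
// await scrapeWithRotation(
//   browser,
//   ['https://httpbin.io/user-agent', 'https://httpbin.io/user-agent'],
//   generateRandomUA
// );
```

Opening a new page per URL keeps each request isolated, and the `finally` block makes sure pages don't pile up if one request throws.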

Note that any UA you add must be well-formed and realistic, or it may get you flagged as a bot. Problematic examples include outdated UAs and ones whose browser name and version don't match any published release.
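As a rough illustration, a basic sanity check for Chrome UAs in your pool might look like the sketch below. The `isPlausibleChromeUA` function and the `minMajorVersion` threshold are assumptions made for this example. The check only covers the Chrome UA shape shown in this article, so it rejects other browsers (including the Safari entries in the pool above), and it can't tell whether a version was actually released.

```javascript
// Hypothetical sanity check for desktop Chrome UAs. It only verifies the
// overall shape and a minimum major version chosen by the caller.
function isPlausibleChromeUA(ua, minMajorVersion) {
  // Chrome UAs start with the Mozilla/5.0 compatibility token
  if (!ua.startsWith('Mozilla/5.0 ')) return false;

  // Require a standalone "Chrome/a.b.c.d" token (this excludes HeadlessChrome)
  const chrome = ua.match(/ Chrome\/(\d+)\.\d+\.\d+\.\d+ /);
  if (!chrome) return false;

  // Chrome UAs carry these fixed engine tokens
  if (!ua.includes('AppleWebKit/537.36') || !ua.endsWith('Safari/537.36')) {
    return false;
  }

  // Reject versions older than the caller's threshold
  return Number(chrome[1]) >= minMajorVersion;
}

const candidateUA =
  'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/111.0.0.0 Safari/537.36';

// The threshold of 100 is an arbitrary value for the example
console.log(isPlausibleChromeUA(candidateUA, 100));
```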

Yet, maintaining a pool of valid UAs yourself is hard. Luckily, there's an easier solution we'll learn about in the next section.

Easy Solution to Change User Agent in Puppeteer at Scale

Anti-bot measures check the validity of your UA, and websites may also use other techniques, such as TLS fingerprinting and CAPTCHAs.

An easier way to change your User Agent at scale, and to avoid being flagged by other anti-bot measures, is to use ZenRows. It's an API with the same functionality as Puppeteer that also provides the most complete toolkit to avoid getting blocked. It acts as a web scraping proxy, auto-rotates your UA, and equips you with advanced scraping tools.

Let's try out ZenRows by using it to scrape data from a well-protected G2 page.

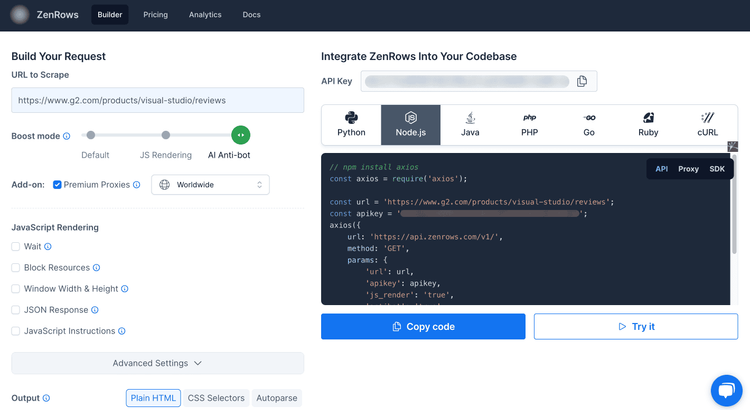

Start by signing up for a new account, and you'll get to the Request Builder page. There, paste your target URL, activate the anti-bot bypass mode, and enable premium proxies. Then, select Node.js as the language.

Here's the full code you'll get from ZenRows:

import axios from 'axios';

const url = 'https://www.g2.com/products/visual-studio/reviews';
const apikey = '<YOUR_ZENROWS_API_KEY>';

axios({
  url: 'https://api.zenrows.com/v1/',
  method: 'GET',
  params: {
    'url': url,
    'apikey': apikey,
    'js_render': 'true',
    'premium_proxy': 'true',
  },
})
  .then(response => console.log(response.data))
  .catch(error => console.log(error));

The generated code uses Axios, so install it before running the script (any other HTTP client also works):

npm install axios

Run it, and you'll get the HTML content of the reviews page logged in your terminal like this:

<!DOCTYPE html><head><meta charset="utf-8" /><link href="https://www.g2.com/assets/favicon-fdacc4208a68e8ae57a80bf869d155829f2400fa7dd128b9c9e60f07795c4915.ico" rel="shortcut icon" type="image/x-icon" /><title>Visual Studio Reviews 2023: Details, Pricing, & Features | G2</title>

<!-- Cut for brevity... -->

Amazing! You've scraped a heavily protected web page with ZenRows.
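Since any HTTP client works, the same request can also be sketched without Axios. The example below assumes Node.js 18 or later, where `fetch` is available globally. The `buildZenRowsUrl` and `fetchHtml` helpers are hypothetical names, and the endpoint and query parameters are copied from the Axios snippet above rather than taken from any additional API documentation.

```javascript
// Hypothetical helper: build the request URL with the same endpoint and
// query parameters as the Axios example.
function buildZenRowsUrl(targetUrl, apikey) {
  const endpoint = new URL('https://api.zenrows.com/v1/');
  endpoint.search = new URLSearchParams({
    url: targetUrl,
    apikey,
    js_render: 'true',
    premium_proxy: 'true',
  }).toString();
  return endpoint.toString();
}

// Hypothetical helper: fetch the page HTML (assumes Node.js 18+ for global fetch)
async function fetchHtml(targetUrl, apikey) {
  const response = await fetch(buildZenRowsUrl(targetUrl, apikey));
  if (!response.ok) {
    throw new Error(`Request failed with status ${response.status}`);
  }
  return response.text();
}

// Example call with a real API key:
// fetchHtml('https://www.g2.com/products/visual-studio/reviews', '<YOUR_ZENROWS_API_KEY>')
//   .then((html) => console.log(html))
//   .catch((error) => console.log(error));
```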

Conclusion

Setting and randomizing your Puppeteer User Agent is an important factor in the success of your web scraping operations. Each UA you use should be properly formed and kept up to date.

Yet, there are many more challenges, like CAPTCHAs, IP blocking, honeypot traps, and fingerprinting, that you also need to overcome for web scraping without getting blocked. Doing that is tricky and may come at a huge cost. Happily, ZenRows makes it a breeze to achieve.

Try out ZenRows today to save time and frustration fighting against anti-bot protections.
