HTML parsers are crucial for web scraping, enabling you to process and extract specific content from web pages. However, Python HTML parsers vary in performance, learning curve, ease of use, and flexibility. So you want to choose carefully.

In this article, we'll compare the seven best HTML parsers for scraping in Python to determine what works best for you.

Before we begin, here's a quick comparison of the best Python HTML parsers in the table below.

| Library | Ease of Use | Speed | Selector Support | Popularity | Documentation & Community |
|---|---|---|---|---|---|
| BeautifulSoup | User-friendly | Fast | CSS selectors | High | Good |
| lxml | User-friendly | Fast | XPath | High | Good |
| html5lib | Steep but easier with BeautifulSoup | Slow | CSS selectors | Low | Poor documentation with low adoption |
| requests-html | Steep learning curve for advanced usage | Very slow | CSS selectors, XPath | Moderate | Moderate |
| PyQuery | User-friendly | Fast | CSS selectors, XPath | High | Moderate |
| Scrapy | Steep learning curve | Slow | CSS selectors, XPath | High | Good |
| jusText | Steep but easier with BeautifulSoup | Fast | CSS selectors | Moderate | Poor |

Next, we'll go into detail about each parser, demonstrating how each works using a sample request to ScrapeMe.

Let's dive right in!

1. BeautifulSoup

BeautifulSoup is the most popular Python HTML and XML parser, boasting an impressive user community. It builds a hierarchical representation of a web page called a parse tree, which makes searching, navigation, and element location much easier with methods like find_all() and with CSS selectors through select(). It has no native XPath support.

Using its modification feature, you can delete, edit, or add tags and attributes on the fly, allowing you to clean and restructure data while scraping. Although the BeautifulSoup parse tree can be messy, the library features built-in methods such as prettify() to make the document more readable.
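To illustrate the CSS-selector search and on-the-fly modification described above, here's a minimal sketch that works on a small, made-up HTML fragment. The SAMPLE_HTML markup, its class names, and the data-checked attribute are hypothetical and not taken from ScrapeMe. It uses Python's built-in html.parser backend, so it runs without an extra parser package.

```python
from bs4 import BeautifulSoup

# Hypothetical fragment loosely modeled on a product page (not fetched from ScrapeMe)
SAMPLE_HTML = """
<div class="summary">
  <h1 class="product_title">Charizard</h1>
  <p class="stock in-stock">25 in stock</p>
  <script>trackView();</script>
</div>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

# CSS selector lookup via select_one(); matches any <p> whose class list contains "stock"
stock_text = soup.select_one("p.stock").get_text(strip=True)

# Modify the tree on the fly: remove <script> tags entirely...
for script in soup.find_all("script"):
    script.decompose()

# ...and add a (hypothetical) attribute to the title element
soup.select_one("h1.product_title")["data-checked"] = "yes"

# prettify() returns an indented, readable version of the cleaned tree
cleaned_html = soup.prettify()

print(stock_text)
print(cleaned_html)
```

Swapping select_one("p.stock") for find("p", class_="stock") would give the same result; CSS selectors are simply more compact for nested lookups.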

⭐️ Popularity: 697k users.

👍 Pros:

- Easy to use.

- Powerful search and filter options.

- An active community to solve problems quickly.

- The built-in HTML prettifier and smoother allow for clean document formatting.

- Automatically detects a page's character encoding and converts the document to Unicode.

- Well-documented with consistent updates.

- Integrates seamlessly with headless browsers like Selenium.

👎 Cons:

- No support for JavaScript dynamic content rendering.

- No built-in HTTP client.

⚙️ Features:

- HTML and XML parsing.

- CSS selectors.

- Unicode and UTF-8 character decoding and encoding.

- HTML prettifier and smoother.

- Document modification.

- HTML tree navigation.

- Search and filter.

👨💻 Example: The example below retrieves the product web page and parses its HTML using BeautifulSoup, which extracts the stock details using the find_all() method.

```python
# Import the required libraries
import requests
from bs4 import BeautifulSoup

url = 'https://scrapeme.live/shop/Charizard/'

# Make a GET request to retrieve the page
response = requests.get(url)

# Resolve the response
if response.status_code == 200:
    html_content = response.text
else:
    # Stop here: there is no HTML to parse
    raise SystemExit(f'Request failed with status code {response.status_code}')

# Parse the HTML page with BeautifulSoup (the 'lxml' backend requires lxml to be installed)
soup = BeautifulSoup(html_content, 'lxml')

# Get the text content of the stock paragraph
left_in_stock = soup.find_all('p', {'class': 'stock'})
print(left_in_stock[0].text)
```

2. lxml

lxml is a Python binding for the C libraries libxml2 and libxslt. It follows the ElementTree API, parses a target page into an element tree (via lxml.etree or lxml.html), and navigates its elements with XPath. lxml also supports Extensible Stylesheet Language Transformations (XSLT) for converting XML into other formats like HTML.

Because its heavy lifting happens in C, lxml is faster than most other Python parsers, and it trails only BeautifulSoup in popularity. Its native querying relies on XPath and the ElementTree interface (CSS selectors require the separate cssselect package), but you can combine it with BeautifulSoup, using lxml as the underlying parser, to get a friendlier API, readable CSS selectors, and prettifying.
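lxml's XSLT support, mentioned above, lets you turn an XML tree into HTML. The sketch below assumes a tiny made-up product feed (the products markup and the stylesheet are hypothetical) and uses lxml.etree.XSLT to render only the in-stock items as an HTML list.

```python
from lxml import etree

# Hypothetical XML feed with two products
xml_doc = etree.XML(
    "<products>"
    "<product><name>Charizard</name><stock>25</stock></product>"
    "<product><name>Pikachu</name><stock>0</stock></product>"
    "</products>"
)

# XSLT stylesheet: keep products with stock > 0 and emit an HTML <ul>
xslt_doc = etree.XML("""<xsl:stylesheet version="1.0" xmlns:xsl="http://www.w3.org/1999/XSL/Transform">
  <xsl:output method="html"/>
  <xsl:template match="/products">
    <ul>
      <xsl:for-each select="product[stock &gt; 0]">
        <li><xsl:value-of select="name"/>: <xsl:value-of select="stock"/> in stock</li>
      </xsl:for-each>
    </ul>
  </xsl:template>
</xsl:stylesheet>""")

# Compile the stylesheet once, then apply it to the document
transform = etree.XSLT(xslt_doc)
result = transform(xml_doc)

# str() serializes the result tree according to <xsl:output>
html_output = str(result)
print(html_output)
```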


⭐️ Popularity: 385k users.

👍 Pros:

- Lightweight and fast.

- Handles modern HTML5 pages, with an optional html5lib-based parser (lxml.html.html5parser) for spec-compliant parsing.

- Extensible with other parsers like BeautifulSoup.

- Robust XPath support allows precise and advanced queries.

- Consistently maintained with an active community.

- Namespace support for element identification.

- Supports E-factory for generating XML and HTML content.

- Good documentation.

👎 Cons:

- No JavaScript support for dynamic content.

- Unlike Python's built-in html.parser, lxml requires a separate installation.

- Dependence on C bindings might require additional C-specific setup.

⚙️ Features:

- XSLT support.

- XPath.

- ElementTree API.

- XML and HTML parsing.

- HTML5 support.

- E-factory.

- Namespace support.

👨💻 Example: The following example extracts the number of products left in stock using lxml's XPath selector:

```python
# Import the required libraries
import requests
import lxml.html

url = 'https://scrapeme.live/shop/Charizard/'

# Make a GET request to retrieve the page
response = requests.get(url)

# Resolve the response
if response.status_code == 200:
    html_content = response.text
else:
    # Stop here: there is no HTML to parse
    raise SystemExit(f'Request failed with status code {response.status_code}')

# Parse the HTML content into an element tree
tree = lxml.html.document_fromstring(html_content)

# Locate the stock paragraph with an XPath expression
path = tree.xpath('//*[@id="product-733"]/div[2]/p[2]')

# Extract the text content
print(path[0].text)
```

3. html5lib

html5lib is a pure-Python parser that implements the WHATWG HTML5 parsing algorithm, so it handles markup the same way modern browsers do. When parsing, it returns a tree of the web page (an xml.etree tree by default) that you can serialize back to HTML or hand to other tools. Unlike lxml, html5lib can be challenging for beginners to learn.

The library integrates well with advanced parsers like BeautifulSoup and supports incremental parsing, which lets you fit content into memory. However, html5lib doesn’t have an active community. Another caveat is its poor documentation, which negatively impacts its adoption.
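Since html5lib follows the HTML5 parsing algorithm, it repairs broken markup the way a browser would. This minimal sketch feeds it a deliberately malformed, made-up fragment: unclosed p tags and a stray closing div. Note that html5lib's default etree output places elements in the XHTML namespace, so lookups need a namespace mapping.

```python
import html5lib

# Hypothetical malformed fragment: two unclosed <p> tags and a stray </div>
broken_html = "<p class=stock>25 in stock<p>Second paragraph</div>"

# html5lib builds a full html/head/body tree, like a browser would
document = html5lib.parse(broken_html)

# Elements are namespaced by default, so map a prefix to the XHTML namespace
ns = {"html": "http://www.w3.org/1999/xhtml"}
paragraphs = document.findall(".//html:p", ns)

# The second <p> implicitly closes the first; the stray </div> is ignored
texts = [p.text for p in paragraphs]
print(texts)
```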

⭐️ Popularity: No dependent repository found.

👍 Pros:

- Integrates easily with parsers like BeautifulSoup.

- HTML serialization maintains DOM integrity for easier innerHTML access.

- XML document tree eases navigation.

- Compatible with modern browsers.

- Maintains the HTML5 standard specifications.

👎 Cons:

- Steep learning curve.

- Poor documentation and community.

- Slow performance.

- No JavaScript support.

- No built-in support for XPath selector.

⚙️ Features:

- HTML5 standard parsing algorithm.

- XML document tree.

- HTML serialization and formatting.

- Unicode support.

- CSS selectors.

- Streaming view.

👨💻 Example: This example parses an HTML page with html5lib and serializes it back to HTML. BeautifulSoup then loads that markup, again using html5lib as its parser, and locates the target content with find_all().

```python
# Import the required libraries
import requests
import html5lib
from html5lib.serializer import serialize
from bs4 import BeautifulSoup

url = 'https://scrapeme.live/shop/Charizard/'

# Make a GET request to retrieve the page
response = requests.get(url)

# Resolve the response
if response.status_code == 200:
    html_content = response.text
else:
    # Stop here: there is no HTML to parse
    raise SystemExit(f'Request failed with status code {response.status_code}')

# Parse HTML with html5lib (returns an xml.etree tree by default)
doc = html5lib.parse(html_content)

# Serialize the tree back to HTML and expose it to BeautifulSoup
serialized_html = serialize(doc)
soup = BeautifulSoup(serialized_html, 'html5lib')

# Retrieve the content
left_in_stock = soup.find_all('p', {'class': 'stock'})
print(left_in_stock[0].text)
```

4. Requests-html

requests-html wraps HTML parsing around a built-in HTTP client and supports both CSS and XPath selectors. Because it can also parse HTML strings directly, you can pair it with an external HTTP client. Besides built-in pagination handling, it can render pages in a headless Chromium session, with a wait strategy for dynamically rendered content.

A unique attribute of requests-html is its support for multi-threaded requests in asynchronous mode, which allows you to interact with several websites simultaneously. Although the library has a steep learning curve for beginners, it has a fairly active community with comprehensive documentation. However, requests-html can be slow, especially with JavaScript-rendered websites.

⭐️ Popularity: 12.1k users

👍 Pros:

- JavaScript support allows dynamic content scraping.

- Built-in pagination strategy.

- Supports external HTTP clients like the requests library.

- Manipulate web elements with direct JavaScript code execution.

- Asynchronous web page interaction.

- Detailed documentation.

- XPath support for precise navigation.

- Actively maintained with an active community.

👎 Cons:

- Very slow.

- JavaScript rendering functionality can be unreliable.

- The learning curve can be steep for advanced usage.

⚙️ Features:

- Multithreaded asynchronous rendering.

- JavaScript support.

- Pagination.

- Built-in HTTP client.

- Session handling.

- CSS and XPath selectors.

👨💻 Example: Here's a sample usage of requests-html's asynchronous property for retrieving the number of items left on a product page using XPath.

```python
# Import the required library
from requests_html import AsyncHTMLSession

# Instantiate an asynchronous request session
session = AsyncHTMLSession()

url = 'https://scrapeme.live/shop/Charizard/'

# Define the coroutine that accesses the target page
async def get_left_items():
    r = await session.get(url)

    # Specify the element path with XPath
    path = r.html.xpath('//*[@id="product-733"]/div[2]/p[2]')

    # Get the required content
    print(path[0].text)

# Run the coroutine in the session's event loop
session.run(get_left_items)
```

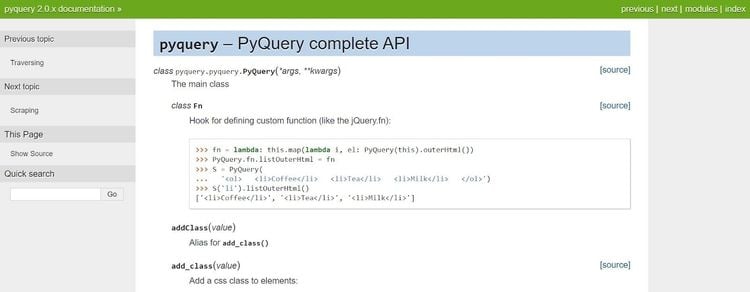

5. PyQuery

PyQuery is built on top of the lxml library with speed and efficiency in mind. It supports HTML and XML parsing and uses jQuery-like syntax to query web elements.

Following jQuery's querying style, PyQuery supports element location using CSS or XPath selectors, and you can execute multiple actions through chained operations. Along with a gentle learning curve, PyQuery is quite popular and has good community support.

⭐️ Popularity: 20.8k users

👍 Pros:

- Faster than most Python HTML parsers.

- Uses simple syntax.

- Easier to learn.

- Support for XML and HTML parsing.

- Efficient and fast HTML parsing.

- Support for web element modification.

- Integrable into other HTML parsers.

- Good documentation and community support.

- Write jQuery-style queries in Python.

👎 Cons:

- Edge case implementation can be complex.

- No JavaScript support.

⚙️ Features:

- Element filtering.

- Operation chaining.

- XML and HTML parsing.

- jQuery-style querying.

- XPath and CSS selectors.

- Web element manipulation.

👨💻 Example: The code below queries the web page for the number of items left in stock using a CSS selector.

```python
# Import the required libraries
import requests
from pyquery import PyQuery as pq

url = 'https://scrapeme.live/shop/Charizard/'

# Make a GET request to retrieve the page
response = requests.get(url)

# Resolve the response
if response.status_code == 200:
    html_content = response.text
else:
    # Stop here: there is no HTML to parse
    raise SystemExit(f'Request failed with status code {response.status_code}')

# Parse the HTML content
doc = pq(html_content)

# Retrieve the target content with a CSS selector
left_in_stock = doc('p.stock')
print(left_in_stock.text())
```

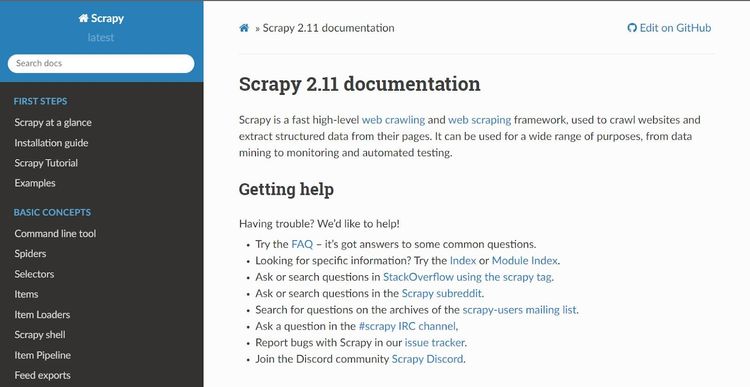

6. Scrapy

Scrapy is a full-scale Python web scraping and crawling tool with built-in parsing functionality. Beyond HTML parsing, it provides a complete framework for collecting, organizing, and storing data extracted from target web pages.

Scrapy features a built-in HTTP client for requesting multiple URLs in a queue or in parallel. After downloading a web page, it passes the response to a dedicated callback method (parse() by default) and retrieves content from the response object using XPath or CSS selectors.

⭐️ Popularity: 41.1k users.

👍 Pros:

- Scalable, modular, and extensible.

- Support for XML and HTML parsing.

- Supports XPath selector for precise element location.

- Wide adoption and active community.

- Detailed documentation.

- Extensible with plugins for scraping JavaScript-rendered pages.

- Support for asynchronous and concurrent processing.

- Built-in data cleaning and storage methods.

👎 Cons:

- The learning curve can be steep for beginners.

- Can be resource-intensive.

- No built-in JavaScript support.

- Dependence on external libraries for JavaScript support introduces complexity.

⚙️ Features:

- XML and HTML parsing.

- Built-in HTTP client.

- Concurrent and asynchronous operations.

- Item pipeline.

- Data organization.

- Crawling and following rules.

- Web scraping and crawling.

- Pagination.

- Dedicated spiders.

👨💻 Example: Below is an example Scrapy spider that requests the product page listed in its start_urls attribute. Scrapy passes the downloaded response to the parse() method, which gets the stock details using a CSS selector.

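```python
# Import the Spider class
from scrapy.spiders import Spider

class MySpider(Spider):
    # Specify the spider name
    name = 'my_spider'
    start_urls = ['https://scrapeme.live/shop/Charizard/']

    # Scrapy calls parse() with the downloaded page as the response
    def parse(self, response):
        # Extract the text content of the p element with class 'stock'
        stock_text = response.css('p.stock::text').get()

        # Log the extracted text
        self.log(f'Stock: {stock_text}')

# Run the spider directly with `python this_file.py`
if __name__ == '__main__':
    from scrapy.crawler import CrawlerProcess

    process = CrawlerProcess()
    process.crawl(MySpider)
    process.start()
```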

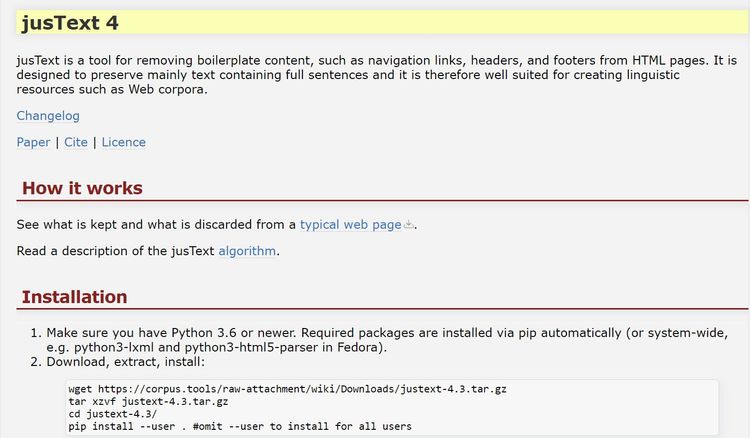

7. jusText

jusText is an HTML boilerplate remover that extracts only the main text content from web pages, with stopword lists for many languages. While it's not a dedicated Python HTML parser library, it segments a page into paragraphs based on its HTML tags and classifies each paragraph as content or boilerplate using simple heuristics such as text length, link density, and stopword density.

However, using jusText independently for web scraping can be complex, considering its steep learning curve and low adoption by the scraping community. But it pairs well with scraping tools like BeautifulSoup for simpler syntax and robustness.

⭐️ Popularity: 2.4k users.

👍 Pros:

- Suitable for extracting only text content.

- Integrates well with libraries like BeautifulSoup.

- Extracts text in different languages.

- Element location with CSS selector.

👎 Cons:

- Steep learning curve.

- Low community support and poor documentation.

- No JavaScript support.

⚙️ Features:

- HTML boilerplate removal.

- Language support.

- CSS selector.

- Page paragraphing.

👨💻 Example: The code below splits the web page into paragraphs with jusText, then iterates through them and prints the one containing the product's stock details.

```python
# Import the required libraries
import requests
import justext

url = 'https://scrapeme.live/shop/Charizard/'

# Make a GET request to retrieve the page
response = requests.get(url)

# Resolve the response
if response.status_code == 200:
    html_content = response.text.encode('utf-8')
else:
    # Stop here: there is no HTML to parse
    raise SystemExit(f'Request failed with status code {response.status_code}')

# Parse HTML with jusText using the English stopword list
paragraphs = justext.justext(html_content, justext.get_stoplist('English'))

# Print the paragraph that mentions stock
for paragraph in paragraphs:
    if 'in stock' in paragraph.text:
        print(paragraph.text)
```

Benchmark: Which HTML Parser in Python Is Faster?

We ran a 50-iteration benchmark test on each Python HTML parser to provide insights into their speed. We recorded their mean execution time in seconds under each scenario and computed their combined mean.
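The exact benchmark harness isn't shown here, but a timing loop in the same spirit can be sketched with Python's timeit module. This version assumes a hypothetical in-memory page and compares only BeautifulSoup and lxml, so it measures parsing and extraction time without any network requests; your numbers will vary by machine.

```python
import timeit

import lxml.html
from bs4 import BeautifulSoup

# Hypothetical in-memory page: one stock paragraph plus a 50-row table
SAMPLE_HTML = (
    "<html><body>"
    "<p class='stock'>25 in stock</p>"
    "<table id='specs'>"
    + "".join(f"<tr><td>row {i}</td><td>{i}</td></tr>" for i in range(50))
    + "</table></body></html>"
)

def extract_with_bs4():
    """Text extraction plus table extraction with BeautifulSoup."""
    soup = BeautifulSoup(SAMPLE_HTML, "html.parser")
    text = soup.select_one("p.stock").get_text()
    rows = [
        [td.get_text() for td in tr.find_all("td")]
        for tr in soup.select("#specs tr")
    ]
    return text, rows

def extract_with_lxml():
    """The same two extractions with lxml and XPath."""
    tree = lxml.html.fromstring(SAMPLE_HTML)
    text = tree.xpath("//p[@class='stock']/text()")[0]
    rows = [
        [td.text_content() for td in tr.xpath("./td")]
        for tr in tree.xpath("//table[@id='specs']//tr")
    ]
    return text, rows

def mean_seconds(func, iterations=50):
    """Average time of one call over `iterations` runs."""
    return timeit.timeit(func, number=iterations) / iterations

results = {
    "BeautifulSoup": mean_seconds(extract_with_bs4),
    "lxml": mean_seconds(extract_with_lxml),
}

# Print from fastest to slowest
for name, seconds in sorted(results.items(), key=lambda item: item[1]):
    print(f"{name}: {seconds:.6f} s")
```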

The table below is the result for two scenarios: text and table data extraction.


| Library | Text Data Extraction (s) | Table Data Extraction (s) | Combined Mean (s) |
|---|---|---|---|
| BeautifulSoup | 0.04 | 0.05 | 0.045 |
| lxml | 0.01 | 0.01 | 0.01 |
| html5lib | 0.10 | 0.15 | 0.125 |
| requests-html | 51.42 | 50.74 | 51.08 |
| PyQuery | 0.01 | 0.01 | 0.01 |
| jusText | 0.06 | 0.04 | 0.05 |
| Scrapy | 9.17 | 6.85 | 8.01 |

Note: The unit used is the second (s = seconds).

Here are the overall results in a graph, from fastest to slowest:

It's unsurprising to see PyQuery and lxml topping the performance benchmarks, as both build on lxml's C bindings to libxml2. BeautifulSoup came next, slightly ahead of jusText, with html5lib trailing behind.

As expected, Scrapy was relatively slow, likely because it runs a full framework (engine, scheduler, and middleware) rather than a standalone parser. requests-html was the slowest, probably due to interval pauses between requests.

What Is The Best Python HTML Parser?

In our verdict, BeautifulSoup is the overall best Python HTML parser. Although it fell behind PyQuery and lxml in speed in our benchmark, it's more extensible. Plus, it has an easier learning curve, a more active community, and more detailed documentation, with a simple implementation.

That said, the choice also depends on your use case. For instance, while HTML parsers like BeautifulSoup, lxml, and PyQuery are ideal for small-to-medium-scale projects, you may want to consider Scrapy for edge cases and for JavaScript-heavy sites, which it handles through plugins.

However, an HTTP client must employ effective antibot bypass strategies for these libraries to parse HTML successfully. Try ZenRows today for a reliable and effortless way to extract HTML data from any webpage at any scale.
