You'll often encounter CAPTCHAs while scraping with Scrapy, and they'll block your scraper unless you find a way to bypass them.

In this article, you'll learn the different ways of bypassing CAPTCHAs in Scrapy.

Can You Solve CAPTCHAs with Scrapy?

Yes. There are three main ways to deal with CAPTCHAs in Scrapy: use a web scraping API, employ a CAPTCHA resolver, or rotate premium proxies.

A web scraping API helps you avoid CAPTCHAs and other anti-bot protections entirely. A CAPTCHA resolver passes the challenge to a dedicated solving service, which may use automated tools or human workers. Rotating premium proxies keeps CAPTCHA services from flagging any single IP address.

All these methods let you focus on data extraction instead of fighting blocks. The next sections show you how to implement each one in detail.

Method #1: Bypass any CAPTCHA with a Web Scraping API

As powerful as Scrapy is for web scraping, it can still be blocked by CAPTCHAs and other anti-bot protections. The best way to deal with them is to use a web scraping API that bypasses these protections, so the CAPTCHA never shows up at all.

ZenRows is an all-in-one web scraping API that helps you bypass CAPTCHAs and other anti-bot measures to scrape any web page at scale.

For instance, plain Scrapy fails to scrape G2, a CAPTCHA-protected website. To see this for yourself, copy and paste the following code into your spider file:

# import the required library
import scrapy


class TutorialSpider(scrapy.Spider):
    # set the spider name
    name = "scraper"

    # specify the allowed domains and target URLs
    allowed_domains = ["g2.com"]
    start_urls = ["https://www.g2.com/products/asana/reviews"]

    # customize your Scrapy request
    def start_requests(self):
        for url in self.start_urls:
            yield scrapy.Request(
                url,
                callback=self.parse,
            )

    # parse the response HTML
    def parse(self, response):
        print(response.text)

Now, run the spider with the following command:

scrapy crawl scraper

The code fails with Scrapy's 403 forbidden error, indicating that the target website has blocked your request:

Crawled (403) <GET https://www.g2.com/products/asana/reviews>

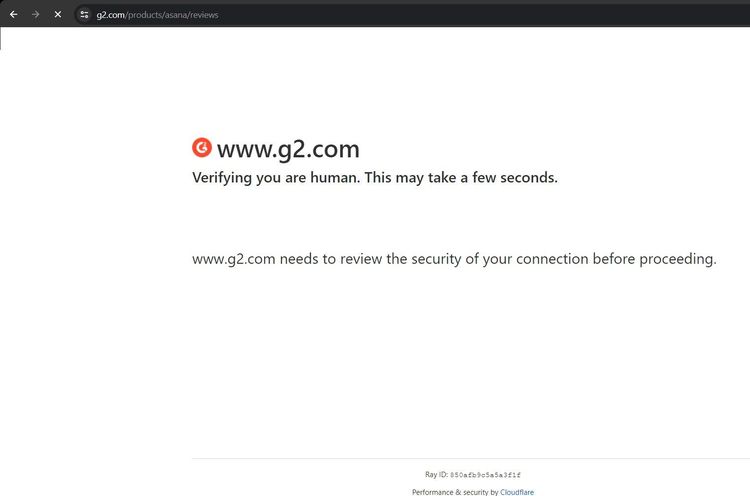

That doesn't work. According to the screenshot below, the website uses the Turnstile CAPTCHA.

To bypass Cloudflare CAPTCHA in Scrapy, you'll modify the previous code by integrating ZenRows with your spider.

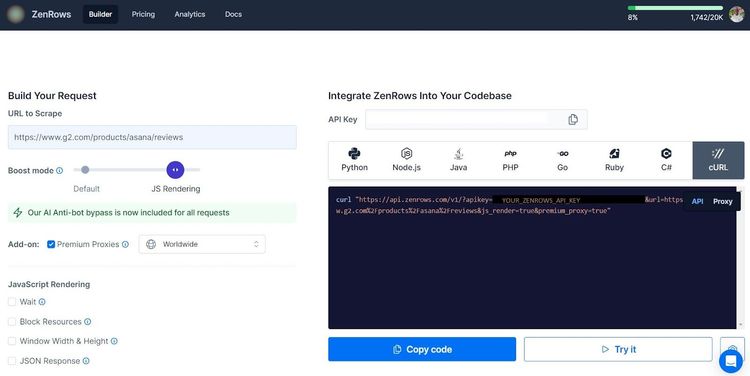

Sign up on ZenRows to open the request builder. Set the Boost Mode to JS Rendering, select Premium Proxies, and choose the cURL request option.

The generated cURL looks like this:

curl "https://api.zenrows.com/v1/?apikey=<YOUR_ZENROWS_API_KEY>&url=https%3A%2F%2Fwww.g2.com%2Fproducts%2Fasana%2Freviews&js_render=true&premium_proxy=true"

Next, paste the following function into your spider file. It rebuilds the generated cURL URL in Python: it collects the same query parameters and encodes them with urlencode, using quote_plus so the target URL is safely percent-encoded. The resulting URL becomes the request URL in your spider class (more on this later).

# import the required libraries
import scrapy
from urllib.parse import urlencode, quote_plus


def ZenRows_api_url(url, api_key):
    # set ZenRows request parameters
    params = {
        "apikey": api_key,
        "url": url,
        "js_render": "true",
        "premium_proxy": "true",
    }

    # encode the parameters and merge them with the ZenRows base URL
    encoded_params = urlencode(params, quote_via=quote_plus)
    final_url = f"https://api.zenrows.com/v1/?{encoded_params}"
    return final_url
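To sanity-check the function, you can compare its output with the URL in the generated cURL. The sketch below copies ZenRows_api_url unchanged (minus the Scrapy import, so it runs with the standard library alone) and uses a dummy key, "test_key", in place of a real one. It then decodes the query string with parse_qs to confirm the target URL survives the round trip.

```python
from urllib.parse import urlencode, quote_plus, urlparse, parse_qs


def ZenRows_api_url(url, api_key):
    # same parameters as the generated cURL request
    params = {
        "apikey": api_key,
        "url": url,
        "js_render": "true",
        "premium_proxy": "true",
    }
    # quote_plus percent-encodes ":" and "/" in the target URL
    encoded_params = urlencode(params, quote_via=quote_plus)
    return f"https://api.zenrows.com/v1/?{encoded_params}"


target = "https://www.g2.com/products/asana/reviews"
api_url = ZenRows_api_url(target, "test_key")  # dummy key for illustration
print(api_url)

# decoding the query string recovers the original target URL
query = parse_qs(urlparse(api_url).query)
print(query["url"][0] == target)
```

With the dummy key, the printed URL matches the cURL above except for the apikey value, and the round-trip check prints True.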

Specify the target URL in your spider class. Since every request now goes to api.zenrows.com rather than g2.com, leave out allowed_domains; in recent Scrapy versions, offsite filtering may otherwise drop the API requests:

# import the required libraries
import scrapy
from urllib.parse import urlencode, quote_plus

# ...


class TutorialSpider(scrapy.Spider):
    # set the spider name
    name = "scraper"

    # specify the target URL
    start_urls = ["https://www.g2.com/products/asana/reviews"]

In the start_requests method, use that function to build the request URL. The function accepts the target URL and your API key:

class TutorialSpider(scrapy.Spider):
    # ...

    def start_requests(self):
        for url in self.start_urls:
            # wrap the target URL in a ZenRows API URL, passing your API key
            api_url = ZenRows_api_url(url, "<YOUR_ZENROWS_API_KEY>")

            yield scrapy.Request(
                api_url,
                callback=self.parse,
            )

Finally, print the response HTML in the parse method:

class TutorialSpider(scrapy.Spider):
    # ...

    # parse the response HTML
    def parse(self, response):
        print(response.text)

Combine the chunks, and your final code should look like this:

# import the required libraries
import scrapy
from urllib.parse import urlencode, quote_plus


def ZenRows_api_url(url, api_key):
    # set ZenRows request parameters
    params = {
        "apikey": api_key,
        "url": url,
        "js_render": "true",
        "premium_proxy": "true",
    }

    # encode the parameters and merge them with the ZenRows base URL
    encoded_params = urlencode(params, quote_via=quote_plus)
    final_url = f"https://api.zenrows.com/v1/?{encoded_params}"
    return final_url


class TutorialSpider(scrapy.Spider):
    # set the spider name
    name = "scraper"

    # specify the target URL (no allowed_domains: requests go to api.zenrows.com)
    start_urls = ["https://www.g2.com/products/asana/reviews"]

    def start_requests(self):
        for url in self.start_urls:
            # wrap the target URL in a ZenRows API URL, passing your API key
            api_url = ZenRows_api_url(url, "<YOUR_ZENROWS_API_KEY>")

            yield scrapy.Request(
                api_url,
                callback=self.parse,
            )

    # parse the response HTML
    def parse(self, response):
        print(response.text)

Now, run the code with the crawl command:

scrapy crawl scraper

This outputs the website's complete HTML with its title, showing that ZenRows with Scrapy bypasses the anti-bot system successfully:

<!DOCTYPE html>
<!-- ... -->
<head>
    <title>Asana Reviews 2024</title>
</head>
<body>
    <!-- ... other page content ignored for brevity -->
</body>

Congratulations! You've just bypassed a CAPTCHA system in Scrapy using the ZenRows web scraping API. Let's look at other methods of solving CAPTCHA protections.

Method #2: Use a CAPTCHA Resolver

You can solve CAPTCHAs in Scrapy with CAPTCHA-resolving services. Most of them, including 2CAPTCHA, rely on human solvers, so each request can take a while to complete.

How you solve a CAPTCHA with 2CAPTCHA depends on the CAPTCHA type. Token-based CAPTCHAs like reCAPTCHA require the target website's site key, while image-based ones require you to download the CAPTCHA image and upload it to the 2CAPTCHA server.

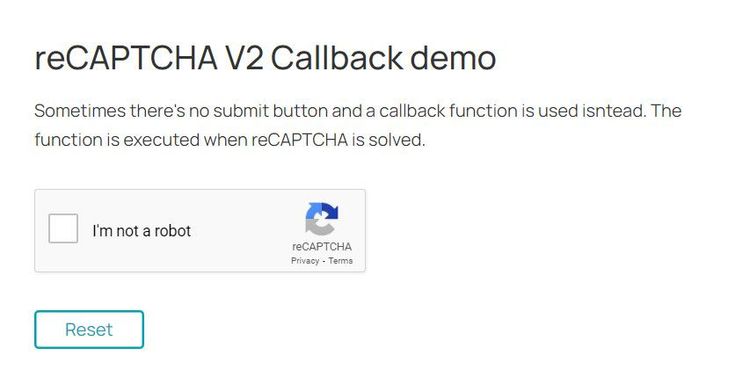

You'll solve the reCAPTCHA demo on the 2CAPTCHA website to see how that works. Here's the unsolved CAPTCHA.

To solve that with 2CAPTCHA, install the solver package using pip:

pip install 2captcha-python

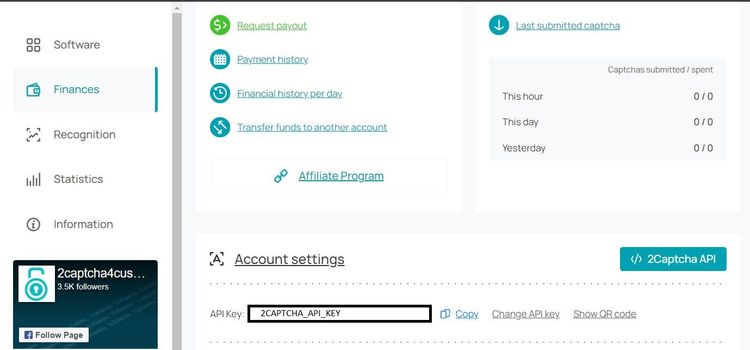

You need two things to solve the reCAPTCHA CAPTCHA. These include your 2CAPTCHA API key and the target website's site key.

Sign up on the 2CAPTCHA website and grab your API key from your dashboard.

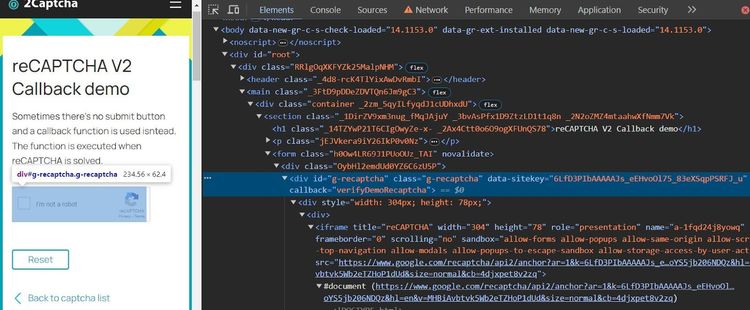

You'll find the site key in the target website's HTML. Launch the demo website on a browser like Chrome and right-click on the CAPTCHA box. Then click "Inspect". Expand the outer element and look for the data-sitekey attribute, as shown below:
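If you'd rather not copy the site key by hand, you can pull the data-sitekey attribute out of the page's HTML. The sketch below uses Python's built-in html.parser so it runs without Scrapy; the sample markup and key are placeholders, not the demo page's real HTML. Inside a spider, the equivalent Scrapy selector would be response.css("[data-sitekey]::attr(data-sitekey)").get().

```python
from html.parser import HTMLParser


class SiteKeyParser(HTMLParser):
    """Collects the value of the first data-sitekey attribute it sees."""

    def __init__(self):
        super().__init__()
        self.sitekey = None

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs for this tag
        if self.sitekey is None:
            for name, value in attrs:
                if name == "data-sitekey":
                    self.sitekey = value
                    break


def find_sitekey(html):
    parser = SiteKeyParser()
    parser.feed(html)
    parser.close()
    return parser.sitekey


# illustrative markup only; a real page embeds its own key
sample_html = '<form><div class="g-recaptcha" data-sitekey="example-site-key"></div></form>'
print(find_sitekey(sample_html))
```

find_sitekey returns None when the page has no data-sitekey attribute, which also gives the spider a cheap way to tell whether a CAPTCHA is present at all.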

It's time to write your web scraping code.

Start your spider class by defining a method that solves the reCAPTCHA. Pass your API key to the TwoCaptcha constructor, then call its recaptcha() method with the site key and the target URL.

# import the required libraries
import scrapy
from twocaptcha import TwoCaptcha


class TutorialSpider(scrapy.Spider):
    name = "scraper"
    start_urls = ["https://2captcha.com/demo/recaptcha-v2-callback"]

    def solve_with_2captcha(self, sitekey, url):
        # start the 2CAPTCHA instance
        captcha2_api_key = "<YOUR_2CAPTCHA_API_KEY>"
        solver = TwoCaptcha(captcha2_api_key)

        try:
            # resolve the CAPTCHA
            result = solver.recaptcha(sitekey=sitekey, url=url)
            if result:
                print(f"Solved: {result}")
                return result["code"]
            else:
                print("CAPTCHA solving failed")
                return None
        except Exception as e:
            print(e)
            return None

Next, write another method that calls the solver. It passes the site key and the response URL to solve_with_2captcha() and, if solving succeeds, runs the scraping logic.

class TutorialSpider(scrapy.Spider):
    # ...

    def solve_captcha(self, response):
        # specify the reCAPTCHA site key; replace it with your target's site key
        captcha_sitekey = "6LfD3PIbAAAAAJs_eEHvoOl75_83eXSqpPSRFJ_u"

        # call the CAPTCHA solving method
        captcha_solved = self.solve_with_2captcha(captcha_sitekey, response.url)

        # check if the CAPTCHA is solved and proceed with scraping
        if captcha_solved:
            print("CAPTCHA solved successfully")

            # extract elements after solving the CAPTCHA successfully
            element = response.css("title::text").get()
            print("Scraped element:", element)

Finally, define the parse method. It requests the page again with the solve_captcha method as the callback:

class TutorialSpider(scrapy.Spider):
    # ...

    def parse(self, response):
        # request the page again with the solver method as the callback
        yield scrapy.Request(url=response.url, callback=self.solve_captcha)

Here's the final code:

# import the required libraries
import scrapy
from twocaptcha import TwoCaptcha


class TutorialSpider(scrapy.Spider):
    name = "scraper"
    start_urls = ["https://2captcha.com/demo/recaptcha-v2-callback"]

    def solve_with_2captcha(self, sitekey, url):
        # start the 2CAPTCHA instance
        solver = TwoCaptcha("<YOUR_2CAPTCHA_API_KEY>")

        try:
            # resolve the CAPTCHA
            result = solver.recaptcha(sitekey=sitekey, url=url)
            if result:
                print(f"Solved: {result}")
                return result["code"]
            else:
                print("CAPTCHA solving failed")
                return None
        except Exception as e:
            print(e)
            return None

    def solve_captcha(self, response):
        # specify the reCAPTCHA site key
        captcha_sitekey = "6LfD3PIbAAAAAJs_eEHvoOl75_83eXSqpPSRFJ_u"

        # call the CAPTCHA solving method
        captcha_solved = self.solve_with_2captcha(captcha_sitekey, response.url)

        # check if the CAPTCHA is solved and proceed with scraping
        if captcha_solved:
            print("CAPTCHA solved successfully")

            # extract elements after solving the CAPTCHA successfully
            element = response.css("title::text").get()
            print("Scraped element:", element)

    def parse(self, response):
        # request the page again with the solver method as the callback
        yield scrapy.Request(url=response.url, callback=self.solve_captcha)

The code solves the reCAPTCHA successfully and prints the solved token, as shown:

Solved: {
    'captchaId': '75653786097',
    'code': '03AFcWeA7Ap7jFxiBmNjBbwiHSGjMCD_oP3Ae8cUxzdtqJnNkj4XnuUJOUFRfUkkjU_GPCXwqHYYFCynXdrQhAQce-F...'
}
CAPTCHA solved successfully
Scraped element: How to solve reCAPTCHA V2 Callback on PHP, Java, Python, Go, Csharp, CPP

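That's it! You just solved a CAPTCHA with 2CAPTCHA. However, keep in mind that 2CAPTCHA doesn't solve every CAPTCHA type and can be expensive for large-scale projects. It's also slow inside Scrapy: solver.recaptcha() blocks until a token comes back, and because it runs in a spider callback, the rest of the crawl waits with it.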
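Solving the CAPTCHA only returns a token; the spider above then reads the title from the page as it was first loaded. On a real CAPTCHA wall, you'd typically have to submit that token back to the site before the protected content appears. The sketch below shows one common pattern, assuming the page uses a standard reCAPTCHA v2 form: the token goes in the g-recaptcha-response field of a POST request. It only builds the request with Python's urllib.request (nothing is sent), and the form URL and fields are hypothetical placeholders.

```python
from urllib.parse import urlencode, parse_qs
from urllib.request import Request


def build_token_submission(action_url, form_fields, token):
    """Build (but don't send) a POST request carrying a solved reCAPTCHA v2 token.

    Assumes the page posts a regular HTML form; reCAPTCHA v2 puts the token
    in a field named g-recaptcha-response.
    """
    fields = dict(form_fields)  # copy so the caller's dict is untouched
    fields["g-recaptcha-response"] = token
    body = urlencode(fields).encode("utf-8")
    return Request(
        action_url,
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


# hypothetical form action and fields, for illustration only
req = build_token_submission(
    "https://example.com/submit",
    {"username": "demo"},
    "03AFcWeA7...",  # stands in for the token from solver.recaptcha()["code"]
)
print(req.get_method(), req.full_url)
print(parse_qs(req.data.decode("utf-8")))
```

Inside a spider, scrapy.FormRequest.from_response(response, formdata={"g-recaptcha-response": token}) does the same job and also copies the page's existing form fields for you. The demo page used above is the callback variant of reCAPTCHA v2, which hands the token to a JavaScript function, so check how your target site consumes the token before relying on a form POST.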

Method #3: Rotate Premium Proxies

Proxy rotation can help you avoid CAPTCHAs, but it's less effective than the previous two methods. Some websites limit the number of requests each IP address can send and serve a CAPTCHA to addresses that exceed that limit.

Rotating proxies masks your real IP address and spreads your requests across many addresses, so no single IP hits the limit. This reduces CAPTCHA challenges and interruptions caused by IP bans, though it won't defeat anti-bot checks that look beyond the IP address.

Use premium proxies when dealing with CAPTCHAs: free proxies are shared, unreliable, and often already flagged, so they usually don't work. Many premium providers offer proxies suited to CAPTCHA-protected sites.

You can use proxies with Scrapy and rotate them. Check our full tutorial on using proxies with Scrapy to learn more.
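As a rough illustration, here's a minimal rotation sketch, assuming you have a list of premium proxy URLs (the endpoints below are placeholders, not real services). It cycles through the list so consecutive requests leave through different IPs, which keeps each IP under a per-IP rate limit. It's plain Python so it runs without Scrapy; the comment in proxy_meta marks where Scrapy picks the proxy up.

```python
import itertools

# hypothetical premium proxy endpoints; replace with your provider's URLs
PROXIES = [
    "http://user:pass@proxy1.example.com:8000",
    "http://user:pass@proxy2.example.com:8000",
    "http://user:pass@proxy3.example.com:8000",
]


def round_robin(proxies):
    """Return an endless iterator that hands out proxies in a fixed rotation."""
    return itertools.cycle(proxies)


def proxy_meta(proxy):
    # Scrapy's built-in HttpProxyMiddleware reads the proxy from request.meta["proxy"]
    return {"proxy": proxy}


rotation = round_robin(PROXIES)
urls = [f"https://example.com/page/{n}" for n in range(1, 7)]

# assign the next proxy in the rotation to each URL
assignments = [(url, proxy_meta(next(rotation))) for url in urls]
for url, meta in assignments:
    print(url, "->", meta["proxy"])
```

In a spider, you'd pass the dict as the meta argument, for example scrapy.Request(url, meta=proxy_meta(next(rotation)), callback=self.parse). Round-robin is the simplest policy; picking proxies at random or retiring ones that start returning CAPTCHAs are common alternatives.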

Conclusion

This article has highlighted the various techniques of bypassing CAPTCHAs in Scrapy. You've learned to achieve this with a web scraping API, a CAPTCHA-solving service, and premium proxy rotation.

As mentioned, the best of the three is to use web scraping APIs, and ZenRows comes on top. ZenRows is an all-in-one web scraping solution for bypassing CAPTCHAs and other anti-bot systems. Try ZenRows for free!
