Your headers can either get you blocked or give you safe passage while web scraping with Scrapy. If your Scrapy spider keeps getting blocked, its default headers are a likely culprit and need fixing.

In this tutorial, you'll learn why HTTP headers matter in web scraping and how to customize them in Scrapy. Here's what you'll cover:

- Why are headers so important?

- How to set custom headers in Scrapy.

- Most important headers for web scraping.

- Easy middleware to fix Scrapy headers.

Why Are Scrapy Headers Important?

HTTP headers are pieces of information included in the requests and responses exchanged between a browser (or any other client) and a server. They determine how the server handles a request and what type of response it returns.

There are two types of HTTP headers: request headers and response headers. This tutorial focuses on request headers because they're the most relevant to web scraping.

Scrapy sends its own default request headers with every request, and you can replace them with custom ones to make your requests look more like a real browser's.

For instance, setting and rotating a genuine User-Agent header can help you avoid blocks from anti-bot systems like Cloudflare. Another common use of Scrapy headers is customizing cookies to manage sessions and scrape behind a login.

Add the code below to your spider file to send a request to https://httpbin.io/headers, which echoes back the headers it receives. Then, observe the default headers that Scrapy sends.

```python
# import the required library
import scrapy


class TutorialSpider(scrapy.Spider):
    # set the spider name
    name = "tutorial"

    # specify the target URL
    allowed_domains = ["httpbin.io"]
    start_urls = ["https://httpbin.io/headers"]

    # parse the response (httpbin.io returns JSON)
    def parse(self, response):
        # print the response text
        print(response.text)
```

See the result below:

```json
{
  "headers": {
    "Accept": [
      "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8"
    ],
    "Accept-Encoding": [
      "gzip, deflate"
    ],
    "Accept-Language": [
      "en"
    ],
    "Host": [
      "httpbin.io"
    ],
    "User-Agent": [
      "Scrapy/2.11.0 (+https://scrapy.org)"
    ]
  }
}
```

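Now, visit the endpoint in a real browser to see the headers a site expects. Here's what they look like in Chrome (this capture was taken on httpbin.org, which returns plain string values instead of lists and also reports an X-Amzn-Trace-Id header):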

```json
{
  "headers": {
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7",
    "Accept-Encoding": "gzip, deflate, br",
    "Accept-Language": "en-US,en;q=0.9",
    "Host": "httpbin.org",
    "Referer": "https://www.google.com/",
    "Sec-Ch-Ua": "\"Not_A Brand\";v=\"8\", \"Chromium\";v=\"120\", \"Google Chrome\";v=\"120\"",
    "Sec-Ch-Ua-Mobile": "?0",
    "Sec-Ch-Ua-Platform": "\"Windows\"",
    "Sec-Fetch-Dest": "document",
    "Sec-Fetch-Mode": "navigate",
    "Sec-Fetch-Site": "cross-site",
    "Upgrade-Insecure-Requests": "1",
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "X-Amzn-Trace-Id": "Root=1-65b28b96-037321352b0637b429c9b697"
  }
}
```

Compare both results, and you'll see that Scrapy's default request headers are missing some vital information.

For instance, you need to change Scrapy's default User-Agent header because it tells the server that the request comes from an automated program (Scrapy). Typical browser headers such as Referer and the Sec-Ch-Ua client hint, which describes the browser brand and version, are also missing.

The Accept-Language header is also less specific than a browser's: Scrapy sends only en, whereas Chrome sends en-US,en;q=0.9, which lists the regional variant first and gives plain en a lower priority. All these differences can get you blocked if you don't fix them.
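Eyeballing two JSON dumps gets tedious, so here's a minimal, stdlib-only sketch that flattens httpbin.io's list-valued headers and diffs them against a browser's. The helper names (flatten_headers, diff_headers) are hypothetical and not part of Scrapy, and the sample data is an abridged copy of the two outputs above.

```python
import json

# Abridged copy of the Scrapy output above (httpbin.io wraps each value in a list).
SCRAPY_BODY = """{"headers": {
  "Accept": ["text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8"],
  "Accept-Encoding": ["gzip, deflate"],
  "Accept-Language": ["en"],
  "Host": ["httpbin.io"],
  "User-Agent": ["Scrapy/2.11.0 (+https://scrapy.org)"]
}}"""

# A subset of the Chrome headers shown above (plain string values).
CHROME_HEADERS = {
    "Accept-Language": "en-US,en;q=0.9",
    "Referer": "https://www.google.com/",
    "Sec-Ch-Ua-Platform": "\"Windows\"",
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
}


def flatten_headers(headers):
    """Turn list-valued headers (httpbin.io style) into plain strings."""
    return {
        name: ", ".join(value) if isinstance(value, list) else value
        for name, value in headers.items()
    }


def diff_headers(sent, reference):
    """Return (missing, different): reference headers absent from `sent`, and
    reference headers whose value differs. Names are compared case-insensitively."""
    sent_lower = {name.lower(): value for name, value in sent.items()}
    missing, different = [], []
    for name, value in reference.items():
        if name.lower() not in sent_lower:
            missing.append(name)
        elif sent_lower[name.lower()] != value:
            different.append(name)
    return missing, different


sent = flatten_headers(json.loads(SCRAPY_BODY)["headers"])
missing, different = diff_headers(sent, CHROME_HEADERS)
print("Missing:", missing)
print("Different:", different)
```

Inside a spider's parse method, you could pass json.loads(response.text)["headers"] to flatten_headers instead of the hard-coded sample.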

You'll learn how to fix them in the next section.

How to Set Up Custom Headers in Scrapy

Customizing the Scrapy headers is straightforward. Let's see how to achieve it.

Add Headers with Scrapy

You can override Scrapy's default request headers from your project's settings file. To do that, create a DEFAULT_REQUEST_HEADERS dictionary with your custom headers and add it to settings.py like so:

```python
# settings.py
DEFAULT_REQUEST_HEADERS = {
    "Accept-Language": "en-US,en;q=0.9",
    "Referer": "https://www.google.com/",
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7",
    "Accept-Encoding": "gzip, deflate, br",
    "Sec-Ch-Ua": "\"Not A(Brand\";v=\"99\", \"Google Chrome\";v=\"121\", \"Chromium\";v=\"121\"",
    "Sec-Ch-Ua-Platform": "\"Windows\"",
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/121.0.0.0 Safari/537.36",
}
```

Next, add https://httpbin.io/headers as a start URL inside the spider class in your spider file (scraper.py in this case). This test endpoint returns the headers sent with each request.

```python
# import the required library
import scrapy


class TutorialSpider(scrapy.Spider):
    # set the spider name
    name = "tutorial"

    # specify the target URL
    allowed_domains = ["httpbin.io"]
    start_urls = ["https://httpbin.io/headers"]

    # parse the response (httpbin.io returns JSON)
    def parse(self, response):
        print(response.text)
```

Run the spider from your project directory with the scrapy crawl tutorial command. Scrapy loads settings.py, so the output shows the new headers:

```json
{
  "headers": {
    "Accept": [
      "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7"
    ],
    "Accept-Encoding": [
      "gzip, deflate, br"
    ],
    "Accept-Language": [
      "en-US,en;q=0.9"
    ],
    "Host": [
      "httpbin.io"
    ],
    "Referer": [
      "https://www.google.com/"
    ],
    "Sec-Ch-Ua": [
      "\"Not A(Brand\";v=\"99\", \"Google Chrome\";v=\"121\", \"Chromium\";v=\"121\""
    ],
    "Sec-Ch-Ua-Platform": [
      "\"Windows\""
    ],
    "User-Agent": [
      "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/121.0.0.0 Safari/537.36"
    ]
  }
}
```

Congratulations! You've just replaced Scrapy's default headers with custom ones. But what if you want to change only a few of these headers and keep the rest?

Edit Header Values

Often, you'll want to change only specific headers and keep the others. To do that, override the start_requests method in your spider file (scraper.py) and pass just those headers to scrapy.Request through its headers argument. Headers set on a request take precedence over DEFAULT_REQUEST_HEADERS, which still fill in everything else.

The code below overrides the User-Agent and Sec-Ch-Ua-Platform headers without modifying the others.

```python
# import the required library
import scrapy


class TutorialSpider(scrapy.Spider):
    # set the spider name
    name = "tutorial"

    # specify the target URL
    allowed_domains = ["httpbin.io"]
    start_urls = ["https://httpbin.io/headers"]

    def start_requests(self):
        # alter the user agent's platform with new custom headers
        custom_headers = {
            "Sec-Ch-Ua-Platform": "\"Linux\"",
            "User-Agent": "Mozilla/5.0 (Linux; x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/121.0.0.0 Safari/537.36",
        }

        for url in self.start_urls:
            yield scrapy.Request(url, headers=custom_headers, callback=self.parse)

    # parse the response HTML
    def parse(self, response):
        print(response.text)
```

This preserves the other headers but changes the User Agent's platform to Linux. See the output below:

```json
{
  "headers": {
    "Accept": [
      "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7"
    ],
    "Accept-Encoding": [
      "gzip, deflate, br"
    ],
    "Accept-Language": [
      "en-US,en;q=0.9"
    ],
    "Host": [
      "httpbin.io"
    ],
    "Referer": [
      "https://www.google.com/"
    ],
    "Sec-Ch-Ua": [
      "\"Not A(Brand\";v=\"99\", \"Google Chrome\";v=\"121\", \"Chromium\";v=\"121\""
    ],
    "Sec-Ch-Ua-Platform": [
      "\"Linux\""
    ],
    "User-Agent": [
      "Mozilla/5.0 (Linux; x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/121.0.0.0 Safari/537.36"
    ]
  }
}
```
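Why do the other headers survive? Scrapy's built-in DefaultHeadersMiddleware applies DEFAULT_REQUEST_HEADERS with setdefault, so it only fills in headers a request doesn't already carry. The sketch below models that merge with plain dicts. apply_default_headers is a hypothetical helper for illustration, and unlike Scrapy's Headers class, these dicts compare header names case-sensitively.

```python
# Abridged defaults from the settings.py example above.
SETTINGS_DEFAULTS = {
    "Accept-Language": "en-US,en;q=0.9",
    "Referer": "https://www.google.com/",
    "Sec-Ch-Ua-Platform": "\"Windows\"",
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/121.0.0.0 Safari/537.36",
}

# Headers passed to scrapy.Request in start_requests.
CUSTOM_HEADERS = {
    "Sec-Ch-Ua-Platform": "\"Linux\"",
    "User-Agent": "Mozilla/5.0 (Linux; x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/121.0.0.0 Safari/537.36",
}


def apply_default_headers(request_headers, default_headers):
    """Simplified model of DefaultHeadersMiddleware: each default is applied
    with setdefault(), so a header already on the request is never replaced."""
    merged = dict(request_headers)  # copy so the input dict isn't mutated
    for name, value in default_headers.items():
        merged.setdefault(name, value)
    return merged


final = apply_default_headers(CUSTOM_HEADERS, SETTINGS_DEFAULTS)
for name, value in final.items():
    print(f"{name}: {value}")
```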

You now know how to edit specific headers in Scrapy. That's great!

Most Important Headers for Web Scraping

Some headers are more relevant than others during web scraping, and you should include the most vital ones in your Scrapy HTTP request headers. Let's see the common web scraping headers and what they mean.

User-Agent

The User-Agent header describes the client's browser environment, including the browser name and version, operating system, and rendering engine. A missing, outdated, or bot-like User Agent (such as Scrapy's default) can mark you as a bot and get you blocked.

You can modify the Scrapy User Agent with the following code:

```python
# import the required library
import scrapy


class TutorialSpider(scrapy.Spider):
    # set the spider name
    name = "tutorial"

    # specify the target URL
    allowed_domains = ["httpbin.io"]
    start_urls = ["https://httpbin.io/headers"]

    def start_requests(self):
        # alter the user agent's platform with new custom headers
        custom_headers = {
            "Sec-Ch-Ua-Platform": "\"Linux\"",
            "User-Agent": "Mozilla/5.0 (Linux; x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/121.0.0.0 Safari/537.36",
        }

        for url in self.start_urls:
            yield scrapy.Request(url, headers=custom_headers, callback=self.parse)

    # parse the response HTML
    def parse(self, response):
        print(response.text)
```

Check out our guide on changing the User Agent in Scrapy to learn more.
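A single User-Agent still sends the same fingerprint on every request, so a common next step is rotating it from a downloader middleware. The sketch below assumes a hypothetical RotateHeaderProfileMiddleware class and a small HEADER_PROFILES list built from the values used earlier in this tutorial. It rotates User-Agent together with Sec-Ch-Ua-Platform so the two never contradict each other. It doesn't need to import Scrapy, since Scrapy only calls its process_request(request, spider) method.

```python
import random

# Hypothetical profiles built from this tutorial's values. Each one keeps the
# User-Agent and Sec-Ch-Ua-Platform headers consistent with each other.
HEADER_PROFILES = [
    {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/121.0.0.0 Safari/537.36",
        "Sec-Ch-Ua-Platform": "\"Windows\"",
    },
    {
        "User-Agent": "Mozilla/5.0 (Linux; x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/121.0.0.0 Safari/537.36",
        "Sec-Ch-Ua-Platform": "\"Linux\"",
    },
]


class RotateHeaderProfileMiddleware:
    """Downloader middleware sketch: picks a random profile for each request
    and writes its headers onto the outgoing request."""

    def __init__(self, profiles=None, rng=None):
        self.profiles = profiles or HEADER_PROFILES
        self.rng = rng or random.Random()

    def process_request(self, request, spider):
        profile = self.rng.choice(self.profiles)
        for name, value in profile.items():
            # Plain assignment overrides any value set earlier
            # (settings, per-request headers, or UserAgentMiddleware).
            request.headers[name] = value
        return None  # let Scrapy continue handling the request
```

To enable it, save the class in your project's middlewares.py and register it in settings.py under DOWNLOADER_MIDDLEWARES, for example "myproject.middlewares.RotateHeaderProfileMiddleware": 543, where myproject is a placeholder for your project's package. Because it assigns headers unconditionally, it overrides any User-Agent set in settings or on individual requests.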

Referer

The Referer header is another important header. It tells the server the URL of the page that linked the user to the current page. For example, setting the Referer header to https://www.google.com/ suggests that the user arrived at the current page from Google.
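If you'd rather not hard-code Referer in DEFAULT_REQUEST_HEADERS, one option is a small downloader middleware that adds a fallback Referer only when a request has none. This is a sketch, and DefaultRefererMiddleware is a hypothetical name. Because it uses setdefault, it keeps any Referer set on an individual request, as well as the ones Scrapy's built-in RefererMiddleware adds to follow-up requests based on the page that produced them. If you already set Referer in DEFAULT_REQUEST_HEADERS, you don't need it.

```python
class DefaultRefererMiddleware:
    """Downloader middleware sketch: adds a fallback Referer header only to
    requests that don't already have one."""

    def __init__(self, referer="https://www.google.com/"):
        self.referer = referer

    def process_request(self, request, spider):
        # setdefault() leaves an existing Referer untouched, whether it came
        # from scrapy.Request(headers=...) or from Scrapy's RefererMiddleware.
        request.headers.setdefault("Referer", self.referer)
        return None  # continue processing the request normally
```

You'd register it under DOWNLOADER_MIDDLEWARES in settings.py, the same way as any other custom downloader middleware.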

Cookie

The Cookie header sends the cookies a website has previously set for the user, which usually include session details such as a session ID. Cookies are relevant in web scraping when you need to maintain a real user's session, for example, to scrape pages behind a login.
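In Scrapy, you usually pass cookies as a dict through the cookies argument of scrapy.Request, and the built-in CookiesMiddleware (enabled by default) sends them and keeps track of cookies the server sets afterward. If you've copied a raw Cookie header from your browser's DevTools, a small helper like the hypothetical parse_cookie_header below can turn it into that dict. The cookie names and values in the example are made up.

```python
def parse_cookie_header(raw_cookie):
    """Convert a raw Cookie header string (name=value pairs separated by ';')
    into a dict suitable for scrapy.Request(cookies=...)."""
    cookies = {}
    for pair in raw_cookie.split(";"):
        # partition() splits on the first '=', so values containing '=' survive
        name, sep, value = pair.strip().partition("=")
        if sep and name:  # skip empty or malformed fragments
            cookies[name] = value
    return cookies


# Hypothetical session cookies, for illustration only.
raw = "sessionid=abc123; theme=dark; csrftoken=x9y8z7"
print(parse_cookie_header(raw))

# In a spider, you could then write:
# yield scrapy.Request(url, cookies=parse_cookie_header(raw), callback=self.parse)
```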

Other Relevant Headers

Other relevant headers in web scraping include Accept-Language, Sec-Ch-Ua, Accept-Encoding, and Accept. Including them in your Scrapy headers, with values that match a real browser's, can reduce your chances of getting detected by anti-bots.
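Since inaccurate header values can give you away, it's worth sanity-checking a header set before using it. The sketch below is a hypothetical check_headers helper, not a Scrapy feature. It flags any of the headers above (plus User-Agent and Referer) that are missing, and a mismatch between the Chrome major version in User-Agent and in Sec-Ch-Ua. It only understands Chrome-style values.

```python
import re

# Headers this tutorial recommends sending.
RECOMMENDED = ["User-Agent", "Referer", "Accept", "Accept-Language",
               "Accept-Encoding", "Sec-Ch-Ua"]


def check_headers(headers):
    """Return a list of human-readable problems found in a header dict."""
    lower = {name.lower(): value for name, value in headers.items()}
    problems = [f"missing {name}" for name in RECOMMENDED if name.lower() not in lower]

    # Compare the Chrome major version advertised by User-Agent and Sec-Ch-Ua.
    ua_match = re.search(r"Chrome/(\d+)", lower.get("user-agent", ""))
    ch_match = re.search(r'"Google Chrome";v="(\d+)"', lower.get("sec-ch-ua", ""))
    if ua_match and ch_match and ua_match.group(1) != ch_match.group(1):
        problems.append(
            f"User-Agent says Chrome {ua_match.group(1)} "
            f"but Sec-Ch-Ua says {ch_match.group(1)}"
        )
    return problems


# Scrapy's default headers from the first example.
print(check_headers({
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
    "Accept-Encoding": "gzip, deflate",
    "Accept-Language": "en",
    "User-Agent": "Scrapy/2.11.0 (+https://scrapy.org)",
}))
```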

Easy Middleware to Fix Scrapy Headers

Customizing the headers can take time and may introduce inaccuracies that can get you blocked.

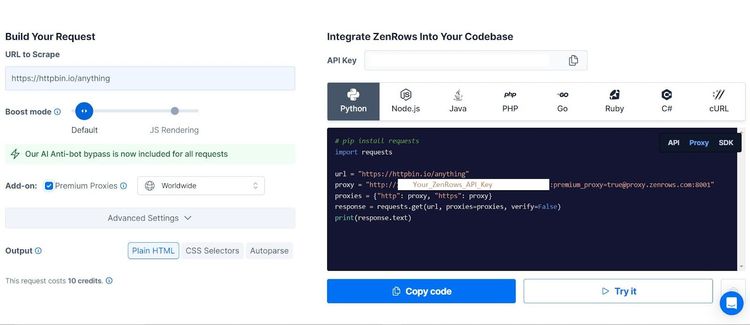

ZenRows is a tool that integrates with Scrapy and automatically sets the extra headers your scraper needs. It auto-sets and auto-rotates the User Agent and offers features like IP rotation and anti-CAPTCHA so you can scrape without getting blocked.

To try ZenRows, sign up for free, and you'll get your API key and 1000 free credits.

Now, send a request with the following code. Replace YOUR_ZENROWS_API_KEY with your API key.

```python
# import the required library
import scrapy

# replace this placeholder with your ZenRows API key
ZENROWS_API_KEY = "YOUR_ZENROWS_API_KEY"


class TutorialSpider(scrapy.Spider):
    # set the spider name
    name = "tutorial"

    # specify the target URL
    allowed_domains = ["httpbin.io"]
    start_urls = ["https://httpbin.io/headers"]

    def start_requests(self):
        # route each request through the ZenRows proxy
        for url in self.start_urls:
            yield scrapy.Request(
                url=url,
                callback=self.parse,
                meta={"proxy": f"http://{ZENROWS_API_KEY}:@proxy.zenrows.com:8001"},
            )

    # parse the response HTML
    def parse(self, response):
        print(response.text)
```

The output now contains more headers, and some header values have changed. For instance, the User-Agent and Sec-Ch-Ua-Platform values now differ from the ones you set in the settings file, showing that ZenRows is managing the headers for you.

See the output below:

```json
{
  "headers": {
    #... other headers omitted for brevity
    "Sec-Ch-Ua": [
      "\"Not_A Brand\";v=\"8\", \"Chromium\";v=\"120\", \"Google Chrome\";v=\"120\""
    ],
    "Sec-Ch-Ua-Mobile": [
      "?0"
    ],
    "Sec-Ch-Ua-Platform": [
      "\"macOS\""
    ],
    "Sec-Fetch-Dest": [
      "document"
    ],
    "Sec-Fetch-Mode": [
      "navigate"
    ],
    "Sec-Fetch-Site": [
      "none"
    ],
    "Sec-Fetch-User": [
      "?1"
    ],
    "Upgrade-Insecure-Requests": [
      "1"
    ],
    "User-Agent": [
      "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
    ]
  }
}
```

You've now handed header customization and anti-bot bypass over to ZenRows. Bravo!
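One practical refinement: keep your API key out of the spider's source code. This sketch reads it from an environment variable. The variable name ZENROWS_API_KEY and the zenrows_proxy_url helper are assumptions for illustration, and the URL format is the one used in the spider above.

```python
import os


def zenrows_proxy_url(env_var="ZENROWS_API_KEY"):
    """Build the ZenRows proxy URL from an API key stored in an environment
    variable, so the key isn't hard-coded in the spider."""
    api_key = os.environ.get(env_var)
    if not api_key:
        raise RuntimeError(f"Set the {env_var} environment variable first")
    return f"http://{api_key}:@proxy.zenrows.com:8001"
```

In start_requests, you'd then pass meta={"proxy": zenrows_proxy_url()} instead of building the URL from a hard-coded key.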

Conclusion

In this article, you've seen the importance of HTTP headers and learned how to customize the Scrapy HTTP request headers for web scraping.

You now know:

- How to override Scrapy's default headers from the settings.py file.

- How to edit specific HTTP headers by passing them to scrapy.Request in your spider's start_requests method.

- The most relevant HTTP headers for web scraping.

You're now ready to handle Scrapy headers with confidence, so feel free to experiment with more examples. Customizing request headers reduces your chances of getting blocked, but on its own it often isn't enough against advanced anti-bot systems. To get past more sophisticated blocks, integrate your Scrapy scraper with ZenRows.

Did you find the content helpful? Spread the word and share it on Twitter or LinkedIn.