Does your IP address get banned during scraping? Routing Selenium through a proxy masks your IP address and reduces the chance of anti-bot systems blocking you.

In this tutorial, you'll learn how to set a proxy using Selenium in PHP.

How to Use a Proxy in Selenium With PHP?

Setting up a proxy while scraping with PHP is straightforward and usually depends on the proxy type. You'll learn the various methods of configuring proxies in this section.

Check out our tutorial about setting up Selenium in PHP if you’re not familiar with the process.

Before adding a proxy, try viewing your request's current IP address with the following code. It visits https://httpbin.io/ip to output the IP address:

namespace Facebook\WebDriver;

// import the required libraries

use Facebook\WebDriver\Remote\DesiredCapabilities;

use Facebook\WebDriver\Remote\RemoteWebDriver;

use Facebook\WebDriver\Chrome\ChromeOptions;

require_once('vendor/autoload.php');

// specify the URL to the local Selenium Server

$host = 'http://localhost:4444/';

// specify the desired capabilities

$capabilities = DesiredCapabilities::chrome();

$chromeOptions = new ChromeOptions();

// run Chrome in headless mode

$chromeOptions->addArguments(['--headless']); // <- comment out for testing

// register the Chrome options

$capabilities->setCapability(ChromeOptions::CAPABILITY_W3C, $chromeOptions);

// initialize a driver to control a Chrome instance

$driver = RemoteWebDriver::create($host, $capabilities);

// maximize the window

$driver->manage()->window()->maximize();

// open the target page

$driver->get('https://httpbin.io/ip');

// extract the HTML page source to view the current IP

$html = $driver->getPageSource();

echo $html;

// quit the driver and release its resources

$driver->quit();

The code outputs the current IP address like so:

{

"origin": "105.113.16.42:43363"

}
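The page source returned by the browser may wrap the JSON response in HTML tags, so it can help to pull out just the IP address before comparing runs. Below is a minimal sketch; `extractOrigin` is a hypothetical helper name, and the sample string only imitates what the browser might return:

```php
<?php
// hypothetical helper: pull the "origin" field out of the page source
function extractOrigin(string $pageSource): ?string
{
    // drop any HTML tags the browser wrapped around the JSON body
    $text = trim(html_entity_decode(strip_tags($pageSource)));

    // decode the remaining JSON into an associative array
    $data = json_decode($text, true);

    // return null when the response isn't the expected JSON
    return is_array($data) && isset($data['origin']) ? (string) $data['origin'] : null;
}

// sample input that imitates a browser-rendered JSON response (an assumption)
$sample = '<html><body><pre>{"origin": "105.113.16.42:43363"}</pre></body></html>';

echo extractOrigin($sample) . PHP_EOL; // prints 105.113.16.42:43363
```

In the scraper, you would pass the value of `$driver->getPageSource()` to the helper instead of the sample string.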

You'll add a proxy to that request in the next section.

Step 1: Use a Proxy in an HTTP Request

You can add a proxy directly to an HTTP request by specifying your proxy address as a browser capability. You'll use a free proxy from the Free Proxy List to achieve that.

The proxy used here will probably not work at the time of reading since it's free. So, you might need to grab a new one from that website.

First, add the proxy details to Chrome's desired capabilities:

namespace Facebook\WebDriver;

// import the required libraries

use Facebook\WebDriver\Remote\DesiredCapabilities;

use Facebook\WebDriver\Remote\RemoteWebDriver;

use Facebook\WebDriver\Chrome\ChromeOptions;

require_once('vendor/autoload.php');

// specify the URL to the local Selenium Server

$host = 'http://localhost:4444/';

// configure proxy

$proxy = [

'proxyType' => 'manual',

'httpProxy' => 'http://103.25.210.102:347',

'sslProxy' => 'https://103.25.210.102:347',

];

// specify the desired capabilities

$capabilities = DesiredCapabilities::chrome();

$capabilities->setCapability('proxy', $proxy);

Then, start Chrome in headless mode and visit https://httpbin.io/ip to view the current IP address:

// ...

// define the browser options

$chromeOptions = new ChromeOptions();

// run Chrome in headless mode

$chromeOptions->addArguments(['--headless']);

// register the Chrome options

$capabilities->setCapability(ChromeOptions::CAPABILITY_W3C, $chromeOptions);

// initialize a driver to control a Chrome instance

$driver = RemoteWebDriver::create($host, $capabilities);

// maximize the window to avoid responsive rendering

$driver->manage()->window()->maximize();

// open the target page

$driver->get('https://httpbin.io/ip');

// extract the HTML page source and print it

$html = $driver->getPageSource();

echo $html;

// quit the driver and release its resources

$driver->quit();

Your final code should look like this:

namespace Facebook\WebDriver;

// import the required libraries

use Facebook\WebDriver\Remote\DesiredCapabilities;

use Facebook\WebDriver\Remote\RemoteWebDriver;

use Facebook\WebDriver\Chrome\ChromeOptions;

require_once('vendor/autoload.php');

// specify the URL to the local Selenium Server

$host = 'http://localhost:4444/';

// configure proxy

$proxy = [

'proxyType' => 'manual',

'httpProxy' => 'http://103.25.210.102:347',

'sslProxy' => 'https://103.25.210.102:347',

];

// specify the desired capabilities

$capabilities = DesiredCapabilities::chrome();

$capabilities->setCapability('proxy', $proxy);

// define the browser options

$chromeOptions = new ChromeOptions();

// run Chrome in headless mode

$chromeOptions->addArguments(['--headless']);

// register the Chrome options

$capabilities->setCapability(ChromeOptions::CAPABILITY_W3C, $chromeOptions);

// initialize a driver to control a Chrome instance

$driver = RemoteWebDriver::create($host, $capabilities);

// maximize the window to avoid responsive rendering

$driver->manage()->window()->maximize();

// open the target page

$driver->get('https://httpbin.io/ip');

// extract the HTML page source and print it

$html = $driver->getPageSource();

echo $html;

// quit the driver and release its resources

$driver->quit();

The code routes the request through the specified SSL proxy, as shown below:

{

"origin": "103.25.210.102:3348"

}

Good job! You just configured a proxy for Selenium in PHP.
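If you collect proxy addresses from different sources, some may come with a scheme prefix and others as a plain `host:port` pair. The W3C WebDriver specification describes the `httpProxy` and `sslProxy` fields as a host with an optional port, so one option is to normalize each address before building the capability. Here's a minimal sketch; `buildProxyCapability` is a hypothetical helper, not part of php-webdriver:

```php
<?php
// hypothetical helper: build a manual proxy capability from a proxy address
function buildProxyCapability(string $address): array
{
    // strip a leading scheme such as "http://" or "https://", if present
    $hostPort = preg_replace('#^[a-z][a-z0-9+.-]*://#i', '', trim($address));

    // use the same host:port for both plain HTTP and HTTPS traffic
    return [
        'proxyType' => 'manual',
        'httpProxy' => $hostPort,
        'sslProxy' => $hostPort,
    ];
}

print_r(buildProxyCapability('http://103.25.210.102:347'));
```

You could then pass the returned array to `$capabilities->setCapability('proxy', ...)` in place of the hand-written `$proxy` array.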

Proxy Authentication With Selenium PHP

You might need to supply a username and password if the proxy service requires authentication. However, this isn't necessary with free proxies.

Edit the previous code, as shown below, to add authentication credentials to your Selenium proxy. Replace the username and password placeholders with your credentials:

namespace Facebook\WebDriver;

// import the required libraries

use Facebook\WebDriver\Remote\DesiredCapabilities;

use Facebook\WebDriver\Remote\RemoteWebDriver;

use Facebook\WebDriver\Chrome\ChromeOptions;

require_once('vendor/autoload.php');

// specify the URL to the local Selenium Server

$host = 'http://localhost:4444/';

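// configure proxy: replace username, password, <PROXY_HOST>, and <PROXY_PORT> with your proxy details

$proxy = [

'proxyType' => 'manual',

'httpProxy' => 'http://username:password@<PROXY_HOST>:<PROXY_PORT>',

'sslProxy' => 'https://username:password@<PROXY_HOST>:<PROXY_PORT>',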

];

// specify the desired capabilities

$capabilities = DesiredCapabilities::chrome();

$capabilities->setCapability('proxy', $proxy);

// define the browser options

$chromeOptions = new ChromeOptions();

// run Chrome in headless mode

$chromeOptions->addArguments(['--headless']);

// register the Chrome options

$capabilities->setCapability(ChromeOptions::CAPABILITY_W3C, $chromeOptions);

// initialize a driver to control a Chrome instance

$driver = RemoteWebDriver::create($host, $capabilities);

// maximize the window to avoid responsive rendering

$driver->manage()->window()->maximize();

// open the target page

$driver->get('https://httpbin.io/ip');

// extract the HTML page source and print it

$html = $driver->getPageSource();

echo $html;

// quit the driver and release its resources

$driver->quit();

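The above code embeds your authentication credentials in the proxy address so the proxy service can verify them before routing your request through its IP. Whether the credentials are honored depends on the browser and driver: Chrome, for instance, may ignore credentials embedded in a proxy URL. Next, you'll learn how to rotate proxies in PHP with Selenium.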
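Credentials that contain characters such as `@` or `:` can break the proxy address unless they're percent-encoded first. The sketch below shows one way to assemble the address safely; `buildAuthProxyUrl` is a hypothetical helper, and the host, port, and credentials are made-up example values:

```php
<?php
// hypothetical helper: assemble an authenticated proxy address
function buildAuthProxyUrl(string $username, string $password, string $host, int $port): string
{
    // percent-encode the credentials so reserved characters don't break the URL
    return sprintf(
        'http://%s:%s@%s:%d',
        rawurlencode($username),
        rawurlencode($password),
        $host,
        $port
    );
}

// example values only; swap in your provider's details
echo buildAuthProxyUrl('user', 'p@ss:word', 'proxy.example.com', 8080) . PHP_EOL;
// prints http://user:p%40ss%3Aword@proxy.example.com:8080
```

The returned string can go wherever the tutorial uses a proxy address with credentials.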

Step 2: Rotate Proxies in Selenium for PHP

Scraping with only one IP address can result in limitations like an IP ban. Rotating the proxy server can mitigate this by ensuring that Selenium uses a different IP address per request.

First, grab a few proxies from the Free Proxy List and put them in an array. Keep in mind that these proxies may not work at the time of reading since they're free.

namespace Facebook\WebDriver;

// import the required libraries

use Facebook\WebDriver\Remote\DesiredCapabilities;

use Facebook\WebDriver\Remote\RemoteWebDriver;

use Facebook\WebDriver\Chrome\ChromeOptions;

require_once('vendor/autoload.php');

// specify the URL to the local Selenium Server

$host = 'http://localhost:4444/';

// define an array of proxies

$proxies = [

'https://54.234.224.219:80',

'https://173.230.117.77:8000',

'https://35.185.196.38:3128',

];

Initialize a counter to track the proxy index. Then, add the proxies to the desired capabilities and start Chrome in headless mode:

// ...

// initialize a counter to keep track of the current proxy index

$proxyIndex = 0;

// specify the desired capabilities with the first proxy

$proxy = [

'proxyType' => 'manual',

'sslProxy' => $proxies[$proxyIndex],

];

// specify the desired capabilities

$capabilities = DesiredCapabilities::chrome();

$capabilities->setCapability('proxy', $proxy);

// define the browser options

$chromeOptions = new ChromeOptions();

// run Chrome in headless mode

$chromeOptions->addArguments(['--headless']);

Next, create a driver instance, visit the web page, and log its HTML source to view the current IP address. Then, increment the proxy index to change the IP address for each request:

// ...

// register the Chrome options

$capabilities->setCapability(ChromeOptions::CAPABILITY_W3C, $chromeOptions);

// initialize a driver to control a Chrome instance

$driver = RemoteWebDriver::create($host, $capabilities);

// maximize the window to avoid responsive rendering

$driver->manage()->window()->maximize();

// open the target page

$driver->get('https://httpbin.io/ip');

// extract the HTML page source and print it

$html = $driver->getPageSource();

echo $html;

// increment the proxy index to rotate through proxies

$proxyIndex = ($proxyIndex + 1) % count($proxies);

// quit the driver and release its resources

$driver->quit();

See the full code below:

namespace Facebook\WebDriver;

// import the required libraries

use Facebook\WebDriver\Remote\DesiredCapabilities;

use Facebook\WebDriver\Remote\RemoteWebDriver;

use Facebook\WebDriver\Chrome\ChromeOptions;

require_once('vendor/autoload.php');

// specify the URL to the local Selenium Server

$host = 'http://localhost:4444/';

// define an array of proxies

$proxies = [

'https://54.234.224.219:80',

'https://173.230.117.77:8000', // Add more proxies here as needed

'https://35.185.196.38:3128',

];

// initialize a counter to keep track of the current proxy index

$proxyIndex = 0;

// specify the desired capabilities with the first proxy

$proxy = [

'proxyType' => 'manual',

'sslProxy' => $proxies[$proxyIndex],

];

// specify the desired capabilities

$capabilities = DesiredCapabilities::chrome();

$capabilities->setCapability('proxy', $proxy);

// define the browser options

$chromeOptions = new ChromeOptions();

// run Chrome in headless mode

$chromeOptions->addArguments(['--headless']);

// register the Chrome options

$capabilities->setCapability(ChromeOptions::CAPABILITY_W3C, $chromeOptions);

// initialize a driver to control a Chrome instance

$driver = RemoteWebDriver::create($host, $capabilities);

// maximize the window to avoid responsive rendering

$driver->manage()->window()->maximize();

// open the target page

$driver->get('https://httpbin.io/ip');

// extract the HTML page source and print it

$html = $driver->getPageSource();

echo $html;

// increment the proxy index to rotate through proxies

$proxyIndex = ($proxyIndex + 1) % count($proxies);

// quit the driver and release its resources

$driver->quit();

Repeating the request with each proxy in the array in turn outputs a different IP address per request:

{

"origin": "54.234.224.219:80"

}

{

"origin": "173.230.117.77:8000"

}

{

"origin": "35.185.196.38:3128"

}

{

"origin": "54.234.224.219:80"

}
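The proxy is set when the browser session starts, so sending several requests through different proxies means starting a new session per proxy, typically inside a loop. The sketch below isolates that round-robin logic. `fetchWithRotation` is a hypothetical helper, and the callback here is a stand-in so the example runs without a Selenium server:

```php
<?php
/**
 * Hypothetical helper: run $requests fetches, handing each one the next
 * proxy in round-robin order. $fetch receives the proxy address.
 */
function fetchWithRotation(array $proxies, int $requests, callable $fetch): array
{
    if (count($proxies) === 0) {
        throw new InvalidArgumentException('At least one proxy is required.');
    }

    $results = [];

    for ($i = 0; $i < $requests; $i++) {
        // wrap around to the first proxy after the last one
        $proxy = $proxies[$i % count($proxies)];
        $results[] = $fetch($proxy);
    }

    return $results;
}

// stand-in callback (an assumption): a real one would create a new driver
// session with $proxy, load the page, return its source, and quit the driver
$results = fetchWithRotation(
    ['54.234.224.219:80', '173.230.117.77:8000', '35.185.196.38:3128'],
    4,
    function (string $proxy): string {
        return "request sent via $proxy";
    }
);

print_r($results);
```

With three proxies and four requests, the fourth request wraps around to the first proxy again.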

That works! However, this tutorial only used free proxies to show the basics. Rotating free proxies isn't reliable because protected websites detect and block them easily.

For example, try opening a heavily protected website like G2 by rotating free proxies. The request gets blocked by Cloudflare Turnstile.

Using a free proxy increases your chances of getting blocked. A reliable solution is to use a premium proxy, and you'll see how that works in the next section.

Step 3: Get a Residential Proxy to Avoid Getting Blocked

You need dedicated web scraping proxies to make your requests look legitimate and avoid detection. That's where premium proxies come in handy.

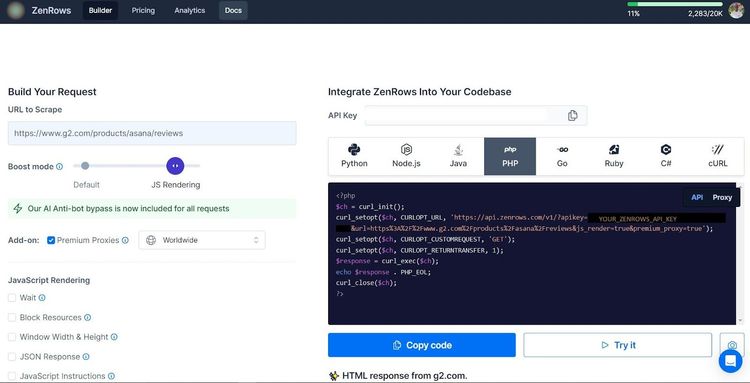

However, a better alternative to Selenium's extra configurations is to use a web scraping API like ZenRows. It automatically rotates premium proxies, configures the request headers, and bypasses any anti-bot without infrastructure overhead.

Try accessing the G2 product page that blocked Selenium earlier with ZenRows.

Sign up for free, and you'll get to the Request Builder. Paste the target URL in the link box, toggle the Boost mode to JS Rendering, and activate Premium proxies. Click PHP and select API as your connection mode.

Copy the generated code and modify it in your scraper like so:

// format the request parameters

$apiUrl = 'https://api.zenrows.com/v1/' .

'?apikey=<YOUR_ZENROWS_API_KEY>' .

'&url=' . urlencode('https://www.g2.com/products/asana/reviews') .

'&js_render=true&premium_proxy=true';

// send the request

$ch = curl_init();

curl_setopt($ch, CURLOPT_URL, $apiUrl);

curl_setopt($ch, CURLOPT_CUSTOMREQUEST, 'GET');

curl_setopt($ch, CURLOPT_RETURNTRANSFER, 1);

// disable SSL verification

curl_setopt($ch, CURLOPT_SSL_VERIFYPEER, false);

$response = curl_exec($ch);

// get the output

if ($response === false) {

echo 'Error: ' . curl_error($ch);

} else {

echo $response . PHP_EOL;

}

curl_close($ch);

The code successfully scrapes the protected web page, including its title:

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8" />

<link href="https://www.g2.com/images/favicon.ico" rel="shortcut icon" type="image/x-icon" />

<title>Asana Reviews 2024</title>

</head>

<body>

<!-- other content omitted for brevity -->

</body>

</html>

You just used ZenRows to scrape a protected website in PHP. Congratulations! You can also apply ZenRows' JavaScript instructions to extract dynamic content and replace Selenium completely.
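If you add more request parameters later, concatenating the query string by hand gets error-prone. One alternative is PHP's built-in `http_build_query`, which encodes each value for you. The sketch below rebuilds the same request URL using the parameters from the code above; the API key remains a placeholder:

```php
<?php
// request parameters from the tutorial; the API key stays a placeholder
$params = [
    'apikey' => '<YOUR_ZENROWS_API_KEY>',
    'url' => 'https://www.g2.com/products/asana/reviews',
    'js_render' => 'true',
    'premium_proxy' => 'true',
];

// http_build_query URL-encodes every value and joins the pairs with "&"
$apiUrl = 'https://api.zenrows.com/v1/?' . http_build_query($params, '', '&');

echo $apiUrl . PHP_EOL;
```

The boolean flags are passed as the strings `'true'` because `http_build_query` would otherwise convert a PHP `true` to `1`.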

Conclusion

This article teaches you three ways of configuring proxies in Selenium with PHP to avoid anti-bot detection. Here's a recap of all you've learned:

- Routing the Selenium HTTP request through a free proxy.

- Authenticating with a proxy service that requires a username and a password.

- Rotating proxies from an array of free proxies.

The overall best option to avoid anti-bot detection is to route your PHP scraper through premium proxies. ZenRows is the most reliable web scraping solution for rotating premium proxies and bypassing blocks, allowing you to scrape any website without limitations. Try ZenRows for free!
