The best library for browser automation in Golang is Chromedp. It provides JavaScript rendering capabilities and allows you to instruct a headless browser to simulate human behavior on dynamic web pages for either web scraping or testing. In this tutorial, you'll first dig into the basics and then explore more advanced interactions through examples.

- Getting started.

- How to use Chromedp in Golang.

- Find web page elements: CSS selectors and XPath in Chromedp.

- Interact with web pages: Scroll, Screenshot, etc.

- Chromedp proxy: an essential tool.

- User Agent in Chromedp proxy: Avoid getting blocked.

- Best way to avoid getting blocked while web scraping.

- Get Docker for Chromedp.

- Login with Chromedp.

Let's dive in!

What Is Chromedp?

Chromedp is a Golang library for controlling Chrome in headless mode through the DevTools Protocol. This browser automation tool is well suited to testing, simulating user interaction, and scraping websites. To learn more, check out our article about headless browser scraping.

Also, Chromedp enables scraping dynamic-content sites where JavaScript execution is crucial. Learn more in our in-depth guide on web scraping in Golang.

Getting Started

Follow this step-by-step section to set up a Chromedp Go environment.

1. Set Up a Go Project

Make sure you have Go on your computer. Otherwise, download the Golang installer, execute it, and follow the installation wizard.

After setting it up, it's time to initialize a Chromedp project. Create a chromedp-project folder and enter it in the terminal:

mkdir chromedp-project

cd chromedp-project

Then, execute the init command to set up a Go module:

go mod init web-scraper

Add a file named scraper.go to the project folder, with the following code. The first line contains the name of the package, then there are the imports. The main() function represents the entry point of any Go program and will contain the scraping logic.

package main

import (

"fmt"

)

func main() {

fmt.Println("Hello, World!")

// scraping logic...

}

You can run the Go script like this:

go run scraper.go

Great! Your Golang project is now ready!

2. Install Chromedp

Install Chromedp and its dependencies:

go get -u github.com/chromedp/chromedp

This command may take some time, so be patient.

All that remains is to import the Go headless browser library and start using it.

3. Import the Headless Browser

Make sure the scraper.go contains the following imports. context, fmt, and log come from the Golang standard library, while the other two imports are for Chromedp.

import (

"context"

"fmt"

"github.com/chromedp/cdproto/cdp"

"github.com/chromedp/chromedp"

"log"

)

Add the following lines to your main() function to initialize a headless browser context. NewContext() creates a Chromedp context that allows you to control a browser instance. Then, cancel() releases the resources allocated for the browser when no longer required.

ctx, cancel := chromedp.NewContext(

context.Background(),

)

defer cancel()

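This is what scraper.go looks like so far. Go rejects unused imports, so the file will compile only after you add the scraping logic that uses fmt, cdp, and log: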

package main

import (

"context"

"fmt"

"github.com/chromedp/cdproto/cdp"

"github.com/chromedp/chromedp"

"log"

)

func main() {

// initialize a controllable Chrome instance

ctx, cancel := chromedp.NewContext(

context.Background(),

)

// to release the browser resources when

// it is no longer needed

defer cancel()

// scraping logic...

}

Time to learn how to scrape data with Chromedp!

How to Use Chromedp in Golang

To take your first steps with Chromedp, we'll use an infinite scrolling demo page as the target URL: https://scrapingclub.com/exercise/list_infinite_scroll/

This page loads new products as the user scrolls down, so it's a perfect example of a dynamic-content page that uses JavaScript for data retrieval.

Let's see Chromedp in action in a basic example. The goal is to visit the target page and extract its raw HTML as a string. Start by declaring a string variable to store the HTML source code of the page:

var html string

Next, use the Run() Chromedp function to instruct a browser to perform specific actions. Also, as the automation process may fail, you should define some error handling logic.

err := chromedp.Run(ctx,

// browser actions...

)

if err != nil {

log.Fatal("Error while performing the automation logic:", err)

}

Navigate() is an action to tell the browser to navigate to the specified URL:

chromedp.Navigate("https://scrapingclub.com/exercise/list_infinite_scroll/")

After that, you should wait some time for the page to render with the Sleep() action:

chromedp.Sleep(2000*time.Millisecond)

Hard waits aren't recommended and you should prefer more consistent waiting strategies instead. But don't worry, you'll soon see how to avoid them.

Finally, execute a custom action function with ActionFunc() to specify the HTML source code extraction logic. This snippet gets the root DOM node on the page and retrieves its outer HTML content:

chromedp.ActionFunc(func(ctx context.Context) error {

rootNode, err := dom.GetDocument().Do(ctx)

if err != nil {

return err

}

html, err = dom.GetOuterHTML().WithNodeID(rootNode.NodeID).Do(ctx)

return err

}),

Putting it all together:

package main

import (

"context"

"fmt"

"github.com/chromedp/cdproto/dom"

"github.com/chromedp/chromedp"

"log"

"time"

)

func main() {

// initialize a controllable Chrome instance

ctx, cancel := chromedp.NewContext(

context.Background(),

)

// to release the browser resources when

// it is no longer needed

defer cancel()

var html string

err := chromedp.Run(ctx,

// visit the target page

chromedp.Navigate("https://scrapingclub.com/exercise/list_infinite_scroll/"),

// wait for the page to load

chromedp.Sleep(2000*time.Millisecond),

// extract the raw HTML from the page

chromedp.ActionFunc(func(ctx context.Context) error {

// select the root node on the page

rootNode, err := dom.GetDocument().Do(ctx)

if err != nil {

return err

}

html, err = dom.GetOuterHTML().WithNodeID(rootNode.NodeID).Do(ctx)

return err

}),

)

if err != nil {

log.Fatal("Error while performing the automation logic:", err)

}

fmt.Println(html)

}

Verify that it works as expected:

go run scraper.go

And you'll see this output in the terminal:

<!DOCTYPE html><html class="h-full"><head>

<meta charset="utf-8">

<meta name="viewport" content="width=device-width, initial-scale=1">

<meta name="description" content="Learn to scrape infinite scrolling pages"><title>Scraping Infinite Scrolling Pages (Ajax) | ScrapingClub</title>

// omitted for brevity

Great! That's the HTML code of the destination page.

Real-world scrapers select elements and extract data from them, instead of dealing with raw HTML. So, let's see how to do that next!

How to Find Web Page Elements: CSS Selectors and XPath in Chromedp

Assume your scraping goal is to collect the name and price of each product on the page. To achieve that, you must select the product HTML elements, and then extract the desired data from them.

Selecting the HTML elements is about applying a DOM selector strategy. That typically involves an expression written in XPath or as a CSS selector, two languages to find elements in the DOM.

CSS selectors are pretty straightforward, but XPath expressions can be tricky. For example, `.` selects the current node, `..` selects the parent of the current node, and `@` points to a specific attribute. Learn more in our complete guide on XPath for web scraping.

Now, follow the instructions below to see how to extract product names and prices from the target page.

Create a global type to store the scraped data:

type Product struct {

name, price string

}

In main(), declare a slice of type Product. By the end of the script, it will hold the scraped data.

var products []Product

Use the Nodes() action to select the product HTML elements. Then, ByQueryAll instructs Chromedp to select all DOM elements that match the CSS selector passed as the first argument. Use BySearch instead for XPath expressions.

var productNodes []*cdp.Node

err := chromedp.Run(ctx,

// navigate action...

chromedp.Nodes(".post", &productNodes, chromedp.ByQueryAll),

)

if err != nil {

log.Fatal("Error:", err)

}

Once you have the product nodes, iterate over them and apply the data extraction logic. Text() is an action that extracts the text of the first element node matching the input selector, and FromNode() restricts the query to the subtree of the given node.

var name, price string

for _, node := range productNodes {

err = chromedp.Run(ctx,

chromedp.Text("h4", &name, chromedp.ByQuery, chromedp.FromNode(node)),

chromedp.Text("h5", &price, chromedp.ByQuery, chromedp.FromNode(node)),

)

if err != nil {

log.Fatal("Error:", err)

}

product := Product{}

product.name = name

product.price = price

products = append(products, product)

}

This is what the complete scraper.go looks like so far:

package main

import (

"context"

"fmt"

"github.com/chromedp/cdproto/cdp"

"github.com/chromedp/chromedp"

"log"

)

// Product data structure to store the scraped data

type Product struct {

name, price string

}

func main() {

// to keep track of all the scraped objects

var products []Product

// initialize a controllable Chrome instance

ctx, cancel := chromedp.NewContext(

context.Background(),

)

// to release the browser resources when

// it is no longer needed

defer cancel()

// browser automation logic

var productNodes []*cdp.Node

err := chromedp.Run(ctx,

chromedp.Navigate("https://scrapingclub.com/exercise/list_infinite_scroll/"),

chromedp.Nodes(".post", &productNodes, chromedp.ByQueryAll),

)

if err != nil {

log.Fatal("Error:", err)

}

// scraping logic

var name, price string

for _, node := range productNodes {

// extract data from the product HTML node

err = chromedp.Run(ctx,

chromedp.Text("h4", &name, chromedp.ByQuery, chromedp.FromNode(node)),

chromedp.Text("h5", &price, chromedp.ByQuery, chromedp.FromNode(node)),

)

if err != nil {

log.Fatal("Error:", err)

}

// initialize a new product instance

// with scraped data

product := Product{}

product.name = name

product.price = price

products = append(products, product)

}

fmt.Println(products)

}

Run it, and the output below will print in the terminal:

[{Short Dress $24.99} {Patterned Slacks $29.99} {Short Chiffon Dress $49.99} {Off-the-shoulder Dress $59.99} {V-neck Top $24.99} {Short Chiffon Dress $49.99} {V-neck Top $24.99} {V-neck Top $24.99} {Short Lace Dress $59.99} {Fitted Dress $34.99}]
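The scraped prices are plain strings such as $24.99. If you need them as numbers, for example to sort or filter products, a minimal sketch could look like the one below. The parsePrice helper is a hypothetical name introduced here for illustration, and it assumes every price uses a leading dollar sign as in the output above.

```go
package main

import (
	"fmt"
	"log"
	"strconv"
	"strings"
)

// parsePrice is a hypothetical helper (not part of Chromedp) that converts
// a scraped price string such as "$24.99" into a float64.
// Assumption: prices carry a leading "$" and use "." as the decimal separator.
func parsePrice(price string) (float64, error) {
	cleaned := strings.TrimPrefix(strings.TrimSpace(price), "$")
	return strconv.ParseFloat(cleaned, 64)
}

func main() {
	// sample values taken from the scraper output
	for _, price := range []string{"$24.99", "$59.99"} {
		value, err := parsePrice(price)
		if err != nil {
			log.Fatal("Error:", err)
		}
		fmt.Printf("%.2f\n", value)
	}
}
```

In the scraper, you could call such a helper on the price field before appending each product to the slice.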

Well done! You've now mastered the fundamentals of Chromedp!

The output contains only ten products because the page relies on infinite scrolling, so let's see how to scrape all products in the next section of this tutorial.

Interact with Web Pages: Scroll, Screenshot, etc.

Chromedp gives you access to all headless browser interactions, including moving the mouse, scrolling, waiting for elements, and more. These actions can help you bypass anti-bot measures, as your automated script will behave more like a human user.

The interactions supported by Chromedp include:

- Scroll down or up the page.

- Click page elements and perform other mouse actions.

- Wait for page elements to load.

- Fill out and clear input fields.

- Submit forms.

- Take screenshots.

- Export the page to a PDF.

- Drag and drop elements.

You can achieve those operations through common Run actions or via the Evaluate() function. This special action accepts a JavaScript snippet as input and executes it on the page. With both tools, any browser interaction becomes possible.

Let's take a look at how Evaluate() helps you scrape all products from the infinite scroll demo page and then explore other popular Chromedp interactions!

Scrolling

After the first page load of our target demo shop, the page only contains ten products, as it relies on infinite scrolling to load new data. Dealing with that popular interaction requires some JavaScript logic. In this case, the code below instructs the browser to scroll down the page eight times at an interval of 0.5 seconds via JS.

// scroll down the page 8 times

const numScrolls = 8

let scrollCount = 0

// scroll down and then wait for 0.5s

const scrollInterval = setInterval(() => {

window.scrollTo(0, document.body.scrollHeight)

scrollCount++

if (scrollCount === numScrolls) {

clearInterval(scrollInterval)

}

}, 500)

Store the script above in a scrollingScript variable and pass it to Evaluate() as follows. Note that the Evaluate() action must come before the HTML node selection logic.

scrollingScript := `

// scroll down the page 8 times

const numScrolls = 8

let scrollCount = 0

// scroll down and then wait for 0.5s

const scrollInterval = setInterval(() => {

window.scrollTo(0, document.body.scrollHeight)

scrollCount++

if (scrollCount === numScrolls) {

clearInterval(scrollInterval)

}

}, 500)

`

// browser automation logic

var productNodes []*cdp.Node

err := chromedp.Run(ctx,

chromedp.Navigate("https://scrapingclub.com/exercise/list_infinite_scroll/"),

chromedp.Evaluate(scrollingScript, nil),

chromedp.Nodes(".post", &productNodes, chromedp.ByQueryAll),

)

if err != nil {

log.Fatal("Error:", err)

}

Since the scrolling logic uses timers, you also need to instruct Chromedp to wait for the operation to end. So, add the following action:

chromedp.Sleep(5000*time.Millisecond) // wait 5 seconds

This should be your new complete code:

package main

import (

"context"

"fmt"

"github.com/chromedp/cdproto/cdp"

"github.com/chromedp/chromedp"

"log"

"time"

)

// Product data structure to store the scraped data

type Product struct {

name, price string

}

func main() {

// to keep track of all the scraped objects

var products []Product

// initialize a controllable Chrome instance

ctx, cancel := chromedp.NewContext(

context.Background(),

)

// to release the browser resources when

// it is no longer needed

defer cancel()

// scrolling logic in JavaScript

scrollingScript := `

// scroll down the page 8 times

const numScrolls = 8

let scrollCount = 0

// scroll down and then wait for 0.5s

const scrollInterval = setInterval(() => {

window.scrollTo(0, document.body.scrollHeight)

scrollCount++

if (scrollCount === numScrolls) {

clearInterval(scrollInterval)

}

}, 500)

`

// browser automation logic

var productNodes []*cdp.Node

err := chromedp.Run(ctx,

chromedp.Navigate("https://scrapingclub.com/exercise/list_infinite_scroll/"),

chromedp.Evaluate(scrollingScript, nil),

chromedp.Sleep(5000*time.Millisecond),

chromedp.Nodes(".post", &productNodes, chromedp.ByQueryAll),

)

if err != nil {

log.Fatal("Error:", err)

}

// scraping logic

var name, price string

for _, node := range productNodes {

// extract data from the product HTML node

err = chromedp.Run(ctx,

chromedp.Text("h4", &name, chromedp.ByQuery, chromedp.FromNode(node)),

chromedp.Text("h5", &price, chromedp.ByQuery, chromedp.FromNode(node)),

)

if err != nil {

log.Fatal("Error:", err)

}

// initialize a new product instance

// with scraped data

product := Product{}

product.name = name

product.price = price

products = append(products, product)

}

fmt.Println(products)

}

The products slice will now contain all 60 product elements instead of just the first ten. Run the script again to verify it:

go run scraper.go

This will be in your logs:

[{Short Dress $24.99} {Patterned Slacks $29.99} {Short Chiffon Dress $49.99} {Off-the-shoulder Dress $59.99} /* omitted for brevity... */ {T-shirt $6.99} {Blazer $49.99}]

Wonderful, mission complete! You just scraped all products from the page.

Wait for Element

Your current Go scraper relies on a hard wait, which is discouraged as it makes the scraping logic unreliable. The script might fail because of a browser or network slowdown, and you want to avoid that!

Waiting for nodes to be on the page before interacting with them leads to consistent results. Considering how common it is for pages to get data dynamically or render elements in the browser, that's key for building effective scrapers.

Chromedp provides several waiting actions to wait for an element to be visible, ready for interaction, and more. Replace Sleep() with WaitVisible() to wait for the 60th .post element to be on the page. After scrolling down, the script will wait for data to get retrieved via AJAX and the final product element to be visible on the page.

var productNodes []*cdp.Node

err := chromedp.Run(ctx,

chromedp.Navigate("https://scrapingclub.com/exercise/list_infinite_scroll/"),

chromedp.Evaluate(scrollingScript, nil),

chromedp.WaitVisible(".post:nth-child(60)"),

chromedp.Nodes(".post", &productNodes, chromedp.ByQueryAll),

)

if err != nil {

log.Fatal("Error:", err)

}

Now, the complete code of your spider no longer involves hard waits:

package main

import (

"context"

"fmt"

"github.com/chromedp/cdproto/cdp"

"github.com/chromedp/chromedp"

"log"

)

// Product data structure to store the scraped data

type Product struct {

name, price string

}

func main() {

// to keep track of all the scraped objects

var products []Product

// initialize a controllable Chrome instance

ctx, cancel := chromedp.NewContext(

context.Background(),

)

// to release the browser resources when

// it is no longer needed

defer cancel()

// scrolling logic in JavaScript

scrollingScript := `

// scroll down the page 8 times

const numScrolls = 8

let scrollCount = 0

// scroll down and then wait for 0.5s

const scrollInterval = setInterval(() => {

window.scrollTo(0, document.body.scrollHeight)

scrollCount++

if (scrollCount === numScrolls) {

clearInterval(scrollInterval)

}

}, 500)

`

// browser automation logic

var productNodes []*cdp.Node

err := chromedp.Run(ctx,

chromedp.Navigate("https://scrapingclub.com/exercise/list_infinite_scroll/"),

chromedp.Evaluate(scrollingScript, nil),

chromedp.WaitVisible(".post:nth-child(60)"),

chromedp.Nodes(".post", &productNodes, chromedp.ByQueryAll),

)

if err != nil {

log.Fatal("Error:", err)

}

// scraping logic

var name, price string

for _, node := range productNodes {

// extract data from the product HTML node

err = chromedp.Run(ctx,

chromedp.Text("h4", &name, chromedp.ByQuery, chromedp.FromNode(node)),

chromedp.Text("h5", &price, chromedp.ByQuery, chromedp.FromNode(node)),

)

if err != nil {

log.Fatal("Error:", err)

}

// initialize a new product instance

// with scraped data

product := Product{}

product.name = name

product.price = price

products = append(products, product)

}

fmt.Println(products)

}

If you launch it, you'll get the same results as before but with better performance because you're now waiting for the right amount of time.

Time to see other useful interactions in action!

Wait for Page to Load in Chromedp

chromedp.Navigate() automatically waits for the load event to fire, which happens when the whole page has loaded. So, Chromedp already waits for pages to load.

At the same time, web pages are now extremely dynamic and it's no longer easy to say when a page has loaded. Use one of the action functions below for more control:

- WaitEnabled()

- WaitNotPresent()

- WaitNotVisible()

- WaitReady()

- WaitSelected()

- WaitVisible()

- Poll()

- PollFunction()

- ListenTarget()

Click Elements

Chromedp exposes the Click() action to simulate click interactions:

chromedp.Click(".post", chromedp.NodeVisible),

This function sends a mouse click event to the first element node matching the selector. chromedp.NodeVisible is an optional query option that makes the DOM selector query wait for the matching element to be visible on the page.

Take a Screenshot with Chromedp

Use the FullScreenshot() action to perform a screenshot of the entire browser viewport with the specified image quality:

var screenshotBuffer []byte

err := chromedp.Run(ctx,

chromedp.Navigate("https://scrapingclub.com/exercise/list_infinite_scroll/"),

chromedp.FullScreenshot(&screenshotBuffer, 100),

)

If you instead want to take a screenshot of a single HTML element, take advantage of the Screenshot() action:

var screenshotBuffer []byte

err := chromedp.Run(ctx,

chromedp.Navigate("https://scrapingclub.com/exercise/list_infinite_scroll/"),

chromedp.Screenshot(".post", &screenshotBuffer, chromedp.NodeVisible),

)

Next, export the resulting byte buffer to an image. The snippet below writes the screenshot to a screenshot.png file.

err = os.WriteFile("screenshot.png", screenshotBuffer, 0644)

if err != nil {

log.Fatal("Error:", err)

}

This code requires the following import:

import (

// other imports...

"os"

)
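According to the Chromedp documentation, FullScreenshot() produces a PNG only when the quality is 100 and a JPEG otherwise, so a hard-coded .png extension can be misleading. As a hedged sketch, the hypothetical screenshotExt helper below picks the file extension by sniffing the buffer with the standard library. The byte slices in main() are just image signatures used for demonstration, not real screenshots.

```go
package main

import (
	"fmt"
	"net/http"
)

// screenshotExt is a hypothetical helper that returns a file extension
// matching the image format detected in buf, or "" if it is neither
// a PNG nor a JPEG.
func screenshotExt(buf []byte) string {
	switch http.DetectContentType(buf) {
	case "image/png":
		return ".png"
	case "image/jpeg":
		return ".jpg"
	default:
		return ""
	}
}

func main() {
	// file signatures standing in for real screenshot buffers
	pngSignature := []byte("\x89PNG\r\n\x1a\n")
	jpegSignature := []byte("\xff\xd8\xff")

	fmt.Println("screenshot" + screenshotExt(pngSignature))
	fmt.Println("screenshot" + screenshotExt(jpegSignature))
}
```

You could pass the resulting name to os.WriteFile() instead of a fixed screenshot.png.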

Submit Form with Chromedp

Chromedp lets you fill out and submit forms through actions. This revolves around two functions:

- SendKeys(): Action that synthesizes the key up, char, and down events to type strings in an input element.

- Submit(): Action that submits the parent form of the first element node matching the selector.

See an example below:

err := chromedp.Run(ctx,

chromedp.Navigate("https://example.org/form"),

chromedp.WaitVisible(".name"),

chromedp.SendKeys(".name", "Maria"),

chromedp.SendKeys(".surname", "Williams"),

// filling out the other fields

chromedp.Submit("button[role=submit]"),

)

File Download Using Chromedp

Chromedp offers ways to download either an image on the page or a specific file from a button or link. See the snippet below to download an image:

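// URL of the image to download, the same one passed to Navigate() below

urlstr := "https://example.com/image"

// initialize a download channel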

done := make(chan bool)

// this will be used to capture the request id

// for matching network events

var requestID network.RequestID

// set up a listener to watch the network events and close the channel when

// the desired request completes

chromedp.ListenTarget(ctx, func(v interface{}) {

switch ev := v.(type) {

case *network.EventRequestWillBeSent:

log.Printf("EventRequestWillBeSent: %v: %v", ev.RequestID, ev.Request.URL)

if ev.Request.URL == urlstr {

requestID = ev.RequestID

}

case *network.EventLoadingFinished:

log.Printf("EventLoadingFinished: %v", ev.RequestID)

if ev.RequestID == requestID {

close(done)

}

}

})

// all we need to do here is navigate to the download url

err := chromedp.Run(ctx,

chromedp.Navigate("https://example.com/image"),

)

if err != nil {

log.Fatal("Error:", err)

}

// this will block the execution

// until the chromedp listener closes the channel

<-done

// get the downloaded bytes for the request id

var imageBuffer []byte

err = chromedp.Run(ctx, chromedp.ActionFunc(func(ctx context.Context) error {

var err error

imageBuffer, err = network.GetResponseBody(requestID).Do(ctx)

return err

}))

if err != nil {

log.Fatal("Error:", err)

}

// write the image file to disk

err = os.WriteFile("download.png", imageBuffer, 0644)

if err != nil {

log.Fatal("Error:", err)

}

And use the following code to download a file:

// set up a download channel

done := make(chan string, 1)

// set up a listener to watch the download events and close the channel

// when this completes

chromedp.ListenTarget(ctx, func(v interface{}) {

if ev, ok := v.(*browser.EventDownloadProgress); ok {

completed := "(unknown)"

if ev.TotalBytes != 0 {

completed = fmt.Sprintf("%0.2f%%", ev.ReceivedBytes/ev.TotalBytes*100.0)

}

log.Printf("state: %s, completed: %s\n", ev.State.String(), completed)

if ev.State == browser.DownloadProgressStateCompleted {

done <- ev.GUID

close(done)

}

}

})

// get path of the working directory

workingDirectoryPath, err := os.Getwd()

if err != nil {

log.Fatal(err)

}

// download the file

err = chromedp.Run(ctx,

// navigate to the page

chromedp.Navigate(`https://example.com/file-download`),

browser.

SetDownloadBehavior(browser.SetDownloadBehaviorBehaviorAllowAndName).

WithDownloadPath(workingDirectoryPath).

WithEventsEnabled(true),

// click the download link/button when visible

chromedp.Click(".download-pdf", chromedp.NodeVisible),

)

if err != nil && !strings.Contains(err.Error(), "net::ERR_ABORTED") {

// ignoring net::ERR_ABORTED is essential, as successful downloads

// also emit this error

log.Fatal("Error:", err)

}

// block the execution until

// the chromedp listener closes the channel

<-done

Chromedp Proxy: An Essential Tool

Anti-scraping technologies tend to prevent automated requests by banning IPs. That's terrible as it undermines the effectiveness of your web scraping process. A practical way to avoid those measures is to use proxies, rotating requests over many IPs.

Let's now learn how to set a proxy in Chromedp.

First, get a free proxy from providers like Free Proxy List. Then, specify it through the ProxyServer setting:

// define the proxy settings

options := append(

chromedp.DefaultExecAllocatorOptions[:],

chromedp.ProxyServer("50.206.111.88:80"),

// other options...

)

// create an allocator context configured with the options above

ctx, cancel := chromedp.NewExecAllocator(context.Background(), options...)

defer cancel()

// browser context with a proxy

ctx, cancel = chromedp.NewContext(ctx)

defer cancel()

Proxy URLs must be in the following format:

<proxy_protocol>://<proxy_user>:<proxy_password>@<proxy_host>:<proxy_port>
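To avoid assembling that string by hand, here is a minimal sketch that builds it with Go's net/url package, which also percent-encodes special characters in the credentials. The proxyURL helper, the proxy.example.com host, and the credentials are hypothetical values used only for illustration.

```go
package main

import (
	"fmt"
	"net/url"
)

// proxyURL is a hypothetical helper that assembles a proxy URL.
// Leave user empty for proxies without authentication.
func proxyURL(scheme, user, password, host string, port int) string {
	u := url.URL{
		Scheme: scheme,
		Host:   fmt.Sprintf("%s:%d", host, port),
	}
	if user != "" {
		u.User = url.UserPassword(user, password)
	}
	return u.String()
}

func main() {
	// free proxy without credentials
	fmt.Println(proxyURL("http", "", "", "50.206.111.88", 80))
	// prints: http://50.206.111.88:80

	// made-up authenticated proxy; the "@" in the password gets escaped
	fmt.Println(proxyURL("http", "user", "p@ss", "proxy.example.com", 8080))
	// prints: http://user:p%40ss@proxy.example.com:8080
}
```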

Note: Free proxies typically don't have a username and password.

The major disadvantage of this approach is that free proxies are short-lived and error-prone. In short, they're unreliable, and you can't use them in a real-world scenario.

The solution? ZenRows! As a powerful web scraping API that you can easily integrate with Chromedp, it offers IP rotation through premium residential proxies and much more. Follow the steps below to get started with ZenRows proxies:

- Sign up for free to get your free 1,000 credits.

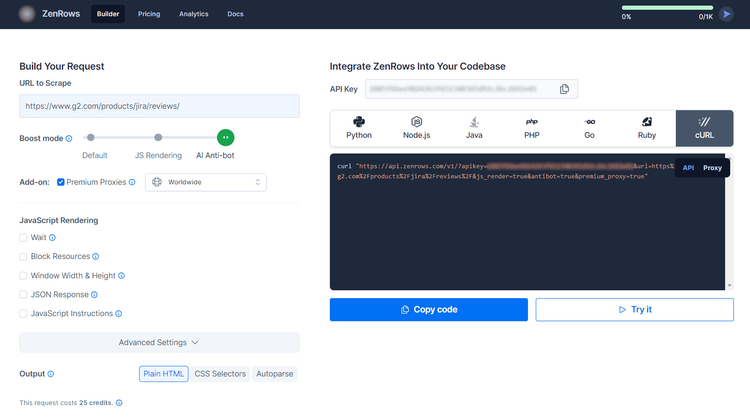

- You'll get to the Request Builder page.

- Paste your target URL, check the "Premium Proxies" option and use "cURL".

- Copy the generated link and paste it into your code as the target URL inside Chromedp's Navigate() method.

package main

import (

"context"

"fmt"

"github.com/chromedp/cdproto/dom"

"github.com/chromedp/chromedp"

"log"

"time"

)

func main() {

ctx, cancel := chromedp.NewContext(

context.Background(),

)

defer cancel()

var html string

err := chromedp.Run(ctx,

chromedp.Navigate("https://api.zenrows.com/v1/?apikey=<YOUR_ZENROWS_API_KEY>&url=https%3A%2F%2Fscrapingclub.com%2Fexercise%2Flist_infinite_scroll%2F&premium_proxy=true"),

// scraping actions...

chromedp.Sleep(2000*time.Millisecond),

chromedp.ActionFunc(func(ctx context.Context) error {

rootNode, err := dom.GetDocument().Do(ctx)

if err != nil {

return err

}

html, err = dom.GetOuterHTML().WithNodeID(rootNode.NodeID).Do(ctx)

return err

}),

)

if err != nil {

log.Fatal("Error while performing the automation logic:", err)

}

fmt.Println(html)

}
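The long URL passed to Navigate() is the ZenRows endpoint with the target page percent-encoded in the url query parameter. If you prefer to build it in code rather than copy it from the Request Builder, a sketch with net/url could look like this. The zenRowsURL helper and the YOUR_KEY placeholder are assumptions for illustration, while the parameter names come from the URL above.

```go
package main

import (
	"fmt"
	"net/url"
)

// zenRowsURL is a hypothetical helper that builds the API URL for a target page.
// The parameter names (apikey, url, premium_proxy) mirror the generated link.
func zenRowsURL(apiKey, target string) string {
	params := url.Values{}
	params.Set("apikey", apiKey)
	params.Set("url", target) // percent-encoded by Encode()
	params.Set("premium_proxy", "true")
	// Encode() sorts the parameters by key
	return "https://api.zenrows.com/v1/?" + params.Encode()
}

func main() {
	// YOUR_KEY is a placeholder for your real API key
	fmt.Println(zenRowsURL("YOUR_KEY", "https://scrapingclub.com/exercise/list_infinite_scroll/"))
}
```

The parameters come out in alphabetical order, which differs from the generated link but is equivalent for a query string.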

User Agent in Chromedp Proxy: Avoid Getting Blocked

Besides using a premium proxy, it's also crucial to set a real User-Agent header. The reason is that the most basic anti-bot measure involves analyzing it to detect automated requests. Most HTTP clients and scraping tools set easily recognizable User Agents, such as:

axios/1.3.5

Meanwhile, real-world browsers use more complex strings, like this one:

Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/115.0.0.0 Safari/537.36

As you can see, it's not difficult to spot bot requests based on it.
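To illustrate how little it takes, here is a deliberately naive sketch of such a check in Go. The looksLikeBrowser function is hypothetical, and real anti-bot systems rely on far more signals than a string prefix.

```go
package main

import (
	"fmt"
	"strings"
)

// looksLikeBrowser is a hypothetical, intentionally simplistic check:
// it only tests whether the User-Agent starts like a mainstream browser string.
func looksLikeBrowser(userAgent string) bool {
	return strings.HasPrefix(userAgent, "Mozilla/5.0 (")
}

func main() {
	userAgents := []string{
		"axios/1.3.5",
		"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/115.0.0.0 Safari/537.36",
	}
	for _, ua := range userAgents {
		fmt.Printf("%t\t%s\n", looksLikeBrowser(ua), ua)
	}
}
```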

Override the default Chromedp User-Agent header with the UserAgent setting. You can grab working user-agents for web scraping from our list.

// define the proxy and User-Agent settings

options := append(

chromedp.DefaultExecAllocatorOptions[:],

chromedp.ProxyServer("50.206.111.88:80"),

chromedp.UserAgent("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/115.0.0.0 Safari/537.36"),

// other options...

)

// create an allocator context configured with the options above

ctx, cancel := chromedp.NewExecAllocator(context.Background(), options...)

defer cancel()

// browser context with a proxy

ctx, cancel = chromedp.NewContext(ctx)

defer cancel()

Note: By default, ZenRows also sets a custom User-Agent and auto-rotates it.

Best Way to Avoid Getting Blocked while Web Scraping

The biggest challenge when scraping data from the web is getting blocked by anti-scraping measures, like rate limiting and IP bans. Adopting proxies and custom User-Agent headers are just baby steps to avoid blocks. With sites under a WAF like G2.com, a free proxy and a real-world User-Agent won't be enough:

package main

import (

"context"

"fmt"

"github.com/chromedp/cdproto/dom"

"github.com/chromedp/chromedp"

"time"

)

func main() {

// define the User-Agent setting

options := append(chromedp.DefaultExecAllocatorOptions[:],

chromedp.UserAgent("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/115.0.0.0 Safari/537.36"),

)

ctx, cancel := chromedp.NewExecAllocator(context.Background(), options...)

defer cancel()

ctx, cancel = chromedp.NewContext(ctx)

defer cancel()

var html string

err := chromedp.Run(ctx,

chromedp.Navigate("https://www.g2.com/products/jira/reviews/"),

chromedp.Sleep(2000*time.Millisecond),

chromedp.ActionFunc(func(ctx context.Context) error {

rootNode, err := dom.GetDocument().Do(ctx)

if err != nil {

return err

}

html, err = dom.GetOuterHTML().WithNodeID(rootNode.NodeID).Do(ctx)

return err

}),

)

if err != nil {

fmt.Println("Error:", err)

return

}

fmt.Println(html)

}

The G2 server will respond with a 403 Forbidden error and an HTML error message. That occurs because the site is still able to identify the requests as coming from automated software.

<p>You do not have access to www.g2.com.</p><p>The site owner may have set restrictions that prevent you from accessing the site.</p>

How do you overcome anti-bot measures? Again, with ZenRows! This next-generation scraping technology comes equipped with an advanced anti-bot bypass toolkit. JavaScript rendering capabilities, rotating premium proxies, auto-rotating UAs, and anti-CAPTCHA are only some of its many built-in tools.

Here are the easy steps to use ZenRows in your scraper without even needing Chromedp:

- Sign up for free to get your free 1,000 credits.

- You'll reach the Request Builder page.

- Paste your target URL, activate the “AI Anti-bot” mode (it includes JS rendering), and add “Premium Proxies”.

- Choose cURL to integrate with Go.

- Select the “API” connection mode.

- Copy the code and run it.

Your new Go scraper reduces to a simpler script that no longer requires Chromedp:

package main

import (

"io"

"log"

"net/http"

)

func main() {

client := &http.Client{}

req, err := http.NewRequest("GET", "https://api.zenrows.com/v1/?apikey=<YOUR_ZENROWS_API_KEY>&url=https%3A%2F%2Fwww.g2.com%2Fproducts%2Fjira%2Freviews%2F&js_render=true&antibot=true&premium_proxy=true", nil)

if err != nil {

log.Fatalln(err)

}

resp, err := client.Do(req)

if err != nil {

log.Fatalln(err)

}

defer resp.Body.Close()

body, err := io.ReadAll(resp.Body)

if err != nil {

log.Fatalln(err)

}

log.Println(string(body))

}

Run it, and you'll now get a `200` response. The script above prints this:

<!DOCTYPE html>

<head>

<meta charset="utf-8" />

<link href="https://www.g2.com/assets/favicon-fdacc4208a68e8ae57a80bf869d155829f2400fa7dd128b9c9e60f07795c4915.ico" rel="shortcut icon" type="image/x-icon" />

<title>Jira Reviews 2023: Details, Pricing, & Features | G2</title>

<!-- omitted for brevity ... -->

Fantastic! Bye bye, forbidden access errors!

Get Docker for Chromedp

The chromedp/headless-shell project provides a Docker image containing a special pre-built version of Chrome. This is a tweaked and slimmed-down version of Chrome that's useful for efficient scraping and browser automation.

You can use a Docker image following the steps below:

- Pull the latest version of headless-shell:

docker pull chromedp/headless-shell:latest

- Or pull a specific tagged version of headless-shell:

docker pull chromedp/headless-shell:74.0.3717.1

- Run the Docker image:

docker run -d -p 9222:9222 --rm --name headless-shell chromedp/headless-shell

You then need to specify the local Docker URL in your Go scraper:

dockerURL := "ws://localhost:9222"

ctx, cancel := chromedp.NewRemoteAllocator(context.Background(), dockerURL)

defer cancel()

ctx, cancel = chromedp.NewContext(ctx)

defer cancel()

Chromedp will now operate over an optimized Chrome version.

Get Cookies with Chromedp

The server might respond to a web page request by setting some cookies, which usually contain crucial information for caching or session management. Knowing how to deal with them in Chromedp may be essential for the effectiveness of your scraper.

Use the action below to read a cookie in Chromedp. GetCookies() comes from the storage component of Chromedp, which contains the browser's local data.

chromedp.ActionFunc(func(ctx context.Context) error {

cookies, err := storage.GetCookies().Do(ctx)

if err != nil {

return err

}

for i, cookie := range cookies {

log.Printf("Cookie %d: %+v", i, cookie)

}

return nil

}),

To set a cookie in Chromedp, use the action below. The SetCookie() function comes from the network package of cdproto (github.com/chromedp/cdproto/network) and creates a cookie in the browser with the given name and value. The requests made by the following Navigate() action will include the cookies set here.

chromedp.ActionFunc(func(ctx context.Context) error {

// the cookie to set

cookie := [2]string{"cookie_name", "cookie value"}

// set the cookie expiration

expirationTime := cdp.TimeSinceEpoch(time.Now().Add(180 * 24 * time.Hour))

err := network.SetCookie(cookie[0], cookie[1]).

WithExpires(&expirationTime).

WithDomain("<YOUR_DOMAIN>").

WithHTTPOnly(true).

Do(ctx)

if err != nil {

return err

}

return nil

}),

Login with Chromedp

When logging in, what usually happens behind the scenes is that the client makes a POST request to the server with the credentials specified in the login form. The server responds by setting some cookies to authenticate the user session.

To log in with a Chromedp scraper, you can fill out and submit the form as below:

err := chromedp.Run(ctx,

chromedp.Navigate("https://example.org/login"),

chromedp.WaitVisible(".login"),

chromedp.SendKeys(".login", "<YOUR_USERNAME>"), // login input

chromedp.SendKeys(".password", "<YOUR_PASSWORD>"), // password input

chromedp.Submit("button[role=submit]"), // "Login" button

)

Great! That will produce the POST request and authenticate the headless browser session. Explore our Python guide on how to scrape a website that requires a login. Even though the programming language there is different, the login scenarios and techniques are the same.
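To make the behind-the-scenes flow concrete, here is a sketch that reproduces it with the standard library only: a local test server plays the role of the login endpoint, and an HTTP client with a cookie jar posts the form and keeps the session cookie. The server, the field names login and password, the credentials, and the session cookie are all made up for this illustration.

```go
package main

import (
	"fmt"
	"log"
	"net/http"
	"net/http/cookiejar"
	"net/http/httptest"
	"net/url"
)

// newLoginServer starts a local server standing in for a real login endpoint.
// It sets a "session" cookie when the (made-up) credentials are correct.
func newLoginServer() *httptest.Server {
	return httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		if r.Method == http.MethodPost &&
			r.FormValue("login") == "maria" && r.FormValue("password") == "secret" {
			http.SetCookie(w, &http.Cookie{Name: "session", Value: "abc123", Path: "/"})
			fmt.Fprint(w, "welcome")
			return
		}
		http.Error(w, "invalid credentials", http.StatusUnauthorized)
	}))
}

// login posts the credentials as a form and returns the session cookie
// that the server stored in the client's cookie jar.
func login(serverURL, user, password string) (string, error) {
	jar, err := cookiejar.New(nil)
	if err != nil {
		return "", err
	}
	client := &http.Client{Jar: jar}

	form := url.Values{"login": {user}, "password": {password}}
	resp, err := client.PostForm(serverURL, form)
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusOK {
		return "", fmt.Errorf("login failed: %s", resp.Status)
	}

	u, err := url.Parse(serverURL)
	if err != nil {
		return "", err
	}
	for _, c := range jar.Cookies(u) {
		if c.Name == "session" {
			return c.Value, nil
		}
	}
	return "", fmt.Errorf("no session cookie set")
}

func main() {
	srv := newLoginServer()
	defer srv.Close()

	session, err := login(srv.URL, "maria", "secret")
	if err != nil {
		log.Fatal("Error:", err)
	}
	fmt.Println("session cookie:", session)
}
```

A headless browser performs the same exchange when Chromedp submits the form, with the browser playing the role of the cookie jar.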

Conclusion

In this Chromedp tutorial for Go, you learned the fundamentals of controlling a headless Chrome instance. You started from the basics and dove into more advanced techniques to become an expert.

Now you know:

- How to set up a Chromedp Golang project.

- What user interactions you can simulate with it.

- How to run custom JavaScript scripts in Chromedp.

- The challenges of web scraping and how to overcome them.

No matter how sophisticated your browser automation is, anti-bots can still detect you. Avoid them all with ZenRows, a web scraping API with IP rotation, headless browser capabilities, and an advanced built-in bypass for anti-scraping measures. Scraping dynamic-content sites is easier now. Try ZenRows for free!

Frequent Questions

How to Use Chromedp?

Using Chromedp involves orchestrating headless Chrome browser actions with Go:

- Import the required packages, including chromedp and context.

- Create a browser context with NewContext().

- Execute Chromedp actions like navigation, interactions, and data extraction on the context via the Run() function.
