Hitting the 403 forbidden error while scraping with Axios gets your scraper stuck. But there are ways out.

This article will show various ways to overcome the Axios 403 error and scrape like a champ. Let's get started!

What Is an Axios Code 403?

An Axios 403 error in Node.js means the server understood your request but refuses to authorize it. In web scraping, this usually happens when the server flags you as a bot because of IP bans, rate limiting, request filtering, misconfigured headers, or anti-bot protection such as Cloudflare.

The error typically looks like this in your terminal:

Error: AxiosError: Request failed with status code 403

Does this problem sound familiar?
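To react to this failure in code rather than only reading it in the terminal, you can inspect the error Axios throws. The sketch below assumes the usual Axios error shape, where error.response.status holds the HTTP status code. The helper name isForbiddenError is made up for illustration.

```javascript
// Hypothetical helper: returns true when an Axios error carries a 403 response.
// Axios attaches the server response (if there was one) to error.response.
function isForbiddenError(error) {
  return Boolean(error && error.response && error.response.status === 403);
}

// Possible usage with Axios (assumes axios is installed):
// axios.get('https://www.example.com')
//   .catch((error) => {
//     if (isForbiddenError(error)) console.log('Blocked with 403');
//   });
```

Network failures and timeouts have no error.response, so the helper returns false for them.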

Let's walk through the proven ways to prevent it while using Axios for web scraping.

1. Scraping API to Bypass Error 403 in JavaScript

Scraping APIs are at the top of the game, allowing you to scrape a website effectively without getting blocked by anti-bots.

Tools like ZenRows integrate with Axios and handle anti-bot measures for you through features such as JavaScript rendering, anti-bot bypass, and premium rotating proxies.

Adding these features to your Axios request helps your scraper get past the Axios 403 forbidden error.

Using ZenRows, let's see how to apply these features to an Axios request to https://www.g2.com/products/asana/reviews, a website protected by Cloudflare.

Sending an Axios request to this website without scraping API support returns the following error:

AxiosError: Request failed with status code 403

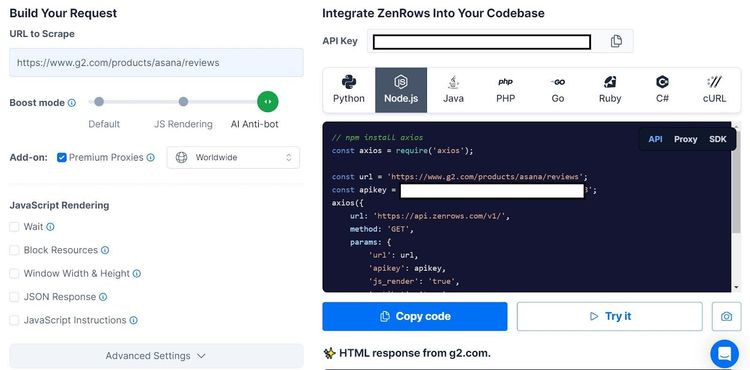

To bypass this blockage with a scraping API, sign up and log in to your ZenRows Request Builder so you can follow along.

Once in the Request Builder, paste the target URL in the field below "URL to Scrape."

Next, select "Node.js" at the top of the code box and set the Boost mode to AI "Anti-Bot." We'll maintain the "API" option this time.

Tick the "Premium Proxies" checkbox to add the rotating proxy Add-on to your Axios request.

Copy and paste the generated code into your scraper script.

The generated code should look like this:

// npm install axios

const axios = require('axios');

const url = 'https://www.g2.com/products/asana/reviews';

const apikey = '<YOUR_ZENROWS_API_KEY>';

axios({

url: 'https://api.zenrows.com/v1/',

method: 'GET',

params: {

'url': url,

'apikey': apikey,

'js_render': 'true',

'antibot': 'true',

'premium_proxy': 'true',

},

})

.then(response => console.log(response.data))

.catch(error => console.log(error));

Running the code should output the complete page HTML, revealing the page title as shown.

<!DOCTYPE html>

<head>

<!-- ... -->

<title>Asana Reviews 2023 & Product Details</title>

<meta content="78D210F3223F3CF585EB2436D17C6943" name="msvalidate.01" />

<meta content="width=device-width, initial-scale=1" name="viewport" />

<meta content="GNU Terry Pratchett" http-equiv="X-Clacks-Overhead" />

<meta content="ie=edge" http-equiv="x-ua-compatible" />

<meta content="en-us" http-equiv="content-language" />

<meta content="website" property="og:type" />

<meta content="G2" property="og:site_name" />

<meta content="@G2dotcom" name="twitter:site" />

<meta content="The G2 on Asana" property="og:title" />

<meta content="https://www.g2.com/products/asana/reviews" property="og:url" />

<meta content="Filter 9523 reviews by the users' company size, role or industry to find out how Asana works for a business like yours." property="og:description" />

<meta name="twitter:label1" value="G2.com Category" />

<meta name="twitter:data1" value="Project Collaboration" />

<!-- ... -->

In this case, the HTML output indicates that Axios has bypassed Cloudflare. Does that sound magical?
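One quick way to check in code that you received the real page rather than a block page is to read its title. This is a minimal sketch, and the helper name extractTitle is an assumption for illustration. A regular expression is enough for a sanity check like this, but a proper HTML parser is a better fit for actual data extraction.

```javascript
// Hypothetical helper: pull the <title> text out of an HTML string.
// Returns null when the document has no <title> element.
function extractTitle(html) {
  const match = /<title[^>]*>([\s\S]*?)<\/title>/i.exec(html);
  return match ? match[1].trim() : null;
}

// Possible usage inside the .then() handler of the request above:
// .then(response => console.log(extractTitle(response.data)))
```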

Although this is the best option for bypassing anti-bots, it's great to have multiple solutions. Let's consider other methods to help you understand and remove this error.

2. Optimize Your Request Frequency

Rate limiting allows websites to automatically flag and ban an IP address that exceeds the request limit, resulting in an Axios 403 forbidden error.

Spacing out your requests can keep you under the limit. You can do this by adding a fixed interval between requests or by using exponential back-off, which lengthens the wait after each failed attempt.
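Exponential back-off can be sketched as follows. The function names, the default delays, and the choice to retry on 403 and 429 are all assumptions for illustration, not part of Axios. sendRequest is any function that returns a promise, for example () => axios.get(url).

```javascript
// Wait time for a given retry attempt (0-based): baseDelayMs * 2^attempt,
// capped at maxDelayMs so the delay doesn't grow without bound.
function backoffDelay(attempt, baseDelayMs = 1000, maxDelayMs = 30000) {
  return Math.min(baseDelayMs * 2 ** attempt, maxDelayMs);
}

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Call sendRequest, retrying with growing delays when the server answers
// 403 or 429. Any other error, or running out of retries, rethrows.
async function requestWithBackoff(
  sendRequest,
  { maxRetries = 4, baseDelayMs = 1000, wait = sleep } = {}
) {
  for (let attempt = 0; ; attempt++) {
    try {
      return await sendRequest();
    } catch (error) {
      const status = error.response ? error.response.status : undefined;
      const retryable = status === 403 || status === 429;
      if (!retryable || attempt >= maxRetries) throw error;
      await wait(backoffDelay(attempt, baseDelayMs));
    }
  }
}

// Possible usage (assumes axios is installed):
// requestWithBackoff(() => axios.get('https://httpbin.io/'))
//   .then(response => console.log(response.status));
```

With the defaults, the waits are 1, 2, 4, and 8 seconds before the helper gives up.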

How does interval delay work?

Using Interval Delays to Reduce Request Frequency

An interval delay pauses execution for a specified time before sending the next request. Let's look at a custom function that adds a delay between requests.

The code below sets the request count to five and adds a two-second interval between requests.

// Import the Axios library

const axios = require('axios');

const targetUrl = 'https://httpbin.io/';

// Configure access method

const configRule = {

method: 'get',

}

// Create the scheduler function

async function requestScheduler() {

console.log('Scraper - Start');

const countRange = 5; // Set the desired count range

const delayBetweenRequests = 2000; // Set the delay between each request in milliseconds

for (let count = 1; count <= countRange; count++) {

// Run the delayed code for each count

await delayedRequest(count, configRule, targetUrl);

// Pause for the specified delay between each request

if (count < countRange) {

await new Promise(resolve => setTimeout(resolve, delayBetweenRequests));

}

}

console.log('Scraper - End');

}

delayedRequest is the Axios request function that requestScheduler calls on each iteration.

Let's define it.

// Define the Axios request function

async function delayedRequest(count, rule, targetUrl) {

console.log(`Request #${count}`);

try {

// Pass the configuration rule and target URL

const response = await axios.request({ ...rule, url: targetUrl });

// Handle the successful response

console.log(

response.status === 200 ?

`Response: ${response.status}`

: `Unexpected status: ${response.status}`

);

} catch (error) {

// Handle errors

console.error('Error:', error.message);

}

}

Call the scheduler function at the bottom of the code file to execute the Axios request at intervals.

// Run the scheduler to start making HTTP requests at intervals

requestScheduler();

The code looks like this when merged:

// Import the Axios library

const axios = require('axios');

const targetUrl = 'https://httpbin.io/';

// Configure access method

const configRule = {

method: 'get',

}

// Create the scheduler function

async function requestScheduler() {

console.log('Scraper - Start');

const countRange = 5; // Set the desired count range

const delayBetweenRequests = 2000; // Set the delay between each request in milliseconds

for (let count = 1; count <= countRange; count++) {

// Run the delayed code for each count

await delayedRequest(count, configRule, targetUrl);

// Pause for the specified delay between each request

if (count < countRange) {

await new Promise(resolve => setTimeout(resolve, delayBetweenRequests));

}

}

console.log('Scraper - End');

}

// Define the axios request function

async function delayedRequest(count, rule, targetUrl) {

console.log(`Request #${count}`);

try {

// Pass the configuration rule and target URL

const response = await axios.request({ ...rule, url: targetUrl });

// Handle the successful response

console.log(

response.status === 200 ?

`Response: ${response.status}`

: `Unexpected status: ${response.status}`

);

} catch (error) {

// Handle errors

console.error('Error:', error.message);

}

}

// Run the scheduler to start making HTTP requests at intervals

requestScheduler();

Running it logs each request number followed by its response status, with a two-second pause between requests.

While delaying requests can help bypass rate limiting, it can still reveal that you are a bot due to the consistent pauses, resulting in an IP ban. You can deploy proxies to boost your requests.
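One way to make the pauses less regular is to randomize them. The sketch below is an illustration under stated assumptions: the function names and the example range are made up, and the random source is injectable only so the function can be tested deterministically.

```javascript
// Return a random whole number of milliseconds between minMs and maxMs (inclusive).
function randomDelayMs(minMs, maxMs, random = Math.random) {
  return Math.floor(minMs + random() * (maxMs - minMs + 1));
}

// Pause for a random duration within the given range.
function randomPause(minMs, maxMs) {
  return new Promise((resolve) => setTimeout(resolve, randomDelayMs(minMs, maxMs)));
}

// Inside requestScheduler, the fixed pause could become:
// await randomPause(1500, 4000);
```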

3. Deploy Proxies to Randomize Your IP

Proxies mask your IP address during web scraping. When you rotate them, the server sees requests coming from different addresses, which helps you avoid a 403 forbidden error caused by an IP ban.

There are several ways to use proxies with Axios, and rotating them is one of the most effective ways to avoid IP bans.

Datacenter and residential proxies are the most common proxy types. Residential proxies are generally better for web scraping because their IP addresses are assigned by internet service providers (ISPs) to real devices, so requests through them look like regular user traffic.

Cost-effective premium proxy providers like ZenRows let you easily deploy proxies into your scrapers.

Sign up with ZenRows to see how to deploy one.

Once in your dashboard, click the "Premium Proxy" checkbox and copy your API key.

Select "Proxy" from the options at the top-right of the code snippet on the right to reveal the ZenRows proxy URL.

As shown below, apply the copied parameters to your request configuration (config_rule).

const axios = require('axios');

// Replace the placeholder with your ZenRows API key

const apiKey = '<YOUR_ZENROWS_API_KEY>';

// Configure access method, including user-agent in header and proxy rules

const config_rule = {

method: 'get',

headers: {

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36',

},

proxy: {

protocol: 'http',

// The hostname of ZenRows proxy server

host: 'proxy.zenrows.com', // If using a free proxy, replace this with its value

// The port number

port: 8001,

// authentication credentials for the proxy server

auth: {

// Use your API_Key as your username

username: apiKey,

// Set the premium proxy parameter as your password

password: 'premium-proxy=true'

},

},

}

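After adding the proxy parameter, including the authentication information, extend the script with the code below. It logs the remote address of the connection from response.request.socket.remoteAddress. With a proxy configured, this is the address of the proxy server that handled the request.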

const url = 'https://httpbin.io/'

// Pass configuration and target URL detail into Axios request

axios.request({...config_rule, url})

.then(response => {

// Handle the successful response

response.status === 200?

console.log('Response:', response.request.socket.remoteAddress):

console.log(response.status);

})

.catch(error => {

// Handle errors

console.error('Error:', `${error}`);

});

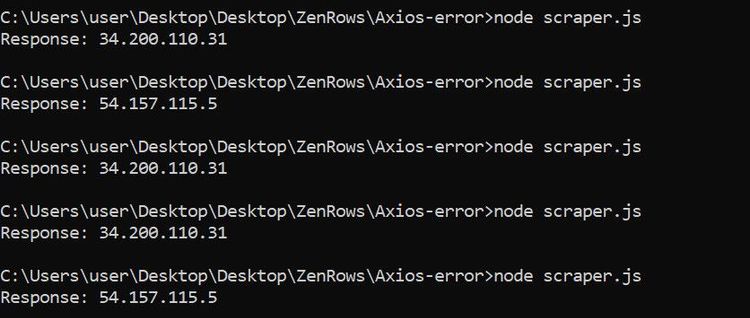

Running the code a few times outputs the following IPs per request, indicating periodic IP switching.

The added premium proxy now manages IP rotation, periodically randomizing it from a pool at its discretion.
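If you maintain your own proxy list instead of relying on a provider's rotation, you can rotate through it yourself. The sketch below is a minimal round-robin example. The helper names are made up, and the addresses are placeholders from a documentation-only IP range. The resulting object uses the same protocol, host, port, and auth fields as the proxy option shown earlier.

```javascript
// Convert a proxy URL such as 'http://user:pass@203.0.113.10:8080'
// into the object shape used by the Axios `proxy` option.
function toAxiosProxy(proxyUrl) {
  const parsed = new URL(proxyUrl);
  const proxy = {
    protocol: parsed.protocol.replace(':', ''),
    host: parsed.hostname,
    // URL.port is empty when the URL uses the scheme's default port
    port: Number(parsed.port) || (parsed.protocol === 'https:' ? 443 : 80),
  };
  if (parsed.username) {
    proxy.auth = {
      username: decodeURIComponent(parsed.username),
      password: decodeURIComponent(parsed.password),
    };
  }
  return proxy;
}

// Return a function that cycles through the list, one proxy per call.
function createProxyRotator(proxyUrls) {
  let index = 0;
  return () => {
    const proxy = toAxiosProxy(proxyUrls[index]);
    index = (index + 1) % proxyUrls.length;
    return proxy;
  };
}

// Possible usage (placeholder proxies, assumes axios is installed):
// const nextProxy = createProxyRotator(['http://203.0.113.10:8080', 'http://203.0.113.11:3128']);
// axios.request({ method: 'get', url: 'https://httpbin.io/', proxy: nextProxy() });
```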

Want to see more custom solutions? You can also change the default Axios user agent. The section that follows will explain this in detail.

4. Change Your User Agent to Solve Axios 403

The default Axios user agent is easy for anti-bot systems to detect because it identifies the client as Axios rather than a browser, which can result in a 403 error.

Let's see the default Axios user agent with the following code.

const axios = require('axios');

// Configure the access method

const config_rule = {

method: 'get',

}

const url = 'https://httpbin.io/user-agent'

// Pass configuration and target URL detail into Axios request

axios.request({...config_rule, url})

.then(response => {

// Handle the successful response

response.status === 200?

console.log('Response:', response.data):

console.log(response.status);

})

.catch(error => {

// Handle errors

console.error('Error:', `${error}`);

});

We only use https://httpbin.io/user-agent as a test endpoint because it echoes back the user agent it receives. Appending the /user-agent path to regular websites won't work. Instead, you can read response.config.headers to see the user agent sent in the request headers.

Here's the result:

Response: { 'user-agent': 'axios/1.6.2' }

As seen above, the User-Agent value is axios/1.6.2. It gives the server little or no information about the client and might get blocked easily. The goal is to change this to a browser format, which is less likely to get blocked.

Most browser user agents include the browser name and version, the rendering engine, and the operating system. A typical browser user agent that's less likely to get blocked looks like this:

Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36

You don't have to generate this yourself. Feel free to copy one from the top list of user agents for web scraping.

To change the default user agent in Axios, pass a user agent key to your request header as shown:

const axios = require('axios');

// Configure the access method, including the user agent in the headers

const config_rule = {

method: 'get',

headers: {

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36'

},

}

const url = 'https://httpbin.io/user-agent'

// Pass configuration and target URL detail into Axios request

axios.request({...config_rule, url})

.then(response => {

// Handle the successful response

response.status === 200?

console.log('Response:', response.data):

console.log(response.status);

})

.catch(error => {

// Handle errors

console.error('Error:', `${error}`);

});

Running the code outputs the following, indicating your request now uses the custom user agent.

Response: {

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36'

}

A server may eventually block a user agent that sends too many requests, so sticking to a single user agent isn't enough. Randomizing your user agent per request solves this problem.

Next, let's explore how to achieve this.

Randomize the User Agent in Axios Request

User-agent randomization makes your Axios requests appear to come from different clients, which helps your scraper avoid the Axios 403 forbidden error.

The following function (getRandomUserAgent) rotates the user agents from the userAgents list. Remember to extend this list from the best scraping user agents.

// Import the Axios library

const axios = require('axios');

const userAgents = [

'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36',

'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',

'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',

];

// Function to get a random user agent from the user agents list

const getRandomUserAgent = (userAgentsList) => {

const randomIndex = Math.floor(Math.random() * userAgentsList.length);

return userAgentsList[randomIndex];

};

Next, apply the randomization to your Axios request.

// Declare the target URL

const url = 'https://httpbin.io/user-agent';

// Pass the function to a variable

const randomUserAgent = getRandomUserAgent(userAgents);

// Configure your Axios request

const configRule = {

method: 'get',

url: url,

// Pass the randomized user agents to the request header

headers: {

'user-agent': randomUserAgent,

},

};

// Make an Axios request

axios.request(configRule)

.then(response => {

// Handle the successful response and output the response body

response.status === 200 ?

console.log('Response:', response.data) :

console.log('Could not fetch page');

})

.catch(error => {

// Handle errors

console.error('Error:', error.message);

});

The request header passes randomUserAgent as the user agent value, allowing this function to take effect.

The combined code looks like this:

// Import the Axios library

const axios = require('axios');

const userAgents = [

'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36',

'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',

'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',

];

// Function to get a random user agent from the list

const getRandomUserAgent = (userAgentsList) => {

const randomIndex = Math.floor(Math.random() * userAgentsList.length);

return userAgentsList[randomIndex];

};

// Declare the target URL

const url = 'https://httpbin.io/user-agent';

// Pass the function to a variable

const randomUserAgent = getRandomUserAgent(userAgents);

// Configure your Axios request

const configRule = {

method: 'get',

url: url,

// Pass the randomized user agents to the request header

headers: {

'user-agent': randomUserAgent,

},

};

// Make an Axios request

axios.request(configRule)

.then(response => {

// Handle the successful response and output the response body

response.status === 200 ?

console.log('Response:', response.data) :

console.log('Could not fetch page');

})

.catch(error => {

// Handle errors

console.error('Error:', error.message);

});

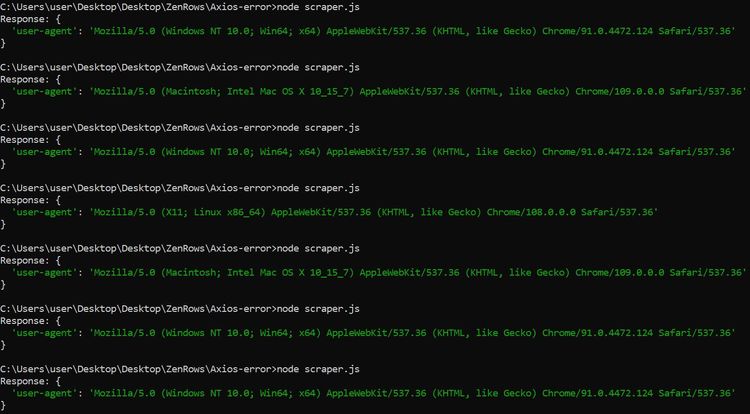

Run the code a few times, and the user agents will change randomly for each request.
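Note that the script above picks one user agent each time it runs. If your scraper sends several requests in a single run, you can build a fresh configuration for every request instead. The sketch below assumes the same userAgents list as above. The helper names pickRandom and buildRequestConfig are illustrative, and the random source is injectable only to make the behavior testable.

```javascript
// Pick a random element from a list.
function pickRandom(list, random = Math.random) {
  return list[Math.floor(random() * list.length)];
}

// Build an Axios request config with a user agent chosen at call time,
// so every request can carry a different one.
function buildRequestConfig(url, userAgents, random = Math.random) {
  return {
    method: 'get',
    url,
    headers: { 'user-agent': pickRandom(userAgents, random) },
  };
}

// Possible usage inside an async function (assumes axios is installed):
// for (const pageUrl of pageUrls) {
//   const response = await axios.request(buildRequestConfig(pageUrl, userAgents));
//   console.log(response.data);
// }
```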

Want to take this further? You can send a complete set of browser headers.

5. Make Sure Your Headers Are Complete

Unlike real browsers, which send a full set of request headers, Axios sends only a few by default, and the missing ones can give your scraper away.

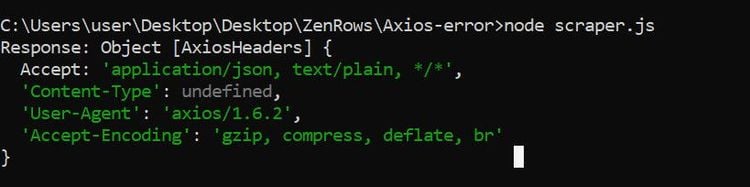

Let's quickly see what's in the Axios default headers. The code below displays this information from response.config.headers.

// Import Axios

const axios = require('axios');

// Configure the access method

const config_rule = {

method: 'get',

}

const url = 'https://httpbin.io/'

// Pass configuration and target URL detail into Axios request

axios.request({...config_rule, url})

.then(response => {

// Handle the successful response

response.status === 200?

console.log('Response:', response.config.headers):

console.log(response.status);

})

.catch(error => {

// Handle errors

console.error('Error:', `${error}`);

});

The above code outputs the following header details:

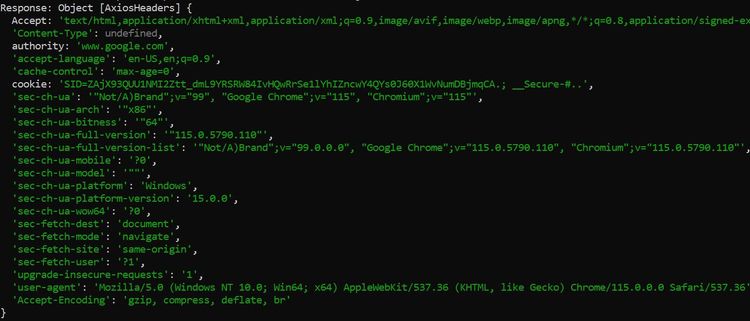

Let's compare this to that of a real browser like Chrome.

To do so, go to the "Network" tab of your Chrome browser (click "Inspect" > "Network"). Select a request on the network traffic table and click "Headers".

Scroll down to "Request Headers" to see the complete header details.

Yours might be different depending on the current website.

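To override the Axios default headers with those of a real browser, copy the Chrome request headers from the Network tab and apply them as shown below. The sample values here come from a request to www.google.com (see the authority entry), so replace them with headers copied from a request to your target site: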

// Store the request headers from chrome into a variable

const reqHeaders = {

'authority': 'www.google.com',

'accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7',

'accept-language': 'en-US,en;q=0.9',

'cache-control': 'max-age=0',

'cookie': 'SID=ZAjX93QUU1NMI2Ztt_dmL9YRSRW84IvHQwRrSe1lYhIZncwY4QYs0J60X1WvNumDBjmqCA.; __Secure-#..', // Cookie value truncated for brevity

'sec-ch-ua': '"Not/A)Brand";v="99", "Google Chrome";v="115", "Chromium";v="115"',

'sec-ch-ua-arch': '"x86"',

'sec-ch-ua-bitness': '"64"',

'sec-ch-ua-full-version': '"115.0.5790.110"',

'sec-ch-ua-full-version-list': '"Not/A)Brand";v="99.0.0.0", "Google Chrome";v="115.0.5790.110", "Chromium";v="115.0.5790.110"',

'sec-ch-ua-mobile': '?0',

'sec-ch-ua-model': '""',

'sec-ch-ua-platform': 'Windows',

'sec-ch-ua-platform-version': '15.0.0',

'sec-ch-ua-wow64': '?0',

'sec-fetch-dest': 'document',

'sec-fetch-mode': 'navigate',

'sec-fetch-site': 'same-origin',

'sec-fetch-user': '?1',

'upgrade-insecure-requests': '1',

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/115.0.0.0 Safari/537.36',

};

// Import Axios

const axios = require('axios');

// Configure the access method, including the copied request headers

const config_rule = {

method: 'get',

// Spread the properties of reqHeaders into the request headers

headers:{

...reqHeaders

}

}

const url = 'https://httpbin.io/'

// Pass configuration and target URL detail into Axios request

axios.request({...config_rule, url})

.then(response => {

// Handle the successful response

response.status === 200?

console.log('Response:', response.config.headers):

console.log(response.status);

})

.catch(error => {

// Handle errors

console.error('Error:', `${error}`);

});

Run the code to confirm the changes. The logged request headers should now include the values from reqHeaders.

Try scaling this by applying the previous user agent randomization function to the user-agent parameter.

You can update your code like this:

const userAgents = [

'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36',

'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36',

'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36',

];

// Function to get a random user agent from the list

const getRandomUserAgent = (userAgentsList) => {

const randomIndex = Math.floor(Math.random() * userAgentsList.length);

return userAgentsList[randomIndex];

};

const randomUserAgent = getRandomUserAgent(userAgents);

const reqHeaders = {

'authority': 'www.google.com',

'accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7',

'accept-language': 'en-US,en;q=0.9',

'cache-control': 'max-age=0',

'cookie': 'SID=ZAjX93QUU1NMI2Ztt_dmL9YRSRW84IvHQwRrSe1lYhIZncwY4QYs0J60X1WvNumDBjmqCA.; __Secure-#..', // Cookie value truncated for brevity

'sec-ch-ua': '"Not/A)Brand";v="99", "Google Chrome";v="115", "Chromium";v="115"',

'sec-ch-ua-arch': '"x86"',

'sec-ch-ua-bitness': '"64"',

'sec-ch-ua-full-version': '"115.0.5790.110"',

'sec-ch-ua-full-version-list': '"Not/A)Brand";v="99.0.0.0", "Google Chrome";v="115.0.5790.110", "Chromium";v="115.0.5790.110"',

'sec-ch-ua-mobile': '?0',

'sec-ch-ua-model': '""',

'sec-ch-ua-platform': 'Windows',

'sec-ch-ua-platform-version': '15.0.0',

'sec-ch-ua-wow64': '?0',

'sec-fetch-dest': 'document',

'sec-fetch-mode': 'navigate',

'sec-fetch-site': 'same-origin',

'sec-fetch-user': '?1',

'upgrade-insecure-requests': '1',

'user-agent': randomUserAgent,

};

//...

This technique can be effective, but combine it with the other ones mentioned earlier for better results.
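One thing to watch when mixing a random user agent into copied headers: the sec-ch-ua value above still announces Chrome 115, so a user agent claiming Chrome 91 contradicts it and could stand out. The sketch below checks for that mismatch before sending. It assumes lowercase header keys as in reqHeaders, and the function names are made up for illustration.

```javascript
// Extract the Chrome major version from a user-agent string, or null.
function chromeMajorFromUserAgent(userAgent) {
  const match = /Chrome\/(\d+)\./.exec(userAgent || '');
  return match ? Number(match[1]) : null;
}

// Extract the "Google Chrome" version from a sec-ch-ua header value, or null.
function chromeMajorFromClientHints(secChUa) {
  const match = /"Google Chrome";v="(\d+)"/.exec(secChUa || '');
  return match ? Number(match[1]) : null;
}

// True when the user agent and sec-ch-ua agree on the Chrome major version.
function hasConsistentChromeVersion(headers) {
  const fromUserAgent = chromeMajorFromUserAgent(headers['user-agent']);
  const fromHints = chromeMajorFromClientHints(headers['sec-ch-ua']);
  // Nothing to compare when either header is missing or isn't Chrome
  if (fromUserAgent === null || fromHints === null) return true;
  return fromUserAgent === fromHints;
}

// Possible usage before sending a request:
// if (!hasConsistentChromeVersion(reqHeaders)) {
//   console.warn('user-agent and sec-ch-ua disagree on the Chrome version');
// }
```

A simple way to act on a failed check is to drop the sec-ch-ua headers or to keep only user agents whose version matches them.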

Conclusion

As you've seen, you can easily bypass the 403 forbidden Axios error with the right solutions.

While we've considered a couple of workarounds, the overall best solutions are proxy deployment and web scraping API options. These make your life easier by providing the tools needed to extract information from a website at scale without getting blocked. Thankfully, all is at your fingertips with ZenRows.
