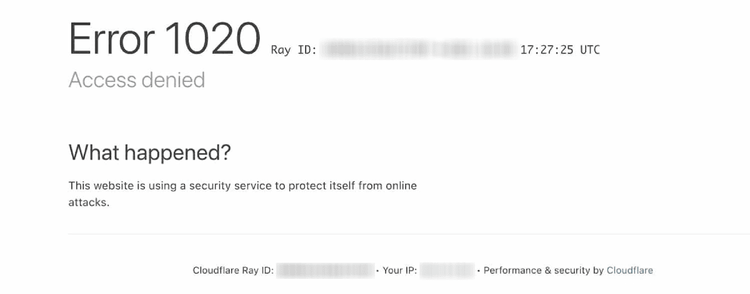

What Is Error 1020 Delivered by Cloudflare

Error 1020 (Access Denied) happens when a request violates one of the Firewall Rules configured for a site behind Cloudflare, typically because the client or browser used for scraping looks suspicious. When that happens, the security service blocks the traffic from your IP address to protect the site against potential threats.
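Before trying any fix, it helps to confirm that a failed request is really this error. The snippet below is a minimal sketch of such a check. It assumes the block arrives as an HTTP 403 response whose body mentions the code 1020 next to "error code" or "access denied", which is how it commonly shows up. The function name `looks_like_error_1020` is hypothetical, and the check is a heuristic, not an official Cloudflare contract.

```python
def looks_like_error_1020(status_code: int, body: str) -> bool:
    """Heuristic check for a Cloudflare Error 1020 block.

    Assumption: the block is served as HTTP 403 and the body mentions
    the code 1020 together with "error code" or "access denied".
    """
    if status_code != 403:
        return False
    text = body.lower()
    return "1020" in text and ("error code" in text or "access denied" in text)
```

You'd call it with the status code and response text returned by whichever HTTP client you use.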

Let's see what you can do to resolve this issue.

How to Bypass Cloudflare Error 1020

To bypass Cloudflare Error 1020, you can rely on the following tried-and-tested solutions:

1. Use a Rotating Proxy to Hide Your IP

One method worth trying is using a rotating proxy to hide your IP address. It'll automatically switch your IP every few minutes or per request, making it difficult for the website to detect and block you.

Note that free proxies fail often and are easy to detect. Take a look at our guide on web scraping proxies to learn which solutions work best.

Also, consider using a reputable proxy provider like ZenRows, which offers a large pool of residential IPs and auto-rotates them for you.
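To make per-request rotation concrete, here's a minimal sketch that uses only Python's standard library, so it runs without extra dependencies. The proxy addresses are placeholders from a documentation-only IP range, and the helper names (`next_proxy`, `build_opener_for`, `fetch`) are hypothetical. Swap in the endpoints your provider gives you.

```python
import itertools
import urllib.request

# Placeholder proxy endpoints (assumption): replace with real ones.
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]

# cycle() loops over the list forever, so each call gets the next proxy.
_proxy_pool = itertools.cycle(PROXIES)


def next_proxy() -> str:
    """Return the next proxy in round-robin order."""
    return next(_proxy_pool)


def build_opener_for(proxy_url: str) -> urllib.request.OpenerDirector:
    """Create an opener that routes HTTP and HTTPS traffic through one proxy."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


def fetch(url: str, timeout: float = 10.0) -> bytes:
    """Fetch a URL, using a different proxy on every call."""
    opener = build_opener_for(next_proxy())
    with opener.open(url, timeout=timeout) as response:
        return response.read()
```

Round-robin is the simplest rotation policy. A provider that auto-rotates IPs for you makes the pool management unnecessary.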

2. Customize and Rotate User-Agent Headers

As Error 1020 might mean Cloudflare isn't happy with your browser signature, one solution is to customize the User-Agent header in your scraping code. The UA is the most important HTTP header for scraping, identifying the client making the request to the server.

The firewall uses this string to detect and block bots, so rotating realistic User-Agents can help you bypass Error 1020. You can find working UA headers on our list of best User-Agents for web scraping. Additionally, you can find best practices for rotating them at scale in our guide on User Agents in Python Requests.
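As a rough illustration, the sketch below picks a random User-Agent for each request using only Python's standard library. The UA strings are examples that may be outdated by the time you read this, and `build_request` is a hypothetical helper name. Treat the list as something you maintain yourself.

```python
import random
import urllib.request

# Example browser User-Agent strings (assumption: keep these up to date).
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.1 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0",
]


def build_request(url: str) -> urllib.request.Request:
    """Build a request that carries a randomly chosen User-Agent header."""
    headers = {"User-Agent": random.choice(USER_AGENTS)}
    return urllib.request.Request(url, headers=headers)
```

You can then pass the result to `urllib.request.urlopen()`. The same idea carries over to other HTTP clients by setting the `User-Agent` header on each request.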

3. Mask Headless Browser with Undetected ChromeDriver

A headless browser runs without a graphical user interface. It's used for web scraping because it renders pages like a regular browser and can simulate human behavior, making it less likely to trigger Cloudflare's security features. Puppeteer, Playwright, and [Selenium can successfully bypass Cloudflare](https://www.zenrows.com/blog/selenium-cloudflare-bypass).

The problem is that headless browsers have a set of features that make them easy to detect by Cloudflare. You can use a plugin like Undetected ChromeDriver, a patched version of ChromeDriver, to mask these properties and stay under the anti-bot radar.
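Here's a minimal sketch of what that can look like in Python. It assumes the `undetected-chromedriver` package and a local Chrome installation are available. The function name `fetch_page_title` is hypothetical, and there's no guarantee this setup gets past any particular site's rules.

```python
def fetch_page_title(url: str, headless: bool = False) -> str:
    """Open a page with Undetected ChromeDriver and return its title."""
    # Imported here so the rest of a script still loads without the package.
    import undetected_chromedriver as uc

    options = uc.ChromeOptions()
    if headless:
        # Headless mode tends to be easier to detect, so it is opt-in here.
        options.add_argument("--headless=new")

    driver = uc.Chrome(options=options)
    try:
        driver.get(url)
        return driver.title
    finally:
        # Always close the browser, even if the page load fails.
        driver.quit()
```

Calling `fetch_page_title("https://example.com")` launches Chrome, loads the page, and returns its title.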

4. Use a Web Scraping API

A web scraping API like ZenRows combines the above-mentioned techniques and many others to bypass Cloudflare Error 1020. It's the most reliable tool you can use to extract the data you need, and you can get a free API key to try it now.

Frequent Questions

How to Bypass Cloudflare 1020?

To bypass Cloudflare Error 1020, you'll usually have to combine different techniques, like:

- Customizing your client's User-Agent header.

- Masking the automation properties of headless browsers with Undetected ChromeDriver.

- Using a rotating proxy to hide your IP.

Alternatively, an API like ZenRows applies those techniques, and others, for you automatically.

Why Am I Getting Error 1020 When Scraping a Website?

You're getting Error 1020 when scraping a website because Cloudflare detected suspicious activity from your client or browser. That happens for several reasons, including:

- Scraping with non-browser User-Agents like the ones HTTP libraries use.

- Using headless browsers not tailored for web scraping.

- Making requests from the same IP too frequently.

- Displaying behavior different from a typical user.
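The request-frequency cause is one you can address directly in your code. The sketch below spaces requests with a random pause so they don't arrive in a machine-like rhythm. The function names and the 2 to 6 second range are assumptions for illustration, and `fetch` stands for whatever function you already use to download a page.

```python
import random
import time
from typing import Callable, Iterable, List


def jittered_delay(min_seconds: float = 2.0, max_seconds: float = 6.0) -> float:
    """Pick a random pause length so requests are not evenly spaced."""
    return random.uniform(min_seconds, max_seconds)


def crawl_politely(
    urls: Iterable[str],
    fetch: Callable[[str], object],
    min_seconds: float = 2.0,
    max_seconds: float = 6.0,
    sleep: Callable[[float], None] = time.sleep,
) -> List[object]:
    """Fetch each URL in order, pausing a random amount between requests."""
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            # No pause before the first request, only between requests.
            sleep(jittered_delay(min_seconds, max_seconds))
        results.append(fetch(url))
    return results
```

The `sleep` parameter exists so you can swap in a fake during testing instead of waiting for real pauses.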

Can a VPN Solve Error 1020 When Scraping a Website?

Sometimes, using a VPN can solve Error 1020 when scraping a website. However, it's not a guaranteed solution, as Cloudflare's security features can often detect VPN and proxy traffic, which may trigger the same error.
