What Is the Puppeteer 403 Forbidden Error?

The Puppeteer 403 Forbidden error corresponds to the HTTP status code 403: Forbidden, which a server returns when it understands your request but refuses to fulfill it. It's one of the most common responses to blocked requests in web scraping.

When a protected website detects signs of automation, it blocks your request and responds with this status code. In an HTTP client's error output, it typically looks like this:

HTTPError: 403 Client Error: Forbidden for url: https://www.g2.com/products/asana/reviews
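
Note that Puppeteer itself doesn't throw an exception for a 403: `page.goto()` resolves with a response object whose `status()` you can inspect. Below is a minimal sketch of that check. The `isForbidden` name is a hypothetical helper for illustration, not part of Puppeteer.

```javascript
// Hypothetical helper: returns true when a Puppeteer HTTPResponse reports a 403.
// page.goto() can resolve to null (for example, on same-page anchor navigation),
// so the helper guards against a missing response.
function isForbidden(response) {
  return response !== null && response !== undefined && response.status() === 403;
}

// Usage inside an async function with an open page:
//   const response = await page.goto('https://www.g2.com/products/asana/reviews');
//   if (isForbidden(response)) {
//     console.log(`Blocked with 403: ${response.url()}`);
//   }
```

Checking the status explicitly lets your scraper react to a block (retry, switch proxy, slow down) instead of silently parsing an error page.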

You’ll learn how to overcome it in this article.

How to Overcome 403 Forbidden Error with Puppeteer

Let's explore five proven techniques:

- Use a proxy to web scrape.

- Adjust your frequency of requests.

- Change your User-Agent in Puppeteer.

- Optimize your HTTP headers.

- Leverage the Puppeteer Stealth extension.

We'll mostly focus on the solutions for the Puppeteer 403 error when web scraping.

1. Use a Proxy to Web Scrape

Proxies help in bypassing rate limits and preventing your IP from being blacklisted. They act as intermediaries between your scraper and the target website, receiving your request and sending it to the web server from a different IP address.

To implement a Puppeteer proxy, grab a free proxy from FreeProxyList for testing and pass it to the browser with the --proxy-server command-line option at launch.

const puppeteer = require('puppeteer');

(async () => {

// specify the proxy server

const proxyServer = 'http://20.219.176.57:3129';

// launch Puppeteer with the proxy

const browser = await puppeteer.launch({

args: [`--proxy-server=${proxyServer}`],

});

// open a new page

const page = await browser.newPage();

// navigate to a website to check the IP (httpbin.org in this case)

await page.goto('http://httpbin.org/ip');

// get the HTML text content

const htmlTextContent = await page.evaluate(() => document.body.textContent);

// log only the HTML text content

console.log(htmlTextContent);

// close the browser

await browser.close();

})();

We used Httpbin as the target URL because it returns the IP address the request came from, so you can verify that the proxy worked.

We used a free proxy here, but free proxies are unreliable and easily detected, so you'll need premium proxies for real projects. Rotating proxies (changing the IP address, for example, on every request) further increases your chances of avoiding detection, which means you'll also need a proxy pool for the best results. Want a quick way to get both?

ZenRows is a web scraping API that offers premium proxies and rotates them automatically under the hood. It can complement Puppeteer or replace it entirely, since it offers the same headless browser functionality along with the other features you need to avoid getting blocked: anti-CAPTCHA, User-Agent rotation, and more.

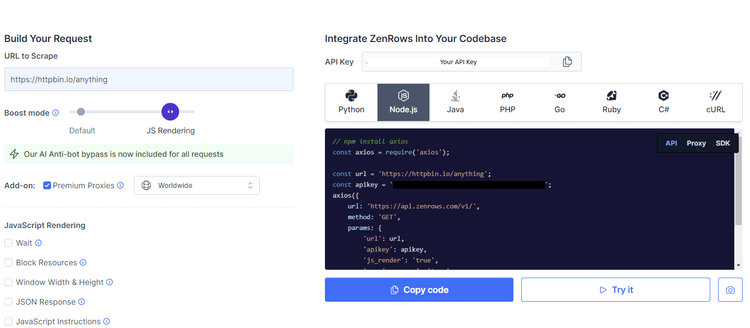

To try it for free, sign up, and you'll get redirected to the Request Builder page.

Paste your target URL, check the box for Premium Proxies to implement proxies, and activate the JavaScript Rendering boost mode if needed.

Then, select a language (Node.js), and you’ll get your script ready to try. Run it, and you'll get access to the HTML content of your target website.

Awesome, right? That's how easy it is to scrape using ZenRows.

If you’d prefer to integrate this web scraping API with Puppeteer, check out the documentation.
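
If you manage your own proxy pool in plain Puppeteer instead, the rotation idea described earlier in this section could look roughly like the sketch below. The `createProxyRotator` helper and the proxy addresses are hypothetical placeholders (the IPs come from a range reserved for documentation), so swap in proxies you actually control.

```javascript
// Hypothetical pool: replace these documentation-range placeholders with real proxies.
const proxyPool = [
  'http://203.0.113.10:8080',
  'http://203.0.113.11:8080',
  'http://203.0.113.12:8080',
];

// Returns a function that yields Puppeteer launch options,
// cycling through the pool in round-robin order.
function createProxyRotator(proxies) {
  if (proxies.length === 0) {
    throw new Error('The proxy pool must not be empty');
  }
  let index = 0;
  return function nextLaunchOptions() {
    const proxyServer = proxies[index];
    index = (index + 1) % proxies.length;
    return { args: [`--proxy-server=${proxyServer}`] };
  };
}

// Usage inside an async function:
//   const nextLaunchOptions = createProxyRotator(proxyPool);
//   const browser = await puppeteer.launch(nextLaunchOptions());
```

Because --proxy-server is a launch flag, it applies to the whole browser instance, so this sketch rotates by launching a new browser for each proxy rather than switching per request.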

2. Adjust Your Frequency of Requests

Too many requests within short time frames can result in a 403 Forbidden error because websites often set thresholds on request frequency to regulate traffic and prevent abuse.

A solution is to adjust the frequency of your requests by adding delays between them so that you mimic natural browsing behavior and reduce the likelihood of triggering anti-scraping measures. In Puppeteer, use the setTimeout() function and a Promise to introduce delays. Below is a code snippet illustrating how.

const puppeteer = require('puppeteer');

const delay = (ms) => new Promise(resolve => setTimeout(resolve, ms));

(async () => {

const browser = await puppeteer.launch();

const page = await browser.newPage();

// list of URLs to scrape

const urls = ['https://example.com/page1', 'https://example.com/page2', 'https://example.com/page3'];

for (const url of urls) {

await page.goto(url);

// example scraping logic: Get and log the page title

const pageTitle = await page.title();

console.log(`Title of ${url}: ${pageTitle}`);

// introduce a delay of 3 seconds before the next request

await delay(3000);

}

// Close the browser

await browser.close();

})();
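
A fixed 3-second pause is itself a regular pattern, so one possible refinement is to randomize the wait. The sketch below is an assumption about how you might do that, not a Puppeteer feature: `randomDelayMs` is a hypothetical helper, and the 2 to 5 second range is an arbitrary example.

```javascript
// Promise-based sleep, the same idea as the delay() helper in the script above.
const delay = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Hypothetical helper: returns an integer number of milliseconds in [minMs, maxMs].
// `random` is injectable so the function can be tested deterministically.
function randomDelayMs(minMs, maxMs, random = Math.random) {
  return Math.floor(minMs + random() * (maxMs - minMs + 1));
}

// Usage inside the scraping loop, waiting between 2 and 5 seconds:
//   await delay(randomDelayMs(2000, 5000));
```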

3. Change Your User-Agent in Puppeteer

The User-Agent string in Puppeteer is a crucial HTTP header. It identifies your web client and its characteristics to the server, which websites often use to tailor their responses based on the browser type, operating system, or device making the request.

Anti-bot systems also use it to spot automation. As the default headers in the next section show, headless Puppeteer announces itself with a HeadlessChrome token in its User-Agent, so leaving it unchanged makes you easy to identify as a bot and block with a 403. Therefore, changing your User-Agent string to mimic an actual browser is critical to avoid detection.

Puppeteer provides the page.setUserAgent() method for setting a custom user agent:

const puppeteer = require('puppeteer');

(async () => {

// launch a headless browser

const browser = await puppeteer.launch();

// open a new page

const page = await browser.newPage();

// set a custom user agent (replace it with your desired user agent)

await page.setUserAgent('Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36');

// navigate to the target URL

await page.goto('http://httpbin.org/user-agent');

// get the HTML text content

const htmlContent = await page.evaluate(() => document.body.textContent);

// print the HTML content to the console

console.log(htmlContent);

// close the browser

await browser.close();

})();

Check out our list of User Agents for web scraping for easy use.
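
If you keep several User-Agent strings on hand, you can pick a different one per session. The following is a minimal sketch under that assumption: `pickUserAgent` is a hypothetical helper, and the pool simply reuses the two desktop Chrome strings that appear in this article.

```javascript
// Pool built from the two desktop Chrome User-Agent strings that appear in this article.
const userAgents = [
  'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36',
  'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36',
];

// Hypothetical helper: returns a random entry from the pool.
// `random` is injectable so the choice can be made deterministic in tests.
function pickUserAgent(pool, random = Math.random) {
  return pool[Math.floor(random() * pool.length)];
}

// Usage inside an async function with an open page:
//   await page.setUserAgent(pickUserAgent(userAgents));
```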

4. Optimize Your HTTP Headers

While the User-Agent (UA) string is one of the most important HTTP headers, it isn't the only one. To increase your chances of avoiding detection, you should optimize the other headers as well.

Here's what Puppeteer's default request headers look like.

Request Headers: {

'upgrade-insecure-requests': '1',

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) HeadlessChrome/119.0.0.0 Safari/537.36',

'sec-ch-ua': '"Chromium";v="119", "Not?A_Brand";v="24"',

'sec-ch-ua-mobile': '?0',

'sec-ch-ua-platform': '"Windows"'

}

Meanwhile, a real browser's request headers look something like this:

{

'accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7',

'accept-language': 'en-US,en;q=0.9',

'cache-control': 'max-age=0',

'cookie': 'prov=4568ad3a-2c02-1686-b062-b26204fd5a6a; usr=p=%5b10%7c15%5d%5b160%7c%3bNewest%3b%5d',

'referer': 'https://www.google.com/',

'sec-ch-ua': '"Not.A/Brand";v="8", "Chromium";v="114", "Google Chrome";v="114"',

'sec-ch-ua-mobile': '?0',

'sec-ch-ua-platform': '"Windows"',

'sec-fetch-dest': 'document',

'sec-fetch-mode': 'navigate',

'sec-fetch-site': 'cross-site',

'sec-fetch-user': '?1',

'upgrade-insecure-requests': '1',

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36'

}

From the examples above, it's clear that Puppeteer's default headers don't include everything needed to mimic a real browser. Headers such as referer, cookie, accept, and accept-language are absent. By adding the missing headers in the correct order, you can get your scraper closer to an actual browser.
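
To make the comparison concrete, the sketch below diffs the header names from the two listings above. `missingHeaders` is a hypothetical helper written for this illustration; the name lists are copied from the examples in this section.

```javascript
// Header names from the Puppeteer default headers shown above.
const puppeteerHeaderNames = [
  'upgrade-insecure-requests', 'user-agent',
  'sec-ch-ua', 'sec-ch-ua-mobile', 'sec-ch-ua-platform',
];

// Header names from the real-browser example shown above.
const browserHeaderNames = [
  'accept', 'accept-language', 'cache-control', 'cookie', 'referer',
  'sec-ch-ua', 'sec-ch-ua-mobile', 'sec-ch-ua-platform',
  'sec-fetch-dest', 'sec-fetch-mode', 'sec-fetch-site', 'sec-fetch-user',
  'upgrade-insecure-requests', 'user-agent',
];

// Hypothetical helper: lists the reference header names that the sent set lacks.
// Header names are case-insensitive, so both sides are compared in lowercase.
function missingHeaders(sentNames, referenceNames) {
  const sent = new Set(sentNames.map((name) => name.toLowerCase()));
  return referenceNames.filter((name) => !sent.has(name.toLowerCase()));
}

console.log(missingHeaders(puppeteerHeaderNames, browserHeaderNames));
```

For these two listings, the helper reports nine names: the four mentioned above plus cache-control and the four sec-fetch-* headers.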

To set additional headers, Puppeteer provides the page.setExtraHTTPHeaders() method. Here's an example showing how to implement it.

const puppeteer = require('puppeteer');

(async () => {

const browser = await puppeteer.launch();

const page = await browser.newPage();

// set custom headers to mimic a real browser

await page.setExtraHTTPHeaders({

'referer': 'https://www.google.com/',

'accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7',

'accept-language': 'en-US,en;q=0.9',

'cookie': 'prov=4568ad3a-2c02-1686-b062-b26204fd5a6a; usr=p=%5b10%7c15%5d%5b160%7c%3bNewest%3b%5d',

});

// navigate to the target URL

await page.goto('http://httpbin.org/headers');

// get the HTML text content of the page

const htmlContent = await page.evaluate(() => document.body.textContent);

// print the HTML text content to the console

console.log(htmlContent);

// close the browser

await browser.close();

})();

Additionally, it's important to note that while HTTP itself doesn't assign meaning to the order of request headers, anti-bot systems can still fingerprint it. Automation tools like Puppeteer send their default headers in a characteristic arrangement, and keeping that arrangement can get you flagged.

Check out our guide on how to set Puppeteer headers to learn more.

5. Leverage the Puppeteer Stealth Extension

Puppeteer Stealth (the puppeteer-extra-plugin-stealth package) is a plugin for puppeteer-extra, a wrapper around Puppeteer. It's designed to mitigate anti-scraping mechanisms and overcome challenges such as the Puppeteer 403 Forbidden error.

The plugin applies a set of evasions that mask Puppeteer's automation signals, such as the navigator.webdriver property and iframe.contentWindow inconsistencies, so the automated browser looks more like a regular one.

Check out our Puppeteer Stealth tutorial to learn more.
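
As a starting point, here is a minimal sketch of wiring the plugin in. It assumes the puppeteer, puppeteer-extra, and puppeteer-extra-plugin-stealth packages are installed; `createStealthPuppeteer` and `getStatus` are hypothetical helper names used only for this illustration.

```javascript
// Assumes: npm install puppeteer puppeteer-extra puppeteer-extra-plugin-stealth

// Hypothetical helper: returns puppeteer-extra with the stealth plugin registered.
// The packages are loaded lazily, and the function is meant to be called once.
function createStealthPuppeteer() {
  const puppeteer = require('puppeteer-extra');
  const StealthPlugin = require('puppeteer-extra-plugin-stealth');
  puppeteer.use(StealthPlugin());
  return puppeteer;
}

// Hypothetical helper: opens `url` with the given launcher and returns the HTTP
// status, so you can compare plain Puppeteer against the stealth setup.
async function getStatus(launcher, url) {
  const browser = await launcher.launch();
  try {
    const page = await browser.newPage();
    const response = await page.goto(url);
    return response ? response.status() : null;
  } finally {
    // always release the browser, even if navigation fails
    await browser.close();
  }
}

// Usage inside an async function:
//   const stealthPuppeteer = createStealthPuppeteer();
//   console.log(await getStatus(stealthPuppeteer, 'https://www.g2.com/products/asana/reviews'));
```

Passing the launcher as a parameter keeps `getStatus` identical for both setups, which makes it easy to check whether the plugin changes the response you get from a given site.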

Conclusion

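Puppeteer is a powerful headless browser automation library for extracting data from websites, but it comes with challenges, notably the Puppeteer 403 Forbidden error. We've explored five techniques to help you avoid it: proxies, request pacing, a custom User-Agent, complete HTTP headers, and the Puppeteer Stealth plugin.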

However, you might still get blocked by advanced anti-bot mechanisms. To guarantee the bypass of any anti-scraping measures, try ZenRows for free.
