Are you trying to navigate through multiple pages with Puppeteer to scrape the content?

In this tutorial, you'll learn how to handle pagination with Puppeteer in the different scenarios you're likely to face.

When You Have a Navigation Page Bar

Navigation page bars are the simplest form of website pagination, and there are two ways to extract data from them: using the next page link or changing the page number in the URL.

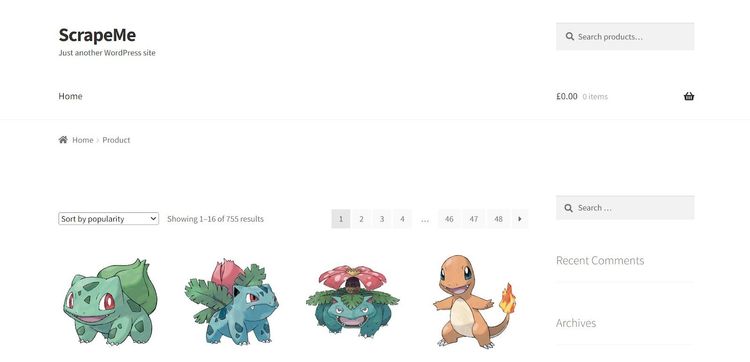

We'll use ScrapeMe as a demo website in this example. See the layout below:

You're about to scrape a 48-page website using Puppeteer pagination. Let’s get started!

Use the Next Page Link

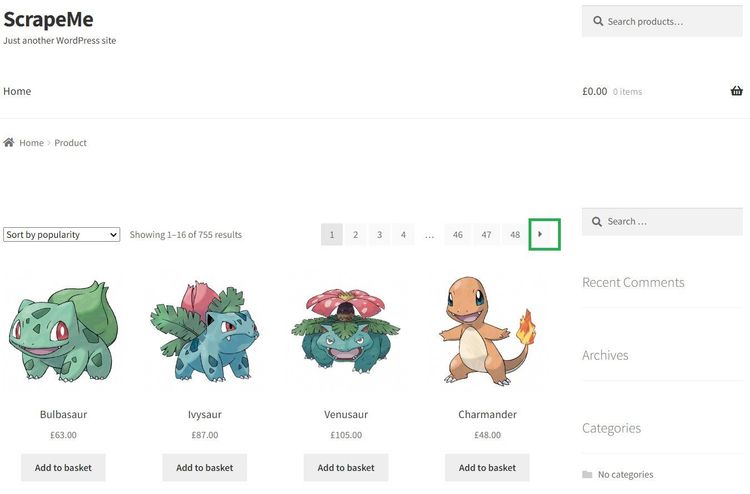

This method involves clicking the next page link continuously until there are no more pages. So the target website has a next link arrow that looks like this:

You’ll simulate a click on that next arrow element in Puppeteer. First, we write our scraping logic inside a function.

The function accepts a page parameter and obtains all the product container elements using a CSS selector. It then iterates through the containers to obtain the relevant product information:

```javascript
// import the required library
const puppeteer = require('puppeteer');

async function scrapeProducts(page) {
  // use page.$$ to find all matching elements on the current page
  const productElementsHandle = await page.$$('.woocommerce-LoopProduct-link');

  // check if the elements are present
  if (productElementsHandle.length > 0) {
    // iterate through each element and extract text content
    for (const productElementHandle of productElementsHandle) {
      const productText = await page.evaluate(productElement => productElement.textContent, productElementHandle);
      console.log(productText);
    }
  } else {
    console.log('No product elements found');
  }
}
```

Next, create the navigation logic that initializes a headless browser and page instance. It opens the target web page and executes the scraping function to scrape the first page.

```javascript
// import the required library
const puppeteer = require('puppeteer');

//...

(async () => {
  // launch the headless browser instance
  const browser = await puppeteer.launch({ headless: 'new' });

  // create a new page instance
  const page = await browser.newPage();

  // visit the target website
  await page.goto('https://scrapeme.live/shop/', { timeout: 60000 });

  // call the function to scrape products on the current page
  await scrapeProducts(page);
})();
```

Our Puppeteer script needs to keep scraping until there are no more pages. So, we use a loop that keeps clicking the next page link and calls the scraping function again on each iteration.

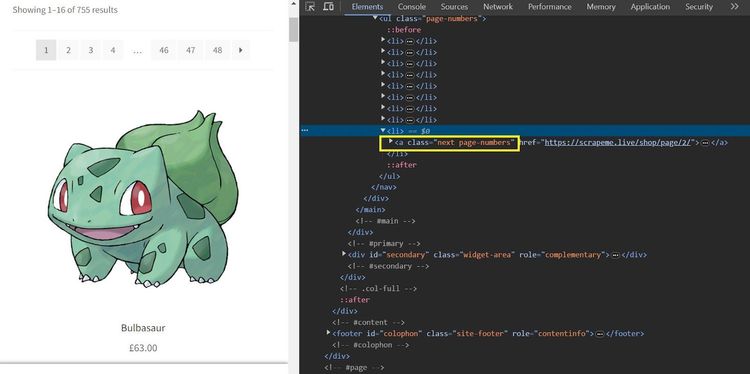

Before extending the navigation logic, here's the next page element in the inspection tab:

Inside the loop, the code checks for the next page link. If the link exists, the code clicks it, waits for the navigation to complete, logs the current page URL, and calls the scraping function; otherwise, the loop ends.

```javascript
(async () => {
  //...

  // set last page reached to false
  let lastPageReached = false;

  // keep scraping if not the last page
  while (!lastPageReached) {
    const nextPageLink = await page.$('.next.page-numbers');

    if (!nextPageLink) {
      console.log('No more pages. Exiting.');
      lastPageReached = true;
    } else {
      // click the next page link and wait for the navigation it triggers;
      // starting both together avoids missing a navigation that finishes quickly
      await Promise.all([
        page.waitForNavigation(),
        nextPageLink.click(),
      ]);

      // track the current URL
      const URL = page.url();
      console.log(URL);

      // call the function to scrape products on the current page
      await scrapeProducts(page);
    }
  }

  // close the browser
  await browser.close();
})();
```

Let's put the code together in one piece:

```javascript
// import the required library
const puppeteer = require('puppeteer');

async function scrapeProducts(page) {
  // use page.$$ to find all matching elements on the current page
  const productElementsHandle = await page.$$('.woocommerce-LoopProduct-link');

  // check if the elements are present
  if (productElementsHandle.length > 0) {
    // iterate through each element and extract text content
    for (const productElementHandle of productElementsHandle) {
      const productText = await page.evaluate(productElement => productElement.textContent, productElementHandle);
      console.log(productText);
    }
  } else {
    console.log('No product elements found');
  }
}

(async () => {
  // launch the headless browser instance
  const browser = await puppeteer.launch({ headless: 'new' });

  // create a new page instance
  const page = await browser.newPage();

  // visit the target website
  await page.goto('https://scrapeme.live/shop/', { timeout: 60000 });

  // call the function to scrape products on the current page
  await scrapeProducts(page);

  // set last page reached to false
  let lastPageReached = false;

  // keep scraping if not the last page
  while (!lastPageReached) {
    const nextPageLink = await page.$('.next.page-numbers');

    if (!nextPageLink) {
      console.log('No more pages. Exiting.');
      lastPageReached = true;
    } else {
      // click the next page link and wait for the navigation it triggers;
      // starting both together avoids missing a navigation that finishes quickly
      await Promise.all([
        page.waitForNavigation(),
        nextPageLink.click(),
      ]);

      // track the current URL
      const URL = page.url();
      console.log(URL);

      // call the function to scrape products on the current page
      await scrapeProducts(page);
    }
  }

  // close the browser
  await browser.close();
})();
```

Running the code scrapes the paginated website to the last product page, as shown:

```
//... other products omitted for brevity
Naganadel
£30.00
Stakataka
£190.00
Blacephalon
£149.00
```

You just scraped all the pages of a website using Puppeteer pagination.

Let's consider the second technique for obtaining a similar result.

Change the Page Number in the URL

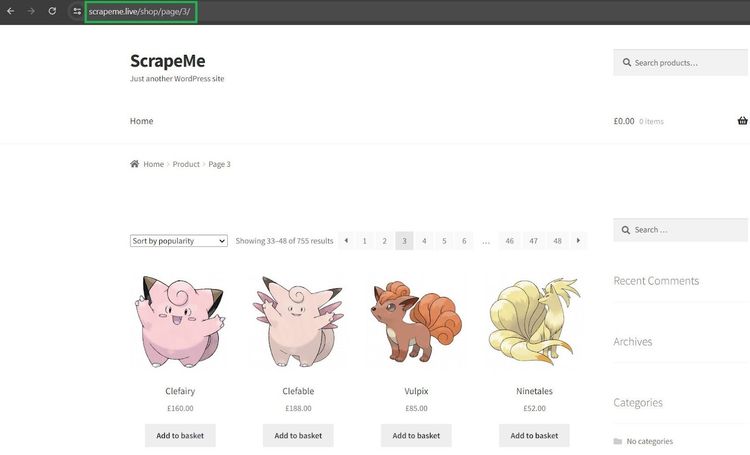

In this method, you'll dynamically append page numbers to the website's base URL and extract content from each page iteratively. Before you move on, see how the website displays the page number in the URL box.

The URL format for the third page is https://scrapeme.live/shop/page/3/. You'll use this format to increment the page number.
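Because the pattern is predictable, the page URLs can also be generated up front. The sketch below assumes the `/shop/page/<n>/` format holds for every page; `buildPageUrls` is a hypothetical helper name, not part of Puppeteer. It uses the `URL` constructor, which resolves the relative path against the base URL, so the base must end with a slash (`https://scrapeme.live/shop` without one would resolve to `https://scrapeme.live/page/3/`).

```javascript
// Build one URL per page from the base URL (hypothetical helper, assuming the
// /shop/page/<n>/ pattern applies to every page up to lastPage)
function buildPageUrls(baseUrl, lastPage) {
  const urls = [];
  for (let pageNumber = 1; pageNumber <= lastPage; pageNumber++) {
    // resolve "page/<n>/" relative to the base URL
    urls.push(new URL(`page/${pageNumber}/`, baseUrl).href);
  }
  return urls;
}

const urls = buildPageUrls('https://scrapeme.live/shop/', 48);
console.log(urls.length); // 48
console.log(urls[2]); // https://scrapeme.live/shop/page/3/
```

Each URL could then be passed to `page.goto` in a `for...of` loop instead of maintaining a page counter.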

As done in the previous method, let's handle the scraping logic inside a function. This function accepts a page argument and obtains product information by iterating through the product containers:

```javascript
const puppeteer = require('puppeteer');

async function scrapeProducts(page) {
  // get product containers
  const productElementsHandle = await page.$$('.woocommerce-LoopProduct-link');

  // check if elements were found
  if (productElementsHandle.length > 0) {
    // iterate through the product containers and extract text
    for (const productElementHandle of productElementsHandle) {
      const productText = await page.evaluate(productElement => productElement.textContent, productElementHandle);
      console.log(productText);
    }
  } else {
    console.log('No product elements found');
  }
}
```

The navigation logic below launches the browser instance, opens a new page, and defines the base URL. Notably, it sets the current page number to 1, which we'll increment in a while loop later.

We've set the last page to 48 based on the number of pages on the target website:

```javascript
// import the required library
const puppeteer = require('puppeteer');

//...

(async () => {
  const browser = await puppeteer.launch({ headless: 'new' });
  const page = await browser.newPage();
  const baseUrl = 'https://scrapeme.live/shop/';
  let currentPage = 1;

  // use let: the loop sets this flag to true once the last page is reached
  let lastPageReached = false;

  // set the last page based on the number of pages on the target website
  const lastPage = 48;
})();
```

Now, let's unravel what's in the while loop. The loop appends the current page number to the base URL and visits each resulting URL in turn.

The logic ends the scraping loop once the current page reaches the last page number; otherwise, it increments the current page number. Finally, we add a short delay before requesting the next page:

```javascript
(async () => {
  //...

  while (!lastPageReached) {
    // append the page number to the base URL
    const currentUrl = `${baseUrl}page/${currentPage}`;
    await page.goto(currentUrl, { timeout: 60000 });

    // track the current URL
    const URL = page.url();
    console.log(URL);

    // call the function to scrape products on the current page
    await scrapeProducts(page);

    // check if the last page is reached
    if (currentPage === lastPage) {
      console.log('No more pages. Exiting.');
      lastPageReached = true;
    } else {
      // increment the page counter
      currentPage++;

      // pause between page requests (adjust as needed);
      // the await is required, or the loop would not wait at all
      await new Promise(r => setTimeout(r, 2000)); // 2 seconds
    }
  }

  await browser.close();
})();
```
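The delay only pauses the loop when its promise is awaited. Wrapping `setTimeout` in a `new Promise` without `await` starts a timer that nothing waits for, so the loop moves straight on. This self-contained sketch (no Puppeteer needed; `sleep` and `timeLoop` are illustrative names) times both versions:

```javascript
// Promise-based pause: resolves after `ms` milliseconds
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Time a loop that "pauses" on every iteration, with or without awaiting the pause
async function timeLoop(iterations, delayMs, awaitDelay) {
  const start = Date.now();
  for (let i = 0; i < iterations; i++) {
    if (awaitDelay) {
      await sleep(delayMs); // the loop really stops here
    } else {
      sleep(delayMs); // a timer starts, but nothing waits for it
    }
  }
  return Date.now() - start;
}

(async () => {
  // finishes almost immediately
  console.log('without await:', await timeLoop(3, 200, false), 'ms');
  // takes at least about 3 x 200 ms
  console.log('with await:', await timeLoop(3, 200, true), 'ms');
})();
```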

Here's the final code:

```javascript
// import the required library
const puppeteer = require('puppeteer');

async function scrapeProducts(page) {
  // get product containers
  const productElementsHandle = await page.$$('.woocommerce-LoopProduct-link');

  // check if elements were found
  if (productElementsHandle.length > 0) {
    // iterate through the product containers and extract text
    for (const productElementHandle of productElementsHandle) {
      const productText = await page.evaluate(productElement => productElement.textContent, productElementHandle);
      console.log(productText);
    }
  } else {
    console.log('No product elements found');
  }
}

// execute the scraping logic
(async () => {
  const browser = await puppeteer.launch({ headless: 'new' });
  const page = await browser.newPage();
  const baseUrl = 'https://scrapeme.live/shop/';
  let currentPage = 1;
  let lastPageReached = false;

  // set the last page based on the number of pages on the target website
  const lastPage = 48;

  while (!lastPageReached) {
    // append the page number to the base URL
    const currentUrl = `${baseUrl}page/${currentPage}`;
    await page.goto(currentUrl, { timeout: 60000 });

    // track the current URL
    const URL = page.url();
    console.log(URL);

    // call the function to scrape products on the current page
    await scrapeProducts(page);

    // check if the last page is reached
    if (currentPage === lastPage) {
      console.log('No more pages. Exiting.');
      lastPageReached = true;
    } else {
      // increment the page counter
      currentPage++;

      // pause between page requests (adjust as needed)
      await new Promise(r => setTimeout(r, 2000)); // 2 seconds
    }
  }

  await browser.close();
})();
```

The code outputs the product information from all pages, as shown:

```
//... other products omitted for brevity
Naganadel
£30.00
Stakataka
£190.00
Blacephalon
£149.00
```
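Hard-coding `lastPage = 48` works for this demo, but the count changes if the catalog grows. One option, sketched here under assumptions, is to read the pagination labels and take the largest number. `parseLastPage` is a hypothetical helper, and the sample labels are made up to resemble a bar with an ellipsis and a next arrow:

```javascript
// Return the highest page number among pagination labels, ignoring arrows and ellipses.
// Falls back to 1 when no numeric label exists (e.g., a single-page listing).
function parseLastPage(labels) {
  const numbers = labels
    .map((label) => Number.parseInt(label.trim(), 10))
    .filter((n) => Number.isInteger(n));
  return numbers.length > 0 ? Math.max(...numbers) : 1;
}

// Hypothetical labels, similar to a pagination bar with an ellipsis and a next arrow
console.log(parseLastPage(['1', '2', '3', '…', '47', '48', '→'])); // 48
```

In Puppeteer, the labels could come from `await page.$$eval('.page-numbers', (els) => els.map((el) => el.textContent))`, assuming every pagination item carries the `page-numbers` class, as the `.next.page-numbers` selector suggests. The result would then replace the hard-coded value.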

You now know how to scrape data from websites with navigation bars using Puppeteer. Congratulations!

However, there are more complex scenarios that involve JavaScript pagination. Let's see how to deal with those in the next section.

When JavaScript-Based Pagination is Required

JavaScript-paginated websites load more content dynamically in two common ways: via infinite scroll or a load more button. Puppeteer can scrape such dynamic content because it controls a real (headless) browser that runs the page's JavaScript.

You'll see how to handle JavaScript-based pagination in the next sections.

Infinite Scroll to Load More Content

Infinite scroll loads more content as you scroll down on a web page. This is common with social and e-commerce websites.

The scraping logic here is simple. Instruct Puppeteer to scroll down and wait for more content to load before extraction.

Let's see how it works by scraping product names and prices from ScrapingClub, a demo website that uses infinite scroll.

Here's what the website looks like:

We'll handle the entire scraping logic inside a function that tracks the page's previous scroll height. Most of the work happens inside a while loop, which starts by reading the current scroll height.

If the current height matches the previous one, no new content has loaded, so the loop breaks; otherwise, the code stores the current height as the new previous height:

```javascript
// import the required library
const puppeteer = require('puppeteer');

async function scrapeProducts(page) {
  const data = [];
  let previousHeight;

  while (true) {
    // get the current height of the page
    const currentHeight = await page.evaluate(() => document.body.scrollHeight);

    // if there is a previous height and it hasn't changed, break the loop
    if (previousHeight && currentHeight === previousHeight) {
      break;
    }

    // update the previous height
    previousHeight = currentHeight;
```

Next, we obtain the product containers from the DOM and iterate through them to scrape the desired content. We also strip newline characters and trim the surrounding whitespace from each entry.

The code then scrolls to the bottom of the page and repeats until no more content loads. Waiting after each scroll is essential to give new content time to load.

```javascript
    //...

    // get product containers
    const productElementsHandle = await page.$$('.w-full.rounded.border.post');

    // iterate through the product containers and extract text
    if (productElementsHandle.length > 0) {
      // skip containers scraped on a previous pass
      // (assumes new products are appended after existing ones)
      for (const productElementHandle of productElementsHandle.slice(data.length)) {
        const productText = await page.evaluate(productElement => productElement.textContent, productElementHandle);

        // remove newline characters
        const cleanProductText = productText.replace(/\n/g, '').trim();
        data.push(cleanProductText);
      }
    } else {
      console.log('No product elements found');
    }

    // scroll down to the bottom of the page
    await page.evaluate(() => {
      window.scrollTo(0, document.body.scrollHeight);
    });

    // wait for some time to allow content to load (adjust the time as needed)
    await new Promise(r => setTimeout(r, 5000));
  }

  console.log(data);
}
```
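Removing only `\n` keeps any indentation that sat between the lines, which can leave several spaces between a product name and its price. Here's a sketch of an alternative cleanup that collapses every whitespace run into one space, applied to a made-up `textContent` string (`cleanText` is a hypothetical helper):

```javascript
// Collapse every run of whitespace (newlines, tabs, spaces) into one space, then trim
function cleanText(raw) {
  return raw.replace(/\s+/g, ' ').trim();
}

// Hypothetical raw textContent of a product card
const raw = '\n    Jersey Dress\n    $19.99\n  ';

console.log(JSON.stringify(raw.replace(/\n/g, '').trim())); // "Jersey Dress    $19.99"
console.log(JSON.stringify(cleanText(raw))); // "Jersey Dress $19.99"
```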

The next code launches a browser instance and executes the scraping function:

```javascript
// import the required library
const puppeteer = require('puppeteer');

//...

// execute the scraping logic
(async () => {
  const browser = await puppeteer.launch({ headless: 'new' });
  const page = await browser.newPage();
  const URL = 'https://scrapingclub.com/exercise/list_infinite_scroll/';
  await page.goto(URL);

  // call the function to scrape products on the current page
  await scrapeProducts(page);

  await browser.close();
})();
```

Let's put it all together in one piece:

```javascript
// import the required library
const puppeteer = require('puppeteer');

async function scrapeProducts(page) {
  const data = [];
  let previousHeight;

  while (true) {
    // get the current height of the page
    const currentHeight = await page.evaluate(() => document.body.scrollHeight);

    // if there is a previous height and it hasn't changed, break the loop
    if (previousHeight && currentHeight === previousHeight) {
      break;
    }

    // update the previous height
    previousHeight = currentHeight;

    // get product containers
    const productElementsHandle = await page.$$('.w-full.rounded.border.post');

    // iterate through the product containers and extract text
    if (productElementsHandle.length > 0) {
      // skip containers scraped on a previous pass
      // (assumes new products are appended after existing ones)
      for (const productElementHandle of productElementsHandle.slice(data.length)) {
        const productText = await page.evaluate(productElement => productElement.textContent, productElementHandle);

        // remove newline characters
        const cleanProductText = productText.replace(/\n/g, '').trim();
        data.push(cleanProductText);
      }
    } else {
      console.log('No product elements found');
    }

    // scroll down to the bottom of the page
    await page.evaluate(() => {
      window.scrollTo(0, document.body.scrollHeight);
    });

    // wait for some time to allow content to load (adjust the time as needed)
    await new Promise(r => setTimeout(r, 5000));
  }

  console.log(data);
}

// execute the scraping logic
(async () => {
  const browser = await puppeteer.launch({ headless: 'new' });
  const page = await browser.newPage();
  const URL = 'https://scrapingclub.com/exercise/list_infinite_scroll/';
  await page.goto(URL);

  // call the function to scrape products on the current page
  await scrapeProducts(page);

  await browser.close();
})();
```

This scrolls the page until no new content loads and retrieves the products, as shown:

```
[
  //... other products omitted for brevity
  'Jersey Dress $19.99',
  'T-shirt $6.99',
  'T-shirt $6.99',
  'Blazer $49.99'
]
```
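The scroll loop has no upper bound, so a page that keeps loading content indefinitely would keep it running. The following sketch separates the stop condition from the browser calls and adds a `maxScrolls` cap. The names `scrollUntilStable`, `getHeight`, `scrollDown`, and `wait` are hypothetical, and the fake page only simulates heights so the logic can run in plain Node.js:

```javascript
// Scroll until the page height stops growing, capped at maxScrolls.
// getHeight, scrollDown and wait are injected callbacks (hypothetical names),
// so the loop logic can be exercised without a browser.
async function scrollUntilStable({ getHeight, scrollDown, wait, maxScrolls = 50 }) {
  let previousHeight = await getHeight();
  let scrolls = 0;

  while (scrolls < maxScrolls) {
    await scrollDown();
    await wait();
    scrolls++;

    const currentHeight = await getHeight();
    if (currentHeight === previousHeight) {
      break; // nothing new loaded after the last scroll
    }
    previousHeight = currentHeight;
  }

  return scrolls;
}

// Fake page whose height grows twice and then stays at 3000 px
const heights = [1000, 2000, 3000, 3000];
let heightCalls = 0;

scrollUntilStable({
  getHeight: async () => heights[Math.min(heightCalls++, heights.length - 1)],
  scrollDown: async () => {},
  wait: async () => {},
}).then((scrolls) => console.log('scrolls:', scrolls)); // scrolls: 3
```

With Puppeteer, `getHeight` might be `() => page.evaluate(() => document.body.scrollHeight)`, `scrollDown` might be `() => page.evaluate(() => window.scrollTo(0, document.body.scrollHeight))`, and `wait` a `setTimeout`-based promise. Scraping the product containers once after the helper returns, rather than on every pass, collects each product only once.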

Great job using Puppeteer pagination to scrape an infinite scroll!

But what if a website requires clicking a button to load more content? Let's handle that in the next section.

Click on a Button to Load More Content

This pagination method involves instructing Puppeteer to click a load more button to show and scrape content dynamically.

In this example, we'll scrape related movie details from the IMDb search page. Here's how the target website loads content:

Let's start by defining a function to handle the scraping logic. The loop inside the function runs as long as the load count is less than the specified maximum.

We've set the maximum load count to 10, so Puppeteer clicks the load more button at most 10 times. Feel free to adjust this value as needed.

Here's the scraping function:

```javascript
// import the required library
const puppeteer = require('puppeteer');

async function scrapeProducts(page) {
  const data = [];
  const maxLoad = 10;
  let loadCount = 0;

  // click load more until the button disappears or the max load count is reached
  while (loadCount < maxLoad) {
    const loadMoreButton = await page.$('.ipc-see-more__text');

    if (!loadMoreButton) {
      console.log('No more results to load');
      break;
    }

    await loadMoreButton.click();

    // wait for some time to allow content to load (adjust the time as needed)
    await new Promise(r => setTimeout(r, 1000));

    // increment loadCount after each click
    loadCount++;
  }

  if (loadCount === maxLoad) {
    console.log('Max page reached');
  }

  // scrape all loaded results once, so no result is collected twice
  const productElementsHandle = await page.$$('.ipc-metadata-list-summary-item__c');

  if (productElementsHandle.length > 0) {
    for (const productElementHandle of productElementsHandle) {
      const productText = await page.evaluate(productElement => productElement.textContent, productElementHandle);
      const cleanProductText = productText.replace(/\n/g, '').trim();
      data.push(cleanProductText);
    }
  } else {
    console.log('No product elements found');
  }

  console.log(data);
}
```

Your final code should look like this:

```javascript
// import the required library
const puppeteer = require('puppeteer');

async function scrapeProducts(page) {
  const data = [];
  const maxLoad = 10;
  let loadCount = 0;

  // click load more until the button disappears or the max load count is reached
  while (loadCount < maxLoad) {
    const loadMoreButton = await page.$('.ipc-see-more__text');

    if (!loadMoreButton) {
      console.log('No more results to load');
      break;
    }

    await loadMoreButton.click();

    // wait for some time to allow content to load (adjust the time as needed)
    await new Promise(r => setTimeout(r, 1000));

    // increment loadCount after each click
    loadCount++;
  }

  if (loadCount === maxLoad) {
    console.log('Max page reached');
  }

  // scrape all loaded results once, so no result is collected twice
  const productElementsHandle = await page.$$('.ipc-metadata-list-summary-item__c');

  if (productElementsHandle.length > 0) {
    for (const productElementHandle of productElementsHandle) {
      const productText = await page.evaluate(productElement => productElement.textContent, productElementHandle);
      const cleanProductText = productText.replace(/\n/g, '').trim();
      data.push(cleanProductText);
    }
  } else {
    console.log('No product elements found');
  }

  console.log(data);
}

(async () => {
  const browser = await puppeteer.launch({ headless: false });
  const page = await browser.newPage();
  const URL = 'https://www.imdb.com/find/?q=Godfather&ref_=nv_sr_sm';
  await page.goto(URL);
  await scrapeProducts(page);
  await browser.close();
})();
```

The code retrieves the movie information from the target website, as shown:

```
[
  //... other results omitted for brevity
  'Our Godfather2019Cristina Buscetta, Roberto Buscetta',
  'The Godfather: Part 22018TV SeriesDimsy Dohanji, Ahmed Hatem',
  'Godfather 42020ShortEinar, Þórður Logi Hauksson'
]
```
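A generic version of the load more loop can treat a missing button as the end of the results, which also guards against calling `click()` on `null`. In this sketch, `clickUntilExhausted` and its `findButton` and `wait` callbacks are hypothetical names, and a fake button stands in for the real page:

```javascript
// Click a "load more" control until it disappears or maxClicks is reached.
// findButton resolves to null when the button is gone; wait pauses for new results.
async function clickUntilExhausted({ findButton, wait, maxClicks = 10 }) {
  let clicks = 0;
  while (clicks < maxClicks) {
    const button = await findButton();
    if (!button) {
      break; // no more results to load
    }
    await button.click();
    await wait();
    clicks++;
  }
  return clicks;
}

// Fake button that vanishes after three clicks, standing in for a real page
let remaining = 3;
const fakeButton = { click: async () => { remaining--; } };

clickUntilExhausted({
  findButton: async () => (remaining > 0 ? fakeButton : null),
  wait: async () => {},
}).then((clicks) => console.log('clicks:', clicks)); // clicks: 3
```

With Puppeteer, `findButton` could be `() => page.$('.ipc-see-more__text')`, since `page.$` resolves to `null` when nothing matches.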

You just scraped dynamic content hidden behind a load more button. That's great!

But there's one more challenge to solve: getting blocked while scraping.

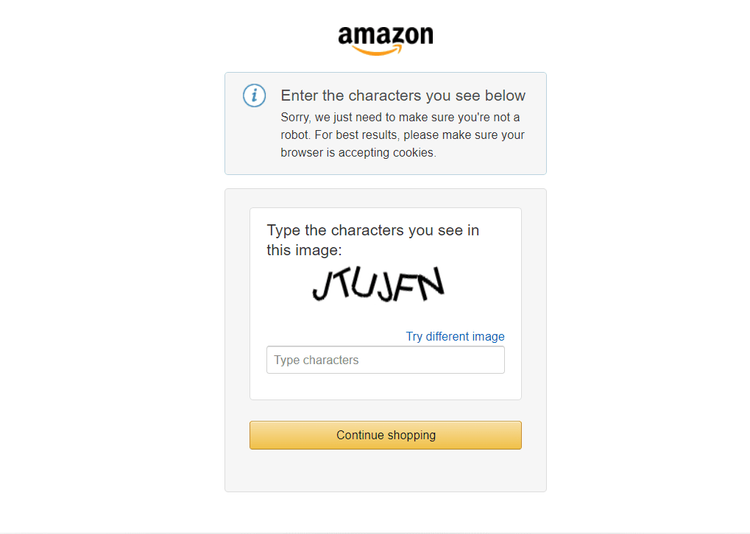

Getting Blocked when Scraping Multiple Pages with Puppeteer

Puppeteer presents bot-like properties that can get you blocked while scraping multiple pages. For instance, using Puppeteer to access a protected page like an Amazon category page may block your scraper.

Try to access Amazon and log the response status with the following code:

```javascript
// import the required library
const puppeteer = require('puppeteer');

(async () => {
  const browser = await puppeteer.launch({ headless: 'new' });
  const page = await browser.newPage();
  const url = 'https://www.amazon.com/s?k=headphones';
  const response = await page.goto(url);

  // check and log the response status
  if (response && response.status() !== 200) {
    console.error(`Failed to load the page. Status: ${response.status()}`);
    await browser.close();
    return;
  } else {
    console.log(response.status());
  }

  await browser.close();
})();
```

The target website blocks the request with the following response:

```
Failed to load the page. Status: 503
```

One way to avoid anti-bot detection and IP bans is to configure proxies for Puppeteer. Proxies mask your IP address, reducing the likelihood of being detected as a bot. You can also use the Puppeteer Stealth plugin to lower the chances of detection.
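As a rough sketch of the proxy approach, Chromium accepts a `--proxy-server` switch that Puppeteer can pass through the `args` launch option. The helper name `proxyLaunchOptions` is hypothetical, and the address (from the 203.0.113.0/24 documentation range) is a placeholder:

```javascript
// Launch options that route all browser traffic through one proxy server.
// --proxy-server is a Chromium command-line switch; the address is a placeholder.
function proxyLaunchOptions(proxyServer) {
  return {
    headless: 'new',
    args: [`--proxy-server=${proxyServer}`],
  };
}

const options = proxyLaunchOptions('http://203.0.113.10:8080');
console.log(options.args); // [ '--proxy-server=http://203.0.113.10:8080' ]
```

You would then pass the result to `puppeteer.launch(options)`. If the proxy requires credentials, `page.authenticate({ username, password })` supplies them. Because the switch applies to the whole browser, rotating proxies this way means launching a new browser for each proxy.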

You can also try to bypass anti-bots by rotating Puppeteer's user agent, which helps your scraper mimic a real browser. However, these methods alone usually aren't enough to avoid blocks completely.
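A minimal round-robin rotator could look like the sketch below. `createUserAgentRotator` is a hypothetical helper, and the two user agent strings are examples only; swap in current, real browser strings:

```javascript
// Return a function that hands out user agents in round-robin order
function createUserAgentRotator(userAgents) {
  if (userAgents.length === 0) {
    throw new Error('Provide at least one user agent');
  }
  let index = 0;
  return function nextUserAgent() {
    const userAgent = userAgents[index];
    index = (index + 1) % userAgents.length;
    return userAgent;
  };
}

// Example strings only; replace them with current browser user agents
const nextUserAgent = createUserAgentRotator([
  'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36',
  'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36',
]);

console.log(nextUserAgent() === nextUserAgent()); // false
```

Before each `page.goto`, calling `await page.setUserAgent(nextUserAgent())` sends consecutive pages out with different user agents.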

A more reliable way to avoid getting blocked is to use a web scraping solution like ZenRows. ZenRows helps autorotate premium proxies and user agents behind the scenes so you can focus on content scraping without worrying about blocks.

You can easily integrate ZenRows with Puppeteer to bypass anti-bot detection.

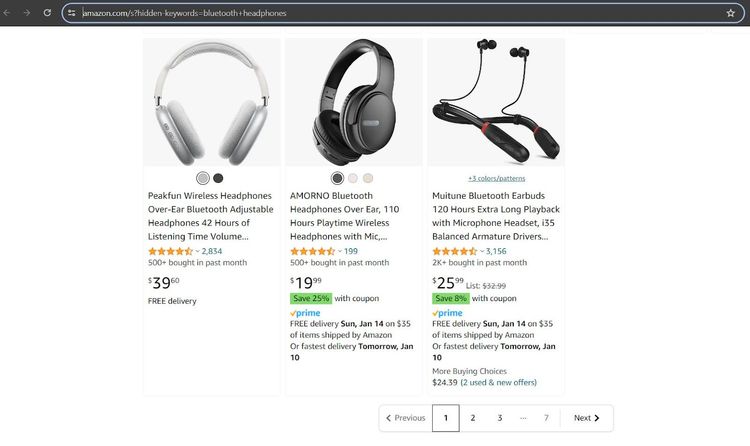

Let’s modify the previous code with ZenRows integration and scrape the first page on the Amazon category page. The page looks like so without a CAPTCHA:

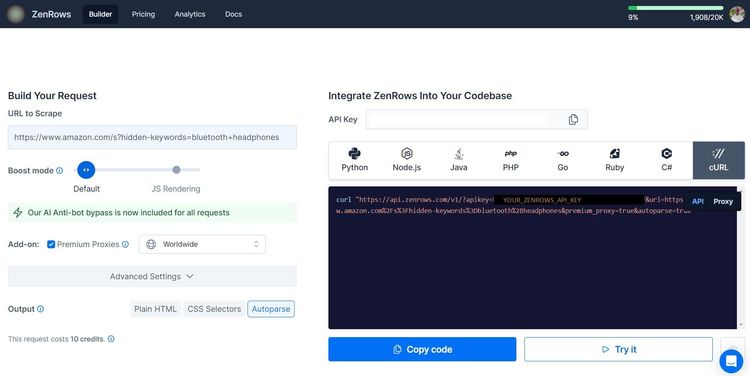

Next, sign up for free to open your ZenRows Request Builder.

Once in the Request Builder, click “Premium Proxies” and select “cURL” as your request type.

Add the generated API URL to your scraper and open it with Puppeteer:

```javascript
// import the required library
const puppeteer = require('puppeteer');

const url = 'https://www.amazon.com/s?hidden-keywords=bluetooth+headphones';

(async () => {
  const browser = await puppeteer.launch({ headless: 'new' });
  const page = await browser.newPage();

  try {
    // add the generated API URL on a single line (line breaks or spaces would corrupt it)
    const modifiedUrl = `https://api.zenrows.com/v1/?apikey=<YOUR_ZENROWS_API_KEY>&url=${encodeURIComponent(url)}&premium_proxy=true&autoparse=true`;

    // open the ZenRows API URL, which fetches the target page on your behalf
    const response = await page.goto(modifiedUrl);

    // validate the request and scrape product information
    if (response && response.status() === 200) {
      const extractedData = await page.evaluate(() => {
        return {
          // extract text content of the body
          message: document.body.textContent.trim(),
        };
      });

      console.log(extractedData);
    } else {
      console.error(`Failed to load the page. Status: ${response ? response.status() : 'unknown'}`);
    }
  } catch (error) {
    console.error('Error:', error);
  } finally {
    await browser.close();
  }
})();
```

The request goes through and logs the extracted products like so:

```
[
{"title":"UliptzWireless Bluetooth Headphones, 65H Playtime, 6 EQ Sound Modes, HiFi Stereo Over Ear Headphones with Microphone, Foldable Lightweight Bluetooth 5.3 Headphones for Travel/Office/Cellphone/PC","asin":"B09NNBBY8F","avg_rating":"4.5 out of 5 stars","price":"$19.99","review_count":"5,839"},
{"title":"BERIBESBluetooth Headphones Over Ear, 65H Playtime and 6 EQ Music Modes Wireless Headphones with Microphone, HiFi Stereo Foldable Lightweight Headset, Deep Bass for Home Office Cellphone PC Ect.","asin":"B09LYF2ST7","avg_rating":"4.5 out of 5 stars","price":"$26.99","review_count":"19,070"},
//... other products omitted for brevity
]
```

Bravo! Your scraper can now access the target web page without a CAPTCHA.
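Since a stray line break or space inside the API URL is easy to miss, you could also build the query string programmatically. This is a sketch: `buildZenRowsUrl` is a hypothetical helper, and it only sets the parameters already used in the scraper above (`apikey`, `url`, `premium_proxy`, `autoparse`). `URLSearchParams` percent-encodes each value, so characters such as `?`, `&`, and `+` in the target URL survive intact:

```javascript
// Build a ZenRows API URL from an API key, a target URL, and extra parameters
function buildZenRowsUrl(apiKey, targetUrl, options = {}) {
  const params = new URLSearchParams({ apikey: apiKey, url: targetUrl });
  for (const [key, value] of Object.entries(options)) {
    params.set(key, String(value));
  }
  return `https://api.zenrows.com/v1/?${params.toString()}`;
}

const apiUrl = buildZenRowsUrl(
  '<YOUR_ZENROWS_API_KEY>',
  'https://www.amazon.com/s?hidden-keywords=bluetooth+headphones',
  { premium_proxy: true, autoparse: true },
);
console.log(apiUrl);
```

The returned string can go straight into `page.goto`.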

ZenRows also allows you to interact dynamically with web pages using JavaScript instructions. Thus, you can replace Puppeteer with ZenRows and leverage its complete anti-bot capabilities, including anti-CAPTCHA, AI anti-bot bypass, and more.

Conclusion

In this Puppeteer pagination tutorial, you learned about the different forms of pagination and how to scrape content from them using Puppeteer. You're now a Puppeteer pagination master.

Now, you know:

- How to apply the next page link method to scrape content from all pages on a paginated website.

- How to dynamically change the page number in the URL and scrape content from each page of a paginated website.

- How to use Puppeteer to scrape dynamically loaded content from infinite scroll.

- How to scrape dynamic content hidden behind a load more button.

Try more examples to reinforce what you've learned. Sadly, blocks are almost unavoidable while scraping multiple pages. Bypass them all with ZenRows, an all-in-one scraping solution with IP rotation, dynamic rendering, and anti-bot bypass features. Try ZenRows for free!
