Do you need help choosing between Scrapy and Selenium for web scraping? Both tools have their unique strengths and are suited to different types of web scraping tasks.

In this article, we'll review both tools side by side so you can decide which one is best for you.

Scrapy vs. Selenium: Which Is Best?

The choice between Selenium and Scrapy depends on your scraping goal. Here's a brief overview of what you need to know before making a choice:

Selenium is the right tool for headless browser automation. Go for Selenium if your goal is to scrape websites that load content with JavaScript or that require user interactions like clicks and form input.

Scrapy works best if you want an easier approach to large-scale web scraping. It makes it easy to organize, clean, and store data as you collect it. The only drawback is that it doesn't render JavaScript by default.

You can render JavaScript with Scrapy using scrapy-selenium, but it isn't the best idea: the package last received an update in 2020 and is outdated for Selenium 4+. A better choice is the scrapy-splash library.
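If you go the scrapy-splash route, the wiring lives in your project's `settings.py`. The fragment below follows the setup described in the scrapy-splash README and assumes a Splash server running locally on port 8050 (for example, started with `docker run -p 8050:8050 scrapinghub/splash`). Check the README for the version you install.

```python
# settings.py fragment: scrapy-splash wiring (assumes Splash on localhost:8050).
SPLASH_URL = "http://localhost:8050"

DOWNLOADER_MIDDLEWARES = {
    "scrapy_splash.SplashCookiesMiddleware": 723,
    "scrapy_splash.SplashMiddleware": 725,
    "scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware": 810,
}

SPIDER_MIDDLEWARES = {
    "scrapy_splash.SplashDeduplicateArgsMiddleware": 100,
}

# Splash-aware fingerprinting so requests that differ only in Splash args aren't dropped.
DUPEFILTER_CLASS = "scrapy_splash.SplashAwareDupeFilter"
HTTPCACHE_STORAGE = "scrapy_splash.SplashAwareFSCacheStorage"
```

With these settings in place, spiders yield `scrapy_splash.SplashRequest(url, self.parse, args={"wait": 0.5})` instead of `scrapy.Request` for pages that need rendering.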

Feature Comparison: Scrapy vs. Selenium

Let's compare the features and use cases of Selenium vs. Scrapy to decide which works best for you.

We'll start with a brief comparison table and then go into more detail.

Considerations

| Criteria | Scrapy | Selenium |
|---|---|---|
| Language | Python | Python, Ruby, Perl, C#, Java, JavaScript, PHP |
| Ease of use | Straightforward and easy to set up with a default code structure | Can be more complex and depends on the use case |
| JavaScript support | Requires integration with tools like Scrapy Splash or Selenium | Built-in |
| Built-in browser | None | Multi-browser-compatible |
| HTTP requests | Yes | Yes |
| Avoid getting blocked | Proxy middleware and header rotation | Proxy and header rotation, Undetected ChromeDriver, Selenium Stealth, headless mode; limited integration with web scraping APIs |
| Speed | Fast | Can be slow for advanced scraping |
| Memory usage | Memory efficient | Uses more memory |
| Community and documentation | Good | Good |
| Versatility | Primarily for web scraping | Automation testing and web scraping |
| Maintenance and upkeep | Actively maintained | Actively maintained |

Now let’s critically compare Selenium and Scrapy in detail.

Scrapy Is for Web Scraping, While Selenium Is Versatile

Scrapy is built for web scraping and nothing else. It's a framework that packs all the tools you need, including extensions for collecting, cleaning, and storing data.

Selenium is more versatile: it's primarily a web automation tool for application testing. However, its headless browser mode and its ability to interact with dynamic and static web elements and extract their text make it a valuable web scraping tool.

Scrapy Works Only with Python, While Selenium Supports More Languages

While Scrapy is more desirable for large-scale web scraping, it only works with Python. This might make it less suitable if you don't have a Python background or want more language flexibility.

Selenium wins here, as it's compatible with many programming languages, including Ruby, Perl, PHP, Python, C#, JavaScript, and Java, allowing you to pick it up for scraping regardless of your programming language.

Scrapy Offers Flexibility in Headless Browsing Libraries

Selenium's headless browsing is handy for scraping dynamic websites. Scrapy doesn't have built-in headless browser support, but you can integrate Selenium or other external libraries, such as the recommended scrapy-splash.

Selenium's Wide Browser Compatibility vs. Scrapy Splash's Single Browser

Selenium's cross-browser compatibility is more relevant to automation testing. However, it can be helpful in web scraping, particularly if you want to mimic different browsers while scraping.

Scrapy lacks multi-browser compatibility, and that’s OK because multi-browser functionality isn’t essential in web scraping. Besides, you can address differences in rendering across browsers by combining Scrapy with a headless browser library.

Scrapy Is Easier to Learn than Selenium

Both Scrapy and Selenium boast strong points in documentation, maintainability, and community support.

However, Scrapy has an easier learning curve thanks to its simple command-line setup, Pythonic nature, default code structure, and clear focus on web scraping and crawling. Selenium's versatility gives it a steeper learning curve, and its setup usually depends on the use case.

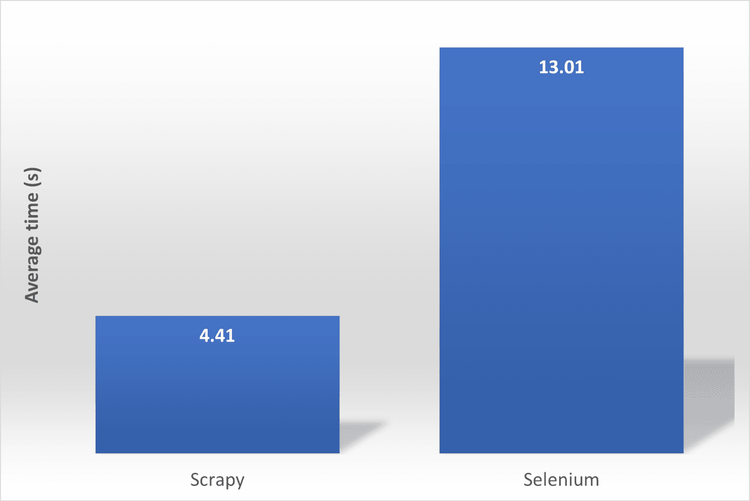

Scrapy Is Faster than Selenium

Speed is pivotal to web scraping since you want to quickly collect as much data as possible.

Scrapy is faster for static content scraping because it sends plain HTTP requests and avoids the overhead of running a browser instance, as Selenium does. Surprisingly, Scrapy also collects data faster than Selenium when combined with Scrapy Splash for dynamic content.

We ran a 100-iteration speed benchmark on Selenium vs. Scrapy + Scrapy Splash for collecting dynamic content. Scrapy took an average of 4.41 seconds and Selenium an average of 13.01 seconds to obtain the same content, making Scrapy roughly three times faster.
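The benchmark script itself isn't shown here, but a timing loop of the same shape might look like the hypothetical sketch below. `benchmark` takes any zero-argument function, such as one that runs a single Selenium or Scrapy + Splash scrape, and averages its wall-clock time with `time.perf_counter`.

```python
import statistics
import time
from typing import Callable


def benchmark(task: Callable[[], object], iterations: int = 100) -> float:
    """Run `task` repeatedly and return the mean wall-clock time in seconds."""
    durations = []
    for _ in range(iterations):
        start = time.perf_counter()
        task()
        durations.append(time.perf_counter() - start)
    return statistics.mean(durations)


if __name__ == "__main__":
    # Ratio of the two averages reported above (13.01 s vs. 4.41 s).
    print(f"Scrapy + Splash was {13.01 / 4.41:.2f}x faster on average")  # → 2.95x
```

Timing each run separately, rather than the whole loop, also lets you inspect the spread with `statistics.stdev` if a few slow page loads skew the mean.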

Here's a graphical presentation of the results, from fastest to slowest (times in seconds):

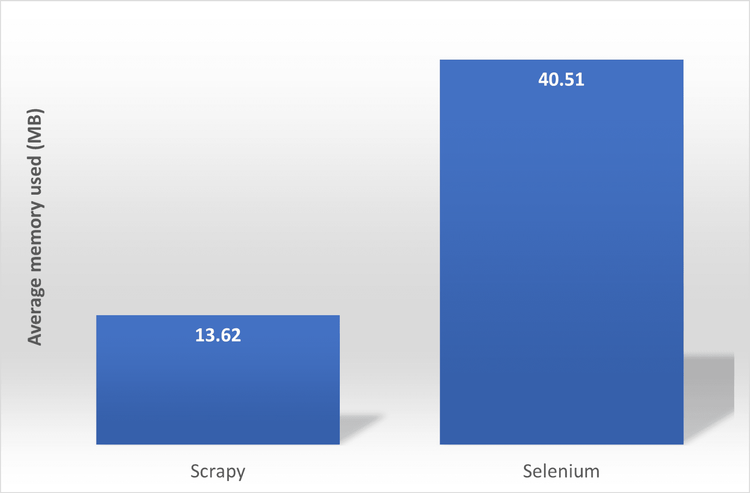

Selenium Consumes More Memory than Scrapy

Although memory usage varies with project complexity and machine specifications, Scrapy outperforms Selenium in both large- and small-scale scraping.

We also ran a 100-iteration memory consumption benchmark on Selenium vs. Scrapy for dynamic content collection. Scrapy used an average of only 13.62 MB, while Selenium and its browser instance consumed an average of 40.51 MB.

See the final result in the graph below, from lowest to highest (memory in megabytes, MB):

Scrapy's small memory footprint gives it a lead over Selenium, whose total usage includes a browser instance running in a separate process.

Scrapy's Superior Crawling Capabilities

Selenium can be used for light crawling, especially if the target website is JavaScript-rendered. However, Scrapy is the better choice for anything from simple to complex crawling, thanks to its efficient multi-page crawling.

Although Scrapy is efficient at web crawling, it needs the Scrapy Splash plugin to crawl dynamic web pages.

Best Choice to Avoid Getting Blocked While Scraping

Let's face it: getting blocked while scraping can be a nightmare, since it prevents you from accessing the web page that holds the data you need. Luckily, both Selenium and Scrapy have block-evading mechanisms.

Selenium can use tools like Undetected ChromeDriver and Selenium Stealth to bypass basic anti-bot detection, and it also supports services like ZenRows for more efficient proxy and header rotation.

However, Scrapy fully integrates with tools like ZenRows, which provides rotating premium proxies, user agent and header rotation, JavaScript support, and an advanced anti-bot bypassing toolkit.

Overall, Scrapy’s extensibility with various tools, including Scraping APIs like ZenRows, gives it an edge over Selenium.
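On the Scrapy side, rotation fits naturally into a downloader middleware. Here's a minimal sketch, assuming hypothetical proxy and User-Agent pools. It relies on Scrapy's built-in `HttpProxyMiddleware`, which routes a request through whatever URL is in `request.meta["proxy"]`.

```python
import random


class RandomProxyUserAgentMiddleware:
    """Hypothetical downloader middleware: assigns a random proxy and User-Agent per request."""

    # Hypothetical pools: replace with your own values or a proxy service endpoint.
    PROXIES = ["http://203.0.113.10:8080", "http://203.0.113.11:8080"]
    USER_AGENTS = [
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36",
        "Mozilla/5.0 (X11; Linux x86_64; rv:125.0) Gecko/20100101 Firefox/125.0",
    ]

    def process_request(self, request, spider):
        # HttpProxyMiddleware later reads this key and routes the request through it.
        request.meta["proxy"] = random.choice(self.PROXIES)
        request.headers["User-Agent"] = random.choice(self.USER_AGENTS)
        return None  # continue through the remaining middlewares and download
```

Register it with a lower priority number than the defaults, for example `DOWNLOADER_MIDDLEWARES = {"myproject.middlewares.RandomProxyUserAgentMiddleware": 350}` (`myproject` is a placeholder), so it runs before `UserAgentMiddleware` (500) and `HttpProxyMiddleware` (750). The built-in User-Agent middleware only sets the header when it's missing, so the rotated value is kept.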

Start your ZenRows free trial today and overcome sophisticated anti-scraping measures.

Scrapy Review

Scrapy is a dedicated Python web scraping framework that can be extended to support JavaScript. It's an ideal choice for data extraction, though it has its pros and cons.

👍 Pros of Scrapy:

- Easier to learn and set up.

- More structured codebase architecture.

- Well-documented.

- Active community.

- Consistently maintained.

- Faster crawling and scraping.

- Memory efficient.

- Suitable for large-scale web scraping.

- Extensible for JavaScript support.

- It can work with Selenium and other libraries like Splash.

- Easily integrates with anti-bot solvers.

- Built-in HTTP proxy middleware is available.

- Item pipelines for organizing and storing collected data.

👎 Cons of Scrapy:

- Requires a third-party plugin for dynamic content scraping.

- Limited to Python.

- No support for web automation.

- Headless browser not available.

👨💻 Best Use Cases for Scrapy:

- Simple to complex web data collection.

- Web crawling.

- Data mining, cleaning, and storage.

Selenium Review

Selenium is an automation testing tool with web scraping abilities and support for more programming languages. Selenium web scraping has gained ground because of its headless browser functionality and capability to deal with JavaScript.

👍 Pros of Selenium:

- Comprehensively documented.

- Versatile.

- Headless browser support.

- Web automation functionality to mimic users' behavior.

- Cross-browser and device compatibility.

- Active community.

- Consistently maintained.

- Built-in JavaScript support.

- Easy integration with proxies.

- Extensible with a rich set of libraries and APIs.

- It can be used along with other scraping tools.

👎 Cons of Selenium:

- The learning curve is steeper for beginners.

- Slower and more memory-demanding.

- Not suitable for large-scale web scraping.

- There is no built-in way to organize and structure data.

- Initial setup can be technical and project-dependent.

👨💻 Best Use Cases for Selenium:

- Cross-browser and cross-platform test automation.

- Performance and integration testing.

- Web scraping of dynamic content.

- General web automation.

- Automated form filling.

- Web application monitoring.

Conclusion

Your project's complexity should guide your choice between Scrapy and Selenium. Choose Scrapy for its streamlined, Python-centric web scraping and crawling efficiency. Go with Selenium if you want language flexibility with built-in JavaScript support.

Regardless of your final choice, it's crucial to avoid getting blocked. Integrate ZenRows with Selenium or Scrapy for free today.
