Stopped by the Cheerio 403 error again? Here’s some good news: after reading this article, you’ll learn how to avoid it for future web scraping or parsing efforts.

Scrapers that use Cheerio, a Node.js parsing library, are prone to restrictions and blocks from websites' anti-bot systems. In this article, you'll learn five techniques for overcoming them:

- Get a proxy.

- Use a web scraping API.

- Configure a correct User-Agent.

- Adapt your request frequency.

- Try a headless browser.

Let’s go!

What Is the Cheerio 403 Error?

The Cheerio 403 error is directly linked to the HTTP status code 403: Forbidden, which is common in web scraping. This status code means the server understood your request but refuses to fulfill it. When scraping, that usually happens because the website's anti-bot system flagged your request as automated rather than as natural browsing behavior.

When you try to scrape a protected website such as https://www.g2.com/products/asana/reviews with Axios and Cheerio, you may get an error like the one below.

```
AxiosError: Request failed with status code 403
```

It's important to note that Cheerio is primarily a parsing library and doesn't make HTTP requests itself. Instead, it relies on Node.js HTTP clients, Axios being the most popular, to fetch the web pages it parses. That's why the Cheerio 403 error actually comes from the HTTP client's request: the server rejects it before Cheerio receives any HTML to parse.
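Since the 403 is raised by the HTTP client, you can detect it before Cheerio ever runs. By default, Axios rejects non-2xx responses with an error whose response.status field holds the HTTP status code. The sketch below uses two hypothetical helpers, isForbidden() and describeRequestError(), that check for that shape. To keep it runnable without network access, it's exercised with plain objects that mimic Axios errors rather than real requests.

```javascript
// Hypothetical helper: returns true when an Axios-style error carries a 403 status.
// Axios attaches the server's response (including `status`) to `error.response`;
// network failures such as timeouts have no `response`, so they return false.
function isForbidden(error) {
  return Boolean(error && error.response && error.response.status === 403);
}

// Hypothetical helper: builds a short, readable message for logging.
function describeRequestError(error) {
  if (isForbidden(error)) {
    return '403 Forbidden: the server refused the request (likely an anti-bot block)';
  }
  if (error && error.response) {
    return `HTTP ${error.response.status}: request failed`;
  }
  return 'No response received (network error or timeout)';
}

// Mock objects shaped like Axios errors, so the example runs offline.
const blocked = { response: { status: 403 } };
const notFound = { response: { status: 404 } };
const timedOut = { code: 'ECONNABORTED' };

console.log(describeRequestError(blocked));
console.log(describeRequestError(notFound));
console.log(describeRequestError(timedOut));
```

In a real scraper, you'd call describeRequestError(error) inside the .catch() of an axios.get() call and only pass response.data to cheerio.load() when the request succeeds.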

Thankfully, there are ways to resolve the error.

How to Fix 403 Forbidden in Cheerio

Let’s go through five ways of solving the 403 Forbidden error while web scraping with Cheerio.

1. Get a Proxy

Proxies act as a bridge between your scraping script and the target website, hiding your IP address by routing your requests through a different server. This helps you avoid IP-based restrictions such as rate limits and IP bans, which often show up as the Cheerio 403 error.

To implement a single proxy with Axios and Cheerio, pass your proxy settings as part of the request configuration and then use Cheerio to parse the HTML obtained through the proxy.

The code snippet below shows how to configure an Axios proxy. It uses HTTPBin, a test website that returns the client's public IP address, as the target page and a free proxy from Free Proxy List.

```javascript
// import required libraries
const axios = require('axios');
const cheerio = require('cheerio');

// make an HTTP GET request to the target URL with proxy settings
axios.get('https://httpbin.org/ip', {
  proxy: {
    protocol: 'http',
    host: '159.65.221.25',
    port: 80,
  },
})
  .then((response) => {
    // log the response data
    console.log(response.data);

    // HTTPBin returns a JSON response, which Cheerio cannot parse.
    // if your target website returns HTML, you can parse it using Cheerio as follows:
    // const $ = cheerio.load(response.data);
    // const textContent = $('body').text();
    // console.log(textContent);
  })
  .catch((error) => {
    console.error('Error:', error);
  });
```

Free proxies are unreliable due to frequent downtime and a high risk of being blocked. Additionally, websites can detect and block requests from specific IP addresses, so rotating between multiple proxies is essential to avoid IP bans. For anything beyond learning, you need premium web scraping proxies, which offer more reliable connections.
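Rotating proxies can be as simple as cycling through a list and passing each entry to Axios' proxy option. The sketch below assumes your provider gives you proxy URLs in the http://user:pass@host:port form; the addresses, credentials, and the toAxiosProxy() and createProxyRotator() helpers are hypothetical placeholders. It converts each URL into the { protocol, host, port, auth } object Axios expects and hands them out in round-robin order.

```javascript
// Hypothetical proxy URLs; replace them with the ones from your proxy provider.
const PROXY_URLS = [
  'http://user:pass@203.0.113.10:8080',
  'http://user:pass@203.0.113.11:8080',
  'http://203.0.113.12:3128', // a proxy without authentication
];

// Convert a proxy URL string into the shape of Axios' `proxy` request option.
function toAxiosProxy(proxyUrl) {
  const parsed = new URL(proxyUrl);
  const protocol = parsed.protocol.replace(':', ''); // 'http:' -> 'http'
  const proxy = {
    protocol,
    host: parsed.hostname,
    // URL drops default ports (e.g. :80), so fall back to the protocol's default.
    port: parsed.port ? Number(parsed.port) : (protocol === 'https' ? 443 : 80),
  };
  // Only add credentials when the URL contains them.
  if (parsed.username) {
    proxy.auth = {
      username: decodeURIComponent(parsed.username),
      password: decodeURIComponent(parsed.password),
    };
  }
  return proxy;
}

// Hypothetical helper: returns a function that yields the next proxy, round-robin.
function createProxyRotator(proxyUrls) {
  const proxies = proxyUrls.map(toAxiosProxy);
  let index = 0;
  return () => {
    const proxy = proxies[index];
    index = (index + 1) % proxies.length;
    return proxy;
  };
}

const nextProxy = createProxyRotator(PROXY_URLS);

// Each call returns the next proxy config, e.g. axios.get(url, { proxy: nextProxy() })
for (let i = 0; i < 4; i++) {
  console.log(nextProxy());
}
```

Round-robin spreads requests evenly across your pool; a random pick works too, but round-robin makes it easier to notice when one proxy keeps failing.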

2. Use a Web Scraping API

The most effective way to bypass the Cheerio 403 error is to use a web scraping API, such as ZenRows. It automates bypassing anti-bot measures so you can focus only on data extraction.

With a single API call, you can leverage all of ZenRows' features, including headless browser functionality, premium proxy rotation, optimized headers, CAPTCHA bypass, geolocation, etc. The tool works with Axios and any other HTTP client.
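Under the hood, such an API call is a regular GET request: the target URL and the features you want are sent as query parameters. As a sketch, the snippet below builds that request URL with Node's built-in URL class, reusing the endpoint and parameter names (url, apikey, js_render, premium_proxy) from the Axios example in this section. The API key is a placeholder, and buildZenRowsUrl() is a hypothetical helper, not part of any SDK.

```javascript
// Hypothetical helper: builds a ZenRows API request URL for a target page.
// Endpoint and parameter names match the Axios example in this article;
// the API key below is a placeholder.
function buildZenRowsUrl(targetUrl, apiKey, options = {}) {
  const requestUrl = new URL('https://api.zenrows.com/v1/');
  // searchParams percent-encodes the target URL for you
  requestUrl.searchParams.set('url', targetUrl);
  requestUrl.searchParams.set('apikey', apiKey);
  // add each optional feature flag as a query parameter, e.g. js_render=true
  for (const [name, value] of Object.entries(options)) {
    requestUrl.searchParams.set(name, String(value));
  }
  return requestUrl.toString();
}

const apiUrl = buildZenRowsUrl(
  'https://www.g2.com/products/asana/reviews',
  '<YOUR_ZENROWS_API_KEY>',
  { js_render: true, premium_proxy: true },
);

console.log(apiUrl);
```

You could then fetch apiUrl with any HTTP client, for example axios.get(apiUrl), and pass the returned HTML to cheerio.load().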

Let’s see how to use ZenRows to bypass a Cloudflare-protected web page.

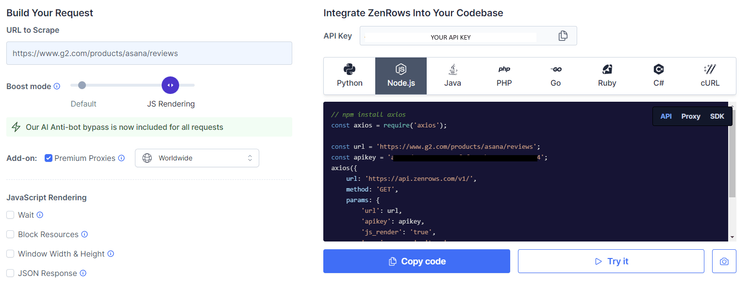

Sign up, and you'll be redirected to the Request Builder page.

Input the target URL (in this case, https://www.g2.com/products/asana/reviews), activate JavaScript Rendering, and check the box for Premium Proxies.

That'll generate your request code. Copy it and run it with your preferred HTTP client (Axios in this example).

Your code should look like this:

```javascript
// npm install axios
const axios = require('axios');

const url = 'https://www.g2.com/products/asana/reviews';

axios({
  url: 'https://api.zenrows.com/v1/',
  method: 'GET',
  params: {
    'url': url,
    'apikey': '<YOUR_ZENROWS_API_KEY>',
    'js_render': 'true',
    'premium_proxy': 'true',
  },
})
  .then((response) => console.log(response.data))
  .catch((error) => console.log(error));
```

Run it, and you'll get the HTML of the page.

```html
<!-- ... -->
<title>
  Asana Reviews 2023: Details, Pricing, & Features | G2
</title>
<!-- ... -->
```

That's how easy it is to bypass the Cheerio 403 error with ZenRows. Awesome, right?

3. Configure a Correct User-Agent

The User-Agent string identifies the client making the requests. Websites often use this information to tailor their responses accordingly.

By default, Axios sends a User-Agent like axios/<version>, which identifies you as a non-browser client and will most likely get you the Cheerio 403 error. Many websites inspect the User-Agent string to distinguish human visitors from automated requests, so replacing it with a regular browser's UA increases your chances of flying under the radar.

To customize your user agent in Axios, set the new UA in the User-Agent header before making the request. The example below shows how to change Axios' UA to that of a Firefox browser.

```javascript
// import the required library
const axios = require('axios');

// set custom user agent header
const headers = {
  'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10.15; rv:109.0) Gecko/20100101 Firefox/109.0',
};

// make GET request; HTTPBin echoes back the User-Agent it received
axios.get('https://httpbin.org/user-agent', {
  headers,
})
  .then(({ data }) => console.log(data))
  .catch((error) => console.error('Error:', error.message));
```

Additionally, make sure the User-Agent you set is correct and up to date, since a malformed or inconsistent UA can itself trigger anti-bot systems. You can get proper UAs from this guide on setting Axios user agents.
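If you send many requests, rotating among a few well-formed User-Agent strings and sending other headers a real browser would send can make your traffic look less uniform. Below is a minimal sketch of that idea. The UA strings are examples that will go out of date, the Accept and Accept-Language values are typical choices rather than an exact copy of any browser, and buildHeaders() is a hypothetical helper.

```javascript
// Example User-Agent strings (these go out of date; refresh them periodically).
const USER_AGENTS = [
  'Mozilla/5.0 (Macintosh; Intel Mac OS X 10.15; rv:109.0) Gecko/20100101 Firefox/109.0',
  'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36',
  'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/17.1 Safari/605.1.15',
];

// Hypothetical helper: picks a random User-Agent and pairs it with typical browser headers.
// `random` defaults to Math.random but can be replaced with a fixed function for testing.
function buildHeaders(random = Math.random) {
  const userAgent = USER_AGENTS[Math.floor(random() * USER_AGENTS.length)];
  return {
    'User-Agent': userAgent,
    Accept: 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
    'Accept-Language': 'en-US,en;q=0.9',
  };
}

// Pass the result to Axios, e.g. axios.get(url, { headers: buildHeaders() })
console.log(buildHeaders());
```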

4. Adapt Your Request Frequency

Websites often limit the number of requests a client can make within a certain time frame to prevent abuse or server overload. Exceeding these limits can result in 403 or 429 (Too Many Requests) responses and even IP bans, so you must adapt your request frequency.

There’s an easy way to do this: introduce delays between successive requests. Pausing between requests simulates natural browsing behavior and helps you avoid detection.
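Fixed pauses are easy to spot because they're perfectly regular. A common refinement, not part of the original example, is to add random jitter so each pause is different. The sketch below shows hypothetical sleep() and randomDelay() helpers; the 2 to 5 second range is an arbitrary assumption you should tune per website.

```javascript
// Hypothetical helper: returns a Promise that resolves after `ms` milliseconds.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Hypothetical helper: returns a random whole number of milliseconds in [minMs, maxMs].
function randomDelay(minMs, maxMs) {
  return minMs + Math.floor(Math.random() * (maxMs - minMs + 1));
}

// Print a few sample pauses instead of actually waiting.
// In a scraping loop, you'd use: await sleep(randomDelay(2000, 5000));
for (let i = 0; i < 3; i++) {
  console.log(`next pause: ${randomDelay(2000, 5000)} ms`);
}
```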

The code snippet below shows how to introduce delays in a web scraping script with Axios and Cheerio:

```javascript
// import required libraries
const axios = require('axios');
const cheerio = require('cheerio');

// define the URLs to scrape
const urls = ['https://example.com/1', 'https://example.com/2', 'https://example.com/3']; // replace with actual URLs

const scraper = async (urls) => {
  // loop through each URL
  for (let i = 0; i < urls.length; i++) {
    try {
      // make an HTTP GET request to the current URL
      const response = await axios.get(urls[i]);

      // load HTML content into Cheerio
      const $ = cheerio.load(response.data);

      // perform parsing operations
      // example:
      console.log(`Response for URL ${i + 1}:`, $('body').text());
    } catch (error) {
      // log the failure and continue with the next URL
      console.error(`Error for URL ${i + 1}:`, error.message);
    }

    // wait for 5 seconds before making the next request
    if (i < urls.length - 1) {
      await new Promise((resolve) => setTimeout(resolve, 5000));
    }
  }
};

scraper(urls);
```
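If a request still comes back with a 403 or 429, backing off before retrying is gentler than retrying immediately. The sketch below wraps any request function in a hypothetical withBackoff() helper that doubles the wait after each blocked attempt. The status codes treated as retryable, the retry count, and the base delay are all assumptions, and the demo uses a fake request function instead of a real Axios call. Keep in mind that backing off only helps with rate limits; it won't get you past a hard anti-bot block on its own.

```javascript
// Hypothetical helper: returns a Promise that resolves after `ms` milliseconds.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Status codes this sketch treats as "slow down and retry" (an assumption).
const RETRYABLE_STATUSES = new Set([403, 429]);

// Hypothetical helper: calls `requestFn`, retrying with exponential backoff
// (baseDelayMs, then 2x, 4x, ...) when it fails with a retryable Axios-style error.
async function withBackoff(requestFn, { retries = 3, baseDelayMs = 1000 } = {}) {
  for (let attempt = 0; ; attempt++) {
    try {
      return await requestFn();
    } catch (error) {
      const status = error && error.response && error.response.status;
      // give up on non-retryable errors or once all retries are used
      if (!RETRYABLE_STATUSES.has(status) || attempt >= retries) {
        throw error;
      }
      const delay = baseDelayMs * 2 ** attempt;
      console.log(`got ${status}, retrying in ${delay} ms`);
      await sleep(delay);
    }
  }
}

// Fake request function: fails twice with 429, then succeeds.
let calls = 0;
const fakeRequest = async () => {
  calls++;
  if (calls <= 2) {
    const error = new Error('Request failed with status code 429');
    error.response = { status: 429 };
    throw error;
  }
  return { data: '<html><body>ok</body></html>' };
};

// small base delay so the demo finishes quickly; use larger values for real sites
const result = withBackoff(fakeRequest, { retries: 3, baseDelayMs: 10 });
result.then((response) => console.log('succeeded after', calls, 'calls:', response.data));
```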

5. Try a Headless Browser

Headless browsers, controlled through libraries like Puppeteer, are valuable web scraping tools, especially when dealing with JavaScript-heavy websites. Unlike HTTP clients such as Axios, Puppeteer renders web pages like a real browser and executes their JavaScript. That, combined with its ability to simulate user behavior, lets it solve some of the JavaScript challenges imposed by anti-bot systems like Cloudflare.

Let’s learn how to integrate Puppeteer with Cheerio.

To get started, install Puppeteer using the following command.

```shell
npm install puppeteer
```

Then, import the required libraries, launch a Puppeteer instance, open a new page, and navigate to the target website (https://scrapingclub.com/exercise/list_infinite_scroll/).

```javascript
// import the required libraries
const puppeteer = require('puppeteer');
const cheerio = require('cheerio');

(async () => {
  // launch Puppeteer instance and open new page
  const browser = await puppeteer.launch();
  const page = await browser.newPage();

  try {
    // navigate to target web page
    await page.goto('https://scrapingclub.com/exercise/list_infinite_scroll/');
  } catch (error) {
    console.error('Error:', error);
  } finally {
    // always close the browser, even if something fails
    await browser.close();
  }
})();
```

The target web page loads more items as you scroll down, so you must reach the bottom of the page to access all the content. For now, call an autoScroll() function, which you'll define later.

Next, retrieve the HTML content. Parse it using Cheerio, extract the desired data, and close the async function. This code example extracts each item's title and price:

```javascript
(async () => {
  // ...

  try {
    // ...

    // scroll to the bottom of the page
    await autoScroll(page);

    // retrieve HTML content
    const htmlContent = await page.content();

    // parse HTML using Cheerio
    const $ = cheerio.load(htmlContent);

    // extract desired data using Cheerio selectors
    $('.post').each((index, element) => {
      const title = $(element).find('h4').text().trim();
      const price = $(element).find('h5').text().trim();
      console.log(`item ${index + 1}: ${title}, ${price}`);
    });
  } catch (error) {
    console.error('Error:', error);
  } finally {
    await browser.close();
  }
})();
```

Now, let's define the autoScroll() function.

```javascript
// function to automatically scroll to the bottom of the page
async function autoScroll(page) {
  await page.evaluate(async () => {
    // use Promise to handle asynchronous scrolling
    await new Promise((resolve, reject) => {
      let totalHeight = 0; // initialize total height scrolled
      const distance = 100; // set distance to scroll each time

      // set interval to continuously scroll the page
      const scrollInterval = setInterval(() => {
        // calculate the total scroll height of the page
        const scrollHeight = document.body.scrollHeight;

        // scroll the page by the specified distance
        window.scrollBy(0, distance);

        // update the total height scrolled
        totalHeight += distance;

        // check if the total height scrolled is equal to or exceeds the scroll height of the page
        if (totalHeight >= scrollHeight) {
          // if so, clear the interval to stop scrolling
          clearInterval(scrollInterval);

          // resolve the Promise to indicate scrolling is complete
          resolve();
        }
      }, 100); // set interval duration
    });
  });
}
```

This function scrolls to the bottom of the page by repeatedly moving down in small increments (distance) until it reaches the bottom. The function uses setInterval to continuously scroll the page and stops when the total scrolled height equals or exceeds the full height of the page. It returns a Promise that resolves when scrolling is complete.

Finally, combine everything to get the following complete code:

```javascript
// import the required libraries
const puppeteer = require('puppeteer');
const cheerio = require('cheerio');

(async () => {
  // launch Puppeteer instance and open new page
  const browser = await puppeteer.launch();
  const page = await browser.newPage();

  try {
    // navigate to target web page
    await page.goto('https://scrapingclub.com/exercise/list_infinite_scroll/');

    // scroll to the bottom of the page
    await autoScroll(page);

    // retrieve HTML content
    const htmlContent = await page.content();

    // parse HTML using Cheerio
    const $ = cheerio.load(htmlContent);

    // extract desired data using Cheerio selectors
    $('.post').each((index, element) => {
      const title = $(element).find('h4').text().trim();
      const price = $(element).find('h5').text().trim();
      console.log(`item ${index + 1}: ${title}, ${price}`);
    });
  } catch (error) {
    console.error('Error:', error);
  } finally {
    await browser.close();
  }
})();

// function to automatically scroll to the bottom of the page
async function autoScroll(page) {
  await page.evaluate(async () => {
    // use Promise to handle asynchronous scrolling
    await new Promise((resolve, reject) => {
      let totalHeight = 0; // initialize total height scrolled
      const distance = 100; // set distance to scroll each time

      // set interval to continuously scroll the page
      const scrollInterval = setInterval(() => {
        // calculate the total scroll height of the page
        const scrollHeight = document.body.scrollHeight;

        // scroll the page by the specified distance
        window.scrollBy(0, distance);

        // update the total height scrolled
        totalHeight += distance;

        // check if the total height scrolled is equal to or exceeds the scroll height of the page
        if (totalHeight >= scrollHeight) {
          // if so, clear the interval to stop scrolling
          clearInterval(scrollInterval);

          // resolve the Promise to indicate scrolling is complete
          resolve();
        }
      }, 100); // set interval duration
    });
  });
}
```

You should have each product's title and price as your result.

```
item 1: Short Dress, $24.99
item 2: Patterned Slacks, $29.99
item 3: Short Chiffon Dress, $49.99
...
item 58: T-shirt, $6.99
item 59: T-shirt, $6.99
item 60: Blazer, $49.99
```

Conclusion

The 403 error is common when web scraping with Axios (or other HTTP clients) and Cheerio. Techniques such as premium proxies, custom user agents, optimized request frequency, and headless browsers can help you avoid the blocks, but they may prove ineffective against a strictly protected website. The only surefire way to avoid all blocks and errors is a web scraping API such as ZenRows.

Sign up now to try ZenRows for free.
