Are you finding it increasingly challenging to extract data from modern websites? You're not alone. Websites are becoming more complex, employing dynamic content, user-driven interactivity, and sophisticated defense mechanisms.

This article is your roadmap to mastering advanced web scraping techniques in Python, ensuring you can efficiently extract data, regardless of the website's complexity.

Ready? Let's dive in.

Tactic #1: Dynamic Web Pages and Content: JS Rendering

Dynamic web pages load content asynchronously, updating elements in real time without requiring a full page reload. This dynamism challenges web scrapers, as the content may not be present in the initial HTML source.

Using JavaScript, the page can send requests to a server and receive data in the background while you continue to interact with its visible elements, then update specific parts of the page based on your actions.

For example, some dynamic web pages keep adding new content as you scroll (infinite scrolling).

What's the Difference Between Dynamic Content and Dynamic Pages?

Dynamic content refers to specific elements or sections within an otherwise static page that receive targeted updates, such as pop-ups. Dynamic pages, on the other hand, take an overarching approach in which the entire page is rendered dynamically.

Knowing whether a webpage mainly uses dynamic content or renders the whole page dynamically is crucial because it determines the most efficient scraping strategy. For example, scraping dynamic content calls for strategies like monitoring AJAX requests and API calls, whereas a dynamic page requires rendering the webpage the way a regular browser does.

How to Know if It's Dynamic?

Whether a page is dynamic may not be obvious at first glance. However, a key indicator is content or page elements that change after the initial page load. For example, loading bars and infinite scrolling are signs that the page is dynamic.

For a more practical test, disable JavaScript in your browser and reload the webpage. A static page still displays its full content, whereas a dynamic page will be missing content or page elements.
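The JavaScript-off test can also be approximated in code: download the raw HTML without running any scripts and check whether text you can see in the browser is actually in it. The sketch below uses only Python's standard library; `fetch_raw_html` and `looks_dynamic` are illustrative helper names, and the check is a heuristic rather than a definitive test.

```python
import urllib.request


def fetch_raw_html(url: str, timeout: float = 10.0) -> str:
    """Download the initial HTML only; no JavaScript is executed."""
    request = urllib.request.Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def looks_dynamic(raw_html: str, visible_text: str) -> bool:
    """Heuristic: text visible in the browser but absent from the raw HTML
    was most likely injected by JavaScript after the initial load."""
    return visible_text.lower() not in raw_html.lower()
```

For instance, if `looks_dynamic(fetch_raw_html(url), "a product name you can see")` returns True, the content is probably rendered client-side and needs one of the techniques below.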

How to Scrape Dynamic Pages?

Traditional methods fail when scraping dynamic content, as the data is often absent from the page's static HTML. And since websites implement dynamic content in different ways, there are a few advanced Python web scraping options, depending on the website's configuration.

We can classify these approaches into two primary techniques:

- Rendering JavaScript before retrieving the HTML content. This typically involves using a browser automation tool like Selenium to drive a headless browser, which renders web content like an actual browser. Here's a step-by-step tutorial on how to scrape dynamic pages in Python using Selenium.

- Making direct requests to AJAX or API endpoints. Sometimes, websites populate the page using backend APIs or AJAX requests. You can locate these backend queries and replicate them in your scraper. Here's a guide on replicating AJAX requests and API calls in Python.
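Here's a minimal sketch of both techniques, assuming Selenium 4 with Chrome for the first, and for the second a JSON endpoint you've spotted in your browser's DevTools Network tab (filtered by Fetch/XHR). The function names, the CSS selector you wait for, and any endpoint or parameters you pass in are hypothetical placeholders, not part of any real site's API.

```python
import json
import urllib.parse
import urllib.request


def render_with_selenium(url: str, wait_selector: str, timeout: int = 10) -> str:
    """Technique 1: let headless Chrome execute the JavaScript, then read the DOM."""
    # Imported lazily so the rest of this module works without Selenium installed.
    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    options = Options()
    options.add_argument("--headless=new")
    driver = webdriver.Chrome(options=options)
    try:
        driver.get(url)
        # Wait for the JavaScript-rendered element instead of sleeping blindly.
        WebDriverWait(driver, timeout).until(
            EC.presence_of_element_located((By.CSS_SELECTOR, wait_selector))
        )
        return driver.page_source
    finally:
        driver.quit()


def build_api_url(endpoint: str, params: dict) -> str:
    """Technique 2: rebuild the query string of an XHR/fetch call seen in DevTools."""
    return f"{endpoint}?{urllib.parse.urlencode(params)}"


def fetch_api_json(endpoint: str, params: dict, timeout: float = 10.0):
    """Call the backend endpoint directly and parse its JSON response."""
    request = urllib.request.Request(
        build_api_url(endpoint, params),
        headers={"Accept": "application/json", "User-Agent": "Mozilla/5.0"},
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)
```

When a usable endpoint exists, the second approach is usually much lighter, since it skips the browser entirely and returns structured data.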

While the techniques above can help you scrape a dynamic website, they're hard to scale. For example, identifying AJAX requests or backend API calls can get tedious and time-consuming, and headless browsers add resource overhead, especially in complex or large-scale scraping tasks.

The best way to scrape such websites is to use a web scraping API like ZenRows. It renders JavaScript like a headless browser without the infrastructure headaches, offering considerable savings in machine costs.

Tactic #2: Authentication Required Before Scraping

Many platforms, especially those hosting user data, implement authentication to regulate access. You must successfully navigate the authentication process to extract data from such websites.

Are Some Sites Harder to Log in than Others?

Some sites use straightforward authentication, relying on standard username and password inputs. Others add extra hurdles, the most common being a CSRF (Cross-Site Request Forgery) token embedded in the login form, and some even require multi-factor authentication. These make logging in harder.

Additionally, websites protected by anti-bot systems can easily rebuff automated login attempts. Luckily, there are workarounds, which we'll discuss next.

How to Login for Scraping?

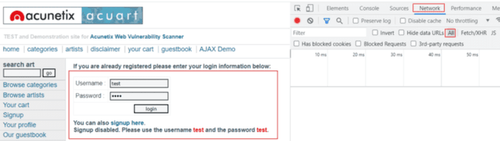

For basic websites, you only need to identify the login request, mimic it in your scraper with a POST request, and send it through a session so the authentication cookies persist across requests. That lets you access the data behind the login page.
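As a minimal sketch of that flow, the code below uses the standard library's cookie jar as the session (with the third-party requests library, `requests.Session` plays the same role). The URLs and the `username`/`password` field names are hypothetical; copy the real ones from the login request you see in DevTools.

```python
import http.cookiejar
import urllib.parse
import urllib.request


def make_session() -> tuple[urllib.request.OpenerDirector, http.cookiejar.CookieJar]:
    """Cookie-aware opener: cookies set by the login response are sent on later requests."""
    jar = http.cookiejar.CookieJar()
    opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(jar))
    return opener, jar


def build_login_request(login_url: str, credentials: dict) -> urllib.request.Request:
    """Mimic the login form's POST (field names are site-specific assumptions)."""
    body = urllib.parse.urlencode(credentials).encode("utf-8")
    return urllib.request.Request(
        login_url,
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


def login_and_fetch(login_url: str, protected_url: str, credentials: dict) -> str:
    """Log in once, then reuse the same opener (and cookies) for the protected page."""
    opener, _jar = make_session()
    with opener.open(build_login_request(login_url, credentials), timeout=10):
        pass  # the session cookie is now stored in the jar
    with opener.open(protected_url, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")
```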

However, more complex websites require advanced Python web scraping tactics: you may need to send additional payload fields (such as a CSRF token) and headers alongside your login credentials. Either way, your first step is to inspect the login page to identify the scenario you're dealing with.
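For the CSRF case, a common pattern is to GET the login page with the same session, read the hidden form fields, and send them back unchanged alongside your credentials, as a browser would. This sketch parses hidden inputs with the standard library's `HTMLParser`; the credential field names are assumptions, since every site names them differently.

```python
from html.parser import HTMLParser


class HiddenInputParser(HTMLParser):
    """Collect name/value pairs of <input type="hidden"> fields in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.hidden_fields: dict[str, str] = {}

    def handle_starttag(self, tag, attrs):
        if tag != "input":
            return
        attributes = dict(attrs)
        if (attributes.get("type") or "").lower() == "hidden" and attributes.get("name"):
            self.hidden_fields[attributes["name"]] = attributes.get("value") or ""


def build_login_payload(login_page_html: str, username: str, password: str) -> dict:
    """Merge the form's hidden fields (e.g. a CSRF token) with your credentials."""
    parser = HiddenInputParser()
    parser.feed(login_page_html)
    payload = dict(parser.hidden_fields)
    # "username" and "password" are placeholder field names; use the form's real ones.
    payload.update({"username": username, "password": password})
    return payload
```

The token is usually tied to a session cookie, so fetch the login page and submit the form with the same session.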

Here's a step-by-step tutorial on how to scrape websites that require a login.

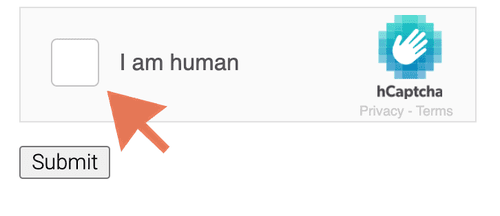

Tactic #3: CAPTCHA Bypassing Is Better Than CAPTCHA Solving

Modern websites also use CAPTCHAs to regulate bot traffic and mitigate automated requests. When a page requires CAPTCHA solving to access its content, traditional scrapers will inevitably fail because they cannot automatically bypass the CAPTCHA.

However, CAPTCHAs aren't always placed on a page deliberately; they can also be prompted by a web application firewall (WAF). When a protected website detects automated requests or suspicious activity, it can redirect you to a CAPTCHA page, and services like Cloudflare do that by default.

Understanding this difference is critical because it affects the scraping process, including its costs and the advanced Python web scraping tactics you need.
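In practice, it helps to detect when a response is a WAF challenge rather than the content you asked for, so your scraper can slow down or change strategy instead of parsing a CAPTCHA page as data. The sketch below is a rough heuristic: the status codes and marker strings are assumptions based on typical block pages, so adjust them after inspecting what your target actually returns.

```python
# Assumed markers and status codes; inspect your target's challenge page and adjust.
CHALLENGE_MARKERS = ("captcha", "just a moment", "verify you are human")
BLOCKING_STATUS_CODES = {403, 429, 503}


def looks_like_challenge(status_code: int, body: str) -> bool:
    """Guess whether a response is a WAF block or CAPTCHA page rather than real content."""
    text = body.lower()
    return status_code in BLOCKING_STATUS_CODES or any(
        marker in text for marker in CHALLENGE_MARKERS
    )
```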

How to Solve CAPTCHAs?

While there are different techniques for solving CAPTCHAs, the most common process typically involves sending the CAPTCHA image to a third-party service like 2Captcha, which deciphers and returns the solution.
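Most solving services follow a submit-then-poll pattern: you upload the CAPTCHA, receive a task ID, and poll until the answer is ready. The sketch below captures only that loop; `submit` and `check` are hypothetical callables you'd implement with your provider's documented client or HTTP API, which isn't reproduced here.

```python
import time
from typing import Callable, Optional


def solve_with_polling(
    submit: Callable[[bytes], str],
    check: Callable[[str], Optional[str]],
    image: bytes,
    poll_interval: float = 5.0,
    timeout: float = 120.0,
) -> str:
    """Send a CAPTCHA image to a solving service, then poll until it is solved.

    `submit` uploads the image and returns a task ID; `check` returns the
    answer, or None while the service is still working. Both are placeholders
    for your provider's real client calls.
    """
    task_id = submit(image)
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        answer = check(task_id)
        if answer is not None:
            return answer
        time.sleep(poll_interval)
    raise TimeoutError(f"CAPTCHA task {task_id} was not solved within {timeout} seconds")
```

Each poll and each solved CAPTCHA costs time and money, which is why this approach gets expensive at scale.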

Solving CAPTCHAs can be effective for small-scale projects. But it introduces additional costs and can get expensive to scale. Also, it may not work on WAF-prompted challenges, so you may be better off avoiding CAPTCHAs altogether. What do we mean by that?

Why Is Bypassing CAPTCHAs Better than Solving Them?

Bypassing CAPTCHAs instead of solving them is a more efficient and scalable strategy because it's cheaper and has a higher success rate. As mentioned earlier, CAPTCHAs are often prompted when a WAF is triggered, so you can avoid them with techniques that let you fly under the radar.

Here are 7 proven techniques to avoid CAPTCHAs.

Better still, web scraping APIs like ZenRows implement all those techniques and more under the hood in a single API call.

Tactic #4: Hidden Trap Avoidance

Websites also strategically set hidden traps to track and identify automated requests. These traps pose unique web scraping challenges as careful navigation is required to extract data successfully without triggering any alarms.

What Kind of Hidden Traps Are Out There?

Hidden traps come in various forms, each designed to expose and impede bots. The most common are honeypots: seemingly innocuous elements designed to trick bots into revealing themselves.

Hidden inputs, which typical users can't see, are another form of hidden trap. Websites can include hidden elements, such as form fields, that only bots interact with; that interaction reveals the client as automated, and the site blocks it accordingly. Websites also flag missing or mismatched header strings as non-human requests. Recognizing these traps is critical to avoiding them.

How to Avoid Hidden Traps?

While hidden traps keep malicious bots out, they also affect harmless web scrapers. If you fall into a hidden trap, you can get banned or end up with the wrong data.

Avoiding hidden traps involves implementing techniques to identify and bypass honeypots, intelligently interact with hidden inputs, and ensure header strings align with the target website's expectations. Luckily, we've covered the most common web scraping headers in a previous article.
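As an example of honeypot avoidance, a crawler can skip links that a human couldn't see. This standard-library sketch only inspects inline markup (the `hidden` attribute and inline `display:none` or `visibility:hidden` styles), so it's a partial check: traps hidden through external CSS need a rendered browser, where Selenium's `element.is_displayed()` is a more reliable test. Likewise, don't fill in hidden form fields yourself; submit them with their original values, as a browser would.

```python
from html.parser import HTMLParser

HIDDEN_STYLE_HINTS = ("display:none", "visibility:hidden")


def is_hidden(attrs: dict) -> bool:
    """Inline-only check: a `hidden` attribute or a hiding inline style."""
    style = (attrs.get("style") or "").replace(" ", "").lower()
    return "hidden" in attrs or any(hint in style for hint in HIDDEN_STYLE_HINTS)


class SafeLinkParser(HTMLParser):
    """Collect hrefs a human could see; set aside links that look like honeypots."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[str] = []
        self.skipped: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if not href:
            return
        (self.skipped if is_hidden(attributes) else self.links).append(href)
```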

Tactic #5: The Need for Human-like Behavior

Blending in with human-like behavior is a crucial tactic for evading detection. Although headless browsers let you simulate user behavior, anti-bot systems can still recognize automated patterns in mouse movements, clicks, scrolling, and more. So you need an advanced Python web scraping tactic that truly emulates human behavior.

How to Browse Like a Human?

Browsing like a human involves replicating the nuanced patterns of user interactions on a website. This can include mimicking mouse movements, emulating scrolling behavior, and introducing delays between requests to simulate the irregular pace of human browsing.

However, achieving this level of emulation usually requires custom scripts or advanced scraping libraries that support human-like behavior. For instance, browser automation tools such as Selenium (or Puppeteer in Node.js) let you execute custom scripts that closely mirror real user interactions.
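Here's a minimal Selenium-oriented sketch of randomized pauses, uneven scrolling, and a mouse move before clicking. The step sizes and delay ranges are arbitrary assumptions to tune for your target, and `driver` is expected to be a Selenium WebDriver (anything with an `execute_script` method works for the scrolling helper).

```python
import random
import time


def human_pause(min_s: float = 0.8, max_s: float = 2.5) -> float:
    """Sleep for a random, human-looking interval and return its length."""
    delay = random.uniform(min_s, max_s)
    time.sleep(delay)
    return delay


def scroll_steps(total_px: int, min_step: int = 200, max_step: int = 600) -> list[int]:
    """Split a long scroll into uneven chunks, like a person using a mouse wheel."""
    steps = []
    remaining = total_px
    while remaining > 0:
        step = min(random.randint(min_step, max_step), remaining)
        steps.append(step)
        remaining -= step
    return steps


def scroll_like_human(driver, total_px: int) -> None:
    """Scroll the page in irregular steps with short random pauses in between."""
    for step in scroll_steps(total_px):
        driver.execute_script("window.scrollBy(0, arguments[0]);", step)
        human_pause(0.3, 1.2)


def click_like_human(driver, element) -> None:
    """Move the mouse onto the element, hesitate briefly, then click."""
    from selenium.webdriver.common.action_chains import ActionChains

    ActionChains(driver).move_to_element(element).pause(random.uniform(0.2, 0.8)).click().perform()
```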

Check out our tutorial on web scraping with Selenium to learn more.

Tactic #6: Automated Indicator Flagging

Websites use various indicators to identify automated bots, and tools like ChromeDriver, commonly used with Selenium, may inadvertently reveal signs of automation, such as the navigator.webdriver browser property being set to true. This makes it easy for websites to identify and flag your web scraper.

Understanding these indicators or inherent properties and implementing strategies to mask them is crucial to avoid getting flagged.

How to Fix Automated Properties?

Most browser automation tools have companion libraries that hide these automated properties, making your scraper harder to detect. For example, Selenium users can turn to Selenium Stealth and Undetected ChromeDriver, while Puppeteer has Puppeteer Extra Plugin Stealth, which also works with Playwright through playwright-extra.
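For example, undetected-chromedriver (installed with `pip install undetected-chromedriver`) is used as a drop-in replacement for Selenium's Chrome driver. The sketch below starts a browser with it and adds a quick check of the `navigator.webdriver` flag, one of the most common automation giveaways; the helper names and window size are illustrative choices.

```python
def make_stealth_driver():
    """Start Chrome through undetected-chromedriver (third-party, imported lazily)."""
    import undetected_chromedriver as uc

    options = uc.ChromeOptions()
    options.add_argument("--window-size=1366,768")
    return uc.Chrome(options=options)


def webdriver_flag_hidden(driver) -> bool:
    """True if the page can't see the navigator.webdriver automation flag."""
    return not driver.execute_script("return navigator.webdriver")
```

Running `webdriver_flag_hidden(driver)` on a plain Selenium driver and on one from `make_stealth_driver()` is a quick way to confirm the patch took effect before scraping.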

You can also mask automated properties by introducing variability and randomness into your scraping activities to mimic natural browsing behavior. This can include randomizing user agents, using premium residential proxies, varying mouse movements, disabling WebDriver flags, removing JavaScript automation flags, using cookies, and rotating HTTP headers.
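A minimal sketch of two of these measures: rotating the User-Agent while keeping the accompanying headers browser-like, and switching off Chrome's most visible automation switches in Selenium. The User-Agent strings are illustrative examples that will age, so replace them with current ones; proxies, cookies, and mouse movement are out of scope here.

```python
import random

# Illustrative desktop Chrome User-Agents; swap in up-to-date strings.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
]


def random_headers() -> dict:
    """Pick a User-Agent per request and pair it with headers a browser also sends."""
    return {
        "User-Agent": random.choice(USER_AGENTS),
        "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
        "Accept-Language": "en-US,en;q=0.9",
    }


def apply_stealth_options(options) -> None:
    """Hide common automation switches on a Selenium ChromeOptions instance."""
    options.add_argument("--disable-blink-features=AutomationControlled")
    options.add_experimental_option("excludeSwitches", ["enable-automation"])
    options.add_experimental_option("useAutomationExtension", False)
```

With Selenium, call `apply_stealth_options(options)` on a `webdriver.ChromeOptions()` instance before passing it to `webdriver.Chrome(options=options)`.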

We've covered all the techniques above in detail in our guide on how to avoid bot detection.

However, implementing all these configurations or any combination can take time and effort to scale. But with web scraping APIs like ZenRows, which offers everything you need to avoid detection, you can focus on extracting data and worry less about technicalities.

Tactic #7: Resource Blocking for Saving Money When Crawling

Optimizing resource usage is not only about efficiency but can also be a strategy to save costs, especially when dealing with large-scale projects. This typically involves selectively preventing the loading of unnecessary resources during the scraping process.

Doing so conserves bandwidth, reduces processing time, and saves money, especially when resource-intensive elements aren't needed for the data you want. For example, blocking resources like images and scripts when using Selenium can reduce server and infrastructure usage and, ultimately, the cost of running Selenium.
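In Selenium with Chrome, one widely used way to skip images is a content-settings preference in which the value 2 means "block". A minimal sketch, assuming Selenium 4 and Chrome; the helper name is hypothetical, and blocking scripts this way is deliberately left out because it would break JavaScript-rendered pages.

```python
IMAGE_BLOCKING_PREFS = {"profile.managed_default_content_settings.images": 2}


def configure_resource_saving(options) -> None:
    """Run headless and tell Chrome not to load images (2 = block)."""
    options.add_argument("--headless=new")
    options.add_experimental_option("prefs", IMAGE_BLOCKING_PREFS)
```

Usage: `options = webdriver.ChromeOptions()`, then `configure_resource_saving(options)`, then `driver = webdriver.Chrome(options=options)`.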

How to Save Resources with a Headless Browser?

Saving resources with a headless browser involves blocking certain types of content by configuring the browser to skip loading non-essential resources such as images, videos, or external scripts.

This approach enhances scraping speed and provides a more cost-effective and resource-efficient operation. Check out our step-by-step tutorial on blocking resources in Playwright. Although this tutorial is specific to Playwright, the principles are similar for other headless browsers.
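In Playwright for Python, request interception via `page.route()` is the usual mechanism. The sketch below aborts requests for images, media, fonts, and stylesheets and lets everything else through; the blocked set is an assumption to adjust per site, and scripts are kept because dynamic pages need them to render.

```python
BLOCKED_RESOURCE_TYPES = {"image", "media", "font", "stylesheet"}


def block_heavy_resources(route) -> None:
    """Route handler: abort non-essential requests, continue the rest."""
    if route.request.resource_type in BLOCKED_RESOURCE_TYPES:
        route.abort()
    else:
        route.continue_()


def scrape_without_heavy_resources(url: str) -> str:
    """Load a page in headless Chromium with heavy resources blocked."""
    from playwright.sync_api import sync_playwright  # third-party, imported lazily

    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True)
        page = browser.new_page()
        page.route("**/*", block_heavy_resources)
        page.goto(url)
        html = page.content()
        browser.close()
        return html
```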

Conclusion

Mastering the art of advanced web scraping in Python is critical for navigating the numerous challenges presented by modern websites.

However, despite the many techniques discussed above, getting blocked remains a common risk, as websites and anti-bot systems continuously evolve and become better at detecting bot-like activity. For additional tips on avoiding detection, refer to our guide on web scraping without getting blocked.
