If you're a web scraping developer, you know the frustration of running into CAPTCHAs. With a failure rate below 10% and yearly updates, they're among the most reliable anti-bot measures.

In this article, you'll learn seven proven methods to avoid CAPTCHA and reCAPTCHA while web scraping:

- Skip hidden traps.

- Use real HTTP headers.

- Rotate your headers.

- Use rotating proxies to change IP addresses.

- Implement headless browsers.

- Disable automation indicators.

- Make your scraper look like a real user.

This guide also covers the basics, such as CAPTCHA types and how they work, so that you have the technical understanding you need to get around them.

What Is a CAPTCHA?

A CAPTCHA (Completely Automated Public Turing test to tell Computers and Humans Apart) is a challenge you must solve before the requested page loads, and it comes in several forms. Websites use CAPTCHAs to determine whether you're an actual user or a bot by checking how accurately you respond, and the challenges are often time-sensitive: for example, they expire if not solved in time.

An important consideration is that the tests shouldn't compromise user experience. That's why they don't use complex biometrics and facial recognition for verifications.

Types of CAPTCHAs

The types of roadblocks you'll run into are the ones mentioned below.

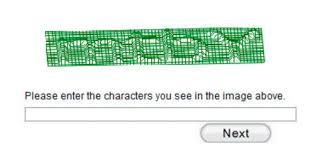

Text CAPTCHAs

These challenges use text characters to prompt users to type strings presented in an image.

3D CAPTCHAs

This new technology is an evolution of the text challenge and uses 3D characters, which are more difficult for computers to recognize.

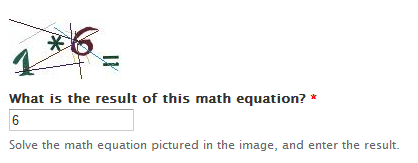

Math Challenges

This method presents a math problem for the user to solve.

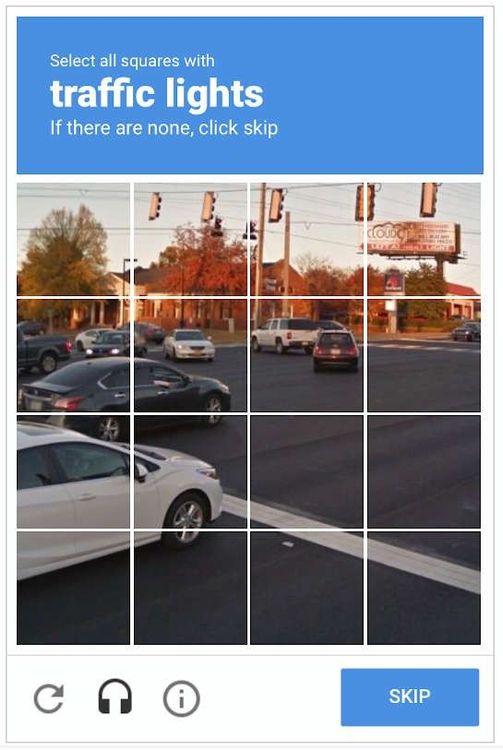

Image CAPTCHAs

In this case, the user has to identify particular objects in a grid image.

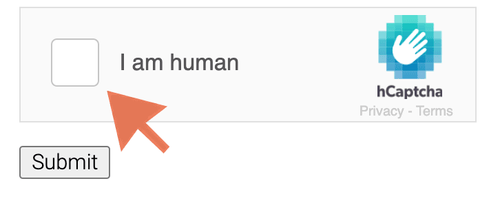

Invisible and Passive CAPTCHAs

These are more difficult to recognize since they're buried within the code.

In the case of invisible tests, imagine a form in which clicking the submit button runs a JavaScript challenge to verify that your browser behaves like a regular one used by a human.

Passive CAPTCHAs are time-based checks. For example, if a human typically needs over two seconds to type an input and you did it in 0.1 seconds, that's suspicious.

A combination of the two methods is possible and often used.

How Does CAPTCHA Work?

To avoid CAPTCHA and reCAPTCHA, you need to understand when the challenge might be prompted. There are three cases:

- Unusual spikes in traffic from the same user in a short time span.

- Suspicious interactions, such as visiting many pages without scrolling.

- Random checks, since some firewalls with strict security settings challenge visitors just in case.

Check out our guides on web scraping best practices and scraping without getting blocked.

Is There a Way to Avoid CAPTCHA?

Developers can prevent CAPTCHAs from appearing or solve them if they show up.

The first path is better because it's cheaper and more reliable (e.g., you can't use a solver for all CAPTCHA types). That is the approach used by ZenRows, a web scraping library that comes with an anti-CAPTCHA bypass tool.

How to Avoid CAPTCHA and reCAPTCHA When Scraping?

Web scrapers use various methods to avoid CAPTCHA, and these are seven of the best-proven ones:

1. Avoid Hidden Traps

Honeypot traps are links that are hidden from real users but visible to bots. A human never sees them, so if your scraper follows one, it'll be flagged as a bot.

They usually have CSS properties like display: none or visibility: hidden, and the same trick can be applied to form fields. To stay out of trouble, have your scraper skip hidden elements.
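As a minimal sketch of that idea, the snippet below collects only the links that aren't hidden through an inline style or the HTML hidden attribute. It uses Python's standard html.parser module. The names VisibleLinkParser and visible_links and the sample markup are made up for illustration. It also assumes the hiding is done inline on the link itself; links hidden through CSS classes, external stylesheets, or a hidden parent element would need a rendered page to detect.

```python
from html.parser import HTMLParser

# Inline-style fragments that hide an element (assumed markers; real sites
# may also hide elements through CSS classes or external stylesheets).
HIDDEN_MARKERS = ("display:none", "visibility:hidden")


class VisibleLinkParser(HTMLParser):
    """Collects href values from <a> tags that are not hidden inline."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr_map = dict(attrs)
        # Normalize the style so "display: none" and "display:none" both match.
        style = (attr_map.get("style") or "").replace(" ", "").lower()
        is_hidden = "hidden" in attr_map or any(m in style for m in HIDDEN_MARKERS)
        href = attr_map.get("href")
        if href and not is_hidden:
            self.links.append(href)


def visible_links(html):
    """Return the hrefs of links a human visitor could plausibly see."""
    parser = VisibleLinkParser()
    parser.feed(html)
    return parser.links


sample = """
<a href="/products">Products</a>
<a href="/trap" style="display: none">Secret</a>
<a href="/trap2" style="visibility: hidden">Secret 2</a>
<a href="/about">About</a>
"""
print(visible_links(sample))  # ['/products', '/about']
```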

2. Use Real Headers

Your request headers include characteristic information about your client, so they can be used as an indicator of a web scraper.

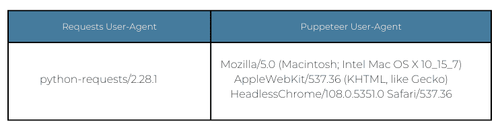

Popular browser automation tools, such as Selenium and Puppeteer, send telltale User-Agent headers when they run a browser in headless mode, so you should replace them with real ones used by humans.
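For plain HTTP clients, the same principle applies. Here's a small sketch using Python's standard urllib.request that attaches browser-like headers to a request instead of the library defaults. The header values are illustrative assumptions (copy current ones from your own browser's developer tools), and build_request is a hypothetical helper name.

```python
import urllib.request

# Example header set modeled on a desktop Chrome browser. The exact values
# are illustrative assumptions; copy current ones from your own browser.
BROWSER_HEADERS = {
    "User-Agent": (
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
        "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
    ),
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
    "Accept-Language": "en-US,en;q=0.9",
}


def build_request(url):
    """Return a Request that carries browser-like headers instead of defaults."""
    return urllib.request.Request(url, headers=BROWSER_HEADERS)


request = build_request("https://example.com/")
# urllib stores header names capitalized as "User-agent".
print(request.get_header("User-agent"))
```

Passing the request to urllib.request.urlopen would send it with these headers. The sketch stops before that step so it runs without network access.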

3. Rotate Headers

Too many requests with the same HTTP headers are suspicious, aren't they? A real user wouldn't visit 1,000 pages in five minutes.

For this reason, you should rotate your headers to avoid attracting attention. To the website, it'll look as if the requests come from different users.
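One simple way to rotate headers is to keep a pool of complete header profiles and pick one per request. The sketch below assumes a tiny hand-written pool. The values and the pick_headers helper are illustrative, and a real pool would be larger and kept up to date.

```python
import random

# Each profile is a complete, internally consistent header set
# (illustrative values; refresh them from real browsers).
HEADER_PROFILES = [
    {
        "User-Agent": (
            "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
            "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
        ),
        "Accept-Language": "en-US,en;q=0.9",
    },
    {
        "User-Agent": (
            "Mozilla/5.0 (X11; Linux x86_64; rv:121.0) "
            "Gecko/20100101 Firefox/121.0"
        ),
        "Accept-Language": "en-GB,en;q=0.8",
    },
    {
        "User-Agent": (
            "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) "
            "AppleWebKit/605.1.15 (KHTML, like Gecko) "
            "Version/17.1 Safari/605.1.15"
        ),
        "Accept-Language": "en-US,en;q=0.9",
    },
]


def pick_headers(rng=random):
    """Return a copy of one whole profile so its headers stay consistent."""
    return dict(rng.choice(HEADER_PROFILES))


for _ in range(3):
    print(pick_headers()["User-Agent"])
```

Rotating whole profiles rather than individual headers avoids implausible combinations, such as a Firefox User-Agent paired with Chrome-only headers.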

4. Use Rotating Proxies

Using real headers and rotating them isn't enough because websites can also detect web scrapers by analyzing the source IP address. If you rotate your set of headers without changing your IP address, it'll still look suspicious.

What you have to do is rotate both your headers and your IP address at the same time, ideally using residential IP addresses. You can check out our step-by-step guide on how to rotate proxies in Python.
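As a rough sketch of rotating the IP address together with the headers, the snippet below cycles through a proxy list and builds a urllib opener for each request. The proxy addresses are placeholders from a documentation-only IP range, not working proxies, and next_opener is a hypothetical helper. In practice, you'd plug in the endpoints supplied by your proxy provider.

```python
import itertools
import urllib.request

# Placeholder proxies (203.0.113.0/24 is reserved for documentation);
# replace them with real endpoints from your provider.
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


def next_opener(proxy_pool, user_agent):
    """Build an opener that uses the next proxy and the given User-Agent."""
    proxy = next(proxy_pool)
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    opener = urllib.request.build_opener(handler)
    opener.addheaders = [("User-Agent", user_agent)]
    return proxy, opener


proxy_pool = itertools.cycle(PROXIES)
for _ in range(4):
    proxy, opener = next_opener(proxy_pool, "Mozilla/5.0 (example)")
    print(proxy)  # the fourth iteration wraps around to the first proxy
```

Calling opener.open(url) would then send the request through the selected proxy. The loop only prints the chosen proxy so the sketch runs offline.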

5. Implement Headless Browsers

Browser automation tools, such as Selenium and Puppeteer, are helpful in avoiding CAPTCHAs because they simulate human-like interactions with a website. In web scraping, they're used in headless mode, which removes the graphical interface and saves resources.

6. Disable Automation Indicators

Most browser-based tools have specific indicators and WebDriver flags that disclose you're a bot. For example, Selenium and Puppeteer have the navigator.webdriver flag set to true by default.

Plugins such as Puppeteer Stealth (the puppeteer-extra-plugin-stealth package) implement many techniques to erase these traces. Take a look at our tutorial on avoiding detection with Puppeteer to learn how they're implemented.
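With Selenium in Python, a common starting point looks like the sketch below. It assumes the selenium package and a local Chrome installation are available. The helper names are hypothetical, and the flags shown are widely used ones rather than a complete or guaranteed stealth setup, since their effect can vary between Chrome versions.

```python
def stealth_chrome_arguments(headless=True):
    """Chrome command-line flags commonly used to reduce automation traces."""
    # Asks Chrome not to expose the "controlled by automation" Blink feature,
    # which is what normally makes navigator.webdriver report true.
    args = ["--disable-blink-features=AutomationControlled"]
    if headless:
        # Headless mode flag available in recent Chrome versions.
        args.append("--headless=new")
    return args


def build_driver(headless=True):
    """Create a Chrome driver (assumes selenium and Chrome are installed)."""
    # Imported lazily so the helper above can be used without Selenium.
    from selenium import webdriver

    options = webdriver.ChromeOptions()
    for arg in stealth_chrome_arguments(headless):
        options.add_argument(arg)
    # Drop the "enable-automation" switch that Selenium adds by default.
    options.add_experimental_option("excludeSwitches", ["enable-automation"])
    return webdriver.Chrome(options=options)


print(stealth_chrome_arguments())
```

After calling build_driver() and loading a page, running driver.execute_script("return navigator.webdriver") is a quick way to check whether the flag is still exposed in your setup.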

7. Make Your Scraper Look Like a Real User

Mimicking human behavior and avoiding predictable patterns are important to avoid detection. Websites track user navigation, hovered elements, and even click coordinates to analyze user behavior.

Actions you can implement include:

- Randomizing actions such as scrolling, clicking, and typing.

- Using random time intervals between actions.

By following these behavioral patterns, web scrapers can avoid CAPTCHAs and other forms of website protection.
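The randomization itself can be sketched in a few lines of Python. The helpers below (human_delay, scroll_plan, and browse) are hypothetical names, and the timing and step ranges are arbitrary assumptions, not measured human values. In a real scraper, the actions would be calls into your browser automation tool.

```python
import random
import time


def human_delay(min_seconds=1.0, max_seconds=4.0, rng=random):
    """Pick a random pause length instead of a fixed interval."""
    return rng.uniform(min_seconds, max_seconds)


def scroll_plan(page_height, rng=random):
    """Split a full-page scroll into uneven steps, ending at the page bottom."""
    positions = []
    position = 0
    while position < page_height:
        # Step sizes in pixels are an arbitrary assumption.
        position = min(position + rng.randint(200, 600), page_height)
        positions.append(position)
    return positions


def browse(actions, sleep=time.sleep, rng=random):
    """Run each action, pausing for a random interval after it."""
    for action in actions:
        action()
        sleep(human_delay(rng=rng))


# Demo: print a scroll plan for a 2,000-pixel page.
print(scroll_plan(2000))
```

Each value returned by scroll_plan could be passed to the browser as a scroll target, with browse handling the pauses between steps.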

To find out more, take a look at our guide on anti-scraping techniques.

Conclusion

We've learned how to avoid CAPTCHA and reCAPTCHA when web scraping. For example, you should avoid honeypot traps by skipping hidden links, rotate real HTTP headers together with your IP address, and use headless browsers to mimic human behavior with randomized actions.

To make scraping easier, ZenRows will implement those and many other techniques for you in a single API call. Sign up now and try it for free.

Frequent Questions

How Do You Get Rid of a CAPTCHA?

CAPTCHAs are designed to stop bots from accessing websites. However, if you have legitimate reasons for scraping a website, there are some ways to bypass such challenges, including:

- Use a solving service that can automatically solve CAPTCHAs for you.

- Use a headless browser to simulate human behavior.

- Apply human behavioral patterns to your scraper.

- Use rotating proxies and real headers.
